Open-Source Internship opportunity by OpenGenus for programmers. Apply now.

Reading time: 40 minutes

From the beginning of the Internet's conventional breakout, many search engine provider companies and e-commerce companies/ organizations struggled with regular growth in data day by day. Even some social networking sites like Facebook, Twitter, Instagram, etc. also undergo the same problem. Today, numerous associations understand that the information they gather is a profitable asset for understanding their customers, the impact of their activities in the market, their performance and the effectiveness of their infrastructure etc. So this is where Hadoop emerged as a preserver which provide us with an efficient way to handle huge datasets using HDFS(Hadoop Distributed File System) and imposes MapReduce for separating calculation errands into units that can be dispersed around a cluster of hardware (commodity hardware) providing scalability (horizontal).

Some big challenges need to be resolved like: How would someone move existing data structure to Hadoop when that framework depends on Relational database system and the Structured Query Language (SQL)? And what about data security, where both master database creators, and admins, and some regular users who use SQL to take information from their data warehouse?

This where the role of HIVE comes into the picture. Hive provides a SQL dialect known as Hive Query Language abbreviated as HQL to retrieve or modify the data. which is stored in the Hadoop. Apache Hive is an open-source data warehouse system built on top of Hadoop Cluster for querying and analyzing large datasets stored in the Hadoop distributed file system. HiveQL automatically converts SQL-like queries into MapReduce jobs.

History of Hive

The HIVE is developed by the Data Infrastructure team of Facebook. At Facebook, Hive's Hadoop cluster is capable to store more than 2 Petabytes of raw data, and daily it processes and loads around 15 Terabytes of data. Now it is being used by many companies also. Later, the Apache Foundation took over Hive and developed it further and made it an Open Source. It is also used and developed by other companies like Netflix, Financial Industry Regulatory Authority (FINRA), etc.

Versions

The very first version of Hive was v0.3 released in October 2010.

Then in January 2013: v0.10.0 was released.

In February 2015: v1.0.0 was released.

In February 2016: v2.0.0 was released.

In May 2018: v3.0.0 was released.

Now the latest version of Hive is v2.3.5 released in May 2019 which is the stable one. There are some stable versions released between 2.0 and 3.0, which are mostly preferred to use.

Features

Hive is a declarative SQL based language, mainly used for data analysis and creating reports. Hive operates on the server-side of a cluster. Hive provides schema flexibility and evolution along with data summarization, querying of data, and analysis in a much easier manner.

In Hive, we can make two types of tables:

- partitioned table

- bucketed table

which make it feasible to process data stored in HDFS and improves the performance as well.

Hive tables are defined directly in the Hadoop File System (HDFS). In Hive, we have JDBC/ODBC drivers. Hive is fast and scalable, and easy to learn. Hive has a rule-based optimizer for optimizing plans. Using Hive we can also execute Ad-hoc queries to analyze data.

Hive Architechture

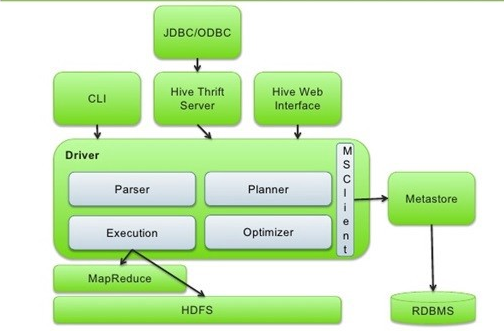

Following is the Hive architecture:

Basic components of Hive are:

- Metastore

- Driver

- Compiler

- Optimizer

- Executor

- CLI, UI, and Thrift Server

METASTORE

It is used to store metadata of tables schema, time of creation, location, etc. It also provides metadata partition to help the driver to keep the track of the progress of various datasets distributed over the cluster. The metadata helps the driver to keep track of the data and it is crucial. Hence, a backup server regularly replicates the data which can be retrieved in case of data loss.

DRIVER

It acts as a controller. The driver starts the execution of the statement by creating sessions and monitors the life cycle and progress of execution. It also stores metadata generated during the execution of an HQL query.

COMPILER

It is used to compile a Hive query, which converts the query to an execution plan. This plan contains the tasks and steps needed to be performed by the Hadoop MapReduce to get the output as translated by the query.

OPTIMIZER

It optimizes and performs transformations on an execution plan to get an optimized Directed Acyclic Graph abbreviated as DAG. Transformation such as converting a pipeline of joins to a single join, and splitting the tasks, such as applying a transformation on data before a reduce operation, to provide better performance and scalability.

EXECUTOR

It executes tasks after compilation and optimization have been done. It interacts with the job tracker of Hadoop to schedule tasks to be run. It takes care of pipelining the tasks by making sure that a task with dependency gets executed only if all other prerequisites are run.

CLI, UI, and Thrift Server -

It is used to provide a user interface to an external user to interact with Hive by writing queries, instructions and monitoring the process. Thrift server allows external clients to interact with Hive over a network, similar to the JDBC or ODBC protocol.

Working

- First of all, the user submits their query and CLI sends that query to the Driver.

- Then the driver takes the help of query compiler to check syntax.

- Then compiler request for Metadata by sending a metadata request to Metastore.

- In response to that request, metastore sends metadata to the compiler.

- Then compiler resends the plan to the driver after checking requirements.

- The Driver sends the plan to the execution engine.

- Execution engine sends the job to Job tracker and assigns the job to Task Tracker. Here, the query executes MapReduce job. And in meantime execution engine executes metadata operations with Metastore.

- Then the execution engine fetches the results from the Data Node and sends those results to the driver.

- At last, the driver sends the results to the hive interface.

Hive Metastore

Hive Metastore is the central repository for metadata. It stores metadata for Hive tables (like their schema and location) and partitions in a relational database. It provides client access to this information by using the metastore service API.

MODES-

-

Embedded: In Hive by default, metastore service and hive services run in the same JVM. In this mode, Data in the local file system are stored using the embedded derby database.

-

Local: Hive is a SQL based framework, that should have multiple sessions. In Local mode, multiple Hive sessions are allowed. This can be achieved by using any JDBC application like MySQL that runs in a separate JVM.

-

Remote: In this mode, metastore and hive services run in a separate JVM. Thrift network APIs are used by different processes to communicate among them.

Hive APIs

Hive APIs are exposed for the developers who are want to integrate their applications and framework with Hive ecosystem. Here are some of the APIs-

-

HCatalog CLI (Command Based) - It is a query-based API which means that it only permits execution and submission of HQL.

-

Metastore (JAVA) - It is a Thrift based API which is implemented by IMetaStoreClient interface using JAVA. This API decouples metastore storage layer from Hive Internals.

-

Streaming Data Ingest (JAVA) - It is used to write the continuous streaming data into transactional tables using ACID properties of Hive.

-

Streaming Mutation (JAVA) - It is used in transformational operations like Update, Insert, Delete to convert it into transactional tables as well using ACID property.

Limitations of Hive

Apache Hive has some limitations also:

- Read-only views are allowed but materialized views are not allowed.

- It does not support triggers.

- Apache Hive queries have very high latency.

- No difference between NULL and null values.

How Hive is different from RDBMS?

- RDBMS supports schema on Write whereas Hive provides schema on Read.

- In Hive, we can write once but in RDBMS we can write as many times as we want.

- Hive can handle big datasets whereas RDBMS can't handle beyond 10TB.

- Hive is highly scalable but scalability in RDBMS costs a lost.

- Hive has a feature of Bucketing which is not there in RDBMS.

Hive Query Language

Starting with basic commands ..

- Command to create a databse

create database < Database Name >;

- Listing the aviliable databases

show databases;

- Creating tables

create table XYZ( XYZ int, XYZ string, XYZ string) row format delimited fields terminated by ',';

- To enter the data using a csv file copy it in the warehouse directory of hive using

$ hdfs dfs -put record.csv /user/hive/warehouse/XYZ

And then type this in hive shell

LOAD DATA LOCAL INPATH '/home/cloudera/input.txt' INTO TABLE XYZ;

- Display table

select * from XYZ;

- Describe table

describe XYZ;

OR

describe extended XYZ;

- We can make various types of tables like

a. External - it helps in storing the data at an external location

b. Managed - it is managed inside the hive warehouse

c. Temporary - it is managed tempoararily for a session

External:

create external table XYZ_external(XYZ int, XYZ string, XYZ string) row format delimited fields terminated by ',' LOCATION '/hive_demo/';

Managed:

create table XYZ_Managed(XYZ int,XYZ string,XYZ string) partitioned by (branch string) clustered by (section) into 2 buckets row format delimited fields terminated by ',';

Temporary:

create temporary table XYZ(XYZ int,XYZ String);

For more better understanding of HiveQL visit this Github repository [LINK] (https://github.com/OpenGenus/hive_guide)