

In this article, we look at some basic ways to use OpenMP to parallelize C++ code. OpenMP is an API for shared-memory parallel programming that compilers such as GCC support; used correctly, it can significantly reduce program execution time and make better use of system resources. It is widely used in production software systems such as TensorFlow.

Before going into the details, we will go through some basic ideas.

Process and Thread?

A process is a program in execution. The operating system tracks each process through a process control block, which holds information about the process such as its priority, process ID, state, and CPU register contents.

A thread, in contrast, is a unit of execution within a process: a process can have multiple threads, and all of them are contained within that process. A thread has three basic states: running, ready, and blocked.

OpenMP (www.openmp.org) makes writing multi-threaded code in C/C++ much easier. OpenMP is cross-platform and can be seen as an extension to C/C++ and Fortran compilers: it specifies a set of compiler directives, library routines, and environment variables that we use to express shared-memory parallelism.

Example:

// HelloWorld.cpp

#include <stdio.h>

int main() {

#pragma omp parallel

{

printf("Hello\n");

}

return 0;

}

Now we need to compile this code. Type the following command in the terminal:

g++ -o hello_openmp HelloWorld.cpp -fopenmp

Run the generated executable hello_openmp:

$ ./hello_openmp

Hello

Hello

Hello

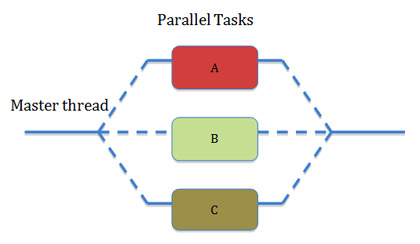

The OpenMP parallel construct basically says: "Hey, I want the following statement/block to be executed by multiple threads at the same time." Depending on the CPU (number of cores) and a few other things (such as current load and OpenMP settings), a team of threads is created to run the block in parallel. At the end of the block, all threads are joined.

Explicitly specify the number of threads

If we want to specify explicitly the number of threads that execute the parallel block, we can use the num_threads() clause, as shown below.

#include <stdio.h>

int main() {

#pragma omp parallel num_threads(3)

{

printf("Hello\n");

}

return 0;

}

This requests a team of three threads; the runtime may provide fewer if resources are limited, but never more. Please note that the argument doesn't have to be a constant: you can pass almost any integer expression, including variables. You can see the example below.

#include <stdio.h>

int main() {

int x = 1;

int y = x + 2;

#pragma omp parallel num_threads(y * 3)

{

printf("Hello\n");

}

return 0;

}

In this example, y * 3 = 9, so 9 threads are requested to run at the same time, and we should see 9 lines of output.

Getting number of threads and thread ID

OpenMP provides the omp_get_thread_num() function in the header file omp.h; it returns the ID of the calling thread within its team, starting from 0. To get the total number of threads running in the parallel block, we can use the function omp_get_num_threads().

Example:

#include <stdio.h>

#include <omp.h>

int main() {

#pragma omp parallel num_threads(3)

{

int id = omp_get_thread_num();

int data = id;

int total = omp_get_num_threads();

printf("Greetings from process %d out of %d with Data %d\n", id, total, data);

}

printf("parallel for ends.\n");

return 0;

}

Output:

Greetings from process 0 out of 3 with Data 0

Greetings from process 2 out of 3 with Data 2

Greetings from process 1 out of 3 with Data 1

parallel for ends.

Please note that variables defined inside the block are separate copies for each thread, so the data value each thread prints is guaranteed to match its own ID. If you move the declaration of data outside the parallel construct, it becomes shared among all threads, and their writes can interleave. See the example below:

#include <stdio.h>

#include <omp.h>

int main() {

int data;

#pragma omp parallel num_threads(3)

{

int id = omp_get_thread_num();

data = id; // data is shared: writes from different threads may interleave

int total = omp_get_num_threads();

printf("Greetings from process %d out of %d with Data %d\n", id, total, data);

}

printf("parallel for ends.\n");

return 0;

}

Output:

Greetings from process 2 out of 3 with Data 2

Greetings from process 0 out of 3 with Data 2

Greetings from process 1 out of 3 with Data 1

parallel for ends.

We can also tell the compiler which variables should be private, so that each thread has its own copy, by using the private clause.

Example:

#include <stdio.h>

#include <omp.h>

int main() {

int data, id, total;

// each thread has its own copy of data, id and total.

#pragma omp parallel private(data, id, total) num_threads(6)

{

id = omp_get_thread_num();

total = omp_get_num_threads();

data = id;

printf("Greetings from process %d out of %d with Data %d\n", id, total, data);

}

printf("parallel for ends.\n");

return 0;

}

private(data, id, total) asks OpenMP to create individual copies of these variables for each thread.

Output:

Greetings from process 5 out of 6 with Data 5

Greetings from process 4 out of 6 with Data 4

Greetings from process 2 out of 6 with Data 2

Greetings from process 3 out of 6 with Data 3

Greetings from process 1 out of 6 with Data 1

Greetings from process 0 out of 6 with Data 0

parallel for ends.

Default clause

There are two versions of the default clause. First, we focus on the default(shared) option, and then we consider the default(none) clause.

These two versions are the ones available to C/C++ programmers of OpenMP. There are some additional default possibilities for Fortran programmers.

Default (shared)

The default(shared) clause sets the data-sharing attributes of all variables in the construct to shared. In the following example

int a, b, c, n;

...

#pragma omp parallel for default(shared)

for (int i = 0; i < n; i++)

{

// using a, b, c

}

a, b, c and n are shared variables.

Another usage of default(shared) clause is to specify the data-sharing attributes of the majority of the variables and then additionally define the private variables. Such usage is presented below:

int a, b, c, n;

#pragma omp parallel for default(shared) private(a, b)

for (int i = 0; i < n; i++)

{

// a and b are private variables

// c and n are shared variables

}

Default (none)

The default(none) clause forces a programmer to explicitly specify the data-sharing attributes of all variables.

A distracted programmer might write the following piece of code

int n = 10;

std::vector<int> vector(n);

int a = 10;

#pragma omp parallel for default(none) shared(n, vector)

for (int i = 0; i < n; i++)

{

vector[i] = i * a;

}

But then the compiler would complain

error: ‘a’ not specified in enclosing parallel

vector[i] = i * a;

^

error: enclosing parallel

#pragma omp parallel for default(none) shared(n, vector)

^

The compiler complains because the programmer used the default(none) clause and then forgot to explicitly specify the data-sharing attribute of a. The correct version of the program would be

int n = 10;

std::vector<int> vector(n);

int a = 10;

#pragma omp parallel for default(none) shared(n, vector, a)

for (int i = 0; i < n; i++)

{

vector[i] = i * a;

}

Some Guidelines

- The first guideline is to always write parallel regions with the default(none) clause. This forces the programmer to explicitly think about the data-sharing attributes of all variables.

- The second guideline is to declare private variables inside parallel regions whenever possible. This guideline improves the readability of the code and makes it clearer.

Critical Sections

You can also fix the earlier example with the shared data variable by using locks or mutexes. But the easiest method is to use the omp critical directive provided by OpenMP.

#include <stdio.h>

#include <omp.h>

int main() {

int data;

#pragma omp parallel num_threads(3)

{

int id = omp_get_thread_num();

int total = omp_get_num_threads();

#pragma omp critical

{ // make sure only 1 thread executes the critical section at a time.

data = id; // safe: no other thread can modify data until this block ends

printf("Greetings from process %d out of %d with Data %d\n", id, total, data);

}

}

printf("parallel for ends.\n");

return 0;

}

The omp critical directive ensures that only one thread enters the block at a time.