
Reading time: 25 minutes

Machine Learning has developed several efficient techniques for a wide range of problems. A single model such as GoogLeNet can be used across various problems, including image classification and object detection. Some models, such as MobileNet, are designed to run efficiently on devices with limited computational resources, like mobile phones. Figuring out which technique or model best fits your problem is a challenge. To address this, we will show how to evaluate models to determine whether their performance meets your requirements.

There are 3 major steps in solving a machine learning problem:

- Define your problem

- Prepare your dataset

- Apply machine learning algorithms to solve the problem

When you reach step 3, a major issue arises: Which algorithm is best suited to solve our problem?

There are a large number of machine learning algorithms out there, but not all of them apply to a given problem. We need to choose the one that best suits our problem and gives us the desired results. This is where Model Evaluation comes in: it defines metrics for evaluating our models, and based on that evaluation we choose one or more models to use. Let's see how we do it.

We evaluate a model based on:

- Test Harness

- Performance Measure

- Cross-validation

- Testing Algorithms

Test Harness

The test harness is the data on which you will train and test your model against a performance measure. It is crucial to define which part of the data will be used for training the model and which part for testing it. This may be as simple as selecting a random split of data (66% for training, 34% for testing) or may involve more complicated sampling methods.

The model is not exposed to the test dataset during training, so its predictions on the test dataset indicate how well it performs on data it has not seen before.
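A random split like the 66%/34% one described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a library API: the helper name `split_train_test`, the default `train_fraction=0.66`, and the fixed `seed` are assumptions chosen to mirror the example. Libraries such as scikit-learn provide a ready-made `train_test_split` for the same purpose.

```python
import random


def split_train_test(data, train_fraction=0.66, seed=42):
    """Randomly split `data` into a training list and a test list.

    Hypothetical helper: train_fraction=0.66 mirrors the 66%/34% example.
    """
    shuffled = list(data)                  # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)  # seeded RNG makes the split reproducible
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


samples = list(range(100))                 # 100 toy data points
train, test = split_train_test(samples)
print(len(train), len(test))               # 66 34
```

The model would be fit only on `train`, and `test` would be kept aside until evaluation.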

Performance Measure

The performance measure is the way you want to evaluate a solution to the problem. It is the measurement you will make of the predictions made by a trained model on the test dataset.

There are many metrics for measuring performance, and the right ones depend on the type of problem we're solving. Let's see some of them:

- Classification Accuracy: It is the ratio of the number of correct predictions to the total number of input samples.

$$ Accuracy = \frac{Number\ of\ correct\ predictions}{Total\ number\ of\ predictions\ made} $$

This metric is only reliable when each class has an equal or nearly equal number of data points.

For example, suppose 98 percent of a dataset belongs to class A and 2 percent to class B. A model that simply predicts class A for every data point will have 98 percent accuracy, yet in reality it performs very poorly, because it never identifies a single class B sample.
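To make the imbalance problem concrete, here is a minimal sketch that computes classification accuracy for a trivial model that always predicts class A. The `accuracy` helper and the 98/2 toy labels are illustrative assumptions based on the example above.

```python
def accuracy(y_true, y_pred):
    """Ratio of correct predictions to the total number of predictions."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Imbalanced toy dataset: 98 samples of class A and 2 samples of class B.
y_true = ["A"] * 98 + ["B"] * 2
# A trivial "model" that ignores its input and always predicts class A.
y_pred = ["A"] * len(y_true)

print(accuracy(y_true, y_pred))  # 0.98

# Yet it never identifies a single class B sample.
found_b = sum(1 for t, p in zip(y_true, y_pred) if t == p == "B")
print(found_b)  # 0
```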

- Logarithmic Loss: Suppose there are N samples belonging to M classes. Then the log loss is calculated as follows:

$$ Logarithmic\ Loss = -\frac{1}{N}\sum_{i=1}^{N}\sum_{j=1}^{M} y_{ij} \log(p_{ij}) $$

where

$ y_{ij} $ is 1 if sample i belongs to class j and 0 otherwise

$ p_{ij} $ is the predicted probability of sample i belonging to class j

Log loss takes values in [0, $ \infty $). A log loss closer to zero means the model assigns high probability to the correct classes, which indicates better predictions; a larger log loss indicates worse predictions. It is commonly used for multiclass classification, particularly when the model outputs class probabilities rather than just labels.
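The log loss formula can be computed directly from its definition. In this sketch, `log_loss` is a hypothetical helper that takes one-hot labels `y_true` (whether sample i belongs to class j) and predicted probabilities `y_prob` (the probability of sample i belonging to class j), and uses the natural logarithm. The clipping constant `eps` is an assumption added so that a predicted probability of exactly 0 never produces log(0). The toy predictions are made up to contrast a confident, correct model with a wrong one.

```python
import math


def log_loss(y_true, y_prob, eps=1e-15):
    """Multiclass log loss: -(1/N) * sum_i sum_j y_ij * log(p_ij).

    y_true: one-hot rows; y_true[i][j] is 1 if sample i belongs to class j, else 0.
    y_prob: rows of predicted probabilities; y_prob[i][j] is p_ij.
    eps: assumed clipping constant so that log(0) is never evaluated.
    """
    total = 0.0
    for y_row, p_row in zip(y_true, y_prob):
        for y_ij, p_ij in zip(y_row, p_row):
            p_ij = min(max(p_ij, eps), 1 - eps)  # keep p_ij inside (0, 1)
            total += y_ij * math.log(p_ij)       # natural logarithm
    return -total / len(y_true)


# Two samples, three classes: sample 0 is class 0, sample 1 is class 1.
y_true = [[1, 0, 0],
          [0, 1, 0]]
confident = [[0.90, 0.05, 0.05],   # high probability on the correct class
             [0.10, 0.80, 0.10]]
wrong = [[0.10, 0.45, 0.45],       # low probability on the correct class
         [0.60, 0.20, 0.20]]

print(round(log_loss(y_true, confident), 4))  # 0.1643
print(round(log_loss(y_true, wrong), 4))      # 1.956
```

Only the term for each sample's true class contributes, so the loss depends on how much probability the model puts on the correct answer.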

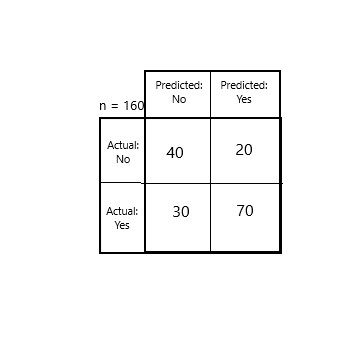

- Confusion Matrix: Let's assume we have a binary classification problem. We have some samples belonging to two classes: YES or NO. Also, we have our own classifier which predicts a class for a given input sample. On testing our model on 160 samples, we get the following result.

There are four important terms:

- True Positives: The cases in which we predicted YES and the actual output was also YES.

- True Negatives: The cases in which we predicted NO and the actual output was NO.

- False Positives: The cases in which we predicted YES and the actual output was NO.

- False Negatives: The cases in which we predicted NO and the actual output was YES.

Accuracy for the matrix can be calculated by adding the values on the “main diagonal” (the True Positives and True Negatives, i.e., the correct predictions) and dividing by the total number of samples:

$$ Accuracy = \frac{True\ Positives\ +\ True\ Negatives}{Total\ number\ of\ samples} $$

Using the values from the matrix:

$$ Accuracy = \frac{40+70}{165} $$
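The four counts can be tallied directly from true and predicted labels. The sketch below uses a hypothetical `confusion_counts` helper and a made-up six-sample example (not the data from the matrix above); accuracy is then the main diagonal (TP + TN) divided by the total, as in the formula.

```python
def confusion_counts(y_true, y_pred, positive="YES"):
    """Return (TP, TN, FP, FN) for a binary problem; `positive` marks the YES class."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            tp += 1  # predicted YES, actually YES
        elif predicted != positive and actual != positive:
            tn += 1  # predicted NO, actually NO
        elif predicted == positive:
            fp += 1  # predicted YES, actually NO
        else:
            fn += 1  # predicted NO, actually YES
    return tp, tn, fp, fn


y_true = ["YES", "YES", "NO", "NO", "YES", "NO"]
y_pred = ["YES", "NO", "NO", "YES", "YES", "NO"]

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
accuracy = (tp + tn) / (tp + tn + fp + fn)  # main diagonal / total samples
print(tp, tn, fp, fn)       # 2 2 1 1
print(round(accuracy, 3))   # 0.667
```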

- Mean Squared Error: For regression problems, a common metric is Mean Squared Error (MSE). It is closely related to Mean Absolute Error (MAE), which averages the absolute differences between the original values and the predicted values; the only difference is that MSE averages the squares of those differences. One advantage of MSE is that it is differentiable everywhere, so its gradient is simple to compute, whereas MAE is not differentiable where the error is exactly zero. Because the errors are squared, large errors are penalized much more heavily than small ones, so a model trained to minimize MSE focuses more on reducing the large errors.

$$ Mean\ Squared\ Error = \frac{1}{N} \sum_{j=1}^{N} (y_j - \hat{y}_j)^2 $$

where $ y_j $ is the original value and $ \hat{y}_j $ is the predicted value for sample j.
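The difference between MSE and MAE is easiest to see on a toy example. Both helpers below, `mae` and `mse`, are hypothetical names, and the two prediction lists are made up so that they have the same MAE but very different MSE, showing how squaring emphasizes a single large error.

```python
def mae(y_true, y_pred):
    """Mean Absolute Error: average of |y - y_hat|."""
    return sum(abs(y - y_hat) for y, y_hat in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean Squared Error: average of (y - y_hat)^2."""
    return sum((y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred)) / len(y_true)


y_true = [3.0, 5.0, 2.0, 7.0]
small_errors = [4.0, 4.0, 3.0, 6.0]    # every prediction is off by 1
one_big_error = [3.0, 5.0, 2.0, 11.0]  # three exact, one off by 4

print(mae(y_true, small_errors), mse(y_true, small_errors))    # 1.0 1.0
print(mae(y_true, one_big_error), mse(y_true, one_big_error))  # 1.0 4.0
```

Both prediction sets have the same MAE, but MSE is four times larger for the one with a single large error.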

Cross-validation

Cross-validation uses the entire dataset both to train and to test the model, although no data point is used for both in the same round. Here's how.

Step 1: Divide the dataset into equally sized groups of data points called folds.

Step 2: Train the model on all the folds except one.

Step 3: Test the model on the fold that was left out.

Step 4: Repeat steps 2 and 3 until every fold has served as the test data exactly once.

Step 5: The performance measures are averaged across all folds to estimate the capability of the algorithm on the problem.

For example, with 3 folds:

Step 1: Train on folds 1 and 2, test on fold 3

Step 2: Train on folds 2 and 3, test on fold 1

Step 3: Train on folds 3 and 1, test on fold 2

Step 4: Average the results of all the above steps to get the final result.

The number of folds is usually 3, 5, 7, or 10.
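The k-fold procedure can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions: `k_fold_indices` and `cross_validate` are hypothetical helpers, folds are contiguous blocks of indices (real implementations usually shuffle the data first), and the "algorithm" being evaluated is a trivial baseline that always predicts the mean of its training targets, scored with mean squared error. Any real model could be plugged in through the `fit` and `score` arguments.

```python
def k_fold_indices(n_samples, k):
    """Split indices 0..n_samples-1 into k contiguous folds of (nearly) equal size."""
    # The first n_samples % k folds get one extra index when n_samples is not divisible by k.
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def cross_validate(X, y, fit, score, k=3):
    """Hold out each fold once, train on the rest, and average the k test scores."""
    scores = []
    for test_idx in k_fold_indices(len(X), k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        # Train only on the k-1 training folds.
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        # Evaluate on the single held-out fold.
        predictions = [model(X[i]) for i in test_idx]
        scores.append(score([y[i] for i in test_idx], predictions))
    return sum(scores) / len(scores), scores


# Hypothetical baseline "algorithm": always predict the mean training target.
def fit_mean(X_train, y_train):
    mean = sum(y_train) / len(y_train)
    return lambda x: mean


def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


X = list(range(9))
y = [float(v + 1) for v in X]  # targets 1.0 .. 9.0
average, per_fold = cross_validate(X, y, fit_mean, mse, k=3)
print(per_fold)   # one score per held-out fold
print(average)    # estimate of the algorithm's performance
```

With `k=3`, the loop performs the three train/test rounds listed in the example and then averages their scores.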

Testing Algorithms

Now it's time to test a variety of machine learning algorithms.

Select 5 to 10 standard algorithms that are appropriate for your problem and run them through your test harness. By standard algorithms, we mean popular methods used with no special configuration. Appropriate for your problem means, for example, that the algorithms can handle regression if you have a regression problem.

If you have many algorithms to test, you may want to reduce the size of the dataset so that the evaluation runs faster.
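Spot-checking several algorithms amounts to running each one through the same test harness and ranking the results. The sketch below is illustrative only: it uses three deliberately simple, hypothetical classifiers (`fit_majority`, `fit_nearest_neighbor`, `fit_nearest_centroid`) on a made-up one-dimensional dataset with a single fixed train/test split. In practice you would plug in 5 to 10 real algorithms and typically score them with the cross-validation procedure described above.

```python
from collections import Counter


# Three deliberately simple, hypothetical classifiers. Each fit_* function
# takes training data and returns a predict(x) function.
def fit_majority(X, y):
    label = Counter(y).most_common(1)[0][0]  # most frequent training label
    return lambda x: label


def fit_nearest_neighbor(X, y):
    pairs = list(zip(X, y))
    # Predict the label of the closest training point (1-nearest neighbor).
    return lambda x: min(pairs, key=lambda pair: abs(pair[0] - x))[1]


def fit_nearest_centroid(X, y):
    centroids = {}
    for label in set(y):
        points = [xi for xi, yi in zip(X, y) if yi == label]
        centroids[label] = sum(points) / len(points)
    # Predict the label whose class mean is closest to x.
    return lambda x: min(centroids, key=lambda label: abs(centroids[label] - x))


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def spot_check(algorithms, X_train, y_train, X_test, y_test):
    """Run every algorithm through the same train/test harness; best first."""
    results = {}
    for name, fit in algorithms.items():
        predict = fit(X_train, y_train)
        results[name] = accuracy(y_test, [predict(x) for x in X_test])
    return sorted(results.items(), key=lambda item: item[1], reverse=True)


X_train = [1.0, 1.5, 2.0, 2.5, 6.0, 6.5, 7.0, 8.0, 9.0]
y_train = ["NO"] * 4 + ["YES"] * 5
X_test = [1.2, 2.2, 6.8, 8.5]
y_test = ["NO", "NO", "YES", "YES"]

algorithms = {
    "majority class": fit_majority,
    "nearest neighbor": fit_nearest_neighbor,
    "nearest centroid": fit_nearest_centroid,
}
for name, score in spot_check(algorithms, X_train, y_train, X_test, y_test):
    print(f"{name}: {score:.2f}")
```

Because every candidate sees exactly the same training and test data, the scores are directly comparable, which is the point of a shared test harness.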

Following the above steps, you will be able to determine the best algorithm for your system configuration and problem.