Accuracy and Binary Cross Entropy Loss

Introduction

When using machine learning and data analysis, it's important to check how well your model is doing on the task it's supposed to be performing. Two popular metrics for measuring the performance of a model are Accuracy and Binary Cross Entropy.

Accuracy tells you how many correct predictions your model makes, while Binary Cross Entropy measures the difference between the predicted and true output. Both of these metrics are used in many different areas, such as image classification, natural language processing, and anomaly detection.

In this article at OpenGenus, we will explore the concepts of Accuracy and Binary Cross Entropy in depth, and discuss their advantages and limitations. We will also provide examples of how to use these metrics in different contexts and compare their strengths and weaknesses. Finally, we will highlight the importance of selecting the appropriate metric to test the performance of a model, and provide recommendations for future research in this area.

By the end of this article, readers will have a better understanding of the role of Accuracy and Binary Cross Entropy in evaluating model performance, and will be able to make informed decisions about which metric to use in their own work.

Accuracy

Accuracy is a metric that measures the percentage of correct predictions made by a model. It is calculated as the number of correct predictions divided by the total number of predictions. For example, if a model correctly predicts 90 out of 100 samples, its accuracy is 90%.
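The definition above can be sketched in a few lines of plain Python. The function name `accuracy` and the sample labels are hypothetical and only serve to reproduce the 90 out of 100 example.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Hypothetical data: 100 samples, of which the model gets 90 right.
y_true = [1] * 50 + [0] * 50
y_pred = [1] * 50 + [0] * 40 + [1] * 10  # the last 10 predictions are wrong

print(accuracy(y_true, y_pred))  # 0.9
```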

Accuracy is a popular metric because it is easy to understand and interpret. Yet, it has some limitations. For instance, it assumes that all errors are equally important, which is not always the case. In some scenarios, false positives (predicting something as positive when it is negative) and false negatives (predicting something as negative when it is positive) can have very different consequences.

Moreover, accuracy can be misleading when the data is imbalanced. For example, if a model is trained to predict whether a rare disease is present, and only 1% of the samples have the disease, a model that always predicts "not present" will achieve 99% accuracy. In this case, the model may seem to perform well, but it is not useful for the intended purpose.
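The rare disease scenario is easy to reproduce. The sketch below assumes a hypothetical dataset of 1,000 samples with a 1% positive rate and a "model" that always answers "not present".

```python
# Hypothetical screening data: 1,000 samples, 1% have the disease (label 1).
y_true = [1] * 10 + [0] * 990

# A "model" that always predicts "not present" (label 0).
y_pred = [0] * len(y_true)

correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
accuracy = correct / len(y_true)

# How many of the actual disease cases did the model find?
found = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)

print(f"accuracy: {accuracy:.2f}")             # accuracy: 0.99
print(f"disease cases found: {found} of 10")   # disease cases found: 0 of 10
```

The accuracy looks excellent even though not a single positive case is detected.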

Despite its limitations, accuracy is still a useful metric in many scenarios. For example, in image classification, accuracy can be a good metric to test how well a model can recognize different objects. In natural language processing, we use accuracy to measure how well a model can classify text data into different categories.

It is important to consider the advantages and limitations of accuracy when selecting a performance metric for a model. In some cases, it may be appropriate to use other metrics, such as precision, recall, or F1 score, which take into account the importance of different types of errors and the balance of the data.
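As a rough illustration of these alternative metrics, the sketch below computes precision, recall, and F1 score from the counts of true positives, false positives, and false negatives. The helper name `precision_recall_f1`, the sample data, and the convention of returning 0.0 when a ratio is undefined are assumptions made for this example.

```python
def precision_recall_f1(y_true, y_pred):
    """Precision, recall and F1 for the positive class (label 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    # Assumed convention: report 0.0 when a ratio has a zero denominator.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical imbalanced data: 10 positives, 990 negatives.
# The model finds 6 of the positives and raises 4 false alarms.
y_true = [1] * 10 + [0] * 990
y_pred = [1] * 6 + [0] * 4 + [1] * 4 + [0] * 986

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

On this data the accuracy would be 99.2%, while precision and recall make it visible that four of the ten positive cases are missed.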

Binary Cross Entropy

Binary Cross Entropy is a loss function that measures the difference between the predicted output and the true output. It is used in binary classification problems, where the goal is to predict which of two classes an input belongs to, such as "positive" or "negative", "spam" or "not spam", or "fraudulent" or "non-fraudulent".

The formula for Binary Cross Entropy is:

-(y * log(ŷ) + (1 - y) * log(1 - ŷ))

where y is the true label (0 or 1) and ŷ is the predicted probability of the positive class (a value between 0 and 1). This is the loss for a single sample; in practice it is averaged over all samples. The loss penalizes models that assign a low probability ŷ when the true label is 1, or a high probability ŷ when the true label is 0, and the penalty grows sharply as a confident prediction turns out to be wrong.
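A minimal sketch of this formula in plain Python is shown below. Averaging over samples and clipping the probability with a small `eps` (so that `log(0)` never occurs) are assumptions of this example rather than part of the formula itself.

```python
import math


def binary_cross_entropy(y_true, y_prob, eps=1e-15):
    """Mean of -(y * log(p) + (1 - y) * log(1 - p)) over all samples."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # clip to keep log() finite
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


# Loss for a single positive example (y = 1) at different predicted probabilities.
for p in (0.9, 0.5, 0.1):
    print(p, round(binary_cross_entropy([1], [p]), 3))
# 0.9 0.105
# 0.5 0.693
# 0.1 2.303
```

The loss stays small when the model is confident and right, and rises quickly when it is confident and wrong.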

Binary Cross Entropy is a useful metric because it is used as a guide for training machine learning models. By minimizing the Binary Cross Entropy loss during training, the model can learn to make better predictions. It is also useful because it is differentiable, which means that it can be optimized using gradient descent or other gradient-based optimization techniques.
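To make the differentiability point concrete, the sketch below assumes the predicted probability comes from a sigmoid applied to a raw score z (a common setup, though not one the formula requires). Under that assumption the gradient of the loss with respect to z simplifies to ŷ - y, which we check numerically with a finite difference. All function names here are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def bce(y, p):
    """Binary Cross Entropy for one sample with label y and probability p."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def bce_grad_wrt_logit(y, z):
    """Analytic gradient of BCE with respect to z, where p = sigmoid(z)."""
    return sigmoid(z) - y


# Check the analytic gradient against a central finite difference.
y, z, h = 1, 0.3, 1e-6
numeric = (bce(y, sigmoid(z + h)) - bce(y, sigmoid(z - h))) / (2 * h)
analytic = bce_grad_wrt_logit(y, z)
print(abs(numeric - analytic) < 1e-6)  # True

# One gradient descent step moves z in the direction that lowers the loss.
learning_rate = 0.5  # assumed value for illustration
z_new = z - learning_rate * analytic
print(bce(y, sigmoid(z_new)) < bce(y, sigmoid(z)))  # True
```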

However, Binary Cross Entropy also has some limitations. For example, it weights every sample equally, so it works best when the data is roughly balanced, meaning that there are similar numbers of positive and negative examples. When the data is imbalanced, such as in cases where the positive class is rare, the loss is dominated by the majority class and Binary Cross Entropy may not be the best metric to use. In these cases, alternative metrics such as the F1 score or the AUC-ROC may be more appropriate.
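One way to see the effect of imbalance is to score a predictor that ignores its input and always outputs the positive rate. The datasets and the helper `mean_bce` below are hypothetical and chosen only for illustration.

```python
import math


def mean_bce(y_true, y_prob):
    """Average Binary Cross Entropy over all samples."""
    losses = [
        -(y * math.log(p) + (1 - y) * math.log(1 - p))
        for y, p in zip(y_true, y_prob)
    ]
    return sum(losses) / len(losses)


# Hypothetical datasets: 1% positives versus 50% positives.
imbalanced = [1] * 10 + [0] * 990
balanced = [1] * 500 + [0] * 500

# A constant predictor that outputs the positive rate for every sample.
loss_imbalanced = mean_bce(imbalanced, [0.01] * 1000)
loss_balanced = mean_bce(balanced, [0.5] * 1000)

print(round(loss_imbalanced, 3))  # 0.056
print(round(loss_balanced, 3))    # 0.693
```

The constant predictor reaches a much lower loss on the imbalanced data without learning anything about the inputs, so a low Binary Cross Entropy on rare-class data should be compared against this kind of baseline before it is taken as a sign of a good model.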

Binary Cross Entropy is a valuable metric to use in binary classification problems, but it's important to be aware of its assumptions and limitations, and to choose the appropriate metric depending on the specific task at hand.

Comparing Accuracy and Binary Cross Entropy

Accuracy and Binary Cross Entropy are two important metrics used in machine learning for evaluating the performance of classification models. While they both measure different aspects of model performance, they are often used together to provide a more comprehensive picture of how well a model is doing.

Accuracy tells us how many correct predictions the model makes, as a percentage of the total number of predictions. It's a simple and intuitive metric that is easy to understand and interpret. However, it can be misleading in some cases, such as when the classes are imbalanced or the cost of false positives and false negatives is different.

Binary Cross Entropy, on the other hand, measures the difference between the predicted output and the true output, using a log loss function. It's a more nuanced metric that takes into account the predicted probabilities rather than only the final class labels, and it is useful for optimizing the model during training. However, it is most informative when the data is roughly balanced, and may not be the best metric to use in all cases.
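The difference between the two metrics shows up clearly when two models make the same class decisions with different confidence. In the sketch below, the two sets of probabilities and the 0.5 decision threshold are assumptions made for the example.

```python
import math


def accuracy(y_true, y_prob, threshold=0.5):
    """Accuracy after turning probabilities into labels at the threshold."""
    hits = sum(1 for y, p in zip(y_true, y_prob) if int(p >= threshold) == y)
    return hits / len(y_true)


def mean_bce(y_true, y_prob):
    """Average Binary Cross Entropy over all samples."""
    losses = [
        -(y * math.log(p) + (1 - y) * math.log(1 - p))
        for y, p in zip(y_true, y_prob)
    ]
    return sum(losses) / len(losses)


y_true = [1, 1, 0, 0]
confident = [0.95, 0.90, 0.10, 0.05]  # hypothetical model A
hesitant = [0.55, 0.60, 0.45, 0.40]   # hypothetical model B

print(accuracy(y_true, confident), round(mean_bce(y_true, confident), 3))  # 1.0 0.078
print(accuracy(y_true, hesitant), round(mean_bce(y_true, hesitant), 3))    # 1.0 0.554
```

Both models are 100% accurate here, yet Binary Cross Entropy separates the one that is confidently right from the one that is barely right.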

To get a more complete picture of model performance, it's often useful to use both Accuracy and Binary Cross Entropy together. For example, during training, we may want to minimize the Binary Cross Entropy loss function, while also tracking the Accuracy of the model on a validation set. This allows us to optimize the model while also keeping track of its performance on the task at hand.
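A minimal sketch of this workflow is shown below: a one-feature logistic regression trained with plain gradient descent, logging the training Binary Cross Entropy and the validation Accuracy after every epoch. The synthetic data, the learning rate, the number of epochs, and all helper names are assumptions chosen for illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def mean_bce(ys, ps, eps=1e-12):
    """Average Binary Cross Entropy, with clipping to keep log() finite."""
    total = 0.0
    for y, p in zip(ys, ps):
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(ys)


def make_data(n, rng):
    """Hypothetical 1-D data: class 1 is centered at +1, class 0 at -1."""
    data = []
    for _ in range(n):
        y = rng.randint(0, 1)
        x = rng.gauss(1.0 if y == 1 else -1.0, 1.0)
        data.append((x, y))
    return data


rng = random.Random(0)
train, val = make_data(200, rng), make_data(100, rng)

w, b, lr = 0.0, 0.0, 0.5
history = []
for epoch in range(20):
    # Gradient of the mean BCE with respect to w and b.
    grad_w = grad_b = 0.0
    for x, y in train:
        err = sigmoid(w * x + b) - y
        grad_w += err * x
        grad_b += err
    w -= lr * grad_w / len(train)
    b -= lr * grad_b / len(train)

    # Optimize on the loss, but also track Accuracy on held-out data.
    train_loss = mean_bce([y for _, y in train],
                          [sigmoid(w * x + b) for x, _ in train])
    val_acc = sum(1 for x, y in val
                  if int(sigmoid(w * x + b) >= 0.5) == y) / len(val)
    history.append((train_loss, val_acc))

print("first epoch:", history[0])
print("last epoch: ", history[-1])
```

The loss is what gradient descent actually minimizes, while the validation Accuracy reports how the model performs on the task in terms that are easier to interpret.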

In summary, both Accuracy and Binary Cross Entropy are important metrics for evaluating the performance of classification models. While they have their strengths and limitations, using them together can provide a more comprehensive view of how well a model is performing on a given task. By choosing the right metrics and interpreting them correctly, we can build better models and make more informed decisions.

Applications and Use Cases

Accuracy and Binary Cross Entropy are widely used in machine learning for a variety of applications, including image classification, natural language processing, and anomaly detection. Here are some specific use cases where these metrics are commonly used:

Image classification: In image classification tasks, models are trained to classify images into different categories. Accuracy is often used to evaluate how well the model is doing overall, while other metrics such as Precision and Recall may be used to evaluate the model's performance on specific categories. Binary Cross Entropy is used as the loss function during training to optimize the model.

Natural language processing: In natural language processing, models are trained to classify text data into different categories, for tasks such as sentiment analysis or topic classification. Accuracy is often used to evaluate how well the model is doing overall, while other metrics such as F1 Score and AUC-ROC may be used to evaluate the model's performance on specific categories. Binary Cross Entropy is used as the loss function during training to optimize the model.

Anomaly detection: In anomaly detection tasks, models are trained to identify unusual or unexpected events in data. Accuracy may be used to evaluate how well the model is doing overall, while other metrics such as Precision and Recall may be used to evaluate the model's performance on detecting anomalies. Binary Cross Entropy may be used as the loss function during training to optimize the model.

In addition to these specific use cases, Accuracy and Binary Cross Entropy are also used in many other areas of machine learning, such as recommendation systems, fraud detection, and predictive maintenance. By using these metrics effectively, we can build better models and make more informed decisions in a wide range of applications.

Example:

from sklearn.datasets import make_classification

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score, log_loss

from sklearn.model_selection import train_test_split

# Generate random binary classification data

X, y = make_classification(n_samples=1000, n_features=10, n_informative=5, n_redundant=0, n_clusters_per_class=1, random_state=42)

# Split the data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train a logistic regression model on the training data

model = LogisticRegression(random_state=42).fit(X_train, y_train)

# Predict class labels (for Accuracy) and positive-class probabilities (for Binary Cross Entropy)

y_pred = model.predict(X_test)

y_prob = model.predict_proba(X_test)[:, 1]

# Calculate the Accuracy and Binary Cross Entropy (log_loss expects probabilities, not hard labels)

accuracy = accuracy_score(y_test, y_pred)

binary_cross_entropy = log_loss(y_test, y_prob)

//Print the results

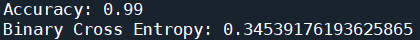

print("Accuracy:", accuracy)

print("Binary Cross Entropy:", binary_cross_entropy)

Output:

Explanation:

In this example, we first generate a random binary classification dataset using the make_classification function from scikit-learn. We then split the data into training and testing sets, and train a logistic regression model on the training data.

Next, we make predictions on the test data using the trained model, and calculate the Accuracy and Binary Cross Entropy using the accuracy_score and log_loss functions from scikit-learn.

Finally, we print out the results, which in this case are the Accuracy and Binary Cross Entropy scores for the logistic regression model on the test data.

Conclusion

In conclusion, Accuracy and Binary Cross Entropy are two important metrics that are widely used in machine learning and data analysis. Accuracy measures the percentage of correct predictions made by a model, while Binary Cross Entropy measures the difference between the predicted output and the true output. Both metrics are important in evaluating the performance of a model and are used in various domains such as image classification, natural language processing, and anomaly detection.

When comparing Accuracy and Binary Cross Entropy, it's important to note that they measure different aspects of a model's performance. While Accuracy is a simple metric that provides a measure of how well a model is classifying data, Binary Cross Entropy provides a more nuanced view of the model's performance by taking into account the confidence of the model's predictions.

Overall, it's important to use multiple metrics when evaluating a model to get a more comprehensive understanding of its performance. By using both Accuracy and Binary Cross Entropy, we can get a more complete picture of how well a model is performing on a given task, and use this information to improve the model's performance.