Learning to Walk via Deep Reinforcement Learning [Summary]

Summary

This article at OpenGenus is a brief summary on the subject "Learning to Walk via Deep Reinforcement Learning" from a Berkeley research paper. Before diving in, here are some core ideas from the paper:

- Deep Reinforcement Learning

- Maximum Entropy Reinforcement Learning

- Asynchronous Learning

- Data Collection

- Motion Capture

- Training of Deep Learning Models

- Automation of Temperature Adjustment

- Real-World Application

- Sample Efficiency

- Robustness and Adaptability

Table of Contents

- Introduction

- Background

- Related Work

- Asynchronous Learning System

- Reinforcement Learning Preliminaries

- Automating Entropy Adjustment for Maximum Entropy RL

- Experimental Results

- Conclusion

To read their full study with illustrations, please view the following site: https://arxiv.org/pdf/1812.11103.pdf

Introduction

The field of robotic locomotion has long faced challenges in designing effective controllers for legged robots. Current approaches rely on complex pipelines and require extensive knowledge of the robot's dynamics. However, deep reinforcement learning (deep RL) offers a promising alternative by enabling the acquisition of complex controllers that can directly map sensory inputs to low-level actions. Applying deep RL to real-world robotic tasks is difficult due to poor sample efficiency and sensitivity to hyperparameters. This paper presents a sample-efficient deep RL algorithm based on maximum entropy RL that minimizes per-task tuning and requires a modest number of trials. The algorithm is applied to train a Minitaur robot to walk, achieving a stable gait in about two hours of real-world time without relying on explicit models or simulations.

Background

This research paper was written by Tuomas Haarnoja, Sehoon Ha, Aurick Zhou, Jie Tan, George Tucker and Sergey Levine for the Google Brain Berkeley Artificial Intelligence Research at the Unversity of California, Berekeley. This paper was published in 2019 and was very innovative in defining and exploring applications of deep learning to autonomous machines.

Related Work

Current state-of-the-art locomotion controllers for legged robots typically employ a pipelined approach, which requires expertise in various components and accurate knowledge of the robot's dynamics. In contrast, deep RL approaches aim to learn controllers without prior knowledge of the gait or the robot's dynamics, making them more applicable to a wide range of robots and environments. However, applying deep RL directly to real-world tasks is challenging, often resulting in the need for extensive sample collection and hyperparameter tuning. Previous research has explored learning locomotion gaits in simulation and transferring them to real-world robots, but performance can suffer due to the discrepancies between simulation and reality. This paper focuses on acquiring locomotion skills directly in the real world using neural network policies.

Asynchronous Learning System

To facilitate real-world reinforcement learning for robotic locomotion, the paper proposes an asynchronous learning system that consists of three components:

- Data Collection Job:

Autonomous robots equipped with sensors and actuators explore real-world environments, gathering diverse and representative datasets. These datasets capture sensory inputs, motor actions, and corresponding outcomes, forming the foundation for subsequent training. - Motion Capture Job:

Specialized motion capture systems accurately capture the robot's movements during data collection. High-resolution cameras or other sensing technologies track position, orientation, and joint angles in real-time, providing precise motion information that complements the collected data. - Training Job:

Deep learning models for robotic locomotion are trained using the collected data and motion information. Reinforcement learning and imitation learning techniques optimize the robot's locomotion skills, updating neural network architectures iteratively. This training job enables continuous improvement of the robot's locomotion abilities over time.

These subsystems run asynchronously on different machines, allowing for easy scaling and error recovery. The data collection job executes the latest policy, collecting observations, performing policy inference, and recording the observed trajectories. The motion capture job computes the reward signal based on the robot's position, periodically pulling data from the robot and motion capture system. The training job randomly samples data from a buffer and updates the value and policy networks using stochastic gradient descent. This asynchronous design enables subsystems to be paused or restarted independently, enhancing robustness and scalability.

Reinforcement Learning Preliminaries

Reinforcement learning (RL) aims to learn a policy that maximizes the expected sum of rewards. In the context of Markov decision processes, RL deals with continuous state and action spaces. The paper introduces the concept of maximum entropy RL, which optimizes both the expected return and the entropy of the policy. The objective of maximum entropy RL encourages exploration and robustness by injecting structured noise during training. However, finding the right temperature parameter, which determines the trade-off between exploration and exploitation, can be challenging. Scaling the reward function also affects the trade-off, making the selection of an optimal scale crucial. The paper discusses the challenges posed by the temperature parameter and proposes an algorithm that automates temperature adjustment during training, significantly reducing the effort of hyperparameter tuning.

Automating Entropy Adjustment for Maximum Entropy RL

To address the sensitivity of maximum entropy RL to the temperature parameter, the paper presents an algorithm that automates temperature adjustment at training time. Rather than fixing the entropy to a specific value, the algorithm formulates a modified RL objective that treats entropy as a constraint. The expected entropy of the policy is constrained, allowing for variation in entropy across different states. By Lagrangian relaxation, the modified problem leads to the original RL objective with an additional term that constrains the expected entropy. The algorithm optimizes this modified objective using stochastic gradient descent, effectively adjusting the temperature to meet the entropy constraint. This automation reduces the need for manual tuning and results in policies that are more robust to variations in the environment.

Experimental Results

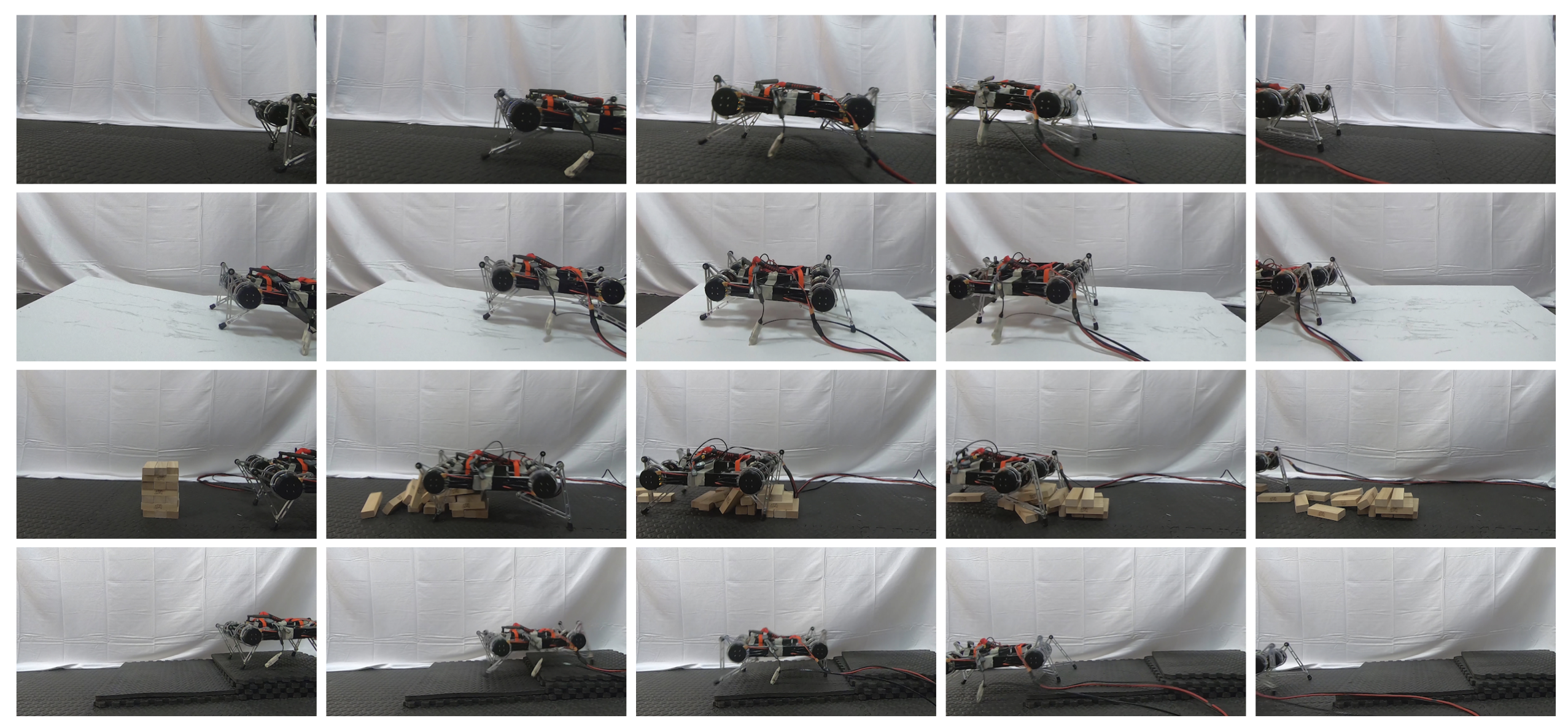

The paper presents experimental results demonstrating the effectiveness of the proposed algorithm. Using the Minitaur robot, the authors trained a walking gait directly in the real world. The algorithm achieved stable locomotion within approximately two hours, showcasing its sample efficiency and applicability to physical robots. Furthermore, the algorithm's performance was compared to simulated benchmark tasks, where it achieved state-of-the-art results with the same set of hyperparameters. The trained policy demonstrated robustness to variations in the environment, maintaining stable locomotion even when confronted with challenging terrains.

Conclusion

The paper introduces a sample-efficient deep RL algorithm based on maximum entropy RL for training locomotion controllers on legged robots. The proposed algorithm addresses challenges related to poor sample efficiency and hyperparameter tuning. It enables the acquisition of stable locomotion gaits directly in the real world without relying on explicit models or simulations. The asynchronous learning system facilitates easy scaling and error recovery, making it suitable for large-scale deployment. The experimental results demonstrate the algorithm's effectiveness and robustness, showcasing its potential for automating the acquisition of complex locomotion controllers for legged robots.