The RUM Conjecture

Table of Contents:

- Introduction

- What is an Access Method?

- What is the RUM Overhead?

a. Read Overhead

b. Update Overhead

c. Memory Overhead - RUM Conjecture

a. Formal Explanation

b. Examples of Application - Conclusion

Introduction

In this article at OpenGenus, let's understand the concept of RUM Conjecture. In simple words, it states that designing an access method for a storage system that is optimal in terms of all three : Read, Update and Memory overhead is impossible. Thus, we have to trade one to make the other two optimal. But first,

What is an Access method?

An access method is like a guide that helps us find and retrieve specific information from a large collection of data. It's like having a well organized filing system where each file has a label to quickly locate it. The access method provides efficient ways to search, add, update or remove data based on certain criteria. It ensures that data operations are performed quickly and accurately, allowing us to find the information we need without searching through the entire collection.

What is the RUM overhead?

RUM overhead refers to the additional costs or resources required when implementing access methods for storage systems. It is the price we pay for achieving higher levels of consistency. An ideal storage system would be the one that has an access method that has lowest Read overhead, minimal Update cost and does not require any extra Memory space, over the main data. In reality, achieving this is impossible and that is what the RUM Conjecture states.

Read Overhead:

Read overhead occurs when the storage system needs to perform additional reads on auxiliary data structures to fetch the main data that is required. Think of it like having to look up information in a separate book to find what you're looking for. These extra reads add overhead because they take extra time and resources. The storage system needs to access these auxiliary structures to speed up the read process, but it comes at the cost of additional overhead. It's like taking a detour to find the information you need, which can slow down the overall reading process.

It is measured as Read Amplification and is determined by the ratio between the total data read (including both main data and auxiliary data) and the intended amount of main data to be read. This can be expressed as:

Read Overhead = Total Data Read / Intended Main Data Read

Update Overhead

Update overhead occurs when the storage system needs to make additional writes to auxiliary data while performing updates to the intended main data. Think of it like making changes to a document and also needing to update an index or table of contents at the same time. These extra writes add overhead because they require additional effort and resources. The storage system needs to update these auxiliary structures alongside the main data to keep everything in sync. It's like having to make multiple changes across different parts of a project, which can take more time and resources compared to just updating the main data alone.

It is measured as Write Amplification and is calculated by dividing the total amount of data written (including both main data and auxiliary data) by the intended amount of main data to be updated. This can be expressed as:

Update Overhead = Total Data Written / Intended Main Data Updated

Memory Overhead

Memory overhead happens when the storage system needs extra space to store additional data structures that help make reading and writing data faster or serve common access patterns. It's like having a special toolbox with extra tools that you use to complete tasks more efficiently. These additional data structures take up extra storage space alongside the main data that needs to be stored. So, it's like having an extra storage area to keep all the tools you need to work on a project, in addition to the space needed for the project itself.

It is measured as Space Amplification and is calculated by dividing the space utilized for both auxiliary and main data by the space utilized by the main data alone. This can be expressed as:

Memory Overhead = (Space Utilized for Auxiliary Data + Space Utilized for Main Data) / Space Utilized for Main Data

Now that we know the basics, we can state the RUM Conjecture more formally.

RUM Conjecture

"The RUM Conjecture states that an access method that can set an upper bound for two out of the read, update and memory overheads, also sets a lower bound for the third overhead."

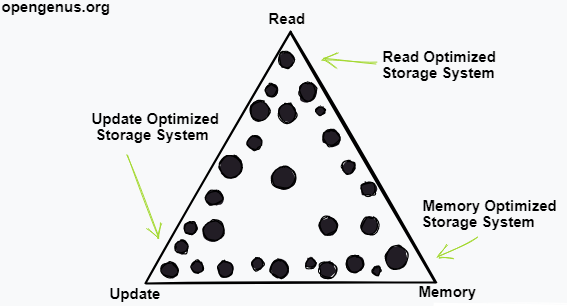

Or in simple terms, The RUM Conjecture suggests that while designing an access method for a storage system, we are faced with a trade off between three important factors: read performance, update performance and memory usage. These three factors form a competing triangle.

It means that we cannot optimize all three factors simultaneously. If we prioritize improving one, it will come at the cost of the other two. So, it's crucial to find the right balance based on the needs of the system and prioritize which factor is most important to achieve the desired performance and efficiency.

Examples of how the RUM Conjecture can be applied in practice:

When designing a storage system, it's important to select the appropriate access method based on the system's specific requirements. Here are some examples:

-

Read Optimized: Access methods such as hash-based indexes and logarithmic time structures like B-Trees, Tries, Prefix B-Trees and Skiplists provide efficient and quick access to data.

-

Write Optimized: Access methods such as the Log-structured Merge Tree, Partitioned B-tree, Materialized Sort-Merge (MaSM) algorithm, Stepped Merge algorithm and Positional Differential Tree consolidate updates and apply them in bulk to the base data thus improving write performance.

-

Space Optimized: These access methods focus on reducing storage requirements. They include compression techniques, lossy index structures like Bloom filters and count min sketches, bitmaps with lossy encoding and approximate tree indexing. Sparse indexes like ZoneMaps, Small Materialized Aggregates and Column Imprints are other examples of space optimized access methods.

Conclusion

To conclude, The RUM Conjecture is a valuable tool for understanding the balance between read, update and memory overhead in data access. It can help us make informed decisions about the design of our systems and it can help to guide the development of new access methods that are more efficient and scalable.