Open-Source Internship opportunity by OpenGenus for programmers. Apply now.

This article aims to improve our understanding of oversampling and undersampling which are important concepts in Data Science.

Table of contents

- Need for over and under sampling

- Random oversampling

- Random undersampling

Need for over and under sampling

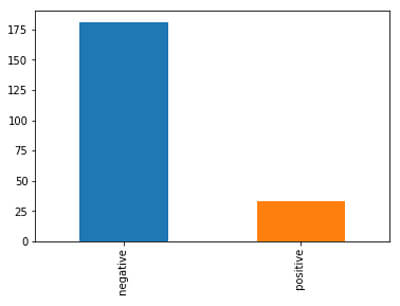

When we have a severe skew in the class distribution of our data, we call it imbalanced dataset. This means that in our dataset, members of one class (usually the minority class) are way higher than the other(majority class). When we train our machine learning model with such skewed data, it affects its performance. One effect is that, our machine learning model may completely ignore the minority class whose predictions we are most interested in. For example, in cancer detection, we are likely to have a majority of data of people who do not have cancer. This may affect our model's way to predict patients with cancer, which concerns us. This is known as imbalanced classification problem.

One way to deal with such imbalanced datasets is random resampling. This is also known as a naive technique as when performed, it has no assumptions about the data and creates an entirely new balanced dataset. There are two ways to randomly resample an imbalanced dataset are to duplicate data samples from minority class or delete data samples from majority class. The former is known as oversampling and the latter, undersampling.

Both these techniques involve bringing in a bias to pick out more samples from one class than the other to compensate the imbalance already present in the dataset. It is to be noted that resampling techniques are only applied to the training dataset and not the test or validation dataset.

Random oversampling

In random oversampling, samples from the minority class are duplicated and added to the dataset in a random manner.

Here, we select data samples belonging to the minority class with replacement. This means that data samples belonging to the minority class are selected from the imbalanced dataset, added to the new dataset and again are replaced in their original place. This allows the same data to be selected again and again.

This technique is highly effective for machine learning algorithms like support vector machine and decision tree that seek a good balance in data. Oversampling is also an effective solution for models that are affected by imbalance in distribution like the artificial neural networks.

When it is done on datasets that are severely imbalanced, random oversampling may increase the likelihood of the model to be overfitted as exact copies of the minority samples are made which results in generalization error. As a result, our model may perform very well with the training dataset but give us bad results with the test data.

Random undersampling

In random undersampling, samples from the majority class are deleted from the training dataset in a random manner.

Random undersampling results in a new dataset containing reduced number of data from the majority class. This may be repeated until we get a balanced dataset. This method may prove useful when the minority class contains sufficient data samples despite the severe imbalance in the dataset.

In this technique, there is no way to preserve the 'good data'. While discarding large amounts of data, data that are important or useful too may get deleted. When such useful data are deleted, it may become hard for the machine learning algorithm to learn the decision boundary between instances from majority and minority classes.

With this article at OpenGenus, you must have the complete idea of Over and under sampling in Data Science.