There are several techniques for automating the retrieval of information from web resources, from the simplest tasks to advanced workflow automation. This article explores different solutions in Java together with their pros and cons.

All examples in this article can be categorized as "web scraping", as they automate the client side of the client-server interaction. Another way to retrieve data from a website is to use a standardized API, such as a REST API, if the website provides one.

Table of contents:

- How web browser gets webpages

- Download the source code of the webpage

- Download and render webpage

- Control system web-browser programmatically

- Conclusion

How web browser gets webpages

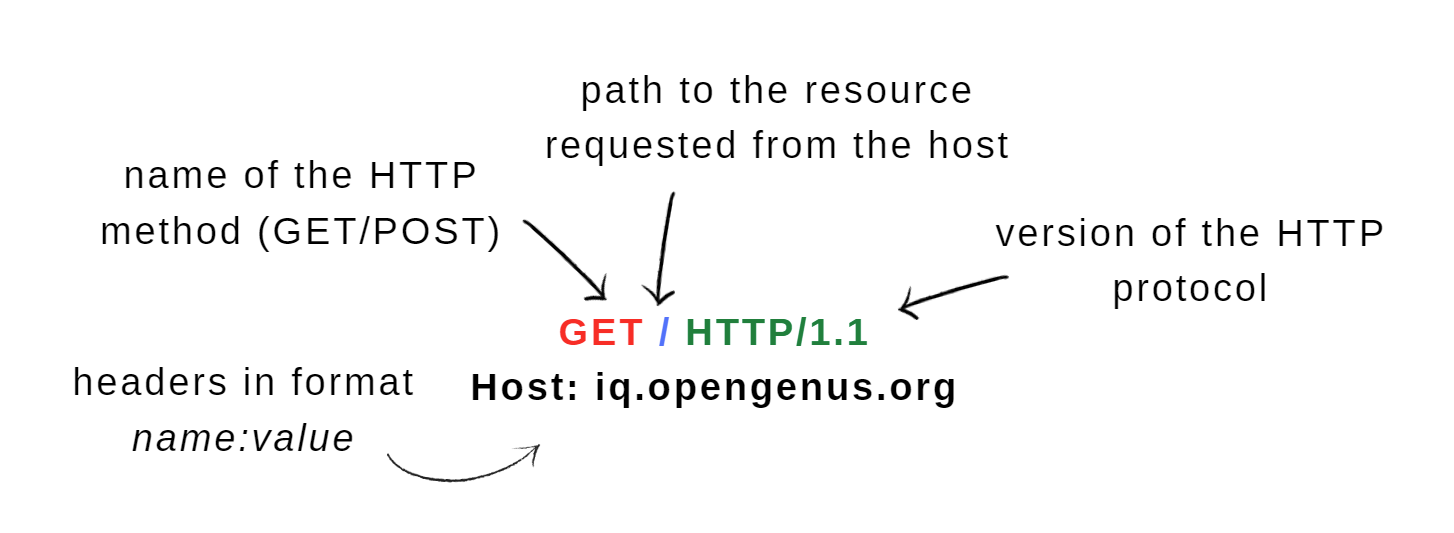

To download a webpage from the network, the first step is to send a request to the address of the web server and ask for the data. This is usually done with an HTTP GET request, which web browsers send automatically. Upon receiving the request, the web server sends back a response and, if no error occurred along the way, places the requested data in the body of the response.

The simplest GET request looks like this:

GET / HTTP/1.1

Host: iq.opengenus.org

Response of the web-server:

HTTP/1.1 200 OK

Server: nginx/1.14.0 (Ubuntu)

Date: Thu, 28 Apr 2022 04:26:49 GMT

Content-Type: text/html; charset=utf-8

-----------------------------------------> additional headers ...

...

body of the response with the html:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8" />

<meta http-equiv="X-UA-Compatible" content="IE=edge" />

-----------------------------------------> the whole html page is received

</body>

</html>

Next, the web browser renders the HTML and runs scripts, if any, so that the page can be viewed by a human reader in a nicely formatted way.

This is the basic process that takes place each time a webpage is loaded by the web browser. Now let's explore how this process can be automated with Java.
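Before moving on to the library-based approaches, the request and response text described above can be sketched in plain Java. This is a minimal illustration, not a full HTTP implementation: the class and method names (`HttpMessageSketch`, `buildGetRequest`, `parseStatusCode`) are hypothetical, and the sketch assumes the default port and sends only a `Host` and a `Connection` header.

```java
import java.net.URI;

// Minimal sketch: the text of a GET request and the parsing of a status line.
final class HttpMessageSketch {

    // Builds the request text a client could send for the given address.
    // Assumption: default port, so the Host header carries only the host name.
    static String buildGetRequest(URI address) {
        String path = address.getRawPath();
        if (path == null || path.isEmpty()) {
            path = "/"; // an empty path is requested as "/"
        }
        if (address.getRawQuery() != null) {
            path += "?" + address.getRawQuery();
        }
        return "GET " + path + " HTTP/1.1\r\n"
                + "Host: " + address.getHost() + "\r\n"
                + "Connection: close\r\n"
                + "\r\n"; // an empty line ends the header section
    }

    // Extracts the numeric status code from a status line such as "HTTP/1.1 200 OK".
    static int parseStatusCode(String statusLine) {
        String[] parts = statusLine.trim().split(" ", 3);
        if (parts.length < 2 || !parts[0].startsWith("HTTP/")) {
            throw new IllegalArgumentException("Not an HTTP status line: " + statusLine);
        }
        return Integer.parseInt(parts[1]);
    }

    public static void main(String[] args) {
        System.out.print(buildGetRequest(URI.create("https://iq.opengenus.org/")));
        System.out.println(parseStatusCode("HTTP/1.1 200 OK"));
    }
}
```

In practice the classes shown in the following sections build and parse these messages internally, so there is rarely a reason to do it by hand.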

Download the source code of the webpage

This approach relies on generating a GET request and retrieving HTML code from the body of the server response just like the web browser gets it.

HttpURLConnection

The standard Java class URLConnection and its subclass HttpURLConnection allow reading from and writing to the resource referenced by a URL.

import java.io.BufferedReader;

import java.io.BufferedWriter;

import java.io.FileWriter;

import java.io.IOException;

import java.io.InputStreamReader;

import java.net.HttpURLConnection;

import java.net.MalformedURLException;

import java.net.URL;

public class WebPageDownloader {

public static void main(String[] args){

try {

// Translate address of the webpage to the URL form

URL address = new URL("https://iq.opengenus.org//");

// Instantiate connection object

HttpURLConnection connection = (HttpURLConnection) address.openConnection();

// Set the properties of the connection (optional step)

connection.setRequestMethod("GET"); // GET or POST HTTP method, GET is default

connection.setRequestProperty("accept-language", "en"); // add headers to the request

// Send HTTP request to the server - the connect is triggered by methods like:

// getInputStream

// getOutputStream

// getResponseCode

// getResponseMessage

// connect

// Proceed with downloading only if server sends code OK 200

int http_status = connection.getResponseCode();

if(http_status != HttpURLConnection.HTTP_OK) {

throw new IOException("Server responded with error, code: " + http_status);

}

// Set up input stream to read data from the server

BufferedReader in = new BufferedReader(new InputStreamReader(connection.getInputStream()));

// Set up output stream to the file on disk

BufferedWriter out = new BufferedWriter(new FileWriter("page.html"));

// Save data to the html file

String line;

while ((line = in.readLine()) != null) {

out.write(line + "\n");

}

// Close input output streams after work is done

in.close();

out.close();

} catch (MalformedURLException e) {

System.out.println("Wrong URL address");

} catch (IOException e) {

System.out.println("Download error " + e.getMessage());

}

}

}
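The save step of the program above can also be sketched with try-with-resources, which closes both streams even when an exception interrupts the copy. The names `StreamSaver` and `saveAsUtf8` are hypothetical, and UTF-8 is an assumption based on the `charset=utf-8` value in the sample response header; a real page may declare a different encoding. An in-memory stream stands in for `connection.getInputStream()` so the sketch runs offline.

```java
import java.io.BufferedReader;
import java.io.BufferedWriter;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

final class StreamSaver {

    // Copies text from the input stream to the target file line by line.
    // Both streams are closed automatically, even if an exception is thrown.
    static void saveAsUtf8(InputStream source, Path target) throws IOException {
        try (BufferedReader in = new BufferedReader(
                     new InputStreamReader(source, StandardCharsets.UTF_8));
             BufferedWriter out = Files.newBufferedWriter(target, StandardCharsets.UTF_8)) {
            String line;
            while ((line = in.readLine()) != null) {
                out.write(line);
                out.newLine(); // writes the platform line separator
            }
        }
    }

    public static void main(String[] args) throws IOException {
        // Stand-in for connection.getInputStream(), so the sketch runs offline
        InputStream fake = new ByteArrayInputStream(
                "<html>\n<body>Hello</body>\n</html>".getBytes(StandardCharsets.UTF_8));
        Path target = Files.createTempFile("page", ".html");
        saveAsUtf8(fake, target);
        System.out.println("Saved " + Files.size(target) + " bytes to " + target);
    }
}
```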

The output is the HTML file for a single page. With additional parsing tools, specific data can be fetched from the file. Trying to open the file in a web browser reveals that multiple formatting elements are lost: this is because webpages rely heavily on relative references to load additional styles/images/scripts.

[Figure: side-by-side comparison of the original webpage and the downloaded webpage]

To load all elements that were included via relative references, it is necessary either to convert the relative references to absolute ones or to go through each reference and download the referenced resource as well. The Chrome web browser, for example, does exactly this when saving a webpage to the local disk: it converts some references to absolute form and downloads other resources to a local folder.

-----> the relative reference to the image

<link rel="shortcut icon" href="/favicon.png" type="image/png">

-----> the same reference in the saved page - Chrome added full path to make reference absolute

<link rel="shortcut icon" href="https://iq.opengenus.org/favicon.png" type="image/png">

-----> the relative reference to the CSS style sheet

<link rel="stylesheet" type="text/css" href="/assets/built/prism.css?v=f19783a367">

-----> Chrome downloaded css file to the local folder and fixed the reference

<link rel="stylesheet" type="text/css" href="./OpenGenus IQ_ Computing Expertise & Legacy_files/prism.css">
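The first of these fixes, turning relative references into absolute ones, can be sketched with the standard `java.net.URI.resolve` method. The class and method names below are hypothetical, and the regular expression is a deliberate simplification: it only handles double-quoted `href` and `src` values and assumes each value is a syntactically valid URI. A real HTML parser is more robust.

```java
import java.net.URI;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class LinkAbsolutizer {

    // Matches href="..." and src="..." attributes with double-quoted values.
    private static final Pattern LINK = Pattern.compile("(href|src)=\"([^\"]*)\"");

    // Resolves one reference against the address of the page it came from.
    static String toAbsolute(String pageAddress, String reference) {
        return URI.create(pageAddress).resolve(reference).toString();
    }

    // Rewrites every matched attribute value in the HTML to its absolute form.
    static String absolutizeLinks(String html, String pageAddress) {
        Matcher m = LINK.matcher(html);
        StringBuilder result = new StringBuilder();
        while (m.find()) {
            String absolute = toAbsolute(pageAddress, m.group(2));
            m.appendReplacement(result,
                    Matcher.quoteReplacement(m.group(1) + "=\"" + absolute + "\""));
        }
        m.appendTail(result);
        return result.toString();
    }

    public static void main(String[] args) {
        String tag = "<link rel=\"shortcut icon\" href=\"/favicon.png\" type=\"image/png\">";
        System.out.println(absolutizeLinks(tag, "https://iq.opengenus.org/"));
    }
}
```

Applied to the favicon tag above, this produces the same absolute reference that Chrome wrote into the saved page. References that are already absolute are left unchanged by `resolve`.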

JSoup

JSoup is a Java HTML parser that provides tools for loading webpages and scraping data. It does not execute JavaScript, but it has tools for working with the elements of a page and requires significantly less code than the HttpURLConnection approach.

As JSoup is a third-party library, additional setup is needed to run it: either download it manually and add it to the build path, or use Gradle or Maven to manage the build automatically.

To use Maven, create a Maven project and add the dependency to the pom.xml file, so that all needed packages are downloaded automatically.

This dependency should be added to the pom.xml file; the version number may vary:

<dependency>

<groupId>org.jsoup</groupId>

<artifactId>jsoup</artifactId>

<version>1.13.1</version>

</dependency>

import org.jsoup.Connection;

import org.jsoup.Jsoup;

import java.io.BufferedWriter;

import java.io.FileWriter;

import java.io.IOException;

public class WebPageDownloaderJsoup {

public static void main(String[] args) {

try {

// Send GET request to the server and fetch the response

Connection.Response response = Jsoup.connect("https://iq.opengenus.org//").execute();

// Parse response to get string with html code

String html = response.body();

// Save HTML to file

BufferedWriter writer = new BufferedWriter(new FileWriter("page.html"));

writer.write(html);

writer.close();

} catch (IOException e) {

System.out.println("Download error: " + e.getMessage());

}

}

}

JSoup greatly simplifies both fetching and parsing the data, so depending on the task it can offer a good balance between simplicity and functionality.

Similar solutions based on fetching of the HTML can be implemented with Apache HttpClient, HtmlCleaner, and HtmlUnit.
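If adding a third-party library is not an option, Java 11 and later also ship a built-in `java.net.http.HttpClient`. The following is a minimal sketch under a few assumptions: the class and method names are hypothetical, the `Accept-Language` header mirrors the earlier HttpURLConnection example, and the default client is used, which does not follow redirects, so any status other than 200 is treated as an error.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

final class HttpClientSketch {

    // Describes the GET request without sending it.
    static HttpRequest buildRequest(String address) {
        return HttpRequest.newBuilder(URI.create(address))
                .header("Accept-Language", "en")
                .GET()
                .build();
    }

    // Sends the request and returns the body of the response as a string.
    static String download(String address) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient(); // default settings: redirects are not followed
        HttpResponse<String> response =
                client.send(buildRequest(address), HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IOException("Server responded with code " + response.statusCode());
        }
        return response.body();
    }

    public static void main(String[] args) throws Exception {
        String html = download("https://iq.opengenus.org/");
        System.out.println(html.length() + " characters downloaded");
    }
}
```

Like HttpURLConnection, this only fetches the HTML; parsing it still requires a separate tool such as JSoup.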

Download and render webpage

If it is important to load a webpage with original formatting and to be able to interact with it and run embedded scripts, Java provides solutions to render the page with its GUI packages.

JavaFX

The WebEngine class of JavaFX can load a webpage, apply styles, and run JavaScript. The WebView node manages a WebEngine, accepts mouse and keyboard input from the user, and can be added to the application window.

import org.w3c.dom.Document;

import javafx.application.Application;

import javafx.scene.Scene;

import javafx.scene.layout.Pane;

import javafx.scene.web.WebEngine;

import javafx.scene.web.WebView;

import javafx.stage.Stage;

public class WebPageDownloaderJavaFX extends Application {

// Entry point to the JavaFX application

public static void main(String[] args) {

launch(args);

}

// Start called by the launch() method

@Override

public void start(final Stage stage) {

// WebView automatically creates WebEngine

final WebView webView = new WebView();

final WebEngine webEngine = webView.getEngine();

// Set webView to render in application window

Pane root = new Pane(webView);

Scene scene = new Scene(root);

stage.setScene(scene);

stage.show();

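// load() is asynchronous, so the DOM document is available only after loading succeeds

webEngine.getLoadWorker().stateProperty().addListener((observable, oldState, newState) -> {

if (newState == javafx.concurrent.Worker.State.SUCCEEDED) {

// Get reference to the DOM document of the webpage

Document page = webEngine.getDocument();

}

});

// Load webpage - webEngine automatically sends get request

webEngine.load("https://iq.opengenus.org//");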

}

}

[Figure: side-by-side comparison of the original webpage and the page rendered in JavaFX WebView]

So, with the JavaFX WebEngine the downloaded webpage can be rendered and interacted with, and with some additional work the required data can be extracted. The drawback of this approach is that, although it works, there are more popular solutions that are easier to work with.

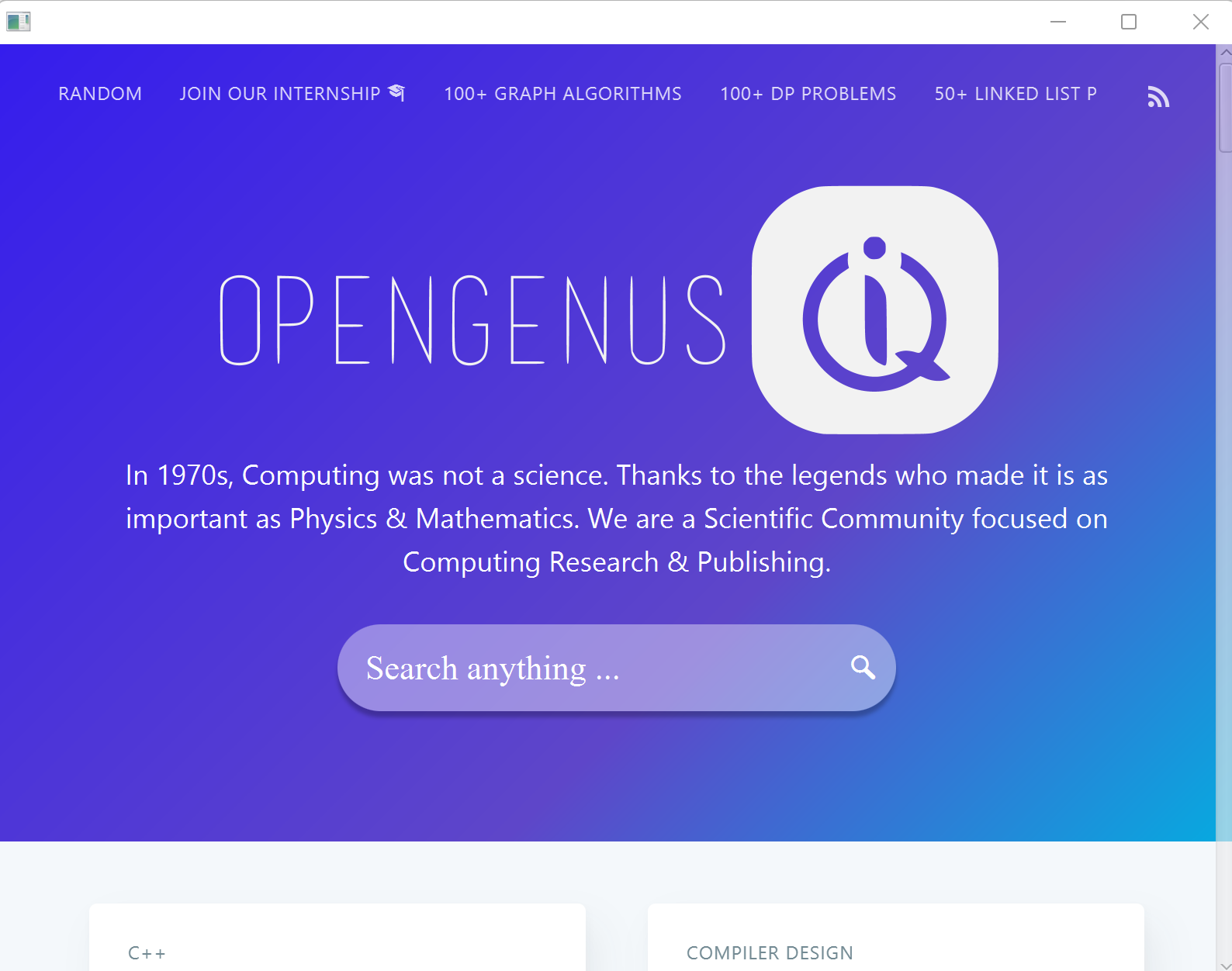

Control system web-browser programmatically

Most desktop operating systems come with a pre-installed web browser that is optimized for sending web requests and downloading files from the network. Instead of creating new programs from scratch, it might be easier to automate the existing web browser, especially for webpages that have multiple interactive elements and styles.

Load webpage with default system browser

If the only thing to do is to open a webpage, Java can do it easily with the default system browser.

import java.awt.Desktop;

import java.io.IOException;

import java.net.URI;

import java.net.URISyntaxException;

public class WebPageDownloaderWindowsDefaultBrowser {

public static void main(String[] args){

try {

// Convert string to URI object

URI address = new URI("https://iq.opengenus.org//");

// Get default browser and command it to open address in new tab

Desktop.getDesktop().browse(address);

} catch (IOException e) {

System.out.println("Launching default browser error: "+ e.getMessage());

} catch (URISyntaxException e) {

System.out.println("Wrong address:" + e.getMessage());

}

}

}

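The solution is very simple, but it gives no control over the choice of browser or over further interaction with webpage elements. Additionally, support depends on the platform and desktop environment: java.awt.Desktop is not available everywhere (for example, in headless environments), so a portable program should check Desktop.isDesktopSupported() and the BROWSE action before calling browse().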
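Whether the browse action is available depends on the platform and desktop environment, and `java.awt.Desktop` exposes checks for it. The sketch below shows one way to guard the call. The class and method names are hypothetical, and restricting addresses to the `http` and `https` schemes is an assumption made for this example, not a requirement of the API.

```java
import java.awt.Desktop;
import java.awt.GraphicsEnvironment;
import java.io.IOException;
import java.net.URI;

final class BrowserLauncher {

    // True when the address uses a scheme a web browser is expected to handle.
    static boolean isWebAddress(URI address) {
        String scheme = address.getScheme();
        return "http".equalsIgnoreCase(scheme) || "https".equalsIgnoreCase(scheme);
    }

    // Tries to open the address in the default browser. Returns false instead
    // of throwing when the platform does not support the browse action.
    static boolean openInDefaultBrowser(URI address) throws IOException {
        if (!isWebAddress(address) || GraphicsEnvironment.isHeadless()) {
            return false; // nothing to open, or no display available
        }
        if (!Desktop.isDesktopSupported()) {
            return false; // Desktop API is not available on this platform
        }
        Desktop desktop = Desktop.getDesktop();
        if (!desktop.isSupported(Desktop.Action.BROWSE)) {
            return false; // Desktop exists, but cannot launch a browser
        }
        desktop.browse(address);
        return true;
    }

    public static void main(String[] args) throws IOException {
        boolean opened = openInDefaultBrowser(URI.create("https://iq.opengenus.org/"));
        System.out.println(opened ? "Browser launched" : "Browse action not supported here");
    }
}
```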

Web-browser automation with Selenium

Taking the idea of programmatic control of the web browser further, Selenium WebDriver provides extensions to emulate user interaction with browsers (Chrome, Firefox, Opera, Safari, Internet Explorer).

To make Selenium run in the Java project, the following steps should be done:

- download Selenium library in jars and add it to the project build

- download the driver for the browser and add it to the project build

The driver for each browser is developed by the developers of that browser, not by the Selenium team, so the Selenium library and the browser driver are downloaded separately.

With regular updates to the Selenium library, the browser driver, and other third-party libraries, downloading everything manually becomes tedious, so it is preferable to use Maven (or Gradle) instead. With Maven, creating a Selenium project in the Eclipse IDE reduces to the following steps:

- Create a new Maven project in Eclipse - notice BuildPath has no Maven dependencies yet

- Copy-paste the dependencies shown below into the pom.xml file → Maven will automatically download all needed packages.

Autogenerated pom.xml file:

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>MyMavenProject</groupId>

<artifactId>MyMavenProjectWithSelenium</artifactId>

<version>0.0.1-SNAPSHOT</version>

Paste the dependencies here:

<dependencies>

<!-- https://mvnrepository.com/artifact/org.seleniumhq.selenium/selenium-java -->

<dependency>

<groupId>org.seleniumhq.selenium</groupId>

<artifactId>selenium-java</artifactId>

<version>3.141.59</version>

</dependency>

<!-- https://mvnrepository.com/artifact/io.github.bonigarcia/webdrivermanager -->

<dependency>

<groupId>io.github.bonigarcia</groupId>

<artifactId>webdrivermanager</artifactId>

<version>4.4.3</version>

</dependency>

</dependencies>

</project>

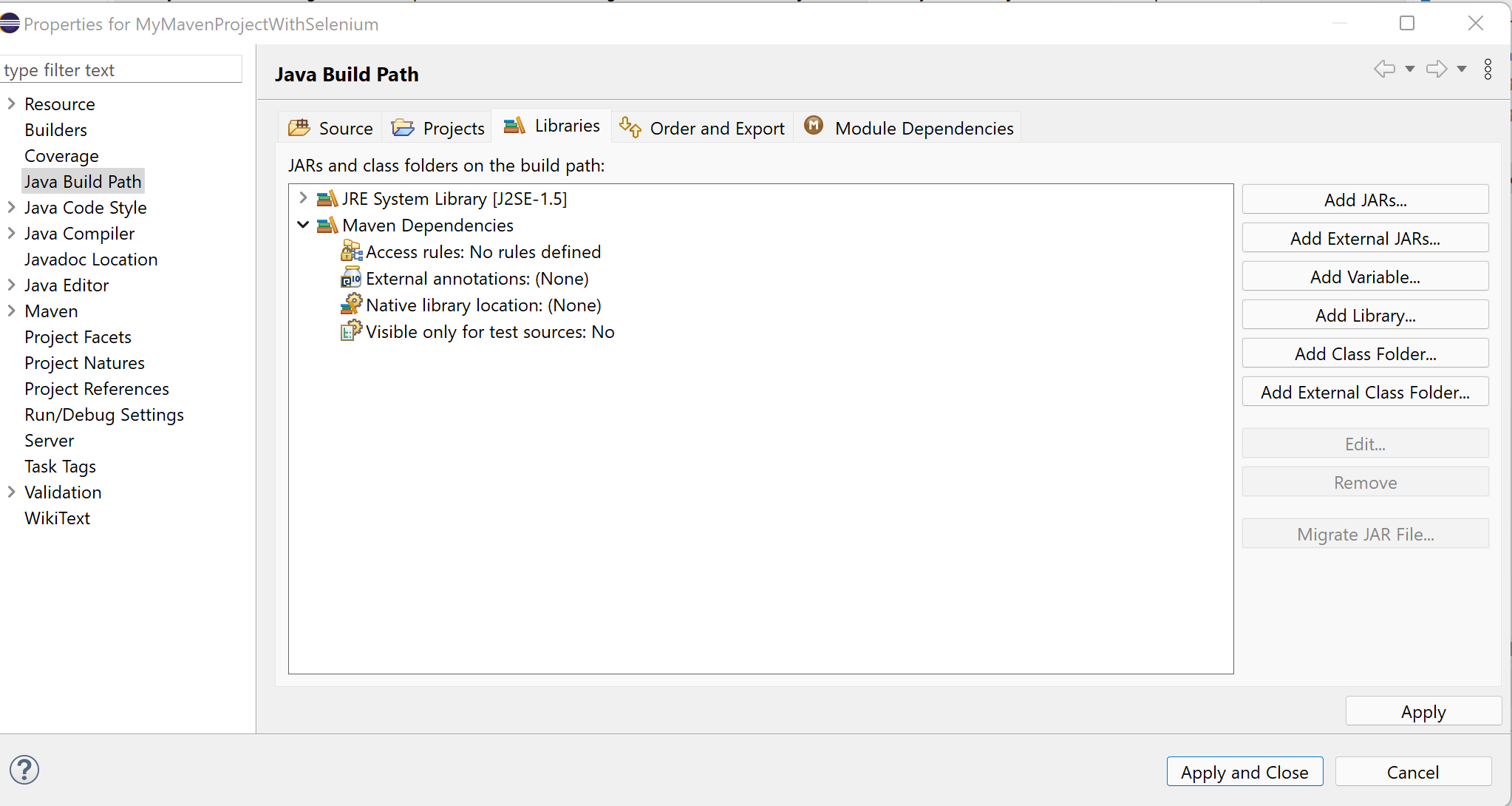

Check that the jar files for Selenium downloaded successfully:

- Automate downloading of the web-browser driver with io.github.bonigarcia.wdm.WebDriverManager (simply copy-paste this command to the beginning of the program: WebDriverManager.chromedriver().setup();) → WebDriverManager will automatically download the driver and check for updates on future runs of the program.

- At this point, all setup is finished and Selenium is ready to work:

import org.openqa.selenium.chrome.ChromeDriver;

import java.io.BufferedWriter;

import java.io.FileWriter;

import java.io.IOException;

import java.util.concurrent.TimeUnit;

// Automatic load of the web-browser driver

import io.github.bonigarcia.wdm.WebDriverManager;

public class WebPageDownloaderSelenium{

public static void main(String[] args) throws IOException, InterruptedException {

// Set up latest web-browser driver

WebDriverManager.chromedriver().setup();

// Launch session in the Chrome browser

ChromeDriver driver = new ChromeDriver();

// Simulate user input of the page address

driver.get("https://iq.opengenus.org//");

// Get source code of the webpage

String html = driver.getPageSource();

// Save source code to the file

BufferedWriter writer = new BufferedWriter(new FileWriter("page.html"));

writer.write(html);

writer.close();

// Exit session with web-browser

driver.quit();

}

}

The page downloaded successfully, so Selenium, although a little harder to set up, can do the job in a few lines of code. It is especially useful for more advanced or frequently repeated tasks, for example, logging in through multiple forms before downloading the page.

Paid alternatives to Selenium include Sahi Pro and IBM Rational Functional Tester.

Conclusion

The simple task of downloading a webpage can be approached in many ways in Java, and this article at OpenGenus explored several example solutions. In most cases, either JSoup or Selenium provides an effective solution with minimal development time.