Open-Source Internship opportunity by OpenGenus for programmers. Apply now.

Stack allocations are often restrictive and we may want resizable arrays which can survive function invocations, in this article we discuss heap allocation in compilers.

Table of contents.

- Introduction.

- Memory management.

- Computer memory hierarchy.

- Program locality.

- Reducing fragmentation.

- Summary.

- References.

Pre-requisites:

Introduction.

The heap is used for dynamically allocated memory.

It is useful for deallocation and allocation operations.

It is the portion of the store that stores data that will live indefinitely or until the program terminates.

Programming languages such as C++ or java allow the creation of objects whose existence is not tied to the procedure that creates them because objects/pointers to objects may be passed from procedure to procedure.

These objects are stored on the heap.

The memory manager interfaces the application programs and the operating system and is responsible for the allocation and deallocation of space within the heap.

Computer memory management.

The memory manager is the subsystem that allocates and deallocates space within a heap.

It is responsible for tracking all free space at all times.

It has two functions, allocation and deallocation.

Allocation when a program needs memory for a variable or object, the memory manager will produce a chunk of contiguous heap memory for the requested size.

If the size is available the allocation request is satisfied by the free heap space otherwise if the requested space is not available, it increases the heap storage by acquiring consecutive bytes of virtual memory from the operating system.

If there is no space at all, this information is passed back to the application program by the memory manager.

Deallocation, during deallocation, the deallocated space is returned to the pool of free space so as to reuse it to satisfy expected allocation requests.

This deallocated memory is never returned to the operating system.

Simplifying memory management

- Same size allocation requests.

- Predictable storage release, e.g first-allocated, first-deallocated.

In lisp language, only one data element(a two-pointer cell) is used to build all data structures.

In some situations e.g having data that can be allocated on the run-time stack is possible in lisp.

The above two points allow simple memory management in lisp language however in most programming languages, neither conditions hold, data elements are of different sizes and there is no way to predict the lifetimes of allocated objects.

Properties of memory manager.

Program efficiency - It should make good use of the memory subsystem so as to assist fast execution of programs.

Programs exhibit locality - a non-random clustered way for accessing memory, the memory manager by attending to the placement of objects, it can make better use of space which might improve the speed of execution for the program.

Space efficiency - It should minimize the needed heap space by a program so as to allow larger programs to run using a fixed virtual address space.

This is achieved by reducing fragmentation.

Low overhead - overhead is the fraction of execution time spent performing allocation and deallocation these are frequent operations in many programs and as such must be as efficient as possible.

Computer memory hierarchy.

Modern machines are designed in such a way programmers write correct programs without concern for the memory sub-system.

The efficiency of a program is dependent not only on the number of executed instructions but also the time taken to execute each instruction.

Time to execute an instruction is influenced by the time taken to access memory locations and can vary from nanoseconds to milliseconds.

A variance in memory access times is caused by hardware limitations in that, we can either build small and fast storage or large and slow storage but not both large and fast i.e It is impossible to build a memory with gigabytes of storage and fast access times.

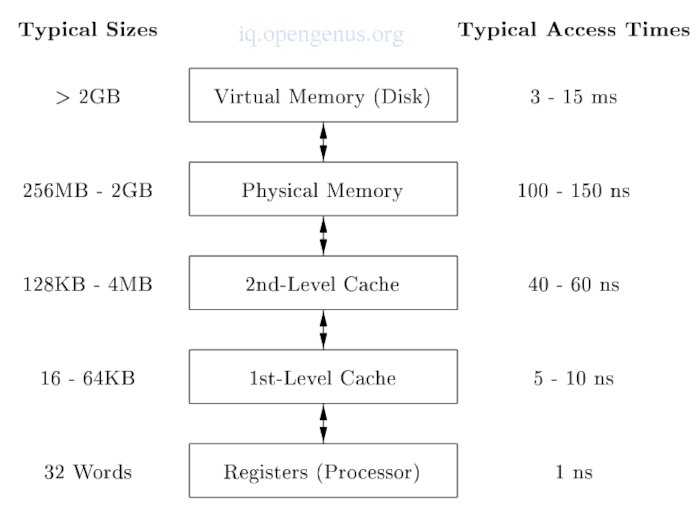

The memory hierarchy

As can be seen from the hierarchy it is a series of storage elements with smaller faster ones closer to the processor and larger slower ones further from the processor.

A processor will have a small number of registers whose contents are controlled by the software.

Following this, it has levels of cache made up of static RAM which are in kbs to several mbs in size.

Then the main memory which will have hundreds of mbs or gbs of made up of dynamic RAM.

Then the virtual memory which is implemented by gbs of disks.

During memory access, the machine will first look for the closest data(lowest level) and if it is absent here, it looks in the next higher level and so on.

Since registers are scarce, their usage is tailored for specified applications and as such they are managed by generated compiler code.

Other levels are managed automatically and in this way not only does programming become simpler but the same program can work effectively across different memory configurations in different machines.

For each access to memory, in succession from the lowest level, the machine will search each level until data is located.

Caches are managed by the hardware so as to keep up with fast RAM access times.

Disks are relatively slow and the virtual memory is managed by the operating system assisted by the hardware structure(translation lookaside buffer).

Data is transfered in contiguous blocks, to amortize costs of access, larger blocks are used with the slower levels of hierarchy.

Between main-memory and cache, data is transfered in blocks - cache lines of 32 - 256 bytes long.

Between virtual and main memory, data is transfered in block 0 pages of 4k - 64k bytes in size.

Program locality.

Most programs spend a lot of time executing a relatively small fraction of the code while touching only a small fraction of the data - locality.

Temporal locality of a program is whereby the memory locations a program accesses are likely to be accessed again within a short period of time.

Spatial locality is whereby a program's memory locations close to the location accessed are likely to be accessed within a short period of time.

"Programs spend 90% of their time executing 10% of the code"

- Programs will contain many instructions that are never executed e.g programs built with multiple components and libraries only to use a small fraction of the provided functionalities. Requirements change and programs evolve and therefore legacy systems will have instructions no longer being used.

- Only a fraction of code that could be invoked is executed e.g instructions for handling illegal inputs and exceptional cases are critical for program correctness but are seldom invoked on any particular execution.

- A typical program will spend time executing inner loops and recursive cycles in a program.

Locality will allow us to take advantage of the memory hierarchy by placing the most common instructions and data in the fast-small storage while leaving the rest in the slow-large storage and by that we lower memory access times of a program significantly.

The above might work for common programs but not for data-sensitive programs that cycle through e.g large arrays.

Optimizing using memory hierarchy.

Placing the mostly used instructions in the cache works well.

When a new instruction is executed, there is a high probability that the next will also be executed, this is a case of spatial locality.

We improve spatial locality by having the compiler place basic blocks - sequences of instructions always being executed sequentially, on the same page or cache line.

i.e instructions belonging to the same loop or function.

We can improve temporal and spatial locality by changing the data layout or order of computation e.g programs visiting large amounts of data to perform small computations won't perform well, it is more preferable to bring some data from the slow level memory hierarchy to the faster level and perform all necessary computations while it resides here.

We can apply the above recursively so as to reuse data in physical memory, caches and registers.

Reducing fragmentation.

When a program begins execution, the heap is one contiguous unit of free space, as the program allocates and deallocates memory, this space is broken up into used and free chunks.

We define holes as free memory chunks.

For each allocation request the memory manager places the requested chunk of memory into a large enough hole and unless a hole is exactly the right size, we split a hole creating a smaller hole.

For each deallocation request, freed chunks are added back to the free space pool.

We coalesce contiguous holes into larger blocks as the holes only get smaller. If we are lousy in this process we might end up with a fragmented memory consisting of a large number of small non-contiguous holes.

It is now possible that no hole will be large enough so as to satisfy future requests even though there is sufficient aggregate free space.

Best-Fit, Next-Fit object placement.

We can reduce fragmentation by controlling the placement of objects in a heap. A good strategy is to allocate the requested memory in the smallest large enough hole - best-fit.

This best-fit algorithm spares large holes so as to satisfy subsequent larger requests.

Alternatively first-fit is whereby an object is placed in the lowest hole first(lowest address) which fits. Although it is faster in placing objects, it is inferior to best-fit in terms of performance.

For a more efficient best-fit, we separate free space chunks size-wise into bins. We can have more bins for smaller sizes since there are many small objects.

How binning strategy makes it easier to find the best-fit chunk.

- If for all small sizes requested from the memory manager, there are bins for chunks of that size only, we can take a chunk from that bin.

- For sizes without a private bin, we find one bin that is allowed to include chunks of the desired size and within it we can use either first-fit or best-fit strategy.

- If the target bin is empty or all chunks in the bin are too small to satisfy the request, we can repeat the search using the bin for the next larger size(s), eventually we will either find a usable chunk oe reach the wilderness chunk(a large-sized bin in the Lea memory manager) from which we can obtain the needed space.

Best-fit improves space utilization however it might not be best for spatial locality since chunks allocated at about the same time tend to have similar reference patterns and lifetimes.

Placing them close to each other will improve the program's spatial locality.

We can modify the placement in case when a chunk of the exact requested size can't be found, we use the next-fit strategy whereby we try to allocate the object in the chunk that has last been split, whenever enough space for a new object remains in the chunk. This improves the speed of allocation.

Managing and coalescing free space.

During manual object deallocation, the memory manager must make its chunks free so as to be allocated again.

It might also be possible to combine(coalesce) the chunk with adjacent chunks so as to form a larger heap. An advantage is that we can always use a large chunk to do the work of small chunks of equal size but small chunks can't hold larger objects as the combined chunk could.

If we keep a bin for chunks on a fixed size, we might not prefer coalescing as it is simpler to keep all chunks on one size in as many pages as needed.

A simple allocation/deallocation scheme is to use a bitmap with a bit for each chunk in the bin.

When a heap is managed as a whole without binning or we are willing to coalesce adjacent chunks and move the resulting chunk into different bins if necessary. We can use data structures to support coalescing of adjacent blocks.

- Boundary Tags, We keep information(free/used bit) at the low and high ends of each free or allocated chunk which will tell us if the block is currently allocated or not. A count of total number of bytes is kept adjacent to each bit in the chunk.

- Doubly Linked, Embeded Free List, We link free chunks in a doubly linked list whereby the pointers for the list are within the blocks adjacent to the boundary tags at their end. No additional space is needed for the free list although its existence places a lower bound on how small the chunks can get since they accommodate two boundary tags and two pointers even if the object is a single byte.

Summary.

Data that is heap allocated will stay allocated until a program terminates or until it is explicitly deallocated or until it is deamed dead by a run-time memory management system.

The size of the array or data may not be know until it is allocated, size can also be increased later whereby a new array is allocated and references to the old array are redirected to point to the new array.

References.

- Basics of Compiler Design Torben Ægidius Mogensen

- Compilers, principles, techniques and tools, Alfred V. Aho, Monica S. Lam, Ravi Sethi, Jeffrey D. Ullman.