Open-Source Internship opportunity by OpenGenus for programmers. Apply now.

In this article, we discuss memory allocation, the process of handing out memory blocks to programs to facilitate their execution.

Table of contents.

- Introduction.

- Data allocation with explicit deallocation.

- Basic Memory Allocation.

- Naive Malloc.

- Optimizations for basic memory allocation.

- Applications of memory allocation in a compiler.

- Remark.

- Summary.

- References.

Introduction

Memory management is responsible for handing out and reclaiming chunks of the data segment such that all chunks fit inside the heap and no memory location is shared by chunks.

All compilers use dynamically sized data whose size is not known in advance therefore room for it must be created during run time.

Dynamic data may comprise of symbol tables, abstract syntax trees, strings from source code etc.

When a program is executed, the operating system allocates the following 3 memory segments.

-

Code segment: this will contain the source code usually in read-only format to avoid accidental editing.

-

Stack segment: contains the stack and is able to detect stack overflows and underflows. It is addressed by stack pointers which are manipulated by machine instructions.

-

Data segment/heap: This is a single contiguous stretch of memory locations at the disposition of the source code for the purpose of data storage.

It's start location and size are accessible to the program and its contents can be manipulated by machine instructions.

Allocating memory in a heap is as follows

- Keep a pointer to the first free location in the heap.

- Allocate the requested block from there.

- Move the pointer to the next free location.

Drawbacks

- Heap space will run out sooner rather than later, even the largest sized memory will eventually fill up. We solve this by releasing a block not in use(garbage collection).

- Handling memory as a programmer is a very daunting task as to perform memory deallocation correctly, one must be aware of the lifetime of every memory block even in the most complex data structures and ensure that a block is released once after its last access.

This procedure may lead to mistakes, e.g, freeing memory very early which leads to a dangling pointer.

This will cause errors that are very hard to debug.

Data allocation with explicit deallocation.

Basic memory allocation in most systems works in the form of a routine which finds a block of unused memory of the requested size, marks it as used and returns a pointer to the block otherwise if no such block exists, the results will vary from a null pointer being returned to an error routine being called or termination of the program.

This memory allocation routine takes the requested size as its parameters.

A marking on a block will prevent it from being passed as a parameter more than once.

Another routine is used to return the block to the system and mark it as not in use.

Marking can be in the form of a bit map with one marking per bit for each byte.

In the C programming language these routines are *void malloc(size_t size) and free(void *ptr).

Basic Memory Allocation.

A memory allocation request of N bytes will give the user a pointer to the first byte of a block of N free bytes.

This process requires the chunk to be embedded in a complex data structure because of additional administration, that is, it will contain a block plus some administration such as length of the chunk.

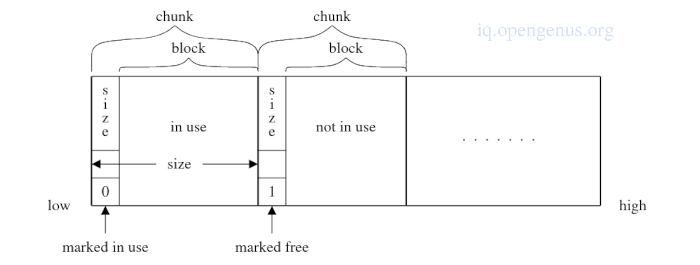

chunks and blocks.

blocks - are memory fragments handled by memory allocator.

A block pointer points to the first byte available to the user and are found in program variables, blocks on the heap, registers.

chunks - are memory fragments handled by the user and consists of a block plus administration usually length of the chunk.

A chunk pointer points to the beginning of the chunk and are only found in chunk administrators.

Relationship between chunks and heap.

A heap is divided into contiguous sequences of chunks and each chunk is marked with a size.

The last byte of a chunk is followed by the first byte of the next chunk.

One can find the start and end of a chunk given the length of the chunk is known.

Bits are incorporated in each chunk for administration purposes in addition to the length.

A free bit in a chunk is an indicator that the chunk is free.

Free chunks are chained together to form a free list.

Naive Malloc.

firstChunkPointer <- beginingOfAvailableMemory;

onePastAvailableMemory <- beginingOfAvailableMemory + sizeOfAvailableMemory;

firstChunkPointer.size <- sizeOfAvailableMemory;

firstChunkPointer.free <- True;

function Malloc(blockSize) returns a polymorphic block pointer;

Pointer <- pointerToFreeBlockOfSize(BlockSize)

if Pointer != nullPointer:

return Pointer;

coalesceFreeChunks;

Pointer <- pointerToFreeBlockOfSize(BlockSize)

if Pointer != nullPointer:

return Pointer;

solveOutOfMemoryCondition (BlockSize);

//if solveOutOfMemoryCondition returns, there exists blockSize space

return Malloc(BlockSize);

function Free(BlockPointer):

chunkPointer <- BlockPointer-AdmininstrationSize;

chunkPointer.free <= True;

Explanation:

We need to allocate block B of the required blockSize to a chunk labelled free that is large enough to accommodate B so Malloc steps through the chunks until a chunk is found.

Chunk C is broken down into C1 and C2 such that C1 has the proper size for block B unless it fits exactly.

Size fields C1 and C2 have set their new values therefore free bit of C1 is turned off and free bit of C2 turned on.

A pointer to block C1 is returned as a response.

To free a block pointed to by a pointer, the free bit of the corresponding chunk is turned on.

If malloc can't find a large enough chunk it coalesces adjacent free chunks into larger chunks.

If two adjacent chunks C1 and C2 are found, size of C1 is set to sum of their sizes.

The above operation can occur during any scanning of the memory upon freeing a block.

If a large chunk can't be found, solveOutOfMemoryCondition() routine is called in the last attempt to remedy the situation.

Optimizations for basic memory allocation.

We face to issues in basic memory allocation, First finding a suitable chunk is a linear operation which is not optimal and Second, coalescing is done in a separate phase which is executed only when the linear search fails.

Solving the first problem involves chaining free chunks using a linked list data structure. The pointers are accommodated in the unused space following the administration area.

An even better optimization involves classification of free chunks according to size and storing a free list for each interval.

Solving the second problem involves copying the size information of each chunk at its end where it can be found by the free operation such that during each free call, we have easy access to the chunk preceding the one being freed and therefore the one following it will be within reach by using the size information.

Combining the two optimizations results in a very efficient memory allocator.

function PointerToFreeBlockOfSize(blockSize) returns a polymorphic block pointer

chunkPointer <- firstChunkPointer;

requestedChunkSize <- administrationSize + blockSize;

while chunkPointer != onePastAvailableMemory;

if chunkPointer.free:

leftOverSize <- chunkPointer.size - requestedChunkSize;

if leftOver >= 0:

//large enough chunk found

splitChunk(chunkPointer, requestedChunkSize);

chunkPointer.free <- false;

return chunkPointer + administrationSize;

//try next chunk

chunkPointer <- chunkPointer + chunkPointer.size;

return nullPointer;

//spliting a chunk

splitChunk(chunkPointer, requestedChunkSize):

leftOverSize <- chunkPointer.size - requestedChunkSize;

if leftOverSize > administrationSize:

//there exists a non-empty chunk

chunkPointer.size <- requestedChunkSize;

leftOverChunkPointer <- chunkPointer + requestedChunkSize;

leftOverChunkPointer.size <- leftOverSize;

leftOverChunkPointer <- true;

//coalsce free

coalesceFreeChunks:

chunkPointer <- firstChunkPointer;

while chunkPointer != onePastAvaiableMemory:

if chunkPointer.free:

coalesceWithAllFollowingFreeChunks(chunkPointer);

chunkPointer <- chunkPointer + chunkPointer.size;

coalesceWithAllFollowingFreeChunks(chunkPointer):

nextChunkPointer <- chunkPointer + chunkPointer.size;

while(nextChunkPointer != onePastAvaialbeMempory and nextChunkPointer.free

//coalesce them

chunkPointer.size <- chunkPointer.size + nextChunkPointer.size;

nextChunkPointer <- chunkPointer + chunkPointer.size;

Applications of memory allocation in a compiler.

Linked Lists.

Linked lists in compilers are used for string storage, syntax trees, code fragments, identifier lists, symbols tables etc.

Data in linked lists are added and removed at irregular intervals.

A naive implementation could be to request these records one by one from the standard memory manager is, malloc() and return them using free().

We can optimize this by batching these records in blocks rather than allocating them one by one.

A separate set of block will be allocated an maintained for each recode type.

Each block will be an fixed size array(usually 16 or 32 per block) of records and it is obtained from the standard memory manager.

A free list will also be maintained for linking the free records in their blocks.

To begin the system will start with an empty free list and zero blocks.

The first allocation request will find the free list empty and

- allocates a block of type ARRAY OF T

- creates a free list linking free records using the space in the same free records

- hands out the first record.

The following allocations requests will normally obtain their records directly from the free list which will speed up memory management.

Records can be returned to this system and reattached to the free list.

Blocks are never returned to the standard memory manager.

Extensible arrays.

These are arrays to which elements can be added to the high-index end.

They are more efficient compared to linked lists when random access is needed or when the elements being dealt with are never changing or small.

A naive approach to implement extensible arrays would be to first allocate an array of reasonable size and when the size is used up, we extend it with a reasonable size and repeat this when needed.

This yields a quadratic algorithm which is not optimal.

This can be done in linear time by increasing the size of the array by a constant factor rather than a constant amount, that is, when an array needs to be extended, we allocate a new array that is times a big.

A drawback with both approaches is that eventually the array has to be moved.

Remarks.

The basic allocation schemes used by malloc(), free(), linked lists and extensible arrays all have the characteristics that deallocation will be explicitly indicated by the user.

Summary.

By this point, we have grasped the concept of how memory is allocated in a compiler, from the basics to optimizing memory allocation to improve efficiency.

We have also considered allocation problems of linked lists and extensible arrays which are in great demand for modern compilers for a correct and efficient implementation is fundamental for efficiency and correctness of a compiler.

References.

- Introduction to Compilers and Language Design Second Edition Prof. Douglas Thain Chapter 9.

- Modern Compiler Design part IV.