
Imagine a situation where you're using your computer to work on a complex project, with multiple applications running simultaneously. As you switch between applications, you notice a significant slowdown in performance. The once snappy response times have now transformed into frustrating delays, hindering your productivity. You find yourself waiting for what feels like an eternity for an application to load or respond to your commands. Little do you know, the root cause of this sluggishness lies in the way your computer manages its memory.

In this article at OpenGenus, we explore paging, an essential memory management technique. Without it, a computer struggles to allocate and deallocate memory efficiently, which leads to the kind of slowdown described above.

Before looking at paging itself, we need to understand what pages are.

Table of Contents

- What are Pages?

- What is Paging?

- How does paging work?

- Page Table

- Page Replacement

- Memory Management Unit(MMU)

- Page Fault prevention Strategies

- Advantages of Paging

- Disadvantages of Paging

What are Pages?

In an operating system, pages refer to fixed-size blocks of memory used for memory management.

These pages are typically a few kilobytes in size and are used to divide the virtual address space of a process into manageable chunks. Each page is assigned a unique identifier called a page number.

The use of pages allows for efficient memory allocation and management. When a process requires memory, the operating system assigns it a certain number of pages. These pages are stored in physical memory (RAM) or, if necessary, swapped out to secondary storage to free up space in RAM.

By dividing memory into fixed-size pages, the operating system can allocate and deallocate memory resources more efficiently.

What is Paging?

Paging is a memory management mechanism used in operating systems to efficiently allocate and manage memory resources. It involves dividing memory into fixed-size pages and mapping them to physical memory or secondary storage.

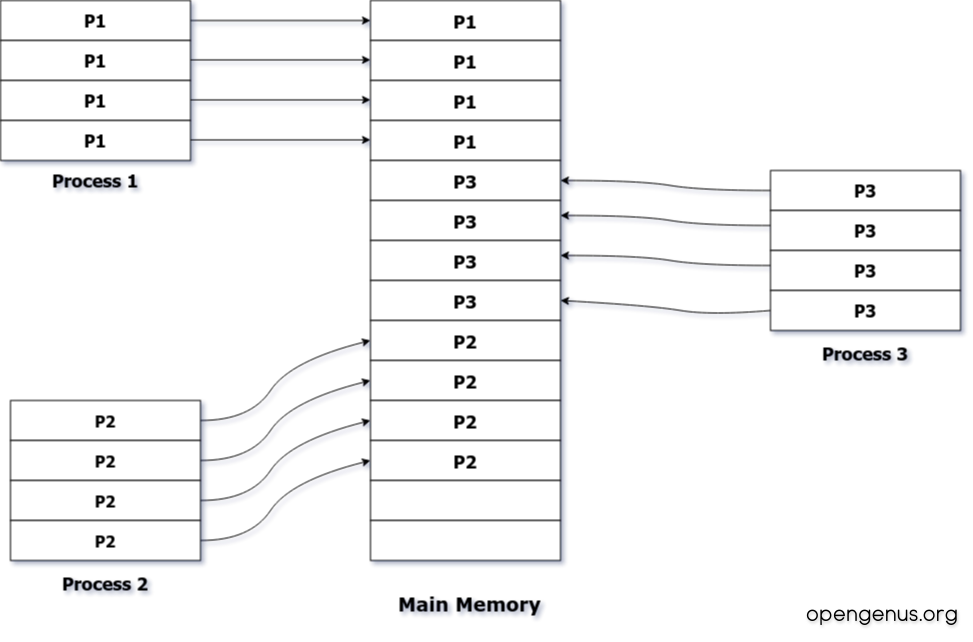

In paging, the virtual address space of a process is divided into equal-sized pages. The physical memory is also divided into fixed-sized blocks called frames, which are the same size as the pages. The operating system maintains a page table that keeps track of the mapping between virtual pages and physical frames.

When a process needs to access a specific memory address, the operating system translates the virtual address to a physical address using the page table. If the required page is already present in physical memory, the translation is straightforward, and the process can access the data directly. However, if the required page is not in physical memory (a page fault), the operating system needs to retrieve it from secondary storage and bring it into memory.
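The translation step described above can be sketched in a few lines of Python. This is only an illustration: the 4 KB page size, the dictionary used as a page table, and the `PageFault` exception are assumptions made for the example, not details of any particular operating system.

```python
PAGE_SIZE = 4096  # assumed page (and frame) size in bytes


class PageFault(Exception):
    """Raised when the requested virtual page is not in physical memory."""


def translate(page_table, virtual_address):
    """Translate a virtual address using a {page number: frame number} mapping."""
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    if page_number not in page_table:
        # A real OS would now fetch the page from secondary storage.
        raise PageFault(page_number)
    return page_table[page_number] * PAGE_SIZE + offset


# Hypothetical mapping: page 0 lives in frame 5, page 1 in frame 2.
page_table = {0: 5, 1: 2}
physical = translate(page_table, 4100)  # page 1, offset 4 -> address 8196
```

Accessing an address on an unmapped page, for example `translate(page_table, 3 * PAGE_SIZE)`, raises `PageFault`, which is where the operating system would step in and load the page.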

How does Paging work?

Page Table

Paging starts with page table management, which is a crucial aspect of memory management in operating systems. The page table is a data structure used by the operating system to keep track of the mapping between virtual pages and physical frames.

When a process is created, the operating system allocates a page table for that process. The page table is typically implemented as an array or a tree-like structure, depending on the specific page table management technique used.

The page table maintains the mapping between virtual pages and physical frames. Each entry in the page table associates a virtual page number with the corresponding physical frame number. Initially, the page table is empty, and all entries are marked as invalid or empty.

As the process starts executing, it generates memory references using virtual addresses. When a memory reference occurs, the operating system checks the page table to determine if the corresponding virtual page is mapped to a physical frame.
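As a rough sketch of the array-style page table described above, the following Python class keeps one entry per virtual page and starts with every entry invalid. The class and method names (`PageTable`, `map`, `unmap`, `lookup`) are made up for this example, and the virtual page number is represented by the list index rather than stored inside the entry.

```python
from dataclasses import dataclass


@dataclass
class PageTableEntry:
    frame: int = -1      # physical frame number; -1 means "no frame"
    valid: bool = False  # True only while the page is resident in RAM


class PageTable:
    def __init__(self, num_pages):
        # One entry per virtual page; all entries start out invalid.
        self.entries = [PageTableEntry() for _ in range(num_pages)]

    def map(self, page, frame):
        """Record that `page` is now held in physical frame `frame`."""
        self.entries[page] = PageTableEntry(frame=frame, valid=True)

    def unmap(self, page):
        """Mark `page` as no longer resident (for example, after eviction)."""
        self.entries[page] = PageTableEntry()

    def lookup(self, page):
        """Return the frame number, or None if the page is not resident."""
        entry = self.entries[page]
        return entry.frame if entry.valid else None


table = PageTable(num_pages=4)
table.map(2, 7)  # page 2 is now in frame 7
```

A `lookup` that returns `None` corresponds to the situation where the operating system finds no valid mapping and has to handle a page fault.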

Page Replacement

If there is no available physical frame in RAM to bring in a required page, the operating system needs to select a page to evict from memory. This involves using page replacement algorithms like FIFO, LRU, or Optimal to determine which page to replace based on certain criteria. The page table is updated accordingly to reflect the new mapping or the invalid state of the evicted page.

- First In, First Out (FIFO) Algorithm

- Least Recently Used (LRU) Algorithm

- Optimal Algorithm

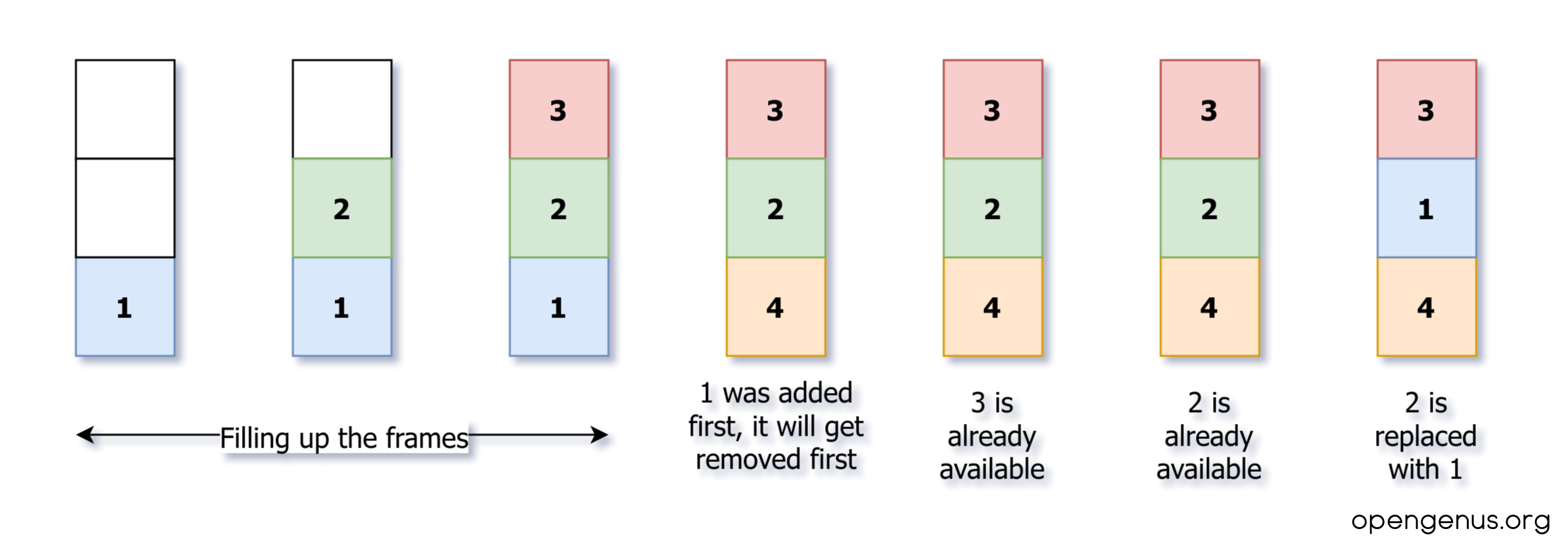

First In, First Out (FIFO) Algorithm

This is one of the simplest page replacement algorithms. It evicts the page that has been in memory the longest. It maintains a queue of pages in the order they were brought into memory, and the page at the front of the queue is selected for replacement.

Example

Consider memory references: 1 2 3 4 3 2 1 with 3 frames.

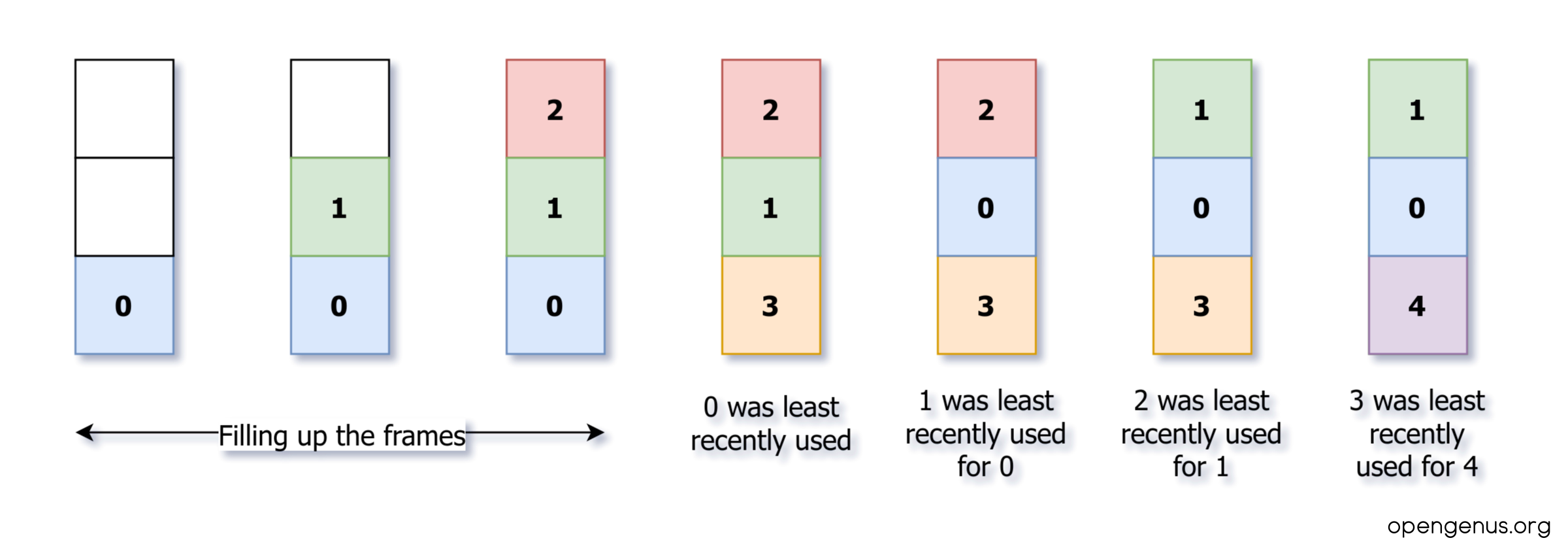
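The FIFO example above can also be simulated. The function below is a minimal sketch (the name `fifo_page_faults` is chosen for this example) that counts page faults for a reference string and a given number of frames.

```python
from collections import deque


def fifo_page_faults(references, num_frames):
    """Count page faults under FIFO replacement."""
    frames = deque()  # oldest page on the left, newest on the right
    faults = 0
    for page in references:
        if page in frames:
            continue  # hit: FIFO order does not change on access
        faults += 1
        if len(frames) == num_frames:
            frames.popleft()  # evict the page that was loaded first
        frames.append(page)
    return faults


print(fifo_page_faults([1, 2, 3, 4, 3, 2, 1], 3))  # 5
```

For this reference string, pages 1, 2, 3 and 4 each cause a fault, the accesses to 3 and 2 are hits, and the final access to 1 faults again because page 1 was the first one evicted.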

Least Recently Used (LRU) Algorithm

LRU is based on the principle that the page that has been least recently used is likely to be the least useful in the future. It keeps track of the time of the last access for each page and selects the page with the oldest access time for replacement.

Example

Consider memory references: 0 1 2 3 0 1 4 with 3 frames.

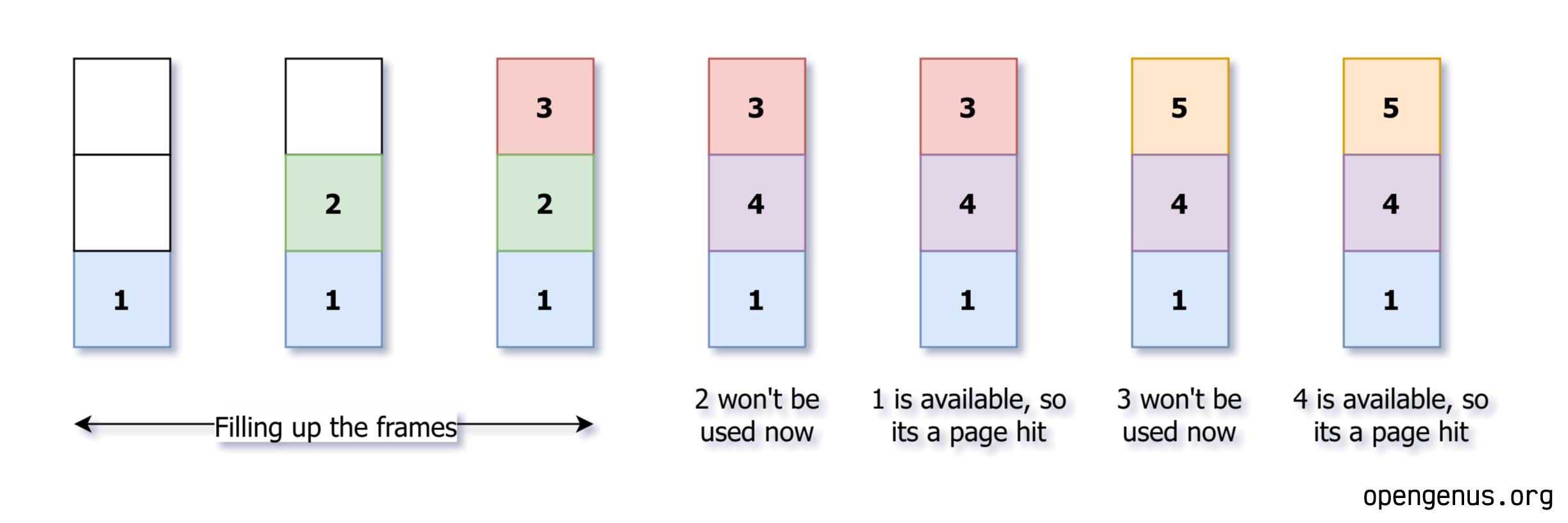
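The LRU example above can be simulated in the same way. In this sketch, an `OrderedDict` stands in for the per-page access times: its ordering plays the role of "oldest access first". That is an implementation choice for the example, not the only way to track recency.

```python
from collections import OrderedDict


def lru_page_faults(references, num_frames):
    """Count page faults under LRU replacement."""
    frames = OrderedDict()  # least recently used page first
    faults = 0
    for page in references:
        if page in frames:
            frames.move_to_end(page)  # hit: mark as most recently used
            continue
        faults += 1
        if len(frames) == num_frames:
            frames.popitem(last=False)  # evict the least recently used page
        frames[page] = True
    return faults


print(lru_page_faults([0, 1, 2, 3, 0, 1, 4], 3))  # 7
```

With this particular reference string every access is a fault, because each page is evicted just before it is needed again.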

Optimal Algorithm

The Optimal algorithm is an idealized page replacement algorithm that selects the page that will not be used for the longest period of time in the future. It requires knowledge of future memory references, which is not practical in real-world scenarios. However, it serves as a benchmark for evaluating the performance of other algorithms.

Example

Consider memory references: 1 2 3 4 1 5 4 with 3 frames.

These are just a few examples of the page replacement algorithms that are commonly used or studied in operating systems.

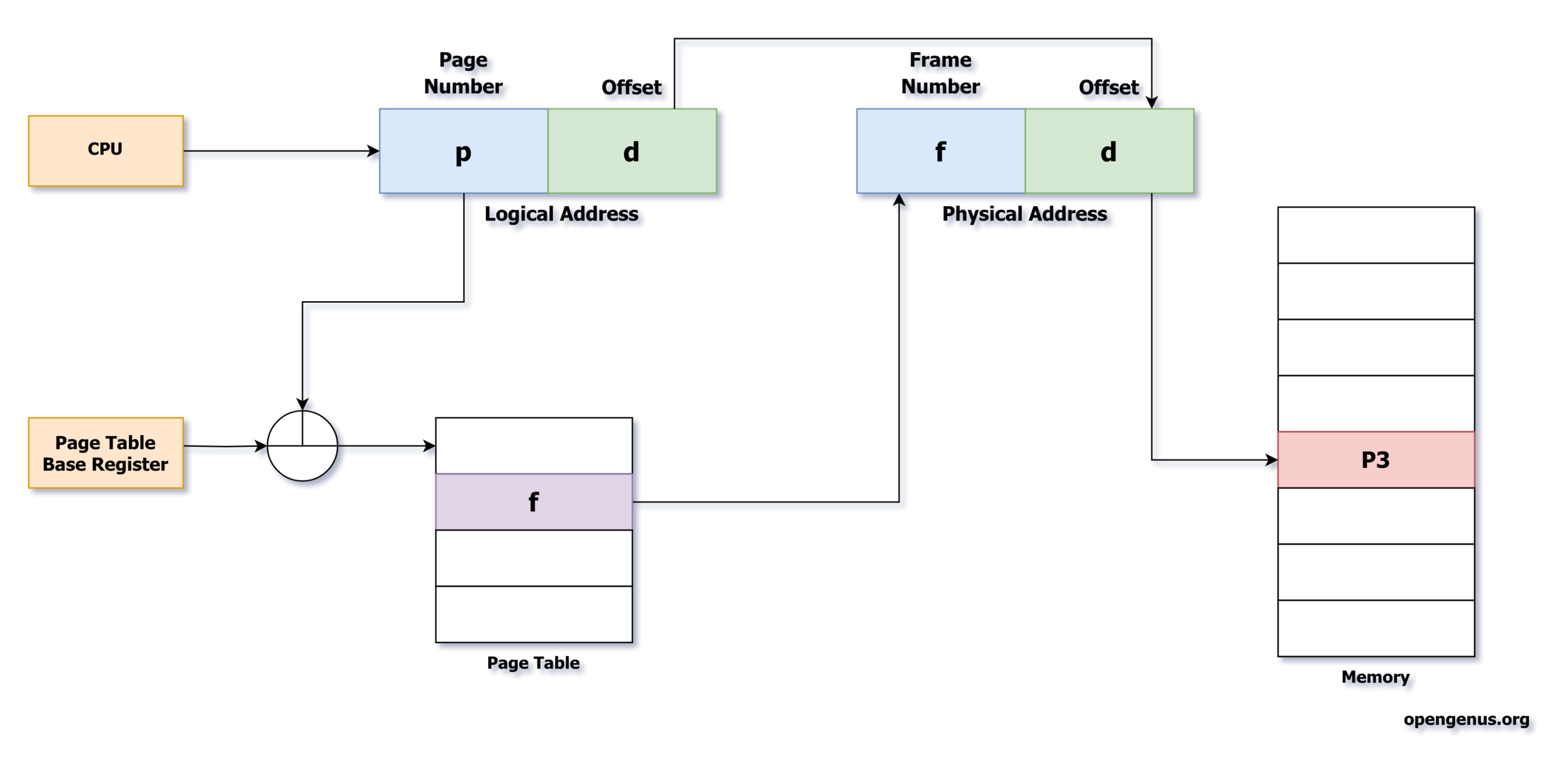
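The Optimal algorithm can only be simulated offline, where the whole reference string is known in advance. The sketch below (function name chosen for this example) does exactly that for the reference string 1 2 3 4 1 5 4 with 3 frames.

```python
def optimal_page_faults(references, num_frames):
    """Count page faults under the Optimal (farthest future use) policy."""
    frames = []
    faults = 0
    for i, page in enumerate(references):
        if page in frames:
            continue  # hit
        faults += 1
        if len(frames) < num_frames:
            frames.append(page)
            continue
        future = references[i + 1:]

        def next_use(resident):
            # Distance to the next use; pages never used again rank farthest.
            return future.index(resident) if resident in future else len(future)

        victim = max(frames, key=next_use)
        frames[frames.index(victim)] = page
    return faults


print(optimal_page_faults([1, 2, 3, 4, 1, 5, 4], 3))  # 5
```

Here the accesses to 1 and 4 in the second half are hits because the algorithm, knowing the future, chose to keep them in memory.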

Memory Management Unit

The Memory Management Unit (MMU) is the hardware responsible for converting logical addresses to physical addresses, using the mappings stored in the page table. The page table holds the base address of the frame for each page, and this base address combined with the offset gives the physical address.

The physical address consists of Frame Number & Offset.

Frame Number denotes the frame in which the required word is stored.

Offset denotes which word has to be read from that frame.

The page size depends on the hardware, but it is generally a power of 2, lying between 512 bytes and 16 megabytes per page.

When the logical address space is of size 2 raised to the power of m and the page size consists of 2 raised to the power of n addressing units, the page number is determined by the higher m-n bits of the logical address, while the page offset is specified by the lower n bits.
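The split into page number and offset is plain bit manipulation. The following sketch uses the same m and n as the paragraph above. The function name and the 16-bit example values are assumptions for illustration.

```python
def split_address(logical_address, m, n):
    """Split an m-bit logical address into (page number, offset) for pages of 2**n units."""
    if not 0 <= logical_address < 2 ** m:
        raise ValueError("address does not fit in m bits")
    page_number = logical_address >> n         # higher m - n bits
    offset = logical_address & ((1 << n) - 1)  # lower n bits
    return page_number, offset


# Assumed example: 16-bit logical addresses (m = 16) and 4 KB pages (n = 12).
page_number, offset = split_address(0x3ABC, m=16, n=12)
print(page_number, hex(offset))  # 3 0xabc
```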

Page Fault Prevention Strategies

- Preemptive Paging

- Page Prefetching

- Working Set Model

- Page Clustering

- Intelligent Page Replacement Policies

Preemptive Paging: Preemptive paging, also known as proactive paging, involves bringing in pages into physical memory before they are actually needed. The operating system predicts the future memory access patterns of processes and loads the required pages in advance. This strategy helps to reduce the likelihood of page faults by ensuring that the necessary pages are already in memory when they are needed.

Page Prefetching: Page prefetching is a technique where the operating system anticipates future memory accesses and brings in pages before they are explicitly requested by the process. By analyzing the access patterns of processes, the operating system can identify pages that are likely to be accessed in the near future and fetch them into memory. This helps to reduce the latency associated with page faults and improves overall system performance.

Working Set Model: The working set model is a concept that defines the set of pages that a process actively uses during a specific time interval. By monitoring the working set of each process, the operating system can ensure that the necessary pages are always present in memory. This can be achieved by employing techniques such as the Working Set Clock algorithm, which dynamically adjusts the size of the working set for each process based on its recent memory access patterns.
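One common way to make the working set concrete is to take the set of distinct pages referenced in the most recent references. The sketch below assumes that definition. The function name and the window measured in number of references (rather than in time) are choices made for this example.

```python
def working_set(references, t, window):
    """Distinct pages touched in the last `window` references, ending at index t."""
    start = max(0, t - window + 1)
    return set(references[start:t + 1])


refs = [1, 2, 1, 3, 4, 4, 4, 2]
print(working_set(refs, t=4, window=3))  # {1, 3, 4}
print(working_set(refs, t=6, window=3))  # {4}
```

The second call shows the working set shrinking when the process keeps touching the same page, which is the signal an operating system could use to give that process fewer frames.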

Page Clustering: Page clustering involves grouping related pages together in memory. By clustering pages that are frequently accessed together, the likelihood of page faults can be reduced. This strategy takes advantage of spatial locality, where processes tend to access nearby memory locations in a short period of time. By keeping related pages close to each other, the operating system can minimize the number of page faults and improve memory access performance.

Intelligent Page Replacement Policies: Page replacement policies determine which pages should be evicted from memory when a page fault occurs and there are no free frames available. Intelligent page replacement policies, such as the Least Recently Used (LRU) algorithm, aim to minimize the number of page faults by evicting the least recently used pages. By keeping frequently accessed pages in memory, these policies help prevent unnecessary page faults.

Advantages of Paging

- Efficient memory utilization

- Simplified memory management

- Increased process size

- Memory Protection

- Simplified Virtual Memory Management

- Faster Context Switching

Efficient memory utilization: Paging allows for efficient memory utilization by dividing the logical address space into fixed-size pages. This enables the allocation of memory in smaller, manageable chunks, which avoids external fragmentation, limits internal fragmentation to the last page of a process, and maximizes the use of available memory.

Simplified memory management: Paging simplifies memory management by providing a uniform and consistent memory model. It eliminates the need for contiguous memory allocation, allowing processes to be loaded into non-contiguous physical memory locations. This flexibility improves memory allocation and reduces memory fragmentation.

Increased process size: Paging enables the execution of larger processes that may not fit entirely into physical memory. The operating system can load only the required pages into memory, swapping out less frequently used pages to disk. This allows for efficient utilization of secondary storage and enables the execution of larger programs.

Memory protection: Paging provides memory protection by assigning each page a specific protection level. This prevents unauthorized access to memory regions, ensuring the security and integrity of the system. It also allows for efficient sharing of memory between multiple processes while maintaining isolation.

Simplified virtual memory management: Paging is an essential component of virtual memory management. It allows the operating system to create a virtual address space for each process, providing the illusion of a larger memory space than physically available. This enables efficient multitasking and improves overall system performance.

Faster context switching: Paging facilitates faster context switching between processes. Since each process has its own page table, the operating system only needs to switch to the page table of the incoming process and flush the translation lookaside buffer (TLB) during a context switch. This reduces the overhead associated with switching between processes.

Disadvantages of Paging

- Overhead

- Fragmentation

- Increased disk I/O

- Thrashing

- Complexity

- Increased Latency

Overhead: Paging introduces additional overhead in terms of memory management. The operating system needs to maintain page tables to map logical addresses to physical addresses, which requires additional memory and computational resources. This overhead can impact system performance, especially in scenarios with a large number of processes or when the page tables become too large.
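A quick calculation shows how large this overhead can get. The sketch assumes a single-level (flat) page table that covers the whole address space, and the figures used (32-bit addresses, 4 KB pages, 4-byte entries) are assumptions for illustration only.

```python
def page_table_bytes(address_bits, page_size, entry_size):
    """Size in bytes of a flat page table covering the whole address space."""
    num_pages = 2 ** address_bits // page_size
    return num_pages * entry_size


# Assumed figures: 32-bit address space, 4 KB pages, 4-byte entries.
size = page_table_bytes(address_bits=32, page_size=4096, entry_size=4)
print(size // 2 ** 20, "MiB per process")  # 4 MiB per process
```

With about a million entries per process, a flat table quickly adds up across many processes, which is one reason tree-like (multi-level) page tables are used.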

Fragmentation: Paging can lead to internal fragmentation. Because memory is allocated in whole pages, the last page of a process is rarely filled completely, and the unused remainder of that page is wasted. Across many processes, and especially with large page sizes, this wasted space results in inefficient memory utilization. Paging does not suffer from external fragmentation, since any free frame can hold any page.

Increased disk I/O: Paging involves swapping pages between physical memory and disk storage when there is a shortage of available physical memory. This swapping process, known as page swapping or paging to disk, can result in increased disk I/O operations. Excessive paging can lead to a high number of disk accesses, which can significantly impact system performance.

Thrashing: Thrashing occurs when the system spends a significant amount of time swapping pages between memory and disk, rather than executing useful work. This can happen when the system is overloaded with too many processes or when the available physical memory is insufficient to hold the working set of active processes. Thrashing can severely degrade system performance and responsiveness.

Complexity: Paging introduces additional complexity to the memory management subsystem of an operating system. Managing page tables, handling page faults, and coordinating page swapping require sophisticated algorithms and mechanisms. This complexity can make the system more prone to bugs, performance issues, and resource contention.

Increased latency: Paging can introduce additional latency in memory access. When a process accesses a page that is not currently in physical memory, a page fault occurs, and the operating system needs to retrieve the required page from disk. This disk access introduces significant latency compared to accessing data directly from physical memory, which can impact overall system performance.
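The impact of page faults on latency is often estimated with an effective access time: a weighted average of the normal memory access time and the page fault service time. The numbers below (200 ns memory access, 8 ms fault service, one fault per 1,000 accesses) are assumed values for illustration, not measurements.

```python
def effective_access_time(fault_rate, memory_ns, fault_service_ns):
    """Average access time in nanoseconds for a given page fault rate."""
    return (1 - fault_rate) * memory_ns + fault_rate * fault_service_ns


# Assumed: 200 ns memory access, 8 ms fault service, 1 fault per 1,000 accesses.
eat = effective_access_time(0.001, 200, 8_000_000)
print(f"{eat:.1f} ns")  # 8199.8 ns
```

Even with a fault rate of only 0.1%, the average access in this example is roughly 40 times slower than a plain memory access, which is why keeping the fault rate low matters so much.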

Real-time usage of paging in Linux

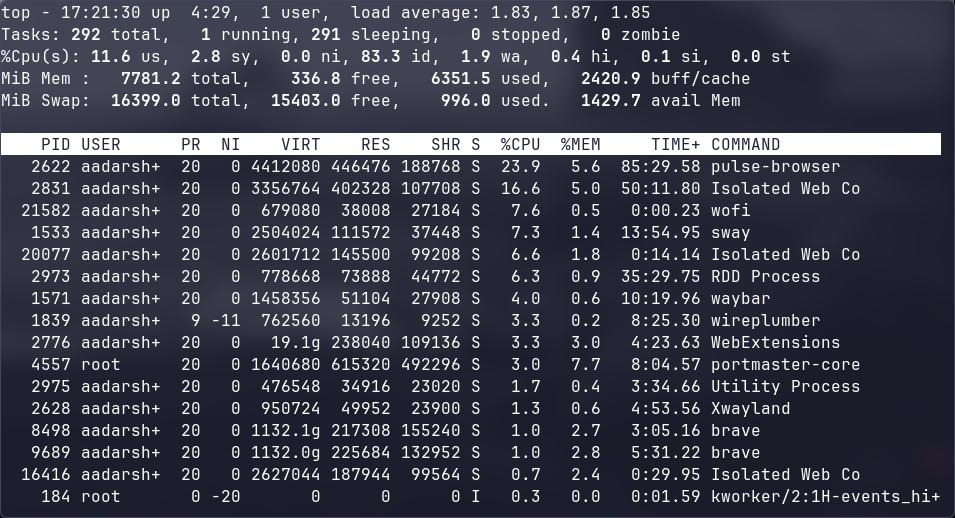

To monitor paging activity for a specific process, we first need its process ID (PID), which we can find with a utility that lists the running processes.

$ top

The top program provides a dynamic real-time view of a running system. It can display system summary information as well as a list of processes or threads currently being managed by the Linux kernel.

It also shows details about memory, such as physical memory and swap space usage. Here we can find the process ID (PID) of each process and thread.

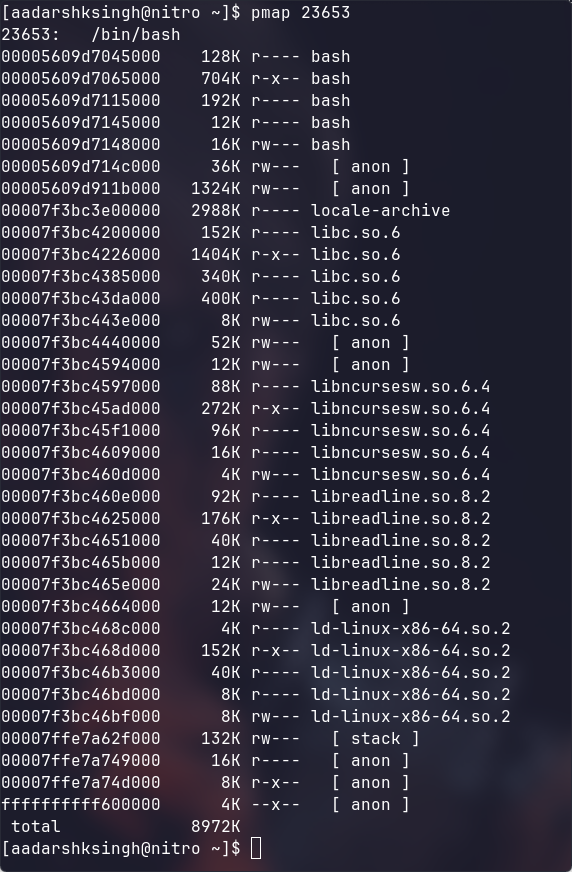

To find the memory map of a particular process, we can use the pmap utility.

$ pmap PID

The pmap command reports the memory map of a process or processes.

Here I have used pmap on the bash process. The output shows the mapped address ranges, their sizes, their permissions, and the file or library mapped at each range.
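Some paging information is also available from inside a program. As a small sketch, Python's standard `resource` module (available on Unix-like systems only) reports the page size and the page fault counters of the current process. The helper name `paging_stats` is made up for this example.

```python
import resource


def paging_stats():
    """Return the page size and page fault counters for the current process."""
    usage = resource.getrusage(resource.RUSAGE_SELF)
    return {
        "page_size": resource.getpagesize(),  # bytes per page
        "minor_faults": usage.ru_minflt,      # faults served without disk I/O
        "major_faults": usage.ru_majflt,      # faults that required disk I/O
    }


for name, value in paging_stats().items():
    print(f"{name}: {value}")
```

The values depend on the machine and on what the process has done so far, so no particular output should be expected.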

This is how paging works in real time in operating systems.

Comparison with other approaches

Paging vs Demand Paging

In traditional paging, all the pages of a process are loaded into the physical memory at the start of execution. This approach ensures that all the required pages are readily available in memory, minimizing the chances of page faults. However, it can lead to inefficient memory utilization as some pages may not be accessed during the entire process execution.

Demand paging, on the other hand, takes a more efficient approach by loading a page into memory only when it is first referenced. When a process references a page that is not currently in memory, a page fault occurs. The operating system then fetches the required page from secondary storage (usually a hard disk) and, if no frame is free, replaces a page in memory with the new one. This process is known as page swapping.

Demand paging offers better memory utilization as only the required pages are loaded into memory, saving space for other processes. It also allows for larger processes to be executed even if the physical memory is limited. However, the introduction of page faults and page swapping adds overhead to the system. Whenever a page fault occurs, the processor has to pause its execution and wait for the required page to be fetched from secondary storage, causing a delay in process execution.
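The load-on-first-touch behaviour of demand paging can be sketched as follows. The class name and the dictionary standing in for secondary storage are assumptions for the example, and the sketch assumes there are always enough free frames, so no page replacement is involved.

```python
class DemandPagedProcess:
    """Toy model: pages are copied from the backing store on first access."""

    def __init__(self, backing_store):
        self.backing_store = backing_store  # page number -> contents on "disk"
        self.resident = {}                  # pages currently in memory
        self.page_faults = 0

    def read(self, page):
        if page not in self.resident:
            # Page fault: fetch the page from secondary storage.
            self.page_faults += 1
            self.resident[page] = self.backing_store[page]
        return self.resident[page]


# A hypothetical process with 8 pages that only ever touches 3 of them.
process = DemandPagedProcess({n: f"contents of page {n}" for n in range(8)})
for page in [0, 1, 0, 5]:
    process.read(page)
print(process.page_faults, len(process.resident))  # 3 3
```

Only 3 of the 8 pages end up in memory, whereas loading everything up front would have occupied 8 frames.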

Paging vs Segmentation

Paging and segmentation are both memory management techniques used by operating systems, but they differ in how they divide and allocate memory.

Paging divides logical memory into fixed-sized blocks called pages (and physical memory into frames of the same size), while segmentation divides logical memory into variable-sized blocks called segments, which may represent different parts of a program, such as code, data, or the stack.

Traditional paging (without demand paging) loads all pages of a process into memory at the start of execution, while segmentation only loads necessary segments, allowing for more flexible memory allocation.

Paging may lead to inefficient memory utilization if some pages are not accessed during process execution, whereas segmentation allows for more flexible memory allocation based on the specific needs of each process.

Paging provides better memory protection as each page can be assigned specific permissions, while segmentation does not provide as fine-grained control over memory protection.

Segmentation divides the logical address space into segments, which can be more complex and may require additional hardware support for address translation.

In conclusion, paging simplifies memory management and offers better protection and effective address translation, whereas segmentation allows for more flexible memory allocation but may waste space if segments are not fully utilized.

Paging vs Virtual Memory

Similar to paging, virtual memory also involves dividing the logical address space into fixed-sized blocks called pages (or, in some systems, variable-sized segments), but the two differ in scope.

Paging divides the address space into fixed-sized pages, allowing the operating system to place them in any free frames, and when memory becomes full, the operating system swaps out entire pages from memory.

Virtual memory, on the other hand, extends physical memory using secondary storage such as swap space, or a compressed in-RAM device such as ZRAM. It allows the operating system to use a portion of the hard disk as an extension of physical memory. This is achieved by dividing the logical address space into fixed-sized pages, as in paging. When physical memory is full, the operating system can swap out individual pages rather than entire processes, providing more fine-grained control over memory allocation.

Both paging and virtual memory provide a way to manage memory beyond the physical limitations of the system. They allow more processes to run simultaneously by utilizing secondary storage as an extension of physical memory. However, they are not competing techniques: paging is the mechanism that moves fixed-size pages in and out of memory, while virtual memory is the broader abstraction that is usually built on top of it.

The choice between traditional swap space and ZRAM depends on factors such as performance requirements and available resources. Swap space on the disk can introduce performance overhead due to slower access times compared to RAM. ZRAM addresses this issue by compressing swapped-out pages and storing them in a compressed cache in RAM, reducing the need for disk I/O operations and improving performance.
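The idea of keeping swapped-out pages compressed in RAM can be illustrated with Python's standard `zlib` module. This is only a stand-in: ZRAM uses its own kernel compression algorithms rather than zlib, and the 4 KB page filled with repetitive data is an assumption chosen so that it compresses well.

```python
import zlib

PAGE_SIZE = 4096  # assumed page size in bytes

# A highly compressible 4 KB "page" (real pages compress less predictably).
page = (b"paging " * 600)[:PAGE_SIZE]

compressed = zlib.compress(page)       # what would be kept in RAM
restored = zlib.decompress(compressed) # what is handed back on access

print(len(page), "->", len(compressed), "bytes")
```

The compressed copy occupies far less memory than the original page, and decompressing it restores the exact contents without any disk access.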