KEY TAKEAWAYS

- Understanding virtual memory, demand paging, and swapping techniques is essential for optimizing memory resources and improving overall system performance.

- Recognizing the impact of thrashing and the role of page replacement algorithms is crucial for preventing memory-related performance bottlenecks in computer systems.

- Demand paging allows for the efficient utilization of memory resources, making it possible to handle memory-intensive applications seamlessly.

- Page replacement algorithms serve as benchmarks for comparing and optimizing memory management strategies, ultimately influencing the efficiency and effectiveness of an operating system.

TABLE OF CONTENTS

- Introduction

- Magic Of Virtual Memory

- Demand Paging

- Swapping

- Thrashing

- Page Replacement Algorithms

- Conclusion

INTRODUCTION

Virtual memory is a concept that underpins the seamless operation of our computer systems. It's a behind-the-scenes hero, allowing computers to transcend the limitations of physical memory (RAM) and handle complex tasks with ease. In essence, virtual memory extends the capabilities of a computer, making it possible for large applications to run even when they need more memory than is physically installed. It's the magic that lets us multitask, run powerful software, and keep our systems secure and stable.

MAGIC OF VIRTUAL MEMORY

Virtual memory essentially creates an illusion of expanded memory by utilizing a portion of your hard disk to mimic your computer's RAM. This clever sleight of hand provides several tangible benefits.

1. Overcoming Memory Limitations: The most apparent advantage is the ability to run programs that are larger than your computer's physical memory. Think of it as a safety net for those resource-intensive tasks.

2. Memory Protection: Virtual memory offers another layer of security and stability by giving each process its own virtual address space and translating each virtual address to a physical address. This segregation ensures that one program doesn't interfere with the memory allocated to another, preventing chaos and enhancing system reliability.
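As a rough illustration of the translation step behind memory protection, here is a minimal sketch. The page size, the dictionary-based page tables, and the names `translate`, `ProtectionFault`, `process_a`, and `process_b` are all assumptions made for this example; real hardware performs the lookup in the MMU.

```python
PAGE_SIZE = 4096  # bytes per page (assumed value for illustration)


class ProtectionFault(Exception):
    """Raised when a process touches a virtual page it has no mapping for."""


def translate(page_table, virtual_address):
    """Map a virtual address to a physical one via a per-process page table."""
    # Split the address into a virtual page number and an offset in that page.
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    if page_number not in page_table:
        raise ProtectionFault(f"no mapping for virtual page {page_number}")
    frame_number = page_table[page_number]
    return frame_number * PAGE_SIZE + offset


# Two hypothetical processes use the same virtual page numbers,
# but each table maps them to different physical frames.
process_a = {0: 5, 1: 9}
process_b = {0: 2, 1: 7}

print(translate(process_a, 4100))  # 36868 (frame 9, offset 4)
print(translate(process_b, 4100))  # 28676 (frame 7, offset 4)
```

Because each process has its own table, the same virtual address lands in a different physical frame for each process, and an address with no table entry is rejected instead of reaching another program's memory.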

But what's most intriguing is that not every program needs to be loaded entirely into the main memory. Here are scenarios where this partial loading proves incredibly beneficial:

Error Handling: User-written error handling routines are only summoned when there's a hiccup in data or computation, making them inactive most of the time.

Occasional Features: Some features and options within a program are rarely used. Why load them into memory if they're seldom required?

Reserved Address Space: Often, a fixed amount of address space is allocated to tables, even if only a fraction of the table is actively used. Virtual memory allows for this flexibility, saving valuable physical memory.

Imagine being able to execute a program that's only partially in memory. It opens doors to a multitude of advantages:

Reduced I/O Operations: Loading or swapping each user program into memory demands fewer I/O operations, making the process faster and more efficient.

Unshackled by Physical Memory: With virtual memory, a program is no longer limited by the amount of physical memory available. This liberates your system's potential.

Enhanced Resource Utilization: Each user program takes up less physical memory, allowing more programs to run simultaneously. This boost in CPU utilization and throughput is a game-changer.

DEMAND PAGING

Demand paging operates in a manner that's akin to a paging system with swapping, albeit with a clever twist. In a demand paging system, processes reside in secondary memory, and pages are loaded into main memory only when they're requested, not in advance. This approach avoids the unnecessary preloading of pages and utilizes memory resources more efficiently.

Here's how it works:

When there's a context switch, the operating system doesn't copy all of the old program's pages out to disk or bring all of the new program's pages into main memory. Instead, it begins executing the new program right away, after loading only its first page. Subsequent pages are brought into memory as they're needed. It's a just-in-time approach to memory management.

The Role of Page Faults

Page faults are central to demand paging. When a program attempts to access a page that's not currently in main memory, either because it has never been loaded or because it was swapped out earlier, a page fault occurs. The processor traps the reference as invalid and transfers control from the program to the operating system. The OS then reads the required page into memory from secondary storage and resumes the program.
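The fault-then-load cycle can be sketched in a few lines. This is a simplified model and not how any particular operating system implements it: the class name `DemandPagedProcess`, the dictionary standing in for secondary storage, and the assumption of unlimited free frames are all made up for illustration.

```python
class DemandPagedProcess:
    """Toy model: pages live in a backing store until first touched."""

    def __init__(self, num_pages):
        # Stand-in for secondary storage (hypothetical contents).
        self.backing_store = {p: f"contents of page {p}" for p in range(num_pages)}
        self.resident = {}    # page number -> contents, loaded pages only
        self.page_faults = 0

    def access(self, page):
        if page not in self.resident:
            # Page fault: control passes to the "OS", which loads the page.
            self.page_faults += 1
            self.resident[page] = self.backing_store[page]
        return self.resident[page]


proc = DemandPagedProcess(num_pages=8)
for page in [0, 1, 0, 3, 1, 0]:
    proc.access(page)

print(proc.page_faults)       # 3
print(sorted(proc.resident))  # [0, 1, 3]
```

Only the three pages that were actually touched end up in memory; the other five never leave the backing store.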

Advantages of Demand Paging

Demand paging offers several noteworthy advantages:

- Large Virtual Memory: It enables systems to work with a large virtual memory space, accommodating the needs of modern, memory-intensive applications.

- Efficient Memory Utilization: By loading pages on demand, memory resources are utilized optimally. No precious memory space is wasted on pages that might never be accessed.

- Unconstrained Multiprogramming: Unlike some memory management techniques, demand paging doesn't impose a limit on the degree of multiprogramming. It adapts to the needs of the system and the programs running on it.

Challenges of Demand Paging

However, demand paging is not without its challenges. The main disadvantage is:

- Overhead: Demand paging requires more bookkeeping than simple paged memory management, particularly for maintaining page tables and handling page-fault interrupts.

SWAPPING

Swapping is a crucial memory management process in operating systems, effectively juggling the allocation of memory resources among active processes. It involves either removing a program's pages from memory or marking them for subsequent removal, temporarily suspending the process while it resides in secondary storage. This strategy allows the system to optimize the use of physical memory by making room for other active programs. However, an excessive amount of swapping, known as "thrashing," can lead to a counterproductive situation where the system expends too much effort on memory management, resulting in a significant performance decline. Swapping is a delicate dance that ensures the equilibrium between program execution and memory utilization in an operating system.

THRASHING

Thrashing in the context of virtual memory within operating systems is a detrimental phenomenon characterized by a system's excessive and counterproductive page swapping. When thrashing occurs, the system is so overwhelmed by the constant back-and-forth transfer of pages between main memory and secondary storage that it spends more time managing memory than executing actual instructions. This results in a dramatic decrease in system performance, akin to a traffic jam of data. The root cause of thrashing typically lies in a severe shortage of physical memory, leading to an incessant demand for page swapping. To counteract thrashing, efficient memory management techniques, including optimized page replacement algorithms and careful resource allocation, are crucial to ensuring that the system runs smoothly and efficiently.

Causes of thrashing :

Insufficient Physical Memory: Inadequate RAM leads to thrashing. With too many active processes, the system frequently swaps pages, hurting performance. Adding more physical memory is a solution to handle processes efficiently.

Overcommitment of Memory: When the operating system overcommits memory by allowing too many processes to run concurrently, it can quickly deplete the available physical memory, leading to frequent page swapping.
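To make the effect of insufficient memory concrete, here is a small simulation. It assumes LRU replacement and an artificial workload that cycles over five pages; the function name `lru_fault_count` and the workload are illustrative choices, not taken from a real system.

```python
from collections import OrderedDict


def lru_fault_count(references, num_frames):
    """Count page faults under LRU replacement with a fixed number of frames."""
    frames = OrderedDict()  # least recently used page is first
    faults = 0
    for page in references:
        if page in frames:
            frames.move_to_end(page)        # mark as most recently used
        else:
            faults += 1
            if len(frames) == num_frames:
                frames.popitem(last=False)  # evict the least recently used page
            frames[page] = True
    return faults


# A process that repeatedly cycles over five pages (hypothetical workload).
workload = [0, 1, 2, 3, 4] * 10

print(lru_fault_count(workload, num_frames=5))  # 5
print(lru_fault_count(workload, num_frames=4))  # 50
```

With five frames the process faults only while loading its pages for the first time. Taking away a single frame makes every one of the 50 references fault, which is the kind of cliff that thrashing describes.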

Recovery from Thrashing :

Recovery from thrashing involves proactive and reactive measures. To prevent thrashing, the long-term scheduler can refrain from bringing more processes into memory once a certain threshold is reached. In cases where thrashing has already set in, the medium-term scheduler intervenes by suspending some processes to free up memory and restore system stability. These strategies collectively ensure that the system maintains efficient memory usage and recovers swiftly from performance degradation caused by thrashing.

PAGE REPLACEMENT ALGORITHMS

Page replacement algorithms are a critical facet of an operating system's memory management, tasked with determining which memory pages should be swapped out and written to disk when a page must be allocated. Page replacement comes into play when a page fault occurs and there are no free frames available. When a page that was previously chosen for replacement is subsequently referenced, it must be read in from disk, and the process has to wait for that I/O to complete. The quality of a page replacement algorithm is therefore judged by how little time is spent waiting for page-ins: the less, the better. These algorithms make decisions based on limited information provided by the hardware, aiming to minimize the total number of page misses while balancing this against the cost in primary storage and the computational cost of the algorithm itself. There exists a variety of page replacement algorithms, each striking this balance differently.

Reference string :

A "reference string" is a sequence of memory references, essential for analyzing page replacement algorithms. It can be either artificially generated or derived by monitoring a system's memory usage. When using the latter approach, a substantial volume of data is collected.

Two significant observations emerge from this data:

1) For a given page size, only the page number, not the full address, needs to be considered, which simplifies algorithm analysis.

2) After a reference to a page (e.g., page p), any immediately following references to the same page will never cause a page fault, because page p is already in memory after the first reference. Consecutive repeats of a page can therefore be collapsed into a single entry in the reference string.
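Both observations can be applied mechanically to an address trace. The sketch below is illustrative only: the function name `to_reference_string`, the sample addresses, and the page size of 100 bytes are assumptions chosen to keep the arithmetic easy to follow.

```python
def to_reference_string(addresses, page_size):
    """Reduce an address trace to page numbers, collapsing consecutive repeats."""
    reference_string = []
    for address in addresses:
        page = address // page_size  # observation 1: keep only the page number
        # Observation 2: an immediate repeat cannot fault, so skip it.
        if not reference_string or reference_string[-1] != page:
            reference_string.append(page)
    return reference_string


# Hypothetical address trace, with 100 bytes per page.
addresses = [100, 432, 101, 612, 102, 103, 104, 101, 611]
print(to_reference_string(addresses, page_size=100))  # [1, 4, 1, 6, 1, 6]
```

The nine addresses reduce to six page references, because the run 102, 103, 104, 101 all falls on page 1.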

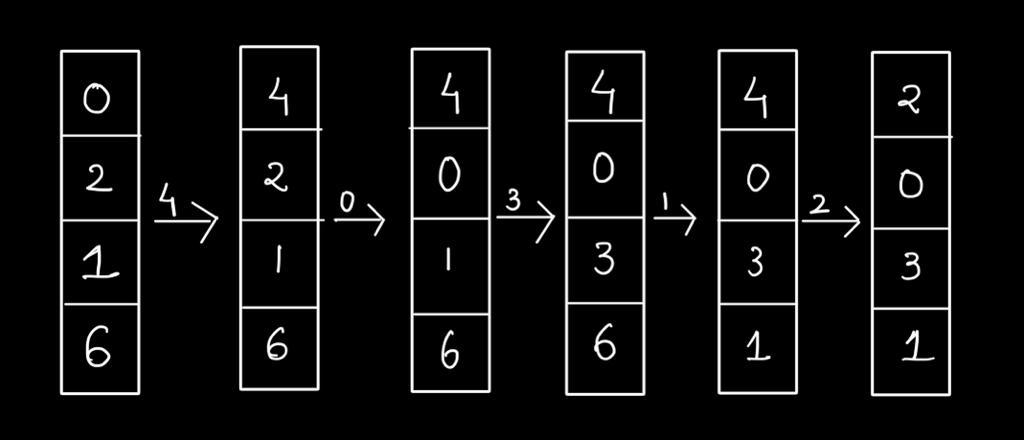

First In First Out (FIFO) algorithm :

The page chosen for replacement in the FIFO (First-In, First-Out) algorithm is the oldest page in main memory. Implementing FIFO is straightforward: it involves maintaining a list of pages, replacing pages from the tail (the oldest), and adding new pages at the head (the newest). This basic but effective approach ensures that the longest resident page is the one to be replaced when a page fault occurs.

Example:

Reference String : 0 2 1 6 4 0 1 0 3 1 2 1

Fault Rate : 9/12 = 0.75
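The example does not state how many frames are available. The sketch below assumes four frames, which reproduces the fault count of 9; the function name `fifo_faults` is made up for this illustration. The text describes adding at the head and removing from the tail, while this sketch keeps the oldest page at the left end of a `deque`. The behavior is the same.

```python
from collections import deque


def fifo_faults(references, num_frames):
    """Count page faults under FIFO replacement."""
    queue = deque()   # resident pages in arrival order, oldest at the left
    resident = set()  # same pages, for fast membership tests
    faults = 0
    for page in references:
        if page in resident:
            continue  # hit: FIFO does not reorder pages on access
        faults += 1
        if len(queue) == num_frames:
            resident.remove(queue.popleft())  # evict the oldest page
        queue.append(page)
        resident.add(page)
    return faults


refs = [0, 2, 1, 6, 4, 0, 1, 0, 3, 1, 2, 1]
faults = fifo_faults(refs, num_frames=4)  # 4 frames is an assumption
print(faults, faults / len(refs))  # 9 0.75
```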

Limitation of FIFO:

Belady's Anomaly:

One of the most significant drawbacks of FIFO is its susceptibility to Belady's Anomaly. This means that increasing the number of allocated memory frames may paradoxically lead to more page faults. FIFO doesn't consider the actual usage patterns of pages and may replace pages that are actively in use.
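Belady's Anomaly is easy to reproduce. The sketch below uses the reference string 1 2 3 4 1 2 5 1 2 3 4 5, a well-known textbook case that is not taken from this article; the helper name `fifo_faults` is likewise an assumption.

```python
def fifo_faults(references, num_frames):
    """Count page faults under FIFO replacement (list-based sketch)."""
    frames = []  # frames[0] is always the oldest resident page
    faults = 0
    for page in references:
        if page not in frames:
            faults += 1
            if len(frames) == num_frames:
                frames.pop(0)  # evict the oldest page
            frames.append(page)
    return faults


# Textbook reference string that triggers the anomaly under FIFO.
refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(fifo_faults(refs, num_frames=3))  # 9
print(fifo_faults(refs, num_frames=4))  # 10
```

Adding a fourth frame raises the fault count from 9 to 10, even though more memory is available.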

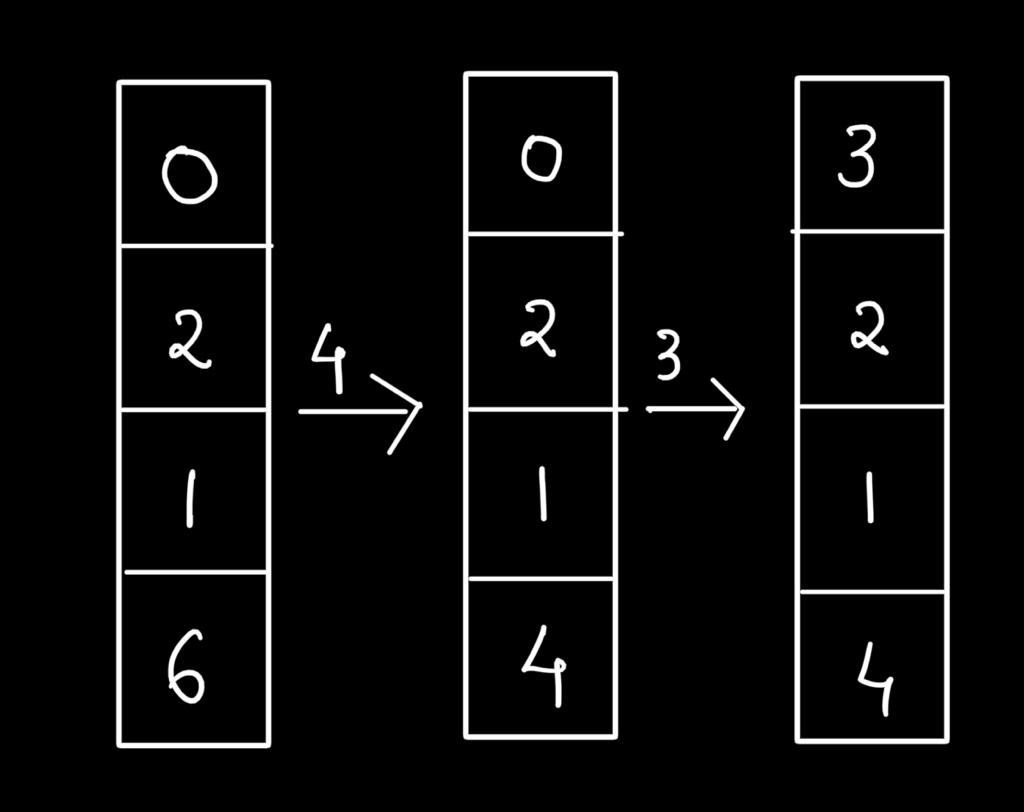

Optimal page-replacement algorithm

The optimal page-replacement algorithm, often considered the theoretical ideal in page replacement strategies, is designed to minimize the page-fault rate more effectively than any other algorithm. The key principle behind this algorithm is to replace the page that will not be used for the longest period of time in the future. In practice, this involves predicting the exact time when a page will be needed and evicting the page that has the farthest future reference. While this approach provides the optimal solution, it is practically impossible to implement since it necessitates knowledge of future page accesses, which is typically unavailable. Nonetheless, this strategy serves as a benchmark for comparing the performance of other page-replacement algorithms.

Example:

Reference String : 0 2 1 6 4 0 1 0 3 1 2 1

Fault Rate : 6/12 = 0.5
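Although the optimal policy cannot be implemented in a running system, it can be simulated offline when the whole reference string is known in advance. The sketch below assumes four frames, which reproduces the fault count of 6 for this reference string (three frames would give 7). The names `optimal_faults` and `next_use` are illustrative.

```python
def optimal_faults(references, num_frames):
    """Count page faults under the optimal (farthest-future-use) policy."""
    frames = []
    faults = 0
    for i, page in enumerate(references):
        if page in frames:
            continue
        faults += 1
        if len(frames) < num_frames:
            frames.append(page)  # a free frame is still available
            continue
        future = references[i + 1:]

        def next_use(resident_page):
            # Distance to the next reference; infinity if never used again.
            if resident_page in future:
                return future.index(resident_page)
            return float("inf")

        # Evict the page whose next use lies farthest in the future.
        victim = max(frames, key=next_use)
        frames[frames.index(victim)] = page
    return faults


refs = [0, 2, 1, 6, 4, 0, 1, 0, 3, 1, 2, 1]
faults = optimal_faults(refs, num_frames=4)  # 4 frames is an assumption
print(faults, faults / len(refs))  # 6 0.5
```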

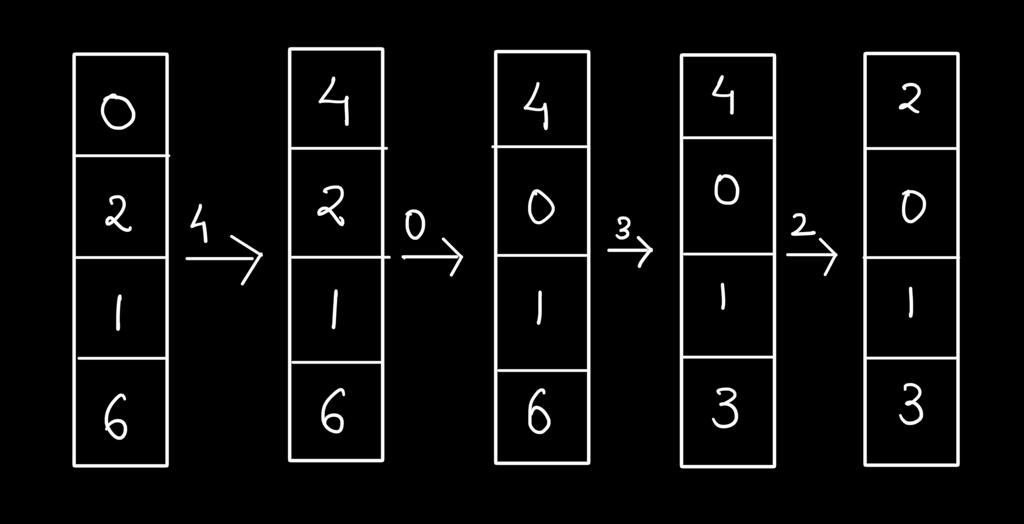

Least Recently Used (LRU) algorithm

The LRU (Least Recently Used) page replacement algorithm selects the page in main memory that has not been accessed for the longest duration for replacement when a page fault occurs. It prioritizes evicting pages that have remained idle the longest.

Implementation Simplicity:

LRU is conceptually simple: it involves maintaining a list of pages and tracking their access history. Pages are replaced by identifying the least recently used page based on their past references, making it an intuitive approach to memory management. Keeping that history exact means updating it on every memory reference, so practical systems often rely on hardware support or approximations of LRU.

Example:

Reference String : 0 2 1 6 4 0 1 0 3 1 2 1

Fault Rate : 8/12 ≈ 0.67
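As with the other examples, the frame count is not stated. The sketch below assumes four frames, which reproduces the 8 faults in 12 references (three frames would give 9). It records, for each resident page, the index of its most recent reference; the name `lru_faults` and this timestamp approach are illustrative choices among several ways to model LRU.

```python
def lru_faults(references, num_frames):
    """Count page faults under LRU replacement using last-use timestamps."""
    last_used = {}  # resident page -> index of its most recent reference
    faults = 0
    for time, page in enumerate(references):
        if page not in last_used:
            faults += 1
            if len(last_used) == num_frames:
                # Evict the page whose last reference is oldest.
                victim = min(last_used, key=last_used.get)
                del last_used[victim]
        last_used[page] = time  # record this reference (hit or fault)
    return faults


refs = [0, 2, 1, 6, 4, 0, 1, 0, 3, 1, 2, 1]
faults = lru_faults(refs, num_frames=4)  # 4 frames is an assumption
print(faults, round(faults / len(refs), 2))  # 8 0.67
```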

CONCLUSION

In conclusion, virtual memory, demand paging, swapping, and page replacement algorithms collectively form the bedrock of modern computing, playing pivotal roles in ensuring the smooth and efficient operation of computer systems. Virtual memory's magic lies in its ability to transcend the limitations of physical RAM, enabling the execution of resource-intensive tasks and providing an extra layer of security through address translation. Demand paging takes this a step further by loading pages into main memory on-demand, thereby conserving resources and accommodating memory-hungry applications. However, it's important to acknowledge the potential overhead associated with these techniques.

Swapping, a fundamental memory management process, helps strike a balance between active processes and memory resources, preventing system overload. Yet, excessive swapping can lead to thrashing, a performance-crippling phenomenon rooted in physical memory shortages or overcommitment. Page replacement algorithms are the linchpin of effective memory management, striving to minimize page faults. The FIFO algorithm is straightforward but susceptible to Belady's Anomaly, while the optimal algorithm sets an aspirational performance benchmark. The LRU algorithm offers a practical solution by prioritizing the replacement of the least recently used pages, striking a balance between efficiency and implementation simplicity. In essence, these concepts are indispensable in achieving optimal system performance, resource utilization, and security in the ever-evolving world of computing.