
In this article at OpenGenus.org, we will discuss the 3 types of cache misses: compulsory, conflict and capacity misses.

We will start off by briefly understanding what cache memory means, the need for it, what the terms 'cache hit' and 'cache miss' mean, and finally, we will discuss the types of cache misses in depth.

Pre-requisites: A basic understanding of computer memory.

Table of Contents:

1. What is cache?

2. What are the advantages of cache memory?

3. What do 'cache hit' and 'cache miss' mean?

4. What are the types of cache misses?

1. What is cache?

'Cache' memory is a small, fast memory used for temporary storage of data that the CPU can access at high speed, making memory retrieval efficient. Generally, there are 3 levels of cache: L1 (primary cache), L2 (secondary cache) and L3 (a larger cache that backs up L1 and L2 and is often shared between cores). Storage capacity increases from L1 to L3, while access speed decreases. Cache is faster and costlier per byte than main memory. It stores recently and frequently accessed data, as well as data that is likely to be required soon, so that it can be retrieved quickly, which mitigates the high access time of main memory. It is also situated physically closer to the CPU. The same idea is applied in software, where websites and apps keep copies of data they expect to need again.

2. What are the advantages of cache memory?

Although cache is costlier, as we have discussed, its access speed is very high, close to the CPU's processing speed. This makes memory retrieval extremely efficient in many cases. The best way to appreciate its importance is to think about what would happen if we did not have a cache. Main memory (RAM) is very slow compared to the CPU: it is much larger, sits further from the processor and is built from slower, cheaper memory technology (DRAM rather than the SRAM typically used for caches). If every request for data had to go all the way to main memory, the CPU would spend much of its time waiting, which would significantly slow down the computer's performance. Contrast this with cache which, besides being physically closer to the CPU and smaller in size, stores only recently or frequently accessed data and data located next to it in memory, which will satisfy most requests with high probability.

3. What do 'cache hit' and 'cache miss' mean?

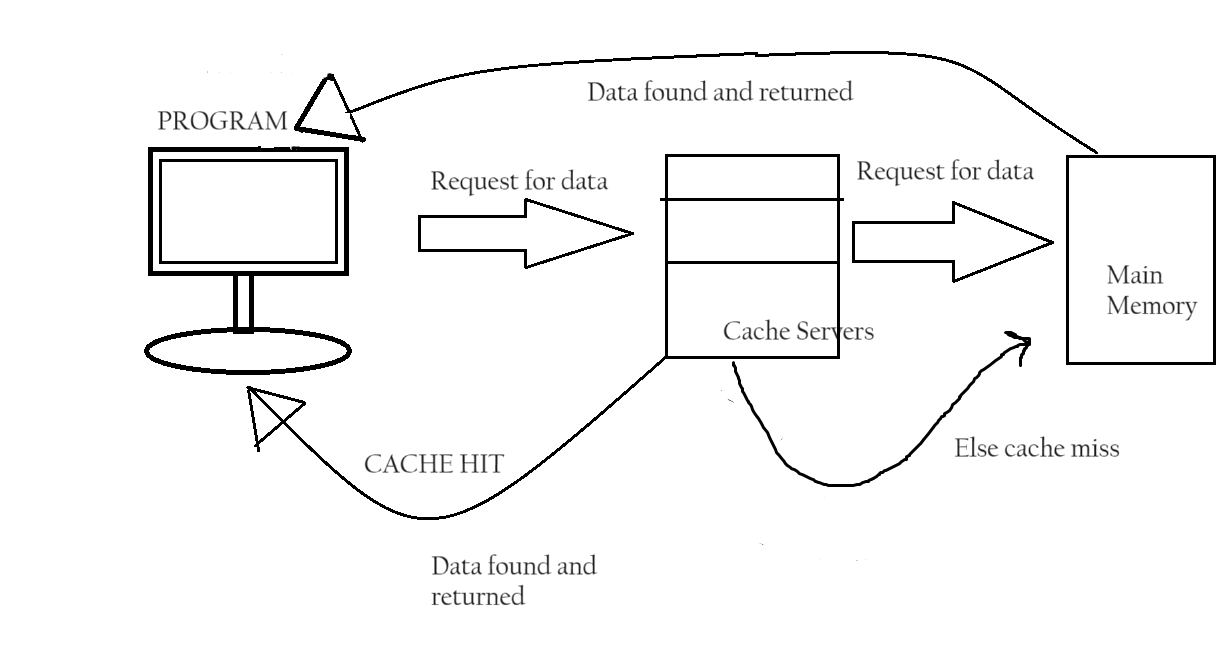

'Cache hit' refers to the case in which the data requested by an application is successfully found in and retrieved from the cache. With multiple cache levels, L1 is checked first; if the required data is not found there, the search moves on to L2 and then to L3.

In a 'cache miss', the requested data is not found in the cache level being searched. For example, if the requested data is not found in L1, a cache miss has occurred at L1 and the search moves on to L2. Cache misses slow down the computer: each time retrieval fails at one level, the search has to progress to the next level, or finally to main memory, each of which takes longer to access. The application or page then loads more slowly, since it has to wait for the data to be retrieved.
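The cost of misses can be estimated with the standard average memory access time formula: hit time + miss rate × miss penalty. The sketch below is only illustrative. It replays a made-up address trace against an idealised cache that never evicts anything, and the 64-byte block size, the 1 ns hit time and the 100 ns miss penalty are assumed numbers rather than measurements of any real processor.

```python
def average_access_time(hit_time_ns, miss_rate, miss_penalty_ns):
    """Average memory access time = hit time + miss rate * miss penalty."""
    return hit_time_ns + miss_rate * miss_penalty_ns


def count_hits_and_misses(addresses, block_size=64):
    """Replay byte addresses against a cache that never evicts anything."""
    cached_blocks = set()
    hits = misses = 0
    for address in addresses:
        block = address // block_size  # data is cached in whole blocks
        if block in cached_blocks:
            hits += 1
        else:
            misses += 1
            cached_blocks.add(block)
    return hits, misses


# Hypothetical trace of byte addresses (assumption, for illustration only).
trace = [0, 8, 16, 64, 72, 0, 128, 64]
hits, misses = count_hits_and_misses(trace)
miss_rate = misses / len(trace)

# Assumed latencies: 1 ns for a hit, 100 ns extra for a miss.
amat = average_access_time(1.0, miss_rate, 100.0)
print(hits, misses, amat)
```

Even with a modest miss rate, the miss penalty dominates the average, which is why reducing misses matters so much.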

4. What are the types of cache misses?

There are mainly 3 types of cache misses: Compulsory/ Cold Miss, Capacity Miss and Conflict Miss.

1) Compulsory/ Cold Miss:

When a block of data is requested for the first time, it cannot already be in the cache, so we encounter a compulsory/cold miss. This applies to every memory block: its first reference will miss, no matter how large the cache is. One way to reduce such misses is prefetching, a cache technique wherein data that the system is likely to require in the future is essentially "predicted" and loaded ahead of time. To quote Wikipedia: "Cache prefetching is a technique used by computer processors to boost execution performance by fetching instructions or data from their original storage in slower memory to a faster local memory before it is actually needed (hence the term 'prefetch')". However, a prefetcher can only guess at future accesses, usually from patterns in earlier ones, and eliminating every first-reference miss would require predicting every access correctly. Prefetching is thus only a partial solution, and hence we say that compulsory misses are largely unavoidable.
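As a rough illustration, the sketch below counts first-reference misses in an idealised cache that never evicts anything, with and without a very simple "next-block" prefetcher. The function name, the 64-byte block size and the prefetch rule are assumptions made for this example; real hardware prefetchers are considerably more sophisticated.

```python
def compulsory_misses(addresses, block_size=64, prefetch_next=False):
    """Count first-reference misses in a cache that never evicts.

    With prefetch_next=True, a miss on block b also loads block b + 1.
    This is a toy "next-block" prefetcher, used purely for illustration.
    """
    cached_blocks = set()
    misses = 0
    for address in addresses:
        block = address // block_size
        if block not in cached_blocks:
            misses += 1
            cached_blocks.add(block)
            if prefetch_next:
                cached_blocks.add(block + 1)  # fetched before it is requested
    return misses


# A sequential scan that touches 8 consecutive 64-byte blocks.
sequential_scan = range(0, 64 * 8, 8)

without_prefetch = compulsory_misses(sequential_scan)
with_prefetch = compulsory_misses(sequential_scan, prefetch_next=True)
print(without_prefetch, with_prefetch)
```

On this predictable access pattern the prefetcher halves the number of cold misses, but it does not remove them, and on an irregular pattern it would help far less.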

2) Capacity Miss:

A capacity miss occurs when the cache is too small to hold all the data that is actively being used. Once all the lines of the cache are filled, some data has to be evicted to make room for new data; if the evicted data is requested again, the resulting miss is called a capacity miss. The consequence is increased latency, as the system must now search the next, slower tier of memory, which reduces system performance. A few ways to reduce capacity misses are increasing the cache size, so that more data fits and evictions happen later, and using a better cache policy. A cache policy lays out the rules for choosing which data to evict from a cache. Optimising the cache policy may help ensure that important data remains in the cache for longer.
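The effect of cache size can be sketched with a small simulation. The example below assumes a fully associative cache (any block may go in any line) with a least-recently-used (LRU) eviction policy; the function name and the trace are made up for illustration. A program loops three times over 5 blocks, first with room for 4 blocks and then with room for 5.

```python
from collections import OrderedDict


def lru_misses(blocks, capacity):
    """Count misses for a fully associative cache with LRU replacement."""
    cache = OrderedDict()  # keys ordered from least to most recently used
    misses = 0
    for block in blocks:
        if block in cache:
            cache.move_to_end(block)  # mark as most recently used
        else:
            misses += 1
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict the least recently used block
            cache[block] = True
    return misses


working_set = [0, 1, 2, 3, 4]  # 5 distinct blocks
trace = working_set * 3        # loop over them three times

small_cache = lru_misses(trace, capacity=4)
large_cache = lru_misses(trace, capacity=5)
print(small_cache, large_cache)
```

With room for 5 blocks, only the 5 unavoidable first-reference misses remain. With room for 4, LRU always evicts the block that is needed next, so every one of the 15 accesses misses; the extra 10 misses are capacity misses. A different eviction policy would lose fewer accesses on this particular trace, which is why the choice of cache policy matters.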

3) Conflict/ Collision Miss:

A conflict/collision miss occurs when several memory blocks map to the same location (line or set) in the cache and compete for it. In a direct-mapped or set-associative cache, each block can be placed only in one particular line or set, so a block may be evicted to make room for another block that maps to the same place, even though other parts of the cache are still free. When the evicted block is requested again, the request misses and has to fall back on a slower cache level or the main memory, which causes a significant performance delay. This can happen within a single program or between programs that share a cache. If conflicts occur frequently, this can lead to a significant setback in system performance, and we need strategies to mitigate this. Some of them include: increasing cache size, increasing associativity (so that each block can be placed in more locations) and refining the cache organization or the layout of data in memory.
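In the usual hardware sense, a conflict miss arises when several memory blocks map to the same cache line or set and keep evicting each other. The sketch below illustrates this with two caches of the same total size (8 blocks): a direct-mapped cache and a 2-way set-associative cache. The function name, the modulo mapping and the trace are simplifying assumptions for this example, not a model of any specific processor.

```python
from collections import OrderedDict


def set_associative_misses(blocks, num_sets, ways):
    """Count misses for a set-associative cache with LRU within each set.

    ways=1 models a direct-mapped cache.
    """
    sets = [OrderedDict() for _ in range(num_sets)]
    misses = 0
    for block in blocks:
        cache_set = sets[block % num_sets]  # the block number selects the set
        if block in cache_set:
            cache_set.move_to_end(block)  # mark as most recently used
        else:
            misses += 1
            if len(cache_set) >= ways:
                cache_set.popitem(last=False)  # evict within this set only
            cache_set[block] = True
    return misses


# Blocks 0 and 8 map to the same set in both configurations below.
trace = [0, 8, 0, 8, 0, 8]

direct_mapped = set_associative_misses(trace, num_sets=8, ways=1)
two_way = set_associative_misses(trace, num_sets=4, ways=2)
print(direct_mapped, two_way)
```

In the direct-mapped cache the two blocks keep evicting each other, so all 6 accesses miss even though 7 of the 8 lines are never used. With 2 ways per set, both blocks fit in the same set and only the 2 first-reference misses remain.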

Nowadays, researchers are exploring the integration of Machine Learning (ML) algorithms with cache optimization techniques to address these issues dynamically. Predictive models are built from past access patterns and, in some cases, may be more efficient than traditional rule-based algorithms.

KEY TAKEAWAYS:

- Cache memory is a small, high-speed memory located close to the CPU which allows for efficient and fast data access when required.

- The data requested by an application will either be found in the cache or not, referred to as a "cache hit" and a "cache miss" respectively.

- If a "cache miss" occurs, it can be classified into 3 types on the basis of the reason it occurred: compulsory, capacity and conflict misses.

- A compulsory cache miss is mostly inevitable, because the data has never been requested before and so cannot yet be in the cache.

- A capacity cache miss occurs when the requested data was forced out earlier due to a lack of free storage space.

- A conflict/collision miss occurs when several memory blocks compete for the same cache location, so that one is evicted to make room for another even though the cache as a whole is not full.

- Some of the ways in which you can deal with cache misses include increasing your cache size, testing different cache policies to find the one that works best for your system, prefetching data and keeping frequently accessed data in the cache for longer.

Through this article, we hope you have gained a solid understanding of cache, types of cache misses, and some common mitigation strategies. This is an interesting field of study and lots of research is ongoing in this area, so be sure to update yourself and read more about this topic.

With this article at OpenGenus.org, you should now have a complete idea of compulsory, capacity and conflict misses.