
Position-wise Feed-Forward Networks (FFN) are a crucial component in various sequence-to-sequence models, especially in the context of natural language processing and tasks like machine translation. These networks are often used in conjunction with self-attention mechanisms to process and transform information within sequences.

Table of contents

- Transformer - Overview

- Importance of Position-wise FFN

- Network Description

- Examples

- Code example using pretrained models

- Key points and summary

Transformer - Overview

The Transformer has an encoder-decoder structure. The encoder processes the input sequence and generates a representation, which is then fed into the decoder to produce the output sequence. Each encoder layer contains a multi-head self-attention sublayer followed by a position-wise feed-forward network. Each decoder layer has a masked self-attention sublayer, a cross-attention sublayer that attends to the encoder output, and a position-wise feed-forward network. Together these let the model capture complex patterns and long-range dependencies. Transformers have become the cornerstone of state-of-the-art language models like BERT, GPT, and T5, showing their effectiveness and versatility across natural language processing tasks. Because the architecture has no recurrence, all positions in a sentence can be processed in parallel, which significantly reduces training time compared with recurrent models.
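To make the layer structure concrete, here is a minimal sketch of a single encoder layer in PyTorch. It assumes the post-LayerNorm arrangement of the original Transformer and omits dropout and masking. The class name `EncoderLayerSketch` and the sizes are illustrative only and are not taken from any particular library.

```python
import torch
import torch.nn as nn


class EncoderLayerSketch(nn.Module):
    """Hypothetical, simplified encoder layer: self-attention, then a position-wise FFN.

    Each sublayer is wrapped in a residual connection followed by LayerNorm
    (post-LN, as in the original Transformer). Dropout and masks are omitted.
    """

    def __init__(self, d_model: int = 64, n_heads: int = 4, d_ffn: int = 256):
        super().__init__()
        self.attn = nn.MultiheadAttention(d_model, n_heads, batch_first=True)
        self.norm1 = nn.LayerNorm(d_model)
        # Position-wise FFN: Linear -> ReLU -> Linear, applied at every position.
        self.ffn = nn.Sequential(
            nn.Linear(d_model, d_ffn),
            nn.ReLU(),
            nn.Linear(d_ffn, d_model),
        )
        self.norm2 = nn.LayerNorm(d_model)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (batch, seq_len, d_model)
        attn_out, _ = self.attn(x, x, x)   # mixes information across positions
        x = self.norm1(x + attn_out)
        x = self.norm2(x + self.ffn(x))    # transforms each position on its own
        return x


layer = EncoderLayerSketch()
tokens = torch.randn(2, 10, 64)  # batch of 2 sequences, 10 positions each
out = layer(tokens)
print(out.shape)  # torch.Size([2, 10, 64])
```

The output has the same shape as the input, which is what allows layers like this to be stacked.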

Importance of Position-wise FFN

The position-wise feedforward network in a Transformer architecture complements self-attention. Self-attention is where positions exchange information: it captures dependencies between words anywhere in the sequence. The position-wise feedforward network then processes each position on its own. It consists of two fully connected layers with a non-linear activation in between. These layers are applied independently, with the same weights, to every position in the sequence, and they do not look at neighboring tokens. Their role is to apply a non-linear transformation to the representation that self-attention has produced at each position, allowing the model to extract more complex features from it. The network also holds a large share of each layer's parameters, because its hidden layer is typically four times wider than the model dimension. Overall, the position-wise feedforward network strengthens the Transformer's ability to process sequential data and contributes significantly to its success in natural language processing tasks.
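The "independently, with the same weights" property can be checked directly. This is a minimal sketch, assuming a small FFN built with `nn.Sequential` with arbitrary sizes. Applying it to the whole sequence gives the same result as applying it one position at a time, and shuffling the positions only shuffles the outputs.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

d_model, d_ffn, seq_len = 8, 32, 5
ffn = nn.Sequential(nn.Linear(d_model, d_ffn), nn.ReLU(), nn.Linear(d_ffn, d_model))

x = torch.randn(1, seq_len, d_model)  # (batch, seq_len, d_model)

# 1) Apply the FFN to the whole sequence at once.
full = ffn(x)

# 2) Apply the same FFN to one position at a time and stack the results.
per_position = torch.stack([ffn(x[:, i, :]) for i in range(seq_len)], dim=1)

# 3) Shuffle the positions, apply the FFN, and compare with the shuffled output of (1).
perm = torch.randperm(seq_len)
shuffled = ffn(x[:, perm, :])

print(torch.allclose(full, per_position, atol=1e-5))          # True
print(torch.allclose(full[:, perm, :], shuffled, atol=1e-5))  # True
```

Because the result does not depend on where a vector sits in the sequence, any position- or context-dependence in the output has to come from the input vectors themselves, which self-attention and the positional encodings provide.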

Network Description

In the context of transformer architectures, which have become widely popular in NLP tasks, the Position-wise Feed-Forward Network is typically applied independently to each position in the sequence. Let's break down the key components and functionality of a Position-wise Feed-Forward Network:

- Input:

At each position in the sequence, the input is a vector representing the information from the preceding layers or attention mechanism.

- Linear Transformation:

- The input vector is linearly transformed using a fully connected layer. The same weights are used at every position in the sequence (they differ only from layer to layer), so each position is transformed independently but identically.

- Mathematically, if $X$ represents the input vector, the linear transformation can be expressed as $W_1 X + b_1$,

where $W_1$ is the first layer's weight matrix and $b_1$ is its bias term.

- If $X$ is an $n$-dimensional vector, then $W_1$ is an $l \times n$ matrix, where $l$ is the number of nodes in the first layer.

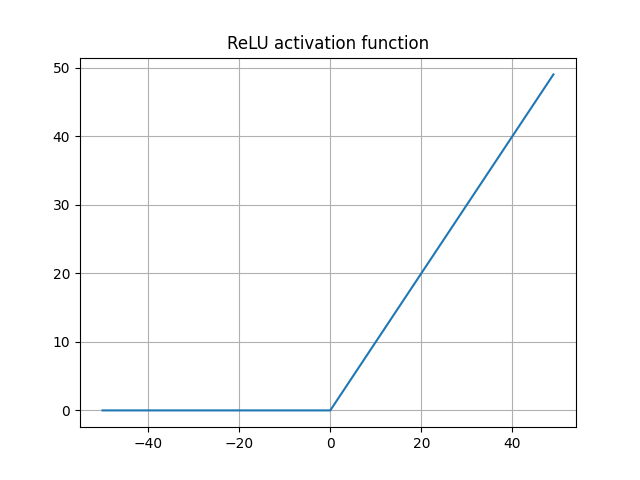

- Activation Function:

The result of the linear transformation undergoes a non-linear activation function, commonly the Rectified Linear Unit (ReLU). The activation function introduces non-linearity to the model.

- Second Linear Transformation:

- The output of the activation function is linearly transformed again using another fully connected layer with a different set of weights.

- Mathematically, this is represented as $W_2 \, \mathrm{ReLU}(W_1 X + b_1) + b_2$,

where $W_2$ is the second layer's $n \times l$ weight matrix and $b_2$ is its bias term, so the output has the same dimension $n$ as the input.
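The two formulas above can be evaluated with plain tensor operations for a single position. This is a sketch with arbitrary illustrative sizes ($n = 4$, $l = 16$) and random weights standing in for learned ones. It also checks that `torch.nn.functional.linear`, which `nn.Linear` uses, stores $W_1$ in the same $l \times n$ layout.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

n, l = 4, 16          # input dimension n, first-layer width l (illustrative)
x = torch.randn(n)    # input vector X at a single position

W_1 = torch.randn(l, n)   # first-layer weights, shape l x n
b_1 = torch.randn(l)
W_2 = torch.randn(n, l)   # second-layer weights, shape n x l
b_2 = torch.randn(n)

hidden = torch.relu(W_1 @ x + b_1)   # ReLU(W_1 X + b_1), shape (l,)
y = W_2 @ hidden + b_2               # W_2 ReLU(W_1 X + b_1) + b_2, shape (n,)

print(hidden.shape, y.shape)  # torch.Size([16]) torch.Size([4])

# F.linear(x, W, b) computes x W^T + b, i.e. W X + b for a weight stored as (out, in).
print(torch.allclose(W_1 @ x + b_1, F.linear(x, W_1, b_1), atol=1e-6))  # True
```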

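Let's see an example in PyTorch:

```python
import torch
import torch.nn as nn


class PositionWiseFFNN(nn.Module):
    def __init__(self, d_input: int, d_ffn: int):
        super().__init__()
        self.w_1 = nn.Linear(d_input, d_ffn)  # first layer: d_input -> d_ffn
        self.w_2 = nn.Linear(d_ffn, d_input)  # second layer: d_ffn -> d_input
        self.activation = nn.ReLU()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        '''
        x : output of the multi-head attention sublayer,
            shape (batch, seq_len, d_input). nn.Linear acts on the last
            dimension, so every position uses the same weights.
        '''
        return self.w_2(self.activation(self.w_1(x)))
```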
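Because `PositionWiseFFNN` maps `d_input -> d_ffn -> d_input`, its output has the same shape as its input, which is what allows a residual connection around it. The sketch below counts the sublayer's parameters, $2 \cdot d_{model} \cdot d_{ffn} + d_{ffn} + d_{model}$, and checks the formula against an equivalent `nn.Sequential`. The helper `ffn_param_count` is hypothetical. The sizes 512 and 2048 are those of the base model in the original Transformer paper (BERT-base uses 768 and 3072).

```python
import torch.nn as nn


def ffn_param_count(d_model: int, d_ffn: int) -> int:
    # Hypothetical helper.
    # W_1: d_ffn x d_model, b_1: d_ffn, W_2: d_model x d_ffn, b_2: d_model
    return 2 * d_model * d_ffn + d_ffn + d_model


d_model, d_ffn = 512, 2048
ffn = nn.Sequential(nn.Linear(d_model, d_ffn), nn.ReLU(), nn.Linear(d_ffn, d_model))
counted = sum(p.numel() for p in ffn.parameters())

print(counted, ffn_param_count(d_model, d_ffn))  # 2099712 2099712
```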

The ReLU activation function is defined as:

$\mathrm{ReLU}(X) = \max(0, X)$, i.e. it outputs 0 for $X < 0$ and $X$ itself for $X \ge 0$. Applied to a vector, it acts element-wise.
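Numerically, ReLU is the same as clamping at zero. The short sketch below shows this on a few sample values. For comparison, it also applies GELU, the smoother activation that BERT uses in its feed-forward layers in place of ReLU.

```python
import torch
import torch.nn.functional as F

x = torch.tensor([-2.0, -0.5, 0.0, 0.5, 3.0])

print(torch.relu(x).tolist())           # [0.0, 0.0, 0.0, 0.5, 3.0]
print(torch.clamp(x, min=0.0).tolist()) # same values: max(0, x)
print(F.gelu(x))                        # smooth alternative; small negative outputs for x < 0
```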

Now let's see how the module defined above can be applied to the token representations produced by a pretrained Transformer (BERT):

```python
import torch
from transformers import BertModel, BertTokenizer

# Load pre-trained BERT model and tokenizer
model_name = 'bert-base-uncased'
tokenizer = BertTokenizer.from_pretrained(model_name)
bert_model = BertModel.from_pretrained(model_name)
bert_model.eval()

# Input sentence
sentence = "Position-wise Feed-Forward Networks example with Transformers."

# Tokenize and encode the sentence
tokens = tokenizer(sentence, return_tensors='pt', padding=True, truncation=True)

with torch.no_grad():
    # Forward pass through the BERT model
    outputs = bert_model(**tokens)

    # Last hidden state: output of the final encoder layer, whose last sublayer is
    # BERT's own position-wise FFN (followed by a residual connection and LayerNorm).
    # Shape: (batch, seq_len, hidden_size) = (1, seq_len, 768)
    last_hidden_state = outputs.last_hidden_state

    # PositionWiseFFNN is the class defined above (untrained here): 768 -> 64 -> 768
    ffn_model = PositionWiseFFNN(last_hidden_state.size(-1), 64)

    # Forward pass through the Position-wise Feed-Forward Network. Pass the whole
    # sequence, not only the [CLS] token at index 0, so every position is transformed.
    output_ffn = ffn_model(last_hidden_state)

print(output_ffn.shape)  # same shape as last_hidden_state
```
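BERT's own FFN can also be inspected directly. The sketch below assumes the internal module layout of the Hugging Face `transformers` implementation (`encoder.layer[i].intermediate` and `encoder.layer[i].output`). This layout is an implementation detail and may change between versions. To avoid downloading weights, it builds a small, randomly initialized BERT from a `BertConfig` with illustrative sizes.

```python
import torch
from transformers import BertConfig, BertModel

# Small, randomly initialised BERT (illustrative sizes; bert-base-uncased uses
# hidden_size=768 and intermediate_size=3072).
config = BertConfig(
    hidden_size=32,
    num_hidden_layers=2,
    num_attention_heads=2,
    intermediate_size=64,
)
model = BertModel(config).eval()

layer = model.encoder.layer[0]
print(layer.intermediate.dense)  # first FFN layer: Linear(in_features=32, out_features=64, bias=True)
print(layer.output.dense)        # second FFN layer: Linear(in_features=64, out_features=32, bias=True)
print(config.hidden_act)         # gelu

# Run the FFN sublayer by hand on hidden states of shape (batch, seq_len, hidden_size).
hidden_states = torch.randn(1, 6, config.hidden_size)
with torch.no_grad():
    expanded = layer.intermediate(hidden_states)     # dense + GELU -> (1, 6, 64)
    ffn_out = layer.output(expanded, hidden_states)  # dense, dropout, residual + LayerNorm -> (1, 6, 32)

print(expanded.shape, ffn_out.shape)
```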

Some key points and summary

- Position-wise Feed-Forward Networks are an integral part of the transformer architecture, working alongside self-attention mechanisms to process and transform information within sequences.

- Unlike traditional recurrent neural networks (RNNs), which process sequences one step at a time, transformers process all positions in parallel. Self-attention lets the positions exchange information, and the Position-wise Feed-Forward Network then transforms each position's representation further.

- At each position in the sequence, the input undergoes a linear transformation by a fully connected layer whose weights are shared across all positions. This is followed by a non-linear activation function, typically ReLU, and a second linear transformation.

- Each position in the sequence is processed independently of the others, with the same weights. The input at each position already carries context gathered by self-attention and positional information from the positional encodings. As a result, the FFN can still produce position- and context-dependent representations.

- Position-wise Feed-Forward Networks contribute to the model's ability to capture complex patterns and relationships within sequences. The non-linear activation functions introduce flexibility and expressiveness to the model.

- In practical implementations, such as in TensorFlow or PyTorch, Position-wise Feed-Forward Networks are typically defined as two linear layers with a non-linear activation function between them. Because these layers act on the last (feature) dimension of a (batch, seq_len, d_model) tensor, they are automatically applied independently to each position in the sequence.

- In transformer models like BERT, every encoder layer contains a Position-wise Feed-Forward Network that processes the information at each position. The model's last hidden state is the output of the final layer's FFN sublayer, after the residual connection and LayerNorm, and provides one contextual vector per token.
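The original Transformer paper (Vaswani et al., 2017) also describes the position-wise FFN as two convolutions with kernel size 1. The sketch below uses arbitrary illustrative sizes. It copies the weights of a `Linear`-based FFN into two `nn.Conv1d(..., kernel_size=1)` layers and checks that both produce the same output, which makes the "same weights at every position" property concrete.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

d_model, d_ffn, seq_len = 8, 32, 7
linear_ffn = nn.Sequential(nn.Linear(d_model, d_ffn), nn.ReLU(), nn.Linear(d_ffn, d_model))
conv_ffn = nn.Sequential(
    nn.Conv1d(d_model, d_ffn, kernel_size=1),
    nn.ReLU(),
    nn.Conv1d(d_ffn, d_model, kernel_size=1),
)

# Copy the Linear weights into the 1x1 convolutions. Conv1d weights have shape
# (out_channels, in_channels, kernel_size), so add a trailing axis of size 1.
with torch.no_grad():
    for conv, lin in [(conv_ffn[0], linear_ffn[0]), (conv_ffn[2], linear_ffn[2])]:
        conv.weight.copy_(lin.weight.unsqueeze(-1))
        conv.bias.copy_(lin.bias)

x = torch.randn(2, seq_len, d_model)  # (batch, seq_len, d_model)
out_linear = linear_ffn(x)
# Conv1d expects (batch, channels, length): move d_model to the channel axis and back.
out_conv = conv_ffn(x.transpose(1, 2)).transpose(1, 2)

print(torch.allclose(out_linear, out_conv, atol=1e-5))  # True
```

A kernel of size 1 sees exactly one position at a time and slides the same weights along the sequence, which is exactly what the `nn.Linear` version does implicitly.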