
Key Takeaways

- Rapid data growth requires memory technology that maximizes storage capacity, minimizes size, and maintains speed.

- Concepts such as the Bekenstein bound suggest that the amount of information stored in a physical space is fundamentally limited.

- As memory components shrink, they face physical problems such as quantum effects and thermal noise that affect reliability and performance.

- Traditional silicon-based memory such as DRAM and flash has its limits, with problems such as leakage current and thermal management.

- Efforts are underway with advances in architectures and fabrication techniques to address scaling challenges and improve memory density.

Table of Contents

- Introduction

- Memory Density and Information Storage

- Theoretical Limits of Memory Density

- Silicon-Based Memory

- Fabrication

- Emerging Technologies

- Conclusion

1. Introduction

Memory density refers to the capacity of information that can be contained within a defined physical dimension in a memory system. The increasing demand for superior memory density in computational settings is driven by the rapid expansion of data produced across various fields. With the rapid growth in data production, current flash memory density has been increasing by about 40% annually (Ielmini & Wong, 2018) to keep pace with modern storage needs. Projections indicate that the global production of data may reach between 175 and 200 zettabytes by the year 2025.

As devices become smaller and more portable, there is an urgent demand for memory that is compact without sacrificing capacity or operational speed. Conventional scaling techniques, which primarily emphasize the reduction of component size (Zhang et al., 2020), increasingly run into inherent physical constraints at nanoscale dimensions, where quantum effects compromise reliability and performance (Hwang & Lee, 2018). Additionally, higher memory densities lead to increased heat generation and manufacturing challenges for conventional silicon-based technologies.

Due to time and scope limitations, this article aims to explore these limits and the associated challenges without delving into the mathematical frameworks or computational simulations that often accompany such discussions. Instead, it provides a conceptual overview of the physical constraints and technological barriers that define the current and future landscape of memory density.

Historical Development of Storage Technology

The development of memory technology has seen several key advancements over the decades.

- Early Memory Technologies (1940s-1960s): The first forms of computer memory were based on magnetic core memory rather than vacuum tubes, which were primarily used for processing and signal amplification. Magnetic core memory, developed in the 1950s, became the dominant form of random-access memory (RAM) until the 1970s. These systems were bulky and limited in capacity but laid the groundwork for future developments.

- Transistor-Based Memory (1960s-1970s): The invention of the transistor in the late 1940s paved the way for semiconductor-based memories. Static Random Access Memory (SRAM) and Dynamic Random Access Memory (DRAM) emerged during this period. SRAM offered faster access times but was more expensive and less dense, while DRAM became more popular due to its lower cost and higher density, despite requiring periodic refreshing.

- Introduction of Flash Memory (1980s): Flash memory, developed in the 1980s, provided a non-volatile storage solution that retained data even when power was turned off. It was a type of EEPROM (Electrically Erasable Programmable Read-Only Memory) that could be electrically erased and reprogrammed. Its compact size and durability made it ideal for portable devices like USB drives and solid-state drives (SSDs).

- Advancements in 3D NAND Technology (2010s): To further increase memory density without expanding the physical footprint, manufacturers began stacking layers of NAND flash cells vertically. This 3D NAND technology, introduced commercially in the early 2010s, significantly increased storage capacity while reducing costs per gigabyte.

- Emergence of New Technologies (2020s): Recent innovations include phase-change memory (PCM), resistive RAM (ReRAM), and memristors. These technologies, which are still being explored and developed, have the potential to provide memory that is faster, more durable, more energy-efficient, and denser than traditional flash.

2. Memory Density and Information Storage

Memory density is defined as the amount of data that can be stored within a specific physical area or volume of a memory device. It is typically expressed as bits per unit area (e.g., bits per square millimeter) or bits per unit volume (e.g., bits per cubic centimeter). Digital computers process information using binary digits (bits) and execute simple logical operations, such as AND, NOT, and OR, through logic gates.

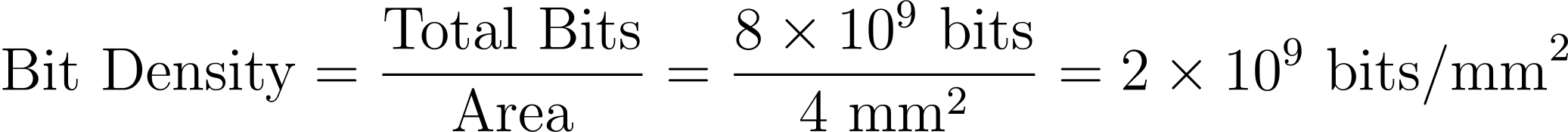

Bits per Unit Area:

This metric represents the number of bits stored within a specific area, often measured in square millimeters (mm²). For instance, if a memory chip has a capacity of 8 gigabits and covers an area of 4 mm², its bit density is 8 Gb ÷ 4 mm² = 2 Gb/mm².

Higher bit densities reflect a more efficient use of space.

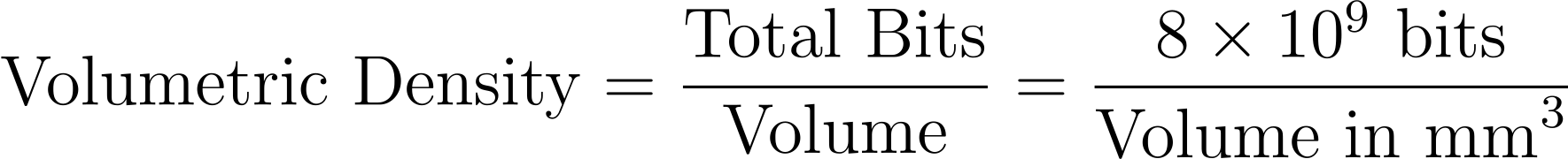

Volumetric Density:

This metric extends bit density into three dimensions, measuring the number of bits stored per cubic millimeter (mm³). The volumetric density calculation involves determining the total number of bits stored within a given volume:

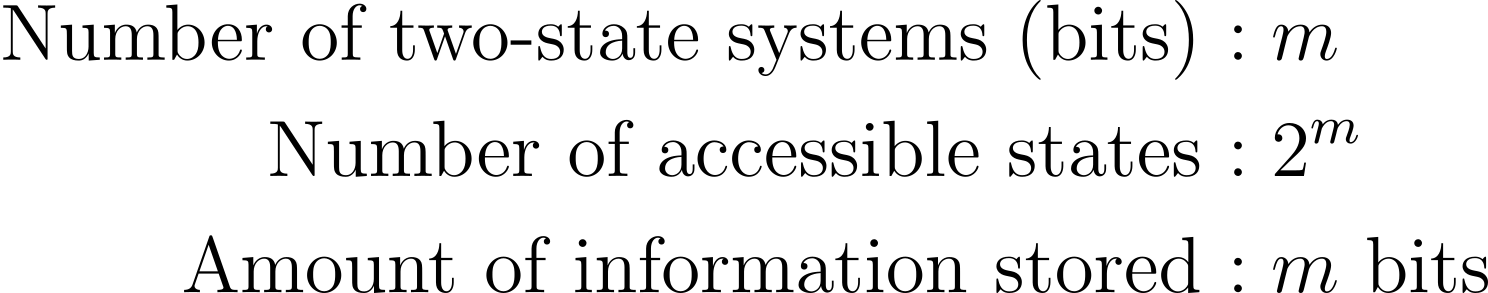
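As a rough illustration of both metrics, the short Python sketch below reproduces the 8-gigabit, 4 mm² example. The function names, the decimal definition of a gigabit, and the 0.5 mm die thickness are assumptions made for this example, not values from a real part.

```python
GIGABIT = 10**9  # assumption: decimal gigabit (10^9 bits)


def areal_density(bits: float, area_mm2: float) -> float:
    """Bits stored per square millimetre."""
    if area_mm2 <= 0:
        raise ValueError("area must be positive")
    return bits / area_mm2


def volumetric_density(bits: float, volume_mm3: float) -> float:
    """Bits stored per cubic millimetre."""
    if volume_mm3 <= 0:
        raise ValueError("volume must be positive")
    return bits / volume_mm3


chip_bits = 8 * GIGABIT
area_mm2 = 4.0
thickness_mm = 0.5  # hypothetical die thickness, for illustration only

print(areal_density(chip_bits, area_mm2))                      # 2000000000.0 bits/mm^2
print(volumetric_density(chip_bits, area_mm2 * thickness_mm))  # 4000000000.0 bits/mm^3
```

Stacking more layers into the same footprint leaves the footprint area unchanged but raises the bit count, which is why 3D designs are usually compared by volumetric density or by bits per unit footprint.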

Information Storage and Accessible States

The amount of information a system can store is tied to the number of distinct physical states it can access. In classical computing, for a system with $m$ two-state components (bits), there are $2^m$ accessible states. Each discrete bit is capable of existing in one of two distinct states (0 or 1), thus allowing $m$ bits to represent $2^m$ distinct combinations. Thus, a system with $m$ bits can store $m$ bits of information:

$$I = \log_2\left(2^m\right) = m$$

There is often a trade-off between memory density and reliability; increasing redundancy (e.g., error-correction codes) improves reliability but reduces the effective storage capacity.
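The capacity cost of redundancy can be made concrete with the code rate, the fraction of stored bits that carry user data. The sketch below is illustrative only: the function name is hypothetical, and the two codes shown, Hamming(7,4) and a (72,64) single-error-correcting code of the kind used in ECC memory modules, are chosen as familiar examples rather than taken from the discussion above.

```python
def effective_capacity(raw_bits: int, data_bits: int, codeword_bits: int) -> int:
    """User-visible bits when every codeword of `codeword_bits` stored bits
    carries only `data_bits` bits of payload (the rest is redundancy)."""
    if not 0 < data_bits <= codeword_bits:
        raise ValueError("need 0 < data_bits <= codeword_bits")
    return raw_bits * data_bits // codeword_bits


# Hamming(7,4): 3 parity bits protect every 4 data bits.
print(effective_capacity(7_000, 4, 7))          # 4000

# (72,64) code: 8 check bits per 64-bit word.
print(effective_capacity(72 * 10**9, 64, 72))   # 64000000000
```

A stronger code lowers the rate further, so the physical bit density must rise just to keep the effective density constant.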

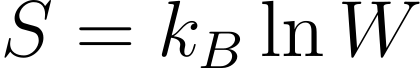

The number of accessible states (𝑊) in a memory system is related to its thermodynamic entropy (𝑆) by Boltzmann's formula:

$$S = k_B \ln W$$

where:

𝑆 is the entropy,

kB is the Boltzmann constant,

𝑊 is the number of accessible microstates.

In this context, entropy measures the disorder or uncertainty in a system. Although thermodynamic entropy originates from the domain of statistical mechanics, Shannon entropy, as articulated in information theory, quantifies the degree of uncertainty or informational content inherent in a collection of potential outcomes.
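To make the two notions of entropy tangible, the following sketch evaluates Boltzmann's formula for a register of two-state cells and the Shannon entropy of a probability distribution. The function names are chosen for this example, and the identity ln(2^m) = m ln 2 is used to avoid computing very large integers.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def boltzmann_entropy(num_bits: int) -> float:
    """Thermodynamic entropy S = k_B ln W for W = 2**num_bits equally likely states."""
    return num_bits * K_B * math.log(2)


def shannon_entropy(probabilities) -> float:
    """Shannon entropy H = -sum(p * log2 p), in bits."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


print(boltzmann_entropy(8))            # entropy of one byte of two-state cells, in J/K
print(shannon_entropy([0.5, 0.5]))     # 1.0 bit: a fair two-state cell
print(shannon_entropy([0.125] * 8))    # 3.0 bits: eight equally likely states
print(shannon_entropy([0.9, 0.1]))     # below 1 bit: a biased cell is more predictable
```

For equally likely states the two measures differ only by the constant factor k_B ln 2 per bit, which is the link that Landauer's principle builds on.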

Reversible operations (like NOT) can theoretically be performed without energy dissipation, as they do not erase information and maintain a one-to-one mapping of input to output states.

Irreversible operations (like AND or ERASE) increase entropy and require energy because they reduce the number of distinguishable states, effectively erasing information.

In theory, all computations could be performed using reversible operations, thereby avoiding energy dissipation. However, in practice, some energy loss is unavoidable due to factors such as error correction, noise, and thermal effects.

In short, although computation without energy loss is possible in theory, the practical need for error correction and thermal management means that energy dissipation remains a challenge, especially at high speeds.
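The distinction between reversible and irreversible operations can be checked mechanically: a gate is logically reversible exactly when distinct inputs always produce distinct outputs. The sketch below tests this for NOT and AND. The controlled-NOT (CNOT) gate is added here only as a further illustration of a reversible two-bit gate; it is not discussed above, and all function names are hypothetical.

```python
from itertools import product


def is_reversible(gate, n_inputs: int) -> bool:
    """True if the gate maps every distinct input to a distinct output."""
    outputs = [gate(*bits) for bits in product((0, 1), repeat=n_inputs)]
    return len(set(outputs)) == len(outputs)


def not_gate(a):
    return (1 - a,)


def and_gate(a, b):
    return (a & b,)


def cnot_gate(a, b):
    # Extra example (assumption, not from the text): flips b when a is 1.
    return (a, a ^ b)


print(is_reversible(not_gate, 1))   # True: the input can be recovered from the output
print(is_reversible(and_gate, 2))   # False: inputs 00, 01 and 10 all give 0
print(is_reversible(cnot_gate, 2))  # True
```

AND merges three input states into one output, so the information about which input occurred is erased, and that erasure is what carries a thermodynamic cost.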

3. Theoretical Limits of Memory Density

As physical systems, computers are fundamentally governed by the laws of physics. The speed at which they can process information is limited by the energy available, while the total amount of information they can handle is determined by their degrees of freedom (Lloyd, 2000). Moore's law is not a natural law but rather a pattern of human innovation. As a result, it will eventually reach its limits, although when this will occur remains uncertain.

Moore's Law

Moore's Law, named after Intel co-founder Gordon Moore, posits that the number of transistors on microchips doubles roughly every two years. This trend has driven exponential growth in computing power and reductions in the cost per transistor, thereby significantly advancing technology by improving performance metrics like speed and energy efficiency. Denser chips can perform more calculations per second while consuming less power. Nevertheless, as transistor sizes approach nanoscale dimensions, fundamental physical constraints, such as quantum effects, begin to challenge the continuation of Moore's Law. This has led researchers to explore alternative materials and computing architectures.
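The doubling rule translates directly into an exponential. The sketch below projects a transistor count forward under the idealized assumption of a fixed two-year doubling period; the starting count of one billion is a hypothetical figure, not data for any specific chip.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Idealized Moore's Law projection: N(t) = N0 * 2**(t / T)."""
    if doubling_period_years <= 0:
        raise ValueError("doubling period must be positive")
    return initial_count * 2 ** (years / doubling_period_years)


start = 1e9  # hypothetical starting transistor count
for years in (2, 10, 20):
    print(years, projected_transistors(start, years))
```

Ten years corresponds to five doublings, a factor of 32, which shows how quickly the trend runs into the physical limits described in the rest of this section.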

Dennard Scaling

Introduced by Robert Dennard in 1974, Dennard scaling complements Moore's Law by stating that power density remains constant as transistors are made smaller. This allows for higher speeds and more transistors without increasing power consumption or heat generation. However, since around 2006, this principle has faced significant challenges due to heat dissipation problems in densely packed circuits. Consequently, modern processor design has shifted toward multi-core architectures rather than just increasing clock speeds or the number of transistors.
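The constant-power-density claim can be verified with the standard dynamic power model P = C·V²·f, which is assumed here and not stated above. Under ideal Dennard scaling by a factor k, linear dimensions, voltage, and capacitance shrink by 1/k while frequency rises by k. The function name and the input values are illustrative.

```python
import math


def dennard_scale(capacitance: float, voltage: float, frequency: float,
                  area: float, k: float):
    """Apply ideal Dennard scaling by factor k > 1.

    Returns (power per transistor, power density) after scaling,
    using the dynamic power model P = C * V**2 * f.
    """
    c, v, f, a = capacitance / k, voltage / k, frequency * k, area / k**2
    power = c * v**2 * f
    return power, power / a


# Hypothetical starting point: 1 fF, 1.2 V, 2 GHz, 1e-12 m^2 per transistor.
C0, V0, F0, A0 = 1e-15, 1.2, 2e9, 1e-12
density_before = C0 * V0**2 * F0 / A0
power_after, density_after = dennard_scale(C0, V0, F0, A0, k=2.0)

print(math.isclose(density_before, density_after))  # True: power density unchanged
```

The scaling broke down largely because supply voltage could no longer be reduced in step with dimensions, so in practice V stayed roughly fixed and power density rose.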

Quantum Mechanical Constraints

As electronic components become increasingly miniaturized, quantum mechanical constraints begin to impact memory density within computational systems. This interplay between quantum mechanics, thermodynamics, and information theory greatly influences how data is stored and processed at the nanoscale. At very small scales, quantum effects become prominent, particularly regarding the behavior of electrons and subatomic particles, which introduces various limitations on data retention and computational processes.

Quantum Tunneling

Quantum tunneling becomes more significant as memory cells are reduced to nanometer scales. This phenomenon occurs when particles pass through energy barriers that would be insurmountable in classical physics.

In traditional semiconductors, electrons are confined within potential wells created by energy barriers, such as those formed by p-n junctions. As these barriers become thinner with miniaturization, the probability of electrons tunneling through them increases.

Smaller transistors face higher chances of electron tunneling through the gate oxide layer, leading to leakage currents. In non-volatile memory technologies like Flash memory, data is stored by trapping electrons in floating gates. If these gates become too small, electrons may escape unintentionally, resulting in data loss or corruption.

The increased effects of tunneling reduce the reliability of electronic devices. Variations in performance can cause unexpected behavior in integrated circuits and complicate the operation and stability of devices.
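How sharply tunneling grows as barriers thin can be estimated with the textbook approximation for a rectangular barrier, T ≈ exp(−2κd) with κ = √(2m(V₀ − E))/ħ. The sketch below is a rough order-of-magnitude estimate only: it assumes the free-electron mass and an illustrative barrier height of 3.1 eV, and it ignores effective mass, barrier shape, and applied field.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
M_E = 9.1093837015e-31   # free-electron mass, kg (assumption: no effective-mass correction)
EV = 1.602176634e-19     # joules per electronvolt


def tunneling_probability(barrier_ev: float, thickness_nm: float) -> float:
    """Approximate transmission T ~ exp(-2*kappa*d) through a rectangular barrier.

    barrier_ev is the barrier height above the electron energy (V0 - E).
    """
    kappa = math.sqrt(2 * M_E * barrier_ev * EV) / HBAR  # decay constant, 1/m
    return math.exp(-2 * kappa * thickness_nm * 1e-9)


for d in (3.0, 2.0, 1.0):
    print(f"{d:.1f} nm barrier: T ~ {tunneling_probability(3.1, d):.2e}")
```

Because the thickness sits in the exponent, each nanometre removed from the barrier raises the tunneling probability by many orders of magnitude, which is why leakage rises so abruptly at the smallest nodes.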

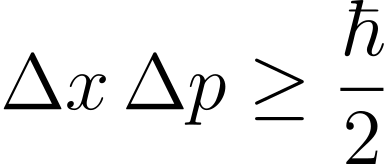

Heisenberg Uncertainty Principle: This principle asserts that certain pairs of physical properties, such as position and momentum, cannot both be known with arbitrary precision simultaneously. It is mathematically represented as:

$$\Delta x \, \Delta p \geq \frac{\hbar}{2}$$

where:

Δ𝑥 represents the uncertainty in position,

Δ𝑝 denotes the uncertainty in momentum, and

ℏ is the reduced Planck's constant.

As electronic components are scaled down, the uncertainties in the position and momentum of electrons become more pronounced. These uncertainties affect how precisely electrons can be controlled and confined, which in turn influences the reliability and stability of memory storage systems at very small scales.
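A back-of-the-envelope estimate shows the scale of this effect. Confining an electron to a region of width Δx forces a momentum spread of at least ħ/(2Δx), and the associated kinetic energy scale is Δp²/(2m). The sketch below uses the free-electron mass and treats that energy as a rough indicator only, not as a device model; the function names are hypothetical.

```python
HBAR = 1.054571817e-34   # reduced Planck constant, J*s
M_E = 9.1093837015e-31   # free-electron mass, kg (assumed)
EV = 1.602176634e-19     # joules per electronvolt


def min_momentum_uncertainty(delta_x_m: float) -> float:
    """Smallest momentum uncertainty allowed for a given position uncertainty."""
    return HBAR / (2 * delta_x_m)


def confinement_energy_ev(delta_x_m: float) -> float:
    """Rough kinetic energy scale dp**2 / (2m) for that momentum spread, in eV."""
    dp = min_momentum_uncertainty(delta_x_m)
    return dp**2 / (2 * M_E) / EV


for width_nm in (10.0, 1.0, 0.5):
    print(f"{width_nm} nm: ~{confinement_energy_ev(width_nm * 1e-9):.4f} eV")
```

The energy scale grows as 1/Δx², so halving the confinement width quadruples it. At around 1 nm it is already within a factor of a few of the thermal energy at room temperature (about 0.026 eV).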

Landauer’s Principle

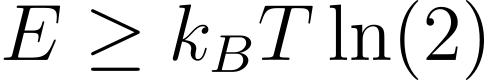

Landauer's principle provides a basic understanding of the thermodynamic limitations of information processing and energy dissipation in computing systems. It states that there is a minimum amount of energy required to erase one bit of information, and reflects the unavoidable thermodynamic cost associated with logically irreversible operations (Landauer, 1961).

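In 1961, Rolf Landauer proposed that any logically irreversible manipulation of information (such as the erasure of a bit) results in an increase in entropy in a thermodynamic system. He quantified the minimum energy cost of erasing one bit, which is expressed mathematically as:

$$E \geq k_B T \ln 2$$

where $k_B$ is the Boltzmann constant and $T$ is the absolute temperature of the environment.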

This principle arises from the second law of thermodynamics, which states that entropy must increase in an isolated system. The decrease in entropy due to information erasure must be balanced by an increase in entropy elsewhere, typically through heat dissipation.

As memory density increases and bits become more densely packed, it becomes more difficult to maintain distinct states without being affected by thermal noise. The Landauer principle implies that operations that involve bit erasure incur energy costs. These costs are significant in densely packed computing devices where power requirements reach fundamental physical limits.

Landauer's principle specifies the theoretical minimum energy cost for bit erasure and highlights that there are intrinsic thermodynamic limits to how efficiently information can be processed and manipulated. Reducing the energy used per bit toward this limit also increases the error rate, because the energy separating the two states of a bit becomes comparable to the thermal fluctuations that can flip it.
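To give a sense of scale, the sketch below evaluates the Landauer limit k_B·T·ln 2 at a chosen temperature and for a block of erased bits. The function names are hypothetical, and 300 K is used as a nominal room temperature.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def landauer_limit_joules(temperature_k: float) -> float:
    """Minimum energy to erase one bit at the given absolute temperature."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive")
    return K_B * temperature_k * math.log(2)


def erase_energy_joules(num_bits: float, temperature_k: float) -> float:
    """Landauer lower bound for erasing num_bits bits."""
    return num_bits * landauer_limit_joules(temperature_k)


print(landauer_limit_joules(300.0))      # roughly 2.87e-21 J per bit
print(erase_energy_joules(8e9, 300.0))   # lower bound for erasing one gigabyte
```

Practical memory operations dissipate many orders of magnitude more than this bound per bit, so the limit is a floor on future efficiency rather than a constraint on current designs.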

Quantum computing may sidestep some of these costs, because quantum gates are unitary and therefore logically reversible. Quantum bits (qubits) can exist in superpositions and can be used for more complex computations. They remain subject to thermodynamics, however: resetting and measuring qubits are irreversible steps, and qubits still face challenges such as decoherence and error correction.

Bekenstein Bound

The Bekenstein Bound is a theoretical limit on the amount of information that can be contained within a finite region of space, given a finite amount of energy. This concept, introduced by physicist Jacob Bekenstein in 1981, originates from black hole thermodynamics and quantum mechanics, and it is relevant to the memory density limits in computers.

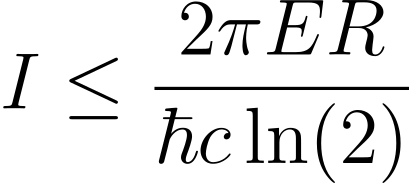

The Bekenstein Bound is expressed as:

$$I \leq \frac{2 \pi R E}{\hbar c \ln 2}$$

where:

- I is the maximum amount of information (in bits) that can be stored within a finite region of space.

- 𝑅 is the characteristic length (e.g., the radius of a sphere enclosing the system).

- 𝐸 is the total energy of the system.

- ℏ is the reduced Planck constant

- 𝑐 is the speed of light in a vacuum

- ln(2) is the natural logarithm of 2, used to convert from nats to bits.

The bound implies that there is a maximum amount of information that can be stored in a given volume of space, proportional to the energy contained within that volume.

This bound holds particular significance in the context of black holes, where it was discovered that the entropy of a black hole is proportional to the area of its event horizon, not its volume. This insight contributed to the development of black hole thermodynamics and the understanding that black holes have entropy, which is a measure of the information content associated with the states of matter that formed the black hole.

The Bekenstein Bound connects the concepts of information, entropy, and energy, explaining the limitations imposed by quantum mechanics on the amount of information that can be contained in a physical system. It suggests that as systems become more energetic or compact, the entropy (and thus the information) they can contain is constrained.

The implications of the Bekenstein Bound for memory density are significant in computing systems. As technologies evolve toward miniaturization, the challenge is not only to increase storage capacity but to do so within the constraints of physical laws.

The Bekenstein Bound sets a ceiling on how much information can be stored per unit volume or mass. This means that any attempt to create denser memory systems must contend with this limit; exceeding it would require either increasing energy input or finding new ways to manipulate information at quantum levels.
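To see how far this ceiling lies from practical devices, the sketch below evaluates the bound for a small object, taking the total energy as the rest-mass energy E = mc². The 1 g mass and 1 cm radius are arbitrary illustrative values, and the function name is hypothetical.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J*s
C = 299_792_458.0       # speed of light in vacuum, m/s


def bekenstein_bound_bits(radius_m: float, energy_j: float) -> float:
    """Upper bound on information, I <= 2*pi*R*E / (hbar * c * ln 2), in bits."""
    return 2 * math.pi * radius_m * energy_j / (HBAR * C * math.log(2))


mass_kg = 1e-3      # hypothetical 1 g device
radius_m = 1e-2     # enclosed in a sphere of radius 1 cm
energy_j = mass_kg * C**2   # rest-mass energy

print(f"{bekenstein_bound_bits(radius_m, energy_j):.2e} bits")  # on the order of 1e38
```

A result on the order of 10^38 bits is vastly larger than the capacity of any existing storage device of that size, so the Bekenstein Bound is a statement about ultimate limits, while the constraints discussed in the following subsections bind much sooner.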

Electromagnetic Interference (EMI)

Electromagnetic interference (EMI) is the disruption of electronic devices caused by electromagnetic radiation from external sources. This is a critical concern in the design and operation of electronic systems, particularly as device density increases in modern applications. EMI arises from the unintentional coupling of electromagnetic fields between circuits or components, resulting in reduced performance or malfunction.

The main sources of EMI include switching noise from digital circuits, radio frequency interference (RFI), and crosstalk from neighboring signal lines. In densely packed memory cells, electric fields generated during switching events can couple into adjacent lines, leading to erroneous data interpretation.

Crosstalk, a specific type of EMI, refers to unwanted signal transfer between adjacent conductors or circuits. In high-density memory, crosstalk occurs when a signal in one circuit or memory cell induces a disturbance in a neighboring circuit or cell, leading to errors in data reading or writing.

The mechanisms behind crosstalk include capacitive coupling, where an electric field from one conductor influences another conductor’s voltage level; inductive coupling, where magnetic fields generated by current flow affect neighboring conductors; and resistive coupling, where direct conductive paths cause interference between circuits.

These interactions may degrade signals and increase error rates in data retrieval and storage. As the distance between components decreases, it becomes more difficult to maintain signal integrity, and signal rise times may not be sufficient to avoid crosstalk effects.

Thermal Noise

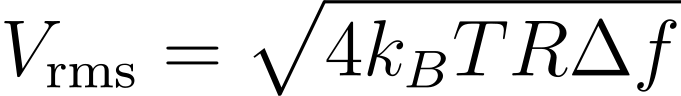

Thermal noise, also called Johnson-Nyquist noise, is inherent electrical noise generated by the thermal agitation of charge carriers (typically electrons) in a conductor or semiconductor. This noise occurs independently of external signals, and its mean-square voltage is proportional to the temperature and resistance of the material. The noise voltage can be described mathematically by:

$$V_{rms} = \sqrt{4 k_B T R \, \Delta f}$$

where:

Vrms is the root mean square (RMS) noise voltage.

kB is the Boltzmann constant

𝑇 is the absolute temperature in kelvins (K).

𝑅 is the resistance in ohms (Ω).

Δ𝑓 is the bandwidth in hertz (Hz) over which the noise is measured.
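The Johnson-Nyquist expression is straightforward to evaluate. The sketch below computes the RMS noise voltage for a given resistance, temperature, and bandwidth. The 1 kΩ, 300 K, and 1 GHz inputs are illustrative values and do not describe a particular memory cell.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def thermal_noise_vrms(resistance_ohm: float, temperature_k: float,
                       bandwidth_hz: float) -> float:
    """RMS Johnson-Nyquist noise voltage: sqrt(4 * k_B * T * R * delta_f)."""
    if min(resistance_ohm, temperature_k, bandwidth_hz) < 0:
        raise ValueError("inputs must be non-negative")
    return math.sqrt(4 * K_B * temperature_k * resistance_ohm * bandwidth_hz)


v = thermal_noise_vrms(resistance_ohm=1e3, temperature_k=300.0, bandwidth_hz=1e9)
print(f"{v * 1e6:.1f} microvolts RMS")
```

With these inputs the result is on the order of a hundred microvolts. As supply voltages and stored charge shrink, the margin between signal levels and this noise floor narrows.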

In memory systems, data retention refers to the ability of a memory cell to maintain its stored information over time without being refreshed or rewritten. As memory cells become smaller and more densely packed, they are increasingly susceptible to thermal noise.

Thermal noise primarily affects data retention through charge leakage and voltage fluctuations across memory cells. In ultra-dense architectures, where cell capacitance is minimized to achieve higher density, even minor fluctuations due to thermal noise can cause a cell’s voltage level to fall below the threshold required for reliable operations.

Laszlo B. Kish addressed the scaling challenges that arise primarily from thermal noise effects and the associated power efficiency issues. In nanoscale memory devices, the bit error rate can rise dramatically due to thermal fluctuations, challenging the reliability of data storage and processing. This phenomenon suggests a potential end to Moore's Law in traditional semiconductor technologies, as further miniaturization exacerbates thermal noise effects, making it difficult to maintain reliable performance in smaller devices (Forbes et al., 2006; Kish, 2002).

These theoretical challenges are mirrored in practical issues faced by ultra-dense memory systems.

4. Silicon-Based Memory

Modern computing has been built around silicon-based memory systems, which take advantage of silicon's abundant supply, advantageous electrical characteristics, and low cost. The primary types of silicon-based memory are Dynamic Random Access Memory (DRAM), Static Random Access Memory (SRAM), and Flash memory.

DRAM is a widely used form of volatile memory found in computers and servers that stores each bit of data in a separate capacitor within an integrated circuit. Due to current leakage, DRAM often needs to be refreshed thousands of times per second to maintain stored information. In contrast, SRAM uses a bistable latching circuitry to store each bit, making it faster and more reliable than DRAM. However, its higher cost and lower density limit its use primarily for caching memory in processors. Flash memory is a non-volatile storage technology that stores data without power. Commonly used in USB drives, solid state drives (SSDs), and memory cards, it operates via a silicon floating gate transistor structure that traps electrons to store information.

With the reduction in dimensions in search of miniaturization, silicon-based memory faces significant challenges, including an increase in leakage currents, a reduction in reliability, and thermal management problems due to the increase in the amount of heat generated per unit area on the nanoscale.

Alternative Materials in Memory Technology

Transition Metal Dichalcogenides (TMDs):

Because of their layered structure, which resembles that of graphene, and their tunable direct bandgap, which pristine graphene lacks, TMDs like MoS₂, WS₂, and WSe₂ have attracted a lot of attention (Feng et al., 2016). TMDs have applications in memory technology, including as field-effect transistor (FET) channel material and as active components in resistive switching memory (ReRAM). TMDs aid in the formation and dissolution of conductive filaments in ReRAM, allowing for low power consumption and non-volatile data storage with high on/off current ratios. In addition, compared to traditional flash memory, their scalability enables denser memory cells, which achieve faster switching speeds and better endurance.

Phase-Change Materials (PCMs)

Phase-change materials, such as GeSbTe alloys, exhibit notable variations in electrical resistance as a result of their ability to transition between crystalline and amorphous states in response to thermal or electrical stimuli. Phase-Change Random Access Memory (PRAM) uses this property to its advantage to provide better scalability, higher endurance, and faster read/write speeds compared to conventional NAND flash memories. PCMs can store multiple bits per cell due to different resistance levels, enhancing data density without additional physical space. PCMs are excellent candidates for upcoming non-volatile memory applications. In order to further improve PCM performance, recent developments have concentrated on increasing thermal stability and crystallization speed.
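The density gain from multi-level cells follows from counting distinguishable resistance levels: a cell with L levels stores ⌊log₂ L⌋ bits. The sketch below shows this together with a simple read-out that maps a measured resistance to a level. The threshold values are hypothetical and do not describe any real PCM device.

```python
from bisect import bisect_right


def bits_per_cell(levels: int) -> int:
    """Whole bits storable in a cell with `levels` distinguishable states."""
    if levels < 2:
        raise ValueError("a cell needs at least two levels")
    return levels.bit_length() - 1  # floor(log2(levels)) for positive integers


def read_level(resistance_ohm: float, thresholds_ohm) -> int:
    """Map a measured resistance to a level index using sorted thresholds."""
    return bisect_right(thresholds_ohm, resistance_ohm)


# Hypothetical thresholds separating four resistance bands (2 bits per cell).
THRESHOLDS = [1e4, 1e5, 1e6]

print(bits_per_cell(len(THRESHOLDS) + 1))   # 2
print(read_level(5e3, THRESHOLDS))          # 0: lowest-resistance (crystalline) band
print(read_level(5e4, THRESHOLDS))          # 1
print(read_level(2e6, THRESHOLDS))          # 3: highest-resistance (amorphous) band
```

Each extra bit doubles the number of levels that must fit into the same resistance range, so the bands narrow and become more sensitive to drift and noise.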

Graphene

Graphene is a single layer of carbon atoms arranged in a two-dimensional honeycomb lattice. It is a candidate for next-generation memory technologies because of its remarkable mechanical strength, thermal stability, and electrical conductivity. Its high carrier mobility and thermal conductivity enable faster switching speeds, improved performance, and efficient heat dissipation. Because of graphene's adaptability, it can be integrated into a variety of substrates. It has been explored for several memory technologies, including ReRAM, flash memory, DRAM, and non-volatile memory (NVM). However, challenges such as scalable manufacturing, integration with existing technologies, and device stability remain before graphene can be widely adopted as a silicon replacement in mainstream memory applications.

Exploring every potential substitute material in memory technology is outside the scope of this article. Nonetheless, continuous research and development in the field keep pushing the boundaries of what is possible, and a wide range of alternative materials and techniques are being investigated for possible use in memory technology.

3D Architectures

In 3D architectures, memory cells are stacked vertically as opposed to the flat, two-dimensional arrangement of traditional planar designs. Vertical stacking fits more cells into the same footprint, which raises memory density, and it shortens the distance signals have to travel, which improves performance.

In non-volatile memory technology, 3D NAND architecture is a well-known illustration of this strategy. By vertically stacking several layers of memory cells, it overcomes the scaling constraints of planar NAND and boosts overall performance and storage density. The cells in this architecture usually use floating gate (FG) or charge trap flash (CTF) technology, which allows for higher bit densities at lower power consumption. Modern 3D NAND chips stack well over 100 layers, which greatly expands storage capacity while improving read/write speeds and lowering latency.

To further boost memory density, various stacking strategies have also been developed, such as Through-Silicon Via (TSV) technology. Compared to conventional interconnect techniques, TSVs offer high-speed data transfer and lower latency by creating vertical connections between stacked layers of silicon wafers. 3D architectures have many benefits, but they also have drawbacks. Controlling heat dissipation becomes more important as more layers are added because overheating can compromise performance and reliability. Furthermore, there are additional manufacturing challenges because the fabrication processes for these intricate structures are more complicated than those for traditional planar memory.

5. Fabrication

A computer's physical memory is built using semiconductor technology and consists of tiny electronic components that store data in binary form (zeros and ones). The basic building blocks of this memory are transistors and capacitors.

Transistors: Transistors are semiconductor devices that can act as switches or amplifiers for electrical signals. In memory technology, they are used to control the flow of electricity within a circuit. In Static RAM (SRAM), transistors form bistable flip-flops that store each bit of information.

Capacitors: In Dynamic RAM (DRAM), each bit is stored in a capacitor, which holds an electrical charge. A charged capacitor represents a 1, while an uncharged one represents a 0. However, capacitors leak charge over time, so the data stored in DRAM must be refreshed periodically. Because the data is lost when power is removed, DRAM is classified as volatile memory.

A memory cell in DRAM consists of a combination of a transistor and a capacitor, while SRAM uses multiple transistors (typically six) to form a single memory cell without capacitors. In DRAM, these cells are arranged in a grid format (rows and columns) on silicon wafers. Silicon, the primary substrate for most modern memory chips, has semiconductor properties that enable it to conduct electricity under specific conditions and act as an insulator under others.

Multiple memory cells are integrated into larger circuits known as integrated circuits or chips. These ICs can contain millions to billions of cells packed closely together to maximize storage capacity.

The fabrication process of semiconductor memory involves several key steps:

- Doping: Silicon wafers are doped with impurities (such as phosphorus or boron) to create regions that can conduct or insulate electricity effectively, which forms p-type and n-type semiconductors.

- Photolithography: This technique uses light to transfer a geometric pattern from a photomask to a light-sensitive chemical photoresist on the wafer. This creates the intricate pathways and structures needed for transistors and capacitors.

- Etching and Deposition: Etching removes unwanted material from the wafer's surface, while deposition adds thin layers of materials to form the memory cell structures.

As the demand for smaller, faster, and more efficient memory devices grows, sophisticated fabrication techniques have been developed, such as Extreme Ultraviolet (EUV) lithography and Atomic Layer Deposition (ALD).

EUV Lithography

Extreme Ultraviolet (EUV) lithography uses light with a wavelength of about 13.5 nm, which is much shorter than the 193 nm wavelength typical of conventional photolithography. Because of this shorter wavelength, it is possible to print smaller, more precise features on silicon wafers, which allows for the fabrication of highly dense transistors and memory cells.
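The link between wavelength and printable feature size is commonly summarized by the Rayleigh criterion, CD = k₁·λ/NA, which is not stated above and is introduced here only as a rule of thumb. In the sketch below, the numerical apertures (0.33 for EUV, 1.35 for 193 nm immersion lithography) and the process factor k₁ = 0.4 are representative assumptions rather than figures for a specific tool.

```python
def min_feature_nm(wavelength_nm: float, numerical_aperture: float,
                   k1: float = 0.4) -> float:
    """Rayleigh estimate of the smallest printable feature: k1 * lambda / NA."""
    if numerical_aperture <= 0:
        raise ValueError("numerical aperture must be positive")
    return k1 * wavelength_nm / numerical_aperture


euv = min_feature_nm(13.5, 0.33)    # assumed EUV numerical aperture
duv = min_feature_nm(193.0, 1.35)   # assumed 193 nm immersion numerical aperture

print(f"EUV:  ~{euv:.1f} nm")
print(f"193i: ~{duv:.1f} nm")
```

Under these assumptions a single EUV exposure resolves features a few times smaller than a single 193 nm exposure, which is why older nodes relied on multiple patterning to reach comparable dimensions.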

EUV lithography works by reflecting EUV light off a mask that carries the desired circuit patterns and onto a silicon wafer coated with photoresist material. Because EUV light is absorbed by almost all materials, including air and glass, the optical path uses mirrors rather than lenses and is kept in a vacuum. The exposure to EUV light causes chemical changes in the photoresist, making it possible to selectively etch and deposit materials to form intricate circuit structures.

Atomic Layer Deposition (ALD) in Scaling Memory Devices

Atomic Layer Deposition (ALD) is a thin-film deposition technique that builds up materials one atomic layer at a time and allows exceptional control over film thickness and composition. This method is especially valuable for fabricating high-quality dielectric layers and conductive films in advanced memory devices.

ALD relies on sequential, self-limiting surface reactions. The process involves alternately exposing the substrate to different precursor gases, which react with the surface in a highly controlled manner. A typical ALD cycle involves the following steps:

- Introduction of the first precursor, which chemisorbs to the substrate surface.

- Purging of excess precursor and reaction byproducts using an inert gas.

- Introduction of the second precursor, which reacts with the adsorbed layer to form a new film.

- A final purge to remove remaining byproducts and unreacted precursors.

This cycle is repeated multiple times to achieve the desired film thickness with atomic-level precision. However, as the scale of memory devices continues to shrink, even minor deviations in the deposition process can lead to defects in the memory cells.
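Because each cycle adds a fixed increment, film thickness is controlled simply by counting cycles. The sketch below estimates the number of cycles for a target thickness. The growth per cycle of 100 pm (about 1 Å) is an assumed, typical-order value, and the names are hypothetical; thicknesses are kept in integer picometres to avoid floating-point rounding.

```python
# The four steps of one ALD cycle, as listed above.
ALD_CYCLE_STEPS = (
    "pulse first precursor",
    "purge",
    "pulse second precursor",
    "purge",
)


def cycles_for_thickness(target_pm: int, growth_per_cycle_pm: int) -> int:
    """Number of full ALD cycles needed to reach at least target_pm."""
    if target_pm < 0 or growth_per_cycle_pm <= 0:
        raise ValueError("target must be >= 0 and growth per cycle > 0")
    return -(-target_pm // growth_per_cycle_pm)  # ceiling division on integers


GROWTH_PM = 100  # assumed growth per cycle (~1 angstrom)

cycles = cycles_for_thickness(5_000, GROWTH_PM)   # 5 nm film
print(cycles)                                     # 50
print(cycles * len(ALD_CYCLE_STEPS))              # 200 individual process steps
```

The same arithmetic explains why ALD is precise but slow: thickness resolution is one cycle, yet even a film a few nanometres thick takes dozens of pulse and purge steps.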

Despite the promise of these techniques, further miniaturization presents significant challenges, particularly as transistor features shrink below approximately 5 nm. At this scale, quantum tunneling becomes prominent: electrons leak through barriers meant to contain them, leading to increased power consumption and reduced device reliability. Additionally, the higher density of components generates more heat during operation, making effective thermal management crucial, as excessive heat can degrade performance and shorten the lifespan of memory devices.

The reduced spacing between components at nanoscale dimensions also increases the likelihood of interference between adjacent cells, potentially causing data retrieval errors. As a result, the fabrication processes for modern memory technologies become increasingly complex and costly. Traditional semiconductors face material limitations at these scales, encouraging research into advanced alternatives like graphene and transition metal dichalcogenides. While these materials offer potential solutions to some scaling challenges, their integration into existing manufacturing processes remains complex. Nevertheless, ongoing research and innovation continue to push the boundaries and offer promising advances in nanoscale memory technology.

6. Emerging Technologies

The search for alternative and emerging memory technologies is at the forefront of innovation in this field. These technologies aim to address the limitations of current memory systems using new principles and materials. Some of them, although at different stages of research and development, promise to redefine the storage landscape in the near future. A detailed explanation of each technology is outside the scope of this article. Instead, we provide a concise overview of these developments:

Resistive RAM (ReRAM): ReRAM is a kind of non-volatile memory that stores information by varying the resistance across a dielectric material. It provides high density and scalability, supporting multiple bits per cell in multi-level cell (MLC) configurations. ReRAM cells are a promising option for future memory scaling due to their straightforward construction and use of materials that can be miniaturized. Though improvements in material engineering, such as the use of transition metal oxides, are showing promise, issues like endurance and data retention reliability still exist.
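
As a minimal sketch of how a multi-level cell can hold more than one bit, the example below maps a 2-bit value to one of four resistance levels and recovers it by comparing a read-back resistance against thresholds. The resistance values, the threshold rule, and the function names are hypothetical and chosen only for illustration; real devices use their own levels and sensing circuits.

```python
import bisect

# Hypothetical nominal resistances (ohms) for a 2-bit cell, lowest first.
LEVELS_OHMS = [5e3, 2e4, 8e4, 3e5]

# Assumed decision rule: geometric midpoint between neighbouring levels.
THRESHOLDS = [(a * b) ** 0.5 for a, b in zip(LEVELS_OHMS, LEVELS_OHMS[1:])]


def write_cell(value: int) -> float:
    """Return the target resistance for a 2-bit value (0..3)."""
    if not 0 <= value < len(LEVELS_OHMS):
        raise ValueError("value does not fit in this cell")
    return LEVELS_OHMS[value]


def read_cell(resistance_ohms: float) -> int:
    """Recover the stored value by counting how many thresholds are at or below the reading."""
    return bisect.bisect_right(THRESHOLDS, resistance_ohms)


if __name__ == "__main__":
    for value in range(4):
        drifted = write_cell(value) * 1.2  # simulate 20% resistance drift
        print(value, read_cell(drifted))
```

The narrower the gap between levels, the less drift a cell can tolerate before a read returns the wrong value, which is one way to picture the endurance and retention concerns mentioned above.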

Spintronics and MRAM: Spintronics uses electron spin in addition to charge to store data, resulting in technologies such as Magnetic RAM (MRAM). MRAM combines the fast access times of SRAM with the non-volatility of flash memory by storing data in magnetic states. The two primary varieties are Toggle MRAM and Spin-Transfer Torque MRAM (STT-MRAM), the latter of which switches states with spin-polarized currents. Spintronics is an attractive option for future memory devices because it offers the prospect of lower power consumption and faster switching times.

Quantum Memory: Quantum memory exploits quantum mechanical properties such as superposition and entanglement, and may eventually enable extremely high data densities. Because qubits can exist in a superposition of states, they are regarded as potentially more compact information carriers than classical bits. Atomic ensemble, solid-state, and photonic quantum memories are among the approaches under investigation for use in quantum computing, cryptography, and communication, although they are still at the research stage. Significant difficulties remain, such as decoherence and integration with classical systems.

DNA-Based Storage: Using DNA's molecular structure, massive volumes of data can be stored in an extremely durable and compact format. Sequences of the nucleotides Adenine (A), Cytosine (C), Guanine (G), and Thymine (T) can be used to store digital data at an exceptionally high density, possibly up to 215 petabytes per gram of DNA. Although the idea has potential for long-term storage, there are currently some drawbacks, such as the high expense of DNA synthesis and sequencing and the possibility of errors in the data encoding and retrieval processes.
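
The simplest way to picture this encoding is to assign two bits to each nucleotide, so that one byte becomes four bases. The sketch below does exactly that; the specific mapping (A=00, C=01, G=10, T=11) and the function names are assumptions made for illustration. Practical schemes are more elaborate, for example adding error correction and avoiding long runs of the same base.

```python
BASES = "ACGT"  # assumed mapping: A=00, C=01, G=10, T=11


def encode(data: bytes) -> str:
    """Encode bytes as a nucleotide string, two bits per base, high bits first."""
    return "".join(
        BASES[(byte >> shift) & 0b11]
        for byte in data
        for shift in (6, 4, 2, 0)
    )


def decode(strand: str) -> bytes:
    """Invert encode(): every four bases are packed back into one byte."""
    if len(strand) % 4 != 0:
        raise ValueError("strand length must be a multiple of 4")
    out = bytearray()
    for i in range(0, len(strand), 4):
        byte = 0
        for base in strand[i:i + 4]:
            byte = (byte << 2) | BASES.index(base)  # raises ValueError on unknown bases
        out.append(byte)
    return bytes(out)


if __name__ == "__main__":
    strand = encode(b"Hi")
    print(strand)          # prints CAGACGGC
    print(decode(strand))  # prints b'Hi'
```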

Neuromorphic Memory: Modeled after the neural architecture of the human brain, neuromorphic memory systems simulate synaptic activity using memristors, which are non-volatile devices that adjust their resistance based on past electrical activity. This method promises major improvements in power consumption, integration density, and parallel processing. It also permits dynamic learning and memory storage. By combining memory and processing, neuromorphic computing offers an approach that is fundamentally distinct from conventional architectures.
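
The history dependence of a memristive synapse can be illustrated with a deliberately simplified toy model, in which each programming pulse nudges a bounded conductance up or down. The class name, the linear update rule, and all numeric values below are hypothetical; physical memristors behave nonlinearly and vary from device to device.

```python
class ToyMemristor:
    """Toy synapse: conductance moves by a fixed step per pulse and saturates at its bounds."""

    def __init__(self, g_min: float = 1e-6, g_max: float = 1e-4, step: float = 1e-5):
        self.g_min = g_min          # lowest conductance, siemens (assumed)
        self.g_max = g_max          # highest conductance, siemens (assumed)
        self.step = step            # change per pulse, siemens (assumed)
        self.conductance = g_min    # start in the high-resistance state

    def apply_pulse(self, polarity: int) -> None:
        """polarity +1 strengthens the synapse, -1 weakens it; the state is clipped to the bounds."""
        updated = self.conductance + polarity * self.step
        self.conductance = min(self.g_max, max(self.g_min, updated))

    def read_current(self, voltage: float) -> float:
        """Ohm's law read-out: the current encodes the stored weight."""
        return self.conductance * voltage


if __name__ == "__main__":
    synapse = ToyMemristor()
    for _ in range(3):
        synapse.apply_pulse(+1)
    print(synapse.read_current(0.1))  # larger than before the pulses were applied
```

The stored value here is also the quantity used in the computation (the read current), which is the sense in which such devices combine memory and processing.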

Hybrid Memory Architectures: By utilizing the advantages of each memory type while mitigating its drawbacks, hybrid memory architectures optimize performance and density by combining several memory technologies into a single system. For instance, integrating slower, denser non-volatile memories like flash with faster-access volatile memories like DRAM can improve system performance as a whole.
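
A back-of-the-envelope way to see the benefit is the weighted average access time of a two-tier system, where a fraction of requests is served by the fast tier and the rest falls through to the slow tier. The latencies and hit rates below are illustrative assumptions, not measurements of any specific DRAM or flash part.

```python
def average_access_time_ns(hit_rate: float, fast_ns: float, slow_ns: float) -> float:
    """Weighted average latency: hits are served by the fast tier, misses by the slow tier."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * fast_ns + (1.0 - hit_rate) * slow_ns


if __name__ == "__main__":
    DRAM_NS = 100.0        # assumed DRAM access latency
    FLASH_NS = 100_000.0   # assumed flash read latency (100 microseconds)
    for hit_rate in (0.90, 0.99, 0.999):
        avg = average_access_time_ns(hit_rate, DRAM_NS, FLASH_NS)
        print(f"hit rate {hit_rate:.3f}: about {avg:.0f} ns on average")
```

Under these assumed numbers, the average latency stays far below the flash latency as long as most accesses hit the DRAM tier, while total capacity is set mainly by the denser flash tier.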

7. Conclusion

The quest to push the boundaries of memory density in computers is a journey that navigates the interplay between the theoretical and the practical, the known and the unknown. As explored, the limits of memory density are shaped not only by the physical laws of quantum mechanics and thermodynamics but also by the material and engineering constraints that define our current technological capabilities.

Theoretical frameworks, such as the Bekenstein bound, suggest that there are fundamental limits to the amount of information that can be stored, governed by physical parameters like energy and space. However, in practice, we are still far from reaching these theoretical limits. Instead, we are contending with more immediate challenges such as material stability, heat dissipation, signal integrity, and manufacturing complexities as memory cells approach atomic scales. Quantum mechanical effects, including tunneling and thermal noise, present additional hurdles that threaten data integrity and increase power consumption.
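
To make the gap between theory and practice concrete, the sketch below evaluates the Bekenstein bound in its usual form, I ≤ 2πRE / (ħc ln 2), taking E = mc² for a system of mass m enclosed in a sphere of radius R. The 1 kg mass, 10 cm radius, and 1 TB comparison device are arbitrary values chosen for illustration.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
C = 299_792_458.0        # speed of light, m/s


def bekenstein_bits(radius_m: float, mass_kg: float) -> float:
    """Upper bound on information (bits) for a system of given radius and total mass-energy."""
    energy_j = mass_kg * C ** 2
    return 2.0 * math.pi * radius_m * energy_j / (HBAR * C * math.log(2))


if __name__ == "__main__":
    bound = bekenstein_bits(radius_m=0.1, mass_kg=1.0)   # hypothetical 1 kg device, 10 cm radius
    drive_bits = 8e12                                     # a 1 TB drive, for comparison
    print(f"Bekenstein bound: {bound:.2e} bits")
    print(f"Ratio to a 1 TB drive: {bound / drive_bits:.1e}")
```

For these inputs the bound comes out on the order of 10^42 bits, roughly thirty orders of magnitude beyond a terabyte-class device, which is why the practical constraints listed above dominate long before the theoretical limit does.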

Current technologies like DRAM and NAND Flash are nearing their physical limits, driving the development of emerging solutions like ReRAM, spintronics, and 3D memory architectures. These innovations bring new challenges, such as variability in ReRAM and the complexity of 3D stacking. Looking ahead, the future of memory density lies in advancing quantum memory, neuromorphic designs, and hybrid systems that combine different technologies for better performance and efficiency. However, integrating these with existing systems will require overcoming significant technical hurdles. Despite these challenges, the continuous progress in interdisciplinary research and new technologies lays a strong foundation for breakthroughs. As we push the boundaries of memory density, growth remains inevitable, even as practical challenges persist long before we reach theoretical limits.

References

Ielmini, D., & Wong, H. S. P. (2018). In-memory computing with resistive switching devices. Nature Electronics, 1(6), 333–343. https://doi.org/10.1038/s41928-018-0092-2

Zhang, Z., Wang, Z., Shi, T., Bi, C., Rao, F., Cai, Y., Liu, Q., Wu, H., & Zhou, P. (2020). Memory materials and devices: From concept to application. InfoMat, 2(2), 261–290. https://doi.org/10.1002/inf2.12077

Hwang, B., & Lee, J. (2018). Recent Advances in Memory Devices with Hybrid Materials. Advanced Electronic Materials, 5(1). https://doi.org/10.1002/aelm.201800519

Lloyd, S. (2000). Ultimate physical limits to computation. Nature, 406(6799), 1047–1054. https://doi.org/10.1038/35023282

HNF - ENIAC – Life-size model of the first vacuum-tube computer. (n.d.). https://www.hnf.de/en/permanent-exhibition/exhibition-areas/the-invention-of-the-computer/eniac-life-size-model-of-the-first-vacuum-tube-computer.html

1966: Semiconductor RAMs Serve High-speed Storage Needs | The Silicon Engine | Computer History Museum. (n.d.). https://www.computerhistory.org/siliconengine/semiconductor-rams-serve-high-speed-storage-needs/

Moore, G. E. (2006). Cramming more components onto integrated circuits, Reprinted from Electronics, volume 38, number 8, April 19, 1965, pp.114 ff. IEEE Solid-State Circuits Society Newsletter, 11(3), 33–35. https://doi.org/10.1109/n-ssc.2006.4785860

Dennard, R., Gaensslen, F., Yu, H. N., Rideout, V., Bassous, E., & LeBlanc, A. (1974). Design of ion-implanted MOSFET’s with very small physical dimensions. IEEE Journal of Solid-State Circuits, 9(5), 256–268. https://doi.org/10.1109/jssc.1974.1050511

Landauer, R. (1961). Irreversibility and Heat Generation in the Computing Process. IBM Journal of Research and Development, 5(3), 183–191. https://doi.org/10.1147/rd.53.0183

Bekenstein, J. D. (1981). Universal upper bound on the entropy-to-energy ratio for bounded systems. Physical Review D, 23(2), 287–298. https://doi.org/10.1103/physrevd.23.287

Forbes, L., Mudrow, M., & Wanalertlak, W. (2006). Thermal noise and bit error rate limits in nanoscale memories. Electronics Letters, 42(5), 279. https://doi.org/10.1049/el:20063803

Kish, L. B. (2002). End of Moore’s law: thermal (noise) death of integration in micro and nano electronics. Physics Letters A, 305(3–4), 144–149. https://doi.org/10.1016/s0375-9601(02)01365-8

Feng, Q., Yan, F., Luo, W., & Wang, K. (2016). Charge trap memory based on few-layer black phosphorus. Nanoscale, 8(5), 2686–2692. https://doi.org/10.1039/c5nr08065g

Molas, G., & Nowak, E. (2021). Advances in emerging memory technologies: From data storage to artificial intelligence. Applied Sciences, 11(23), 11254. https://doi.org/10.3390/app112311254