Open-Source Internship opportunity by OpenGenus for programmers. Apply now.

In this article, we will be learning about Thundering Herd Problem. This is one of the issue that occurs in Computer Systems during multi-threading.

Table Of Contents:

- What is Thundering Herd Problem and it's Cause.

- It's effect on computer systems

- Cascading Failure

- How to avoid Thundering Herd Problem

- Syncchronisation

- Using Semaphore

- Leaky Bucket and Token Bucket Algorithms

What is Thundering Herd Problem and it's casue.

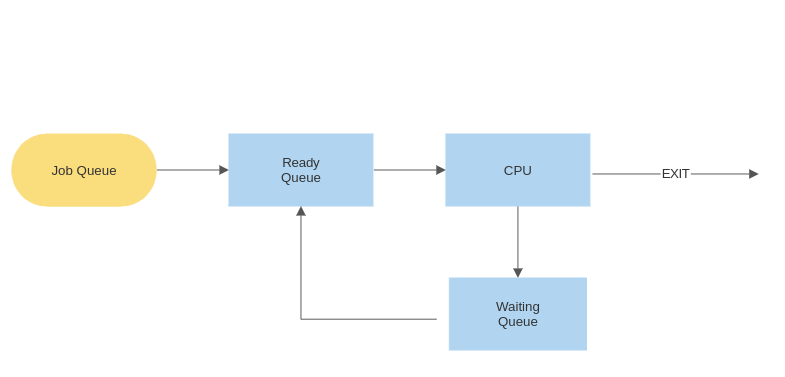

When many processes from waiting or hault state suddenly comes to ready state like a herd and rapidly like Thunder.This is the reason why this is known as Thundering Herd Problem.

Practically, when there are more processes and threads depending on a resource like database, file system, etc. are in waiting state and suddenly the resource gets available then all the those processes in waiting state comes to ready state and tries to execute themselve resulting slow processing and sometimes even system crash..

From the above image we can visualise the problem.How the processes from waiting state affect the system when they are transferred to ready state can be understood more clearly.

function handleRequest(request)

//function to handle request

if(!(processing)

//checing for processes which are not executed

start processing

//starting to execute the thread or process

The above pseudo code is an example where due to lack of sychronization all process that are in waiting state and are not processed will start processing concurrently.

It's Effect On Computer System

- Resource Wastage: When many programs at a time try access a single resurce that may lead to damage the resource. Also when the processor processes many instructions at a time it gets overloaded and damage

- Lagging Of System: The processor gets busy as a result the overal performance of the system decreases.

- System Instability: When the processor gets overloaded the system crashes.

Cascading Failure

Cascading failure is like domino effect of system failure. The best way learn about casacading Failure is when it occurs in the server side due to multiple request(Thundering Herd Problem) by the user, initiating a chain reaction of Server failures.

The whole scenario has been explained below:

Suppose we have 4 servers namely S1,S2,S3 and S4 to deal with all the requests made by the users, consider each server can easily hold off upto 100requests.

Phase-I

Due to some problem the S1 server gets crashed(assume), the 100 requests of S1 server will be distributed among S2,S3 and S4 i.e. adiitional 33 problems to each server.

Phase-II

As we know each server has a capacity of 100 requests only, the S2 server will aslo crash due to more traffic. So, now the requests of S2 [100+33] will now be distributed to S3 and S4. The S3 and S4 server each of them will have to deal with aprrox. 199 requests[100 + 33 + ((100+33)/2)]. This is neraly about double the number the requests each server could handle eventually this will lead to all the servers to crash.

In the above the scenario we can see how a single server failure leads to crash of all other servers casusing HERDS of requests THUNDERING to other server. This is an real life objective related to Thundering Herd Problem that has to be tackled by System Designers. These can be avoided by using Load Balancer or by Traffic Shaping techniques like Token Bucket and Leaky Bucket algorithms.

How To Avoid Thundering Herd Problem

Synchronisation

bool processing = false

//initialising a flag

function handleRequest(reuest)

//function to handle incoming request

if(processing == false)

//checing if any processing is going on

processing = true

//setting the flag as true to indicate processing is going on

process the request

then after it set the flag as false

processing = false

In the abpove pseudo algorithm we have implemented a synchronisation mechanism by adding bool data type to use as a flag to indicate wheather the cpu is busy or not

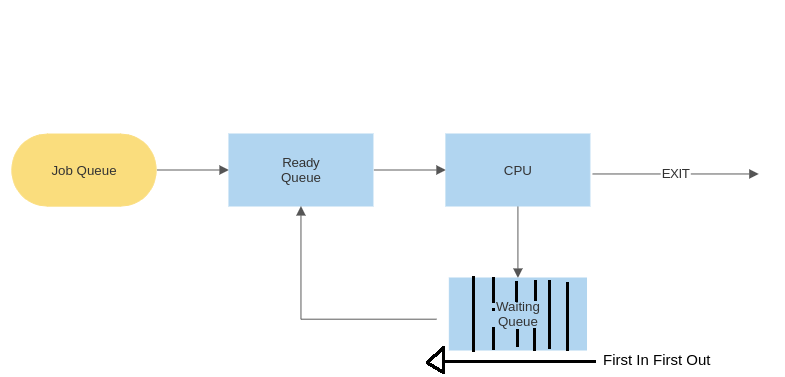

Using Queue in the waiting Stage

By using a queue in the waiting state the process that has been entered frist will get the cahnce to gget executed first(i.e FIFO, First In First Out).

Using Semaphore

Semaphore is also a synchronization mechanism that is used to control the access the shared resources.By limiting the the number of processes or threads that can access the resource we can prevent the thundering herd problem as only limited processes will be executed simulataneously.

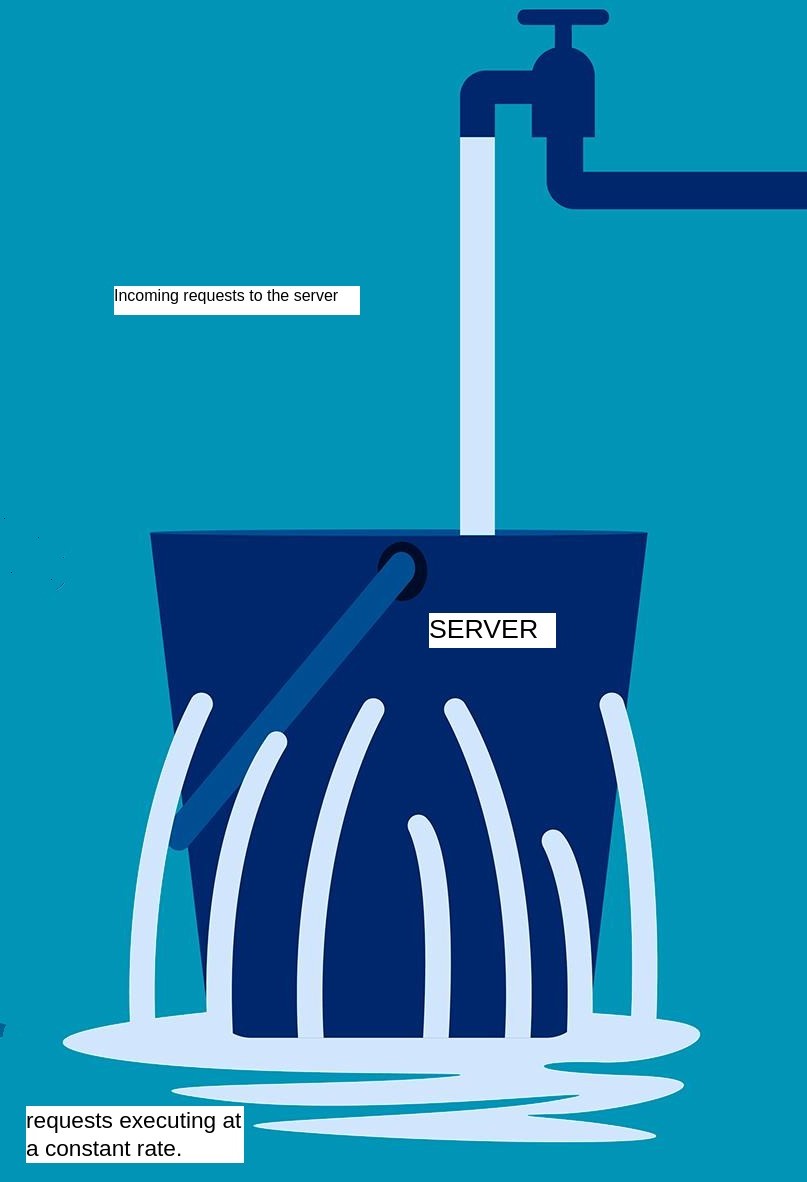

Leaky Bucket

Visualising the servers as a bucket with an hole at the bottom of it. water is poured into the bucket in an uneven rate while the water is flowing out of the bottom at a constant rate. The rate at which the water is getting out is constant but we can control the flow of water i.e poured into the bucket and sometimes even can close them. The above is the whole concept of Leaky Bucket Algorithm.

In the above example bucket is the server, the water that is poured into the bucket is the incoming requests to the server and the water flowing out is the request that are being processed by the server

NOTE: WE CAN CONTROL THE INCOMING REQUESTS EVEN WE CAN STOP BUT THE OUTFLOW OR PROCESSING RATE OF THE SERVERS CANNOT BE MANIPULATED

This will help in making the system more stable as the request will be processed at a constant rate and when there is more request the incoming request rate will be made low while the unprocessed requests will continue to be executed at a constant rate.But during more traffic the constant rate of processing data will eventually slow down the system.So, to deal with heavy traffic Token Bucket Algorithm is used.

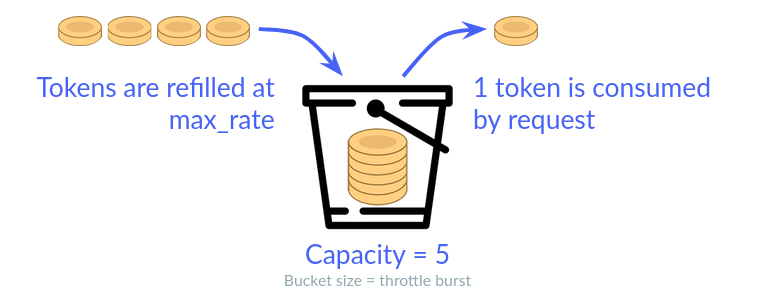

Token Bucket

A bucket is created with a fixed number of tokens.Tokens are added to the bucket at a fixed rate.When a packet is to be transmitted, the system checks to see if there are enough tokens in the bucket to represent the packet's size.If there are enough tokens, the packet is transmitted and the corresponding number of tokens is removed from the bucket.If there are not enough tokens, the packet is not transmitted, and the system waits until there are enough tokens in the bucket to represent the packet's size. It is a simple and effective mechanism for managing network resources and ensuring that network traffic is transmitted at a steady and controlled rate, ensuring resistance to problems like thundering herd problems.

NOTE:TOKENS ARE ADDED TO THE BUCKET AT A FIXED RATE, AND A DATA PACKET CAN BE TRANSMITTED ONLY IF THE BUCKET CONTAINS ENOUGH TOKENS TO REPRESENT IT'S SIZE(FULL CAPACITY OF THE BUCKET).