
This article is an introduction to LLVM. It answers two questions: what is LLVM, and why do we need intermediate code during compilation?

Table of contents.

- Introduction.

- LLVM IR optimization.

- Why intermediate-code?

- Summary.

- References.

Introduction.

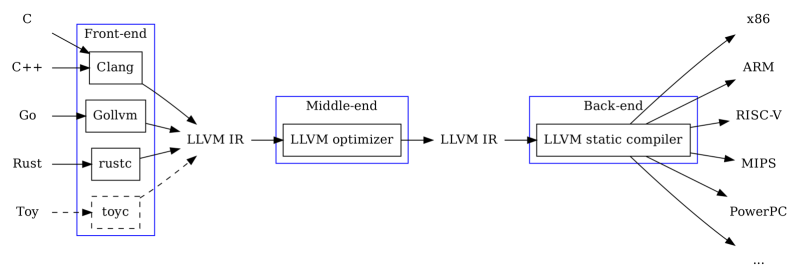

LLVM is a collection of reusable compiler and toolchain technologies, packaged as libraries. We use these tools to build a compiler front-end for any programming language and a back-end for any target machine architecture.

It works by first compiling the source code to an intermediate representation, or IR (the front-end), and then converting this virtual assembly code into machine code for a target machine (the back-end).

In the first step, compiling the source code, the same front-end serves a given programming language regardless of the target machine architecture.

In the second step, the same back-end serves a given machine architecture regardless of the source programming language.

In the figure above, the compiler front-end compiles source code written in high-level languages such as Haskell, Kotlin, C++, and Swift into intermediate code. The middle-end is responsible for optimizing the IR.

The back-end takes the optimized IR and compiles it into machine code for a target machine architecture.
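To make the decoupling concrete, here is a minimal sketch in C++ of two toy front-ends and two toy back-ends that share one IR. Everything in it (`IRInstr`, `parseInfix`, `parsePrefix`, `emitThreeOperand`, `emitTwoOperand`, and the pseudo-assembly syntax) is hypothetical and far simpler than LLVM. It only illustrates why M languages and N targets need M + N components rather than M x N separate compilers.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Toy IR: one three-address instruction, dst = lhs op rhs.
struct IRInstr {
    std::string op;   // "add" or "sub"
    std::string dst;
    std::string lhs;
    std::string rhs;
};
using IR = std::vector<IRInstr>;

// Maps a source-level operator symbol to a toy IR opcode.
std::string opName(const std::string& symbol) {
    assert((symbol == "+" || symbol == "-") && "only + and - are supported");
    return symbol == "+" ? "add" : "sub";
}

// Hypothetical front-end 1: infix source such as "x - y".
IR parseInfix(const std::string& source) {
    std::istringstream in(source);
    std::string lhs, op, rhs;
    in >> lhs >> op >> rhs;
    return {IRInstr{opName(op), "t0", lhs, rhs}};
}

// Hypothetical front-end 2: prefix source such as "- x y".
IR parsePrefix(const std::string& source) {
    std::istringstream in(source);
    std::string op, lhs, rhs;
    in >> op >> lhs >> rhs;
    return {IRInstr{opName(op), "t0", lhs, rhs}};
}

// Hypothetical back-end 1: three-operand pseudo-assembly.
std::string emitThreeOperand(const IR& ir) {
    std::string out;
    for (const IRInstr& inst : ir)
        out += inst.op + " " + inst.dst + ", " + inst.lhs + ", " + inst.rhs + "\n";
    return out;
}

// Hypothetical back-end 2: two-operand pseudo-assembly, which first
// copies the left operand into the destination.
std::string emitTwoOperand(const IR& ir) {
    std::string out;
    for (const IRInstr& inst : ir) {
        out += "mov " + inst.dst + ", " + inst.lhs + "\n";
        out += inst.op + " " + inst.dst + ", " + inst.rhs + "\n";
    }
    return out;
}
```

Either front-end can be paired with either back-end, for example `emitTwoOperand(parsePrefix("- x y"))`, and neither side needs to know about the other.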

LLVM code has three common representations:

- C++ classes.

- Human-readable LLVM assembly files - .ll file extension.

- Bitcode binary representations - .bc file extension.

For example, let's generate a human-readable assembly file for the following C code.

#include<stdio.h>

int main(){

return 1;

}

To generate a .ll file, we run the following command, which writes test.ll:

$ clang -S -emit-llvm -O3 test.c

If clang is not installed, we can install it on Debian or Ubuntu with the following commands:

$ sudo apt update

# install clang

$ sudo apt install clang

Now when we print the .ll file with cat, the output includes the following function definition:

; Function Attrs: norecurse nounwind readnone uwtable

define dso_local i32 @main() local_unnamed_addr #0 {

ret i32 1

}

Above, we return an integer of value 1.

ret is a terminator instruction: an instruction that ends a basic block. LLVM also has binary instructions, bitwise binary instructions, and memory instructions, to name a few.
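As a rough illustration of what "terminating a basic block" means, the sketch below checks that a block ends with a terminator and that no terminator appears earlier. The `Instr` struct and both functions are hypothetical toy code, not LLVM classes, and the opcode list covers only a few of LLVM's terminators.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Toy model of an instruction: only the opcode name matters here.
struct Instr {
    std::string opcode;
};

// A few of LLVM's terminator opcodes; this list is not exhaustive.
bool isTerminator(const Instr& inst) {
    return inst.opcode == "ret" || inst.opcode == "br" ||
           inst.opcode == "switch" || inst.opcode == "unreachable";
}

// A basic block is well formed when it is non-empty, its last
// instruction is a terminator, and no terminator appears earlier.
bool isWellFormedBlock(const std::vector<Instr>& block) {
    if (block.empty()) return false;
    for (std::size_t i = 0; i + 1 < block.size(); ++i) {
        if (isTerminator(block[i])) return false;
    }
    return isTerminator(block.back());
}

// Returns the terminator of a well-formed block.
const Instr& terminatorOf(const std::vector<Instr>& block) {
    assert(isWellFormedBlock(block) && "block must end in a terminator");
    return block.back();
}
```

The body of `main` in the example above corresponds to a block holding the single instruction `ret`, which this check accepts.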

LLVM IR optimization.

The following is an example of optimization by pattern matching on arithmetic identities for an integer X.

- X-X is 0

- X-0 is X

- (X*2)-X is X

The following LLVM intermediate representation contains one instance of each pattern:

⋮ ⋮ ⋮

%example1 = sub i32 %a, %a

⋮ ⋮ ⋮

%example2 = sub i32 %b, 0

⋮ ⋮ ⋮

%tmp = mul i32 %c, 2

%example3 = sub i32 %tmp, %c

⋮ ⋮ ⋮

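Now to optimize it, we use LLVM's C++ pattern-matching helpers, where Op0 and Op1 are the left and right operands of the sub instruction: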

// X - 0 -> X

if (match(Op1, m_Zero()))

return Op0;

// X - X -> 0

if (Op0 == Op1)

return Constant::getNullValue(Op0->getType());

// (X*2) - X -> X

if (match(Op0, m_Mul(m_Specific(Op1), m_ConstantInt<2>())))

return Op1;
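The snippet above depends on LLVM's own headers and is only a fragment of a larger function. As a self-contained sketch of the same three rules, the toy model below uses a hypothetical `Value` struct and a hypothetical `simplifySub` function. Neither is LLVM's API. As in the original, two operands count as "the same X" when they are the same object, so the comparison is by pointer.

```cpp
#include <cassert>

// Toy stand-in for an LLVM value: a constant, an opaque variable,
// or a multiplication of two values.
struct Value {
    enum Kind { Constant, Variable, Mul };
    Kind kind;
    int constant;       // meaningful only when kind == Constant
    const Value* lhs;   // meaningful only when kind == Mul
    const Value* rhs;   // meaningful only when kind == Mul
};

Value makeConstant(int c) { return Value{Value::Constant, c, nullptr, nullptr}; }
Value makeVariable() { return Value{Value::Variable, 0, nullptr, nullptr}; }
Value makeMul(const Value* l, const Value* r) { return Value{Value::Mul, 0, l, r}; }

bool isConstant(const Value* v, int c) {
    return v->kind == Value::Constant && v->constant == c;
}

// Matches v == (x * 2), with the constant on the right-hand side.
bool isMulByTwo(const Value* v, const Value* x) {
    return v->kind == Value::Mul && v->lhs == x && isConstant(v->rhs, 2);
}

// Tries to simplify `op0 - op1`. Returns the simpler value,
// or nullptr when none of the three rules applies.
const Value* simplifySub(const Value* op0, const Value* op1) {
    assert(op0 && op1 && "operands must not be null");
    static const Value zero = makeConstant(0);

    if (isConstant(op1, 0)) return op0;     // X - 0 -> X
    if (op0 == op1) return &zero;           // X - X -> 0
    if (isMulByTwo(op0, op1)) return op1;   // (X*2) - X -> X
    return nullptr;
}
```

Returning `nullptr` when nothing matches lets a caller leave the instruction untouched.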

In general, optimizing IR involves three steps:

- First we find a pattern that is to be transformed.

- Then we make sure the transformation is not only safe but correct.

- Finally, we make the transformation thereby updating the code.
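The three steps above can be sketched on a rewrite whose safety check is not trivial. The example uses a rewrite that this article does not otherwise cover: replacing an unsigned division by a constant with a logical right shift, which is valid only when the constant is a power of two. The `Instr` struct and `strengthReduceUDiv` are hypothetical toy code, not LLVM's implementation.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Toy instruction of the form `opcode lhs, constant`.
struct Instr {
    std::string opcode;   // e.g. "udiv" or "lshr"
    std::string lhs;      // name of the left operand, e.g. "%a"
    std::uint32_t rhs;    // constant right operand
};

bool isPowerOfTwo(std::uint32_t c) {
    return c != 0 && (c & (c - 1)) == 0;
}

// Exact base-2 logarithm; only defined for powers of two.
std::uint32_t log2Exact(std::uint32_t c) {
    assert(isPowerOfTwo(c));
    std::uint32_t shift = 0;
    while ((c >> shift) != 1) ++shift;
    return shift;
}

// Rewrites `udiv x, 2^k` into `lshr x, k`.
// Returns true when the instruction was changed.
bool strengthReduceUDiv(Instr& inst) {
    // Step 1: find the pattern, an unsigned division by a constant.
    if (inst.opcode != "udiv") return false;

    // Step 2: check that the rewrite is correct. For unsigned x,
    // x / c equals x >> log2(c) only when c is a power of two.
    if (!isPowerOfTwo(inst.rhs)) return false;

    // Step 3: transform the instruction in place.
    inst.opcode = "lshr";
    inst.rhs = log2Exact(inst.rhs);
    return true;
}
```

Signed division is deliberately left alone here, because a plain shift rounds differently for negative values.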

Why intermediate-code?

Intermediate code comes in two forms: high-level IR, which is very close to the source code, and low-level IR, which is close to the target machine.

The former is easily generated from source code, and we can transform it to improve the program's performance. It is not preferred for target machine optimization.

The latter is useful for resource allocation (for example, memory and registers) and for instruction selection, to mention a few. It is also preferred for target machine optimizations.

So why do we generate IR?

- It is easy to produce.

- Easy to define and understand.

- So that we can optimize the code early.

- We only use a single IR for analysis and code optimization.

- It supports both low-level and high-level optimizations.

Summary.

LLVM is written in C++ and designed for compile-time, link-time, idle-time, and run-time code optimization. Languages used with LLVM include Rust, Swift, Haskell, Kotlin, Lua, and Objective-C.

GCC compiles code for target machine architectures such as ARM and x86. Through the DragonEgg plugin, which is no longer maintained, it could also emit LLVM intermediate code (IR). In that setup GCC acts as the compiler front-end.

Programming languages use LLVM to build their compilers because LLVM reduces the work involved in compilation.