
Table of Contents

- Introduction

- Object recognition

- Image segmentation

- Object occlusion/depth estimation

- 3D object reconstruction

- Outside-in and Inside-out tracking

- Localization and Mapping

- Challenges

- Conclusion

- Sources

Introduction

Virtual reality (VR) and augmented reality (AR) are two of the most exciting technologies of our time. They have the potential to revolutionize the way we interact with the world around us, and they are already being used in a variety of industries, including gaming, education, and healthcare.

Deep learning is a type of machine learning that is based on artificial neural networks. It has been used to achieve impressive results in a wide range of applications, including image recognition, natural language processing, and speech recognition.

In recent years, deep learning has begun to be used in VR/AR applications, because it can improve the realism, interactivity, and immersion of VR/AR experiences.

Here are some of the ways that deep learning is being used in VR/AR:

Object recognition

Object recognition is one of the most important applications of deep learning in VR/AR. It can be used to identify objects in the real world or in a virtual environment. This information can then be used to improve the realism of the experience, or to provide information about the objects to the user.

For example, a VR game might use object recognition to identify objects that the player can interact with. The game could then provide information about the objects, such as their name, weight, or other properties.

Object recognition works by using deep learning models to identify patterns in images or videos. These models are trained on a large dataset of images or videos that have been labeled with the objects that they contain. Once the models are trained, they can be used to identify objects in real time. Popular convolutional neural networks (CNNs) such as AlexNet, VGGNet, and ResNet have already been employed for object recognition tasks.
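The pattern-finding step can be illustrated with the basic operation a CNN layer applies: it slides a small kernel across the image and records how strongly each location matches that kernel. Below is a minimal pure-Python sketch. The hand-picked vertical-edge kernel and the 4x4 patch are illustrative assumptions. Networks such as AlexNet, VGGNet, and ResNet instead learn many kernels from labeled data and stack many such layers.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the
    response map. Like most CNN libraries, this computes cross-correlation."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Zero out negative responses, as a CNN activation layer does."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hand-picked vertical-edge detector; a trained CNN learns its own kernels.
VERTICAL_EDGE = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

# 4x4 grayscale patch: dark everywhere except a bright right-hand column.
patch = [[0, 0, 0, 9],
         [0, 0, 0, 9],
         [0, 0, 0, 9],
         [0, 0, 0, 9]]

response = relu(conv2d_valid(patch, VERTICAL_EDGE))
print(response)  # [[0.0, 27.0], [0.0, 27.0]]
```

Only the right-hand output column fires, because that is where the dark-to-bright edge lies. A trained CNN combines many such local responses, layer by layer, into evidence for whole objects.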

The accuracy of object recognition models depends on a number of factors, including the quality of the training data, the complexity of the model, and the computational resources available. With good training data and suitable hardware, modern deep learning models can recognize many common objects accurately at real-time frame rates.
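A basic way to check both properties for a given model is to measure its top-1 accuracy and its average per-frame inference time on held-out labeled data. The minimal sketch below uses a hypothetical `toy_model` in place of a trained network. With a real model, the same loop would run over real images.

```python
import time
from typing import Callable, Sequence, Tuple


def evaluate(model: Callable[[object], str],
             samples: Sequence[Tuple[object, str]]) -> Tuple[float, float]:
    """Return (top-1 accuracy, mean inference latency in milliseconds)
    of `model` over labeled (input, true_label) pairs."""
    correct = 0
    total_seconds = 0.0
    for x, label in samples:
        start = time.perf_counter()
        prediction = model(x)
        total_seconds += time.perf_counter() - start
        correct += prediction == label  # True counts as 1
    n = len(samples)
    return correct / n, 1000.0 * total_seconds / n


# Hypothetical stand-in "model": labels an input by its mean brightness.
def toy_model(pixels):
    return "lamp" if sum(pixels) / len(pixels) > 0.5 else "chair"


held_out = [([0.9, 0.8], "lamp"), ([0.1, 0.2], "chair"),
            ([0.7, 0.6], "chair"), ([0.2, 0.3], "chair")]
accuracy, latency_ms = evaluate(toy_model, held_out)
print(f"accuracy={accuracy:.2f}, mean latency={latency_ms:.4f} ms")
```

The toy model misclassifies the third sample, so its accuracy is 0.75. The latency figure depends on the machine running the code.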

Here is an example of how object recognition can be used in VR/AR:

A VR game might use object recognition to identify a chair that the player points their controller at. The game could then provide information about the chair, such as its name, weight, and whether it is comfortable to sit in.
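The chair example can be sketched as three steps. First, take the classifier's raw scores (logits) for whatever the controller points at. Second, convert those scores to probabilities. Third, show information only when the top class is confident enough. The label set, the `OBJECT_INFO` table, the function names, and the 0.6 threshold are hypothetical placeholders, not part of any particular engine or model.

```python
import math

# Hypothetical label set and per-object metadata.
LABELS = ["chair", "table", "lamp"]
OBJECT_INFO = {
    "chair": {"weight_kg": 7.5, "comfortable": True},
    "table": {"weight_kg": 25.0, "comfortable": False},
    "lamp": {"weight_kg": 2.0, "comfortable": False},
}


def softmax(logits):
    """Convert raw class scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def describe_target(logits, min_confidence=0.6):
    """Return (label, info) for the object under the controller ray,
    or None if the model is not confident enough to show a label."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < min_confidence:
        return None
    label = LABELS[best]
    return label, OBJECT_INFO[label]


print(describe_target([4.0, 1.0, 0.5]))
# ('chair', {'weight_kg': 7.5, 'comfortable': True})
print(describe_target([1.0, 1.1, 0.9]))  # None (scores too close to call)
```

The confidence threshold stops the game from labeling an object when the model cannot tell the candidate classes apart.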

Object recognition can also be used to provide information about the environment to the user. For example, a VR headset might use object recognition to identify different types of terrain, such as grass, sand, or water. This information could then be used to provide the user with contextual information, such as the best way to navigate the environment.

Image segmentation

Image segmentation is a valuable technique used in VR/AR applications to divide images into distinct parts, enabling improved realism and providing essential information about objects. Deep learning models, such as fully convolutional networks (FCNs) or U-Net architectures, are commonly employed for image segmentation tasks.

For instance, an AR application can use image segmentation to identify the different parts of a car. Combined with a recognition model, this lets it display detailed information such as the make, model, and year of the vehicle. The segmentation masks can also anchor virtual objects to the car, such as a price tag or a warning sign, enhancing the augmented experience.
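One simple way to attach a virtual label to a segmented car part is to take the bounding box of the part's mask and place the label just above it. This minimal sketch assumes a binary mask for a single part. `overlay_anchor` and its `margin` parameter are hypothetical. A real AR app would also map the 2D anchor into 3D using depth and the camera pose.

```python
def overlay_anchor(mask, margin=1):
    """Given a binary segmentation mask (rows of 0/1) for one car part,
    return its bounding box (left, top, right, bottom) and an (x, y) pixel
    position `margin` rows above the box for drawing a virtual label.
    Returns None if the mask is empty."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for row in mask for c, v in enumerate(row) if v]
    if not rows:
        return None
    top, bottom = min(rows), max(rows)
    left, right = min(cols), max(cols)
    anchor = ((left + right) / 2, max(0, top - margin))  # clamp to image top
    return (left, top, right, bottom), anchor


# Hypothetical mask for a car door in a 5x6 image.
door_mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 0],
    [0, 1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
]
print(overlay_anchor(door_mask))  # ((1, 2, 4, 3), (2.5, 1))
```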

The versatility of image segmentation extends beyond these examples. It can also be used for object tracking, enabling realistic and immersive experiences by tracking the movement of objects in the real world. Additionally, image segmentation can facilitate virtual fitting rooms, enabling users to virtually try on clothes and accessories without the need to physically visit a store. Moreover, in medical applications, image segmentation can aid in diagnosing diseases and injuries, such as identifying tumors in medical images.
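For object tracking, a common simple baseline links masks in consecutive frames by how much they overlap, measured as intersection over union (IoU). The sketch below uses greedy IoU matching, a swapped-in illustrative technique rather than one the text prescribes. The function names and the 0.3 threshold are assumptions.

```python
def mask_iou(a, b):
    """Intersection over union of two equally sized binary masks."""
    inter = union = 0
    for row_a, row_b in zip(a, b):
        for va, vb in zip(row_a, row_b):
            inter += bool(va and vb)
            union += bool(va or vb)
    return inter / union if union else 0.0


def match_tracks(prev_masks, curr_masks, min_iou=0.3):
    """Greedily link each current-frame mask to the previous-frame mask it
    overlaps most, so an object keeps its identity from frame to frame.
    Returns {current_index: previous_index}."""
    pairs = sorted(
        ((mask_iou(p, c), pi, ci)
         for pi, p in enumerate(prev_masks)
         for ci, c in enumerate(curr_masks)),
        reverse=True)  # highest overlap first
    used_prev, matches = set(), {}
    for iou, pi, ci in pairs:
        if iou < min_iou:
            break  # remaining pairs overlap too little
        if pi in used_prev or ci in matches:
            continue  # each mask may be matched only once
        used_prev.add(pi)
        matches[ci] = pi
    return matches


# Two objects in a 1x6 strip; between frames they move toward each other.
prev = [[[1, 1, 0, 0, 0, 0]], [[0, 0, 0, 0, 1, 1]]]
curr = [[[0, 0, 0, 1, 1, 1]], [[0, 1, 1, 0, 0, 0]]]
print(match_tracks(prev, curr))  # {0: 1, 1: 0}
```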

To achieve accurate image segmentation, deep learning models like FCNs or U-Net architectures are trained on annotated datasets, where images are labeled with the various parts or objects they contain. These models can then classify and segment different regions of an image, providing a foundation for enhanced user experiences in VR/AR applications.
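Segmentation networks such as FCNs and U-Nets output a score for every class at every pixel. The final mask comes from picking the highest-scoring class at each pixel. This minimal sketch shows that last step, using a hypothetical three-class car-parts label set and hand-written scores in place of real network output.

```python
# Hypothetical class list for a car-parts segmentation model.
CLASS_NAMES = ["background", "door", "wheel"]


def scores_to_mask(scores):
    """Turn per-class score maps into a label mask.
    scores[c][y][x] is the score for class c at pixel (y, x), as produced by
    the final layer of an FCN or U-Net; each pixel gets the highest-scoring
    class index."""
    num_classes = len(scores)
    height, width = len(scores[0]), len(scores[0][0])
    return [[max(range(num_classes), key=lambda c: scores[c][y][x])
             for x in range(width)]
            for y in range(height)]


scores = [
    [[0.90, 0.10], [0.80, 0.20]],  # background
    [[0.05, 0.70], [0.10, 0.10]],  # door
    [[0.05, 0.20], [0.10, 0.70]],  # wheel
]
mask = scores_to_mask(scores)
print([[CLASS_NAMES[c] for c in row] for row in mask])
# [['background', 'door'], ['background', 'wheel']]
```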

By leveraging image segmentation and deep learning techniques, VR/AR applications can deliver informative, realistic, and interactive experiences, opening up new possibilities across various industries and domains.

Object occlusion/depth estimation

Object occlusion/depth estimation is a technique that helps determine the relative depth and occlusion relationships between objects in a scene. This information is crucial for the realism of VR/AR experiences. A virtual object should be hidden by any real object in front of it, and virtual and real objects should not appear to pass through each other.

Deep learning models can be employed for object occlusion and depth estimation. Instance segmentation models such as Mask R-CNN outline individual objects, while depth estimation networks such as DepthNet predict how far each pixel is from the camera. Trained on suitable data, these models learn the spatial relationships between objects. By analyzing the visual input, they can determine which objects are in front of others and estimate their depth.
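Once a model has produced a per-pixel depth map of the real scene, the occlusion decision is a simple per-pixel comparison. It is the same depth test a renderer's z-buffer performs. This minimal sketch assumes the real depth comes from a depth network or sensor and the virtual depth comes from the renderer. The `composite` name is hypothetical, and string "pixels" stand in for colours.

```python
def composite(camera_px, real_depth, virtual_px, virtual_depth):
    """Per-pixel depth test for AR occlusion. A virtual pixel is drawn only
    where virtual content exists (virtual_depth is not None) and is closer to
    the camera than the real surface reported by the depth model; otherwise
    the camera pixel is kept. Depths are in metres."""
    out = []
    for y, row in enumerate(camera_px):
        out_row = []
        for x, real_pixel in enumerate(row):
            vd = virtual_depth[y][x]
            if vd is not None and vd < real_depth[y][x]:
                out_row.append(virtual_px[y][x])
            else:
                out_row.append(real_pixel)
        out.append(out_row)
    return out


# One image row: a real sofa 1.0 m away fills the middle pixel, and a
# virtual character 1.5 m away covers the left two pixels.
camera = [["wall", "sofa", "wall"]]
real_depth = [[3.0, 1.0, 3.0]]
virtual = [["char", "char", None]]
virtual_depth = [[1.5, 1.5, None]]
print(composite(camera, real_depth, virtual, virtual_depth))
# [['char', 'sofa', 'wall']]
```

The character is drawn in front of the far wall but correctly disappears behind the nearer sofa.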

Utilizing deep learning models for object occlusion/depth estimation allows VR/AR applications, such as games, to provide a more immersive and realistic experience. For example, in an AR game a virtual character that walks behind a real sofa can be correctly hidden by it. In a VR game, depth estimates of the physical room can warn the player before they walk into a real wall or other solid object.

3D object reconstruction

3D object reconstruction is a technique used to generate a three-dimensional representation of an object from a series of two-dimensional images or point clouds. This process is vital for creating realistic and immersive VR/AR experiences involving virtual objects.
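When the input is a depth image (for example from a depth sensor or a monocular depth network), the usual first step toward a point cloud is back-projection through the pinhole camera model. This minimal sketch assumes known camera intrinsics (`fx`, `fy`, `cx`, `cy`; the values here are hypothetical) and depth in metres. Merging point clouds from several views would also require each camera's pose.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map into 3D points in the camera frame.
    depth[v][u] is the depth along the optical axis (metres) at pixel
    column u, row v; values <= 0 mean "no measurement" and are skipped.
    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z > 0:
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


depth = [[2.0, 0.0],
         [1.0, 4.0]]
print(depth_to_points(depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5))
# [(-0.5, -0.5, 2.0), (-0.25, 0.25, 1.0), (1.0, 1.0, 4.0)]
```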

Deep learning models that operate directly on 3D data can be employed in 3D object reconstruction pipelines. Examples include PointNet and PointNet++ for point clouds and MeshNet for meshes. In such pipelines they are typically combined with techniques such as multi-view geometry and volumetric modeling to recover the shape and structure of objects in three-dimensional space.
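The property that lets PointNet consume raw, unordered point clouds can be sketched in a few lines. Every point passes through the same small network, and the per-point features are then merged with a symmetric max-pooling step. This is a heavily simplified, untrained illustration that assumes one random layer with eight features. The published PointNet stacks several layers and adds learned input transforms, and PointNet++ applies the idea hierarchically over local neighbourhoods.

```python
import random


def shared_mlp(point, weights, biases):
    """One fully connected layer + ReLU applied to a single (x, y, z) point.
    The same weights are reused for every point."""
    return [max(0.0, sum(w * p for w, p in zip(w_row, point)) + bias)
            for w_row, bias in zip(weights, biases)]


def global_feature(points, weights, biases):
    """PointNet-style encoding: embed each point with the shared layer, then
    take an element-wise max over all points (a symmetric function), so the
    result does not depend on the order of the points."""
    per_point = [shared_mlp(p, weights, biases) for p in points]
    return [max(column) for column in zip(*per_point)]


random.seed(0)
FEATURES = 8
W = [[random.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(FEATURES)]
B = [random.uniform(-0.1, 0.1) for _ in range(FEATURES)]

cloud = [(0.0, 0.0, 1.0), (0.1, 0.2, 1.1), (-0.2, 0.1, 0.9)]
same_cloud_reordered = cloud[::-1]
print(global_feature(cloud, W, B) == global_feature(same_cloud_reordered, W, B))  # True
```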

By utilizing deep learning models, an AR application can enable users to interact with virtual objects in a more engaging manner. The reconstructed 3D models can provide information about the object or allow users to manipulate and interact with them in the virtual environment.

3D object reconstruction, powered by deep learning, enhances the level of realism and interactivity in VR/AR experiences. It enables users to perceive virtual objects with depth and spatial awareness, further blurring the line between the virtual and real world.

Outside-in and Inside-out tracking

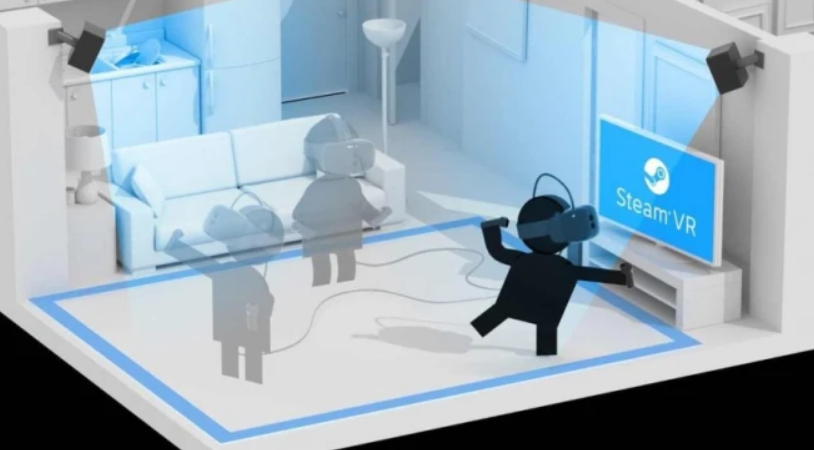

Outside-in tracking is a tracking method commonly used in VR systems like the HTC VIVE. It involves placing external sensors or cameras in the room to track the position and movement of the VR headset and controllers.

The alternative approach is inside-out tracking. Here the VR headset or AR device carries all the necessary sensors and cameras to track its own position and movement, without any external sensors.

Deep learning can enhance both of these tracking methods. For the outside-in approach, deep learning models such as convolutional neural networks (CNNs) can analyze the visual input from the external sensors or cameras. These models can help detect and track the position of the VR headset and controllers more accurately, even in challenging lighting conditions or occlusion scenarios (when the line of sight to a sensor is temporarily blocked by an object).
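Suppose a CNN supplies the pixel location of a headset marker in each external camera image. Classical geometry can then turn those detections into a 3D position. The sketch below assumes two rectified, horizontally offset cameras with known calibration. The function name, calibration values, and marker coordinates are all hypothetical, and commercial systems such as the HTC VIVE use their own tracking schemes rather than exactly this setup.

```python
def triangulate_marker(x_left, x_right, y, focal_px, baseline_m, cx, cy):
    """Return the (X, Y, Z) position in metres, in the left camera's frame,
    of a marker seen by two rectified cameras whose optical centres are
    `baseline_m` apart horizontally. x_left / x_right are the marker's pixel
    columns in each image and y is its (shared) pixel row. Assumes square
    pixels (fx == fy == focal_px) and principal point (cx, cy)."""
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("expected x_left > x_right for a point in front of the cameras")
    z = focal_px * baseline_m / disparity  # depth from disparity: Z = f * B / d
    return (x_left - cx) * z / focal_px, (y - cy) * z / focal_px, z


# Hypothetical calibration and marker detections.
print(triangulate_marker(720, 520, 360, focal_px=800, baseline_m=0.5, cx=640, cy=360))
# (0.2, 0.0, 2.0)
```

A better detector improves the result directly: an error of a few pixels in `x_left` or `x_right` changes the disparity and therefore the estimated depth.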

For inside-out tracking, deep learning can be used to analyze onboard sensor data, such as camera images or inertial measurements, to estimate the position and movement of the VR headset or AR device. Recurrent neural networks (RNNs) or other deep learning architectures can learn temporal dependencies in this data and improve tracking accuracy.
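Here is a minimal sketch of the recurrent idea, assuming a plain Elman RNN, one of the simplest recurrent architectures (production trackers may use LSTMs, GRUs, or other designs). The network carries a hidden state across IMU samples, so each estimate depends on the recent history rather than on a single reading. The function name is hypothetical and the weights are random. The point is the data flow, not the output values.

```python
import math
import random


def rnn_track(imu_window, W_in, W_h, b, W_out):
    """Minimal Elman RNN over a window of IMU samples.
    Each sample x_t = (ax, ay, az, gx, gy, gz). The hidden state is updated as
    h_t = tanh(W_in x_t + W_h h_{t-1} + b), and the final hidden state is read
    out linearly as a 3D position change (dx, dy, dz)."""
    h = [0.0] * len(b)
    for x in imu_window:
        h = [math.tanh(sum(w * xi for w, xi in zip(W_in[k], x))
                       + sum(w * hj for w, hj in zip(W_h[k], h))
                       + b[k])
             for k in range(len(b))]
    return [sum(w * hj for w, hj in zip(row, h)) for row in W_out]


# Random, untrained weights; a real tracker would learn them from
# sensor sequences paired with ground-truth poses.
random.seed(1)
HIDDEN = 4
W_in = [[random.gauss(0.0, 0.3) for _ in range(6)] for _ in range(HIDDEN)]
W_h = [[random.gauss(0.0, 0.3) for _ in range(HIDDEN)] for _ in range(HIDDEN)]
b = [0.0] * HIDDEN
W_out = [[random.gauss(0.0, 0.3) for _ in range(HIDDEN)] for _ in range(3)]

# Ten samples of a headset at rest: gravity on z, slight gyro drift on x.
window = [(0.0, 0.0, 9.81, 0.01, 0.0, 0.0)] * 10
print(rnn_track(window, W_in, W_h, b, W_out))
```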

Comparison

- Latency: Currently, outside-in tracking generally offers lower latency due to dedicated external sensors. However, advancements in deep learning algorithms and hardware optimization are narrowing this gap, and inside-out tracking is catching up.

- Mobility: Inside-out tracking provides greater mobility as it doesn't require external sensors or a fixed play area. Devices like the Oculus Quest offer portability and flexibility, allowing users to use VR anywhere. Outside-in tracking requires a dedicated play space with mounted sensors or base stations, making it less portable and adaptable.
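A back-of-the-envelope estimate shows why latency matters. While a tracked object moves, the pose the system reports lags behind the real pose by roughly speed times latency. This sketch assumes constant speed and ignores pose prediction, which real headsets use to hide part of the latency. The 1 m/s speed is an illustrative assumption.

```python
def tracking_lag_error_mm(speed_m_per_s, latency_ms):
    """Distance (mm) between where a tracked object really is and where the
    system last reported it, assuming constant speed: error = speed * latency.
    The units work out directly because (m/s) * ms = mm."""
    return speed_m_per_s * latency_ms


# Hypothetical controller speed of 1 m/s at several latencies.
for latency in (5, 20, 50):
    print(f"{latency} ms -> {tracking_lag_error_mm(1.0, latency):.0f} mm")
```

At 50 ms, a controller moving at 1 m/s is drawn about 5 cm from where the user's hand actually is, which is easy to notice.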

As technology advances, deep learning techniques will continue to play a vital role in improving tracking accuracy, reducing latency, and enhancing the overall VR/AR experience. The choice between outside-in and inside-out tracking depends on specific requirements, with considerations for latency, mobility, and the level of immersion desired.

Localization and Mapping

Localization and mapping techniques are crucial for AR devices like Microsoft HoloLens to understand and interact with the user's environment. Deep learning can aid in localization and mapping tasks by analyzing sensor data, recognizing landmarks, and improving the accuracy of the mapping algorithms.

Deep learning models such as convolutional neural networks (CNNs) or recurrent neural networks (RNNs) can process data from cameras, depth sensors, or other sensors on AR devices. They typically complement classical simultaneous localization and mapping (SLAM) algorithms rather than replace them. These models can help recognize landmarks, estimate the device's position and orientation, and create accurate maps of the environment.
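Whatever model detects and matches the landmarks, the device still has to convert those matches into a pose. This minimal sketch shows one classical way to do that: least-squares rigid alignment, the 2D form of the Kabsch/Procrustes method. It assumes the landmarks have already been matched. Real AR devices estimate a full 6-DoF pose, and the function name and sample data here are hypothetical.

```python
import math


def estimate_pose_2d(observed, mapped):
    """Least-squares rigid alignment in 2D.
    observed: landmark (x, y) positions in the device frame.
    mapped:   the same landmarks' (x, y) positions in the map frame.
    Returns (theta, tx, ty) with mapped ~= R(theta) * observed + t, i.e. the
    device's heading and position in the map."""
    n = len(observed)
    ox = sum(p[0] for p in observed) / n
    oy = sum(p[1] for p in observed) / n
    mx = sum(q[0] for q in mapped) / n
    my = sum(q[1] for q in mapped) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(observed, mapped):
        px, py, qx, qy = px - ox, py - oy, qx - mx, qy - my  # centre both sets
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    theta = math.atan2(cross, dot)  # optimal rotation angle
    c, s = math.cos(theta), math.sin(theta)
    tx = mx - (c * ox - s * oy)  # translation aligns the centroids
    ty = my - (s * ox + c * oy)
    return theta, tx, ty


# Synthetic check: landmarks seen from a device rotated 30 degrees at (2, -1).
angle = math.pi / 6
ca, sa = math.cos(angle), math.sin(angle)
obs = [(1.0, 0.0), (0.0, 1.0), (2.0, 2.0)]
mapped = [(ca * x - sa * y + 2.0, sa * x + ca * y - 1.0) for x, y in obs]
theta, tx, ty = estimate_pose_2d(obs, mapped)
print(round(math.degrees(theta), 6), round(tx, 6), round(ty, 6))  # 30.0 2.0 -1.0
```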

Challenges

While deep learning holds great potential for VR/AR, there are several challenges that need to be addressed to promote its wider adoption in these domains:

- Data availability: Deep learning models require large amounts of labeled training data. However, collecting comprehensive and diverse datasets for VR/AR applications can be challenging, limiting the training potential of deep learning models.

- Computational resources: Deep learning models can be computationally expensive to train and run. VR/AR devices often have limited computational resources, making it necessary to optimize deep learning algorithms and models to ensure efficient execution on these platforms.

- Latency: VR/AR applications require real-time responsiveness to provide seamless and immersive experiences. The inference latency of large deep learning models can be a hindrance, especially when quick interactions or real-time processing are essential.

- Privacy and ethical considerations: VR/AR applications may collect and process sensitive user data. Deep learning models need to be designed with privacy and ethical considerations in mind, ensuring the protection of user information and adherence to ethical guidelines.
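For the computational-resources challenge, model optimization often starts with quantization. Storing weights as 8-bit integers instead of 32-bit floats cuts their memory footprint by roughly 4x and enables faster integer arithmetic on hardware that supports it. This is a minimal sketch of symmetric per-tensor quantization, one common scheme among several. The function names are hypothetical, and frameworks such as PyTorch and TensorFlow Lite ship their own, more complete quantization tooling.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: store each float weight as an
    integer in [-127, 127] plus one shared float scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate float weights from the int8 values."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
worst = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"max error={worst:.4f}")
```

The rounding error per weight is at most half the scale. Very small weights such as 0.003 collapse to zero, which is why accuracy should be re-checked after quantizing.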

Overcoming these challenges will require advancements in data collection techniques, model optimization for resource-constrained devices, the development of low-latency deep learning architectures, and the establishment of robust privacy and ethical frameworks.
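One practical way to reason about the latency requirement is as a per-frame time budget: at a display refresh rate of f Hz, each frame has 1000 / f milliseconds. This minimal sketch assumes the pipeline stages run one after another (real systems often overlap them) and uses hypothetical stage timings. The refresh rates of 72 Hz and 90 Hz are only examples.

```python
def frame_budget_ms(refresh_hz):
    """Time available to produce one frame at a given display refresh rate."""
    return 1000.0 / refresh_hz


def fits_budget(stage_times_ms, refresh_hz):
    """True if the per-frame stages, run sequentially, finish within one
    refresh interval."""
    return sum(stage_times_ms.values()) <= frame_budget_ms(refresh_hz)


# Hypothetical per-frame timings for a headset pipeline.
stages = {"tracking": 2.0, "segmentation_net": 6.5, "rendering": 4.0}
print(round(frame_budget_ms(90), 2))  # 11.11
print(fits_budget(stages, 90))        # False: 12.5 ms > 11.11 ms
print(fits_budget(stages, 72))        # True: 12.5 ms <= 13.89 ms
```

In this example, shaving just 1.4 ms off the segmentation network would be enough to hit 90 Hz.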

Conclusion

Deep learning is poised to revolutionize VR/AR by enhancing realism, interaction, and immersion. Object recognition, image segmentation, object occlusion/depth estimation, and 3D object reconstruction are some of the ways deep learning is being applied in VR/AR applications.

While challenges such as data availability, computational resources, and latency need to be addressed, deep learning technology continues to evolve rapidly. With advancements in accuracy, efficiency, and user-friendliness, deep learning models will become more adaptable to challenging conditions, run efficiently on resource-constrained devices, and be easier for developers to utilize.

The future of deep learning in VR/AR holds great promise. As the technology matures, we can anticipate more innovative and immersive experiences that leverage deep learning algorithms and models. From gaming and education to healthcare and industrial applications, deep learning will play a vital role in shaping the future of VR/AR.