
Technology is growing faster than ever, and you'll be amazed after reading this article!

You might have already heard about generating a clone of a person or copying someone's voice. And if you follow recent news, you have probably seen how China created an artificial news anchor for reporting!

How are these things possible? What technology is behind these advancements?

This is nothing but the growing practical use of Generative Adversarial Networks (GANs). GANs are an application of deep learning and are built from neural networks. In this article we explore the problems with GANs, divided into two major parts:

- General Problems with GANs

- Technical Disadvantages of GANs

Reading about what can be done with GANs must sound cool, but give it a second thought: is it really cool to have such artificial things, which could do more harm to humans than humans themselves? (I assure you, your first instinct is not wrong.)

Let me take you on a deep dive into how a GAN works.

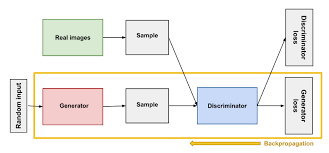

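There are two neural networks, called the Generator and the Discriminator. The Generator turns random noise into a candidate output and passes it to the Discriminator. The Discriminator is shown both real samples and the Generator's outputs and tries to tell which is which. Its verdict is turned into a loss, and the gradient of that loss is backpropagated through the Discriminator into the Generator, so that the Generator's next outputs look more like the real data. The two networks are trained in alternation, each trying to beat the other, until the Discriminator can no longer reliably tell generated samples from real ones.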
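The adversarial loop described above can be sketched in plain Python. This is a toy sketch, not a production GAN, and every name in it (`TinyGAN`, `d_step`, `g_step`, `train`) is hypothetical: the "real" data is assumed to be 1-D samples from a Gaussian with mean 4 and standard deviation 0.5, the Generator is the linear map G(z) = a*z + b, and the Discriminator is a single logistic unit D(x) = sigmoid(w*x + c). The gradients are derived by hand and the Generator uses the common non-saturating loss -log D(G(z)); real GANs use deep networks and an autodiff library instead.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def softplus(t):
    # log(1 + exp(t)) without overflow; -log(sigmoid(u)) == softplus(-u).
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))


class TinyGAN:
    """Toy 1-D GAN. Generator: G(z) = a*z + b. Discriminator: D(x) = sigmoid(w*x + c)."""

    def __init__(self):
        self.a, self.b = 1.0, 0.0  # generator parameters
        self.w, self.c = 0.0, 0.0  # discriminator parameters (D starts at 0.5 everywhere)

    def generate(self, zs):
        return [self.a * z + self.b for z in zs]

    def discriminate(self, x):
        # Probability D assigns to "x is a real sample".
        return sigmoid(self.w * x + self.c)

    def d_loss(self, real, fake):
        # Binary cross-entropy: real samples labelled 1, generated samples labelled 0.
        loss_real = sum(softplus(-(self.w * x + self.c)) for x in real) / len(real)
        loss_fake = sum(softplus(self.w * x + self.c) for x in fake) / len(fake)
        return loss_real + loss_fake

    def d_step(self, real, fake, lr):
        # One gradient-descent step on d_loss with respect to (w, c).
        gw = gc = 0.0
        for x in real:  # derivative of -log D(x)
            g = -(1.0 - self.discriminate(x))
            gw += g * x / len(real)
            gc += g / len(real)
        for x in fake:  # derivative of -log(1 - D(x))
            g = self.discriminate(x)
            gw += g * x / len(fake)
            gc += g / len(fake)
        self.w -= lr * gw
        self.c -= lr * gc

    def g_loss(self, zs):
        # Non-saturating generator loss: mean of -log D(G(z)).
        return sum(softplus(-(self.w * x + self.c)) for x in self.generate(zs)) / len(zs)

    def g_step(self, zs, lr):
        # The Discriminator's verdict is backpropagated through D into (a, b).
        ga = gb = 0.0
        for z in zs:
            x = self.a * z + self.b
            dx = -(1.0 - self.discriminate(x)) * self.w  # d(-log D(x)) / dx
            ga += dx * z / len(zs)
            gb += dx / len(zs)
        self.a -= lr * ga
        self.b -= lr * gb


def train(steps=200, batch=64, lr=0.05, seed=0):
    rng = random.Random(seed)
    gan = TinyGAN()
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]  # assumed "real" data
        fake = gan.generate([rng.gauss(0.0, 1.0) for _ in range(batch)])
        gan.d_step(real, fake, lr)  # 1) Discriminator learns to separate real from fake
        zs = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        gan.g_step(zs, lr)  # 2) Generator learns to fool the updated Discriminator
    return gan
```

Even after a single round (`train(steps=1)`), the Discriminator has learned that larger values look "more real" (`w > 0`) and the Generator has shifted its samples towards the real data (`b > 0`). Printing `gan.b` and `gan.w` every few steps of a longer run is an easy way to watch the two players respond to each other; the exact trajectory depends on the seed and learning rate.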

General Problems with GANs

If everything goes smoothly, then what is the problem with GANs?

- Take one of the most pressing technical issues in the world today: facial recognition and data privacy. Our most sensitive data could easily be exposed if our biometric identity is stolen, and GANs have already proven capable of generating a convincing clone of a person.

- Most of our business and banking transactions now happen online. We are on the verge of depending entirely on the internet (the COVID-19 situation is the best example of this), and if the use of GANs keeps growing at the same rate, trust will become the major issue, because it will be ever harder to tell whether the person or content on the other side is real.

- GANs are developing faster than ever, but they are still hard for smaller tech organisations to use. Many of their advantages have been explored, yet they are far from being fully utilised. GANs are trained with unsupervised learning, and generating results from text or speech with them is not everyone's cup of tea.

Technical Disadvantages of GANs

Let's talk about some of the technical disadvantages of GANs:

- There is still no widely accepted intrinsic evaluation metric that can guide model training and judge complex generated outputs. A research paper addressed this issue by proposing CrossLID, a new metric for evaluating generative models. It assesses the local intrinsic dimensionality (LID) of the input (real) data with respect to neighbourhoods within the generated samples, i.e. it is based on nearest-neighbour distances between samples from the real data distribution and samples from the generator. However, the paper was rejected, and researchers are still working on this issue.

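- Density estimation: a GAN's generator learns to produce samples but does not give an explicit probability density for them. So we cannot directly measure how accurately the model's density matches the real data, or state that a model's distribution is good enough to move forward with. Judging the quality of the generated data is still largely done manually.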

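- GANs are an elegant mechanism for data generation, but because training is unstable (the generator and discriminator are optimised against each other rather than towards a single fixed objective) and unsupervised, they are hard to train and hard to get good output from.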

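- Suppose we have a fully trained model and later need to change something about its output, for example edit one particular existing image. This is an awkward task: a standard GAN has no encoder that maps an image back to the latent vector that would produce it, so the generator must first be inverted, and inversion is not straightforward in GANs.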
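The nearest-neighbour idea behind CrossLID, from the first disadvantage above, can be sketched as follows. This is a simplified illustration, not the paper's reference implementation: it assumes the standard maximum-likelihood LID estimator LID(x) = -((1/k) * sum_i log(r_i / r_k))^(-1), where r_1..r_k are the distances from x to its k nearest neighbours, and it averages that estimate over real points whose neighbours are taken from the generated set. The function names and the default `k=20` are hypothetical.

```python
import math


def lid_mle(query, reference, k):
    """Maximum-likelihood LID estimate of `query` from its k nearest neighbours in `reference`."""
    if len(reference) < k:
        raise ValueError("need at least k reference points")
    dists = sorted(math.dist(query, r) for r in reference)[:k]
    if dists[0] == 0.0:
        raise ValueError("zero neighbour distance; LID estimate is undefined")
    r_k = dists[-1]
    # LID(x) = -( (1/k) * sum_i log(r_i / r_k) )^(-1)
    total = sum(math.log(r / r_k) for r in dists)
    if total == 0.0:
        raise ValueError("all k neighbour distances are equal; LID estimate is undefined")
    return -k / total


def cross_lid(real, generated, k=20):
    """Hypothetical CrossLID sketch: mean LID of real samples w.r.t. generated neighbourhoods."""
    return sum(lid_mle(x, generated, k) for x in real) / len(real)
```

For a real point at the origin whose three nearest generated neighbours lie at distances 1, 2 and 4, the estimate is 1 / ln 2 (about 1.44), and scaling all distances by the same factor leaves it unchanged, since only distance ratios enter the formula. The paper's exact procedure (for example, the feature space and value of k it uses) may differ from this sketch.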
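Because a GAN only hands us samples, the density-estimation point above is usually worked around by fitting a density to the generated samples and scoring held-out real data against it. A Gaussian Parzen-window (kernel density) estimate is the kind of estimate reported in the original GAN paper, and it is known to be unreliable in high dimensions. The minimal 1-D sketch below assumes a fixed, hand-picked `bandwidth`; the function name is hypothetical.

```python
import math


def parzen_log_likelihood(test_points, samples, bandwidth):
    """Average log-density of `test_points` under a Gaussian KDE fitted to 1-D `samples`."""
    n = len(samples)
    # log of the normalising constant n * h * sqrt(2*pi)
    norm = math.log(n * bandwidth * math.sqrt(2.0 * math.pi))
    total = 0.0
    for x in test_points:
        exps = [-0.5 * ((x - s) / bandwidth) ** 2 for s in samples]
        m = max(exps)  # log-sum-exp trick for numerical stability
        total += m + math.log(sum(math.exp(e - m) for e in exps)) - norm
    return total / len(test_points)
```

The result depends heavily on `bandwidth` and on the number of samples, which is one reason such numbers cannot simply certify that a model's distribution is "good enough".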
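The unstable-training point above is often illustrated with the smallest possible two-player game: one player minimises f(x, y) = x * y over x while the other maximises it over y, with the equilibrium at (0, 0). This is not a real GAN, just a sketch of the failure mode; the function names are illustrative. When both players take a gradient step at the same time, every step multiplies the distance from the equilibrium by exactly sqrt(1 + lr^2), so the players spiral away from it. Letting y react to the freshly updated x (alternating updates) keeps the orbit bounded, but it still circles the equilibrium instead of converging.

```python
import math


def simultaneous_gda(x, y, lr, steps):
    # Both players update from the same old point: x descends, y ascends f(x, y) = x * y.
    for _ in range(steps):
        x, y = x - lr * y, y + lr * x
    return x, y


def alternating_gda(x, y, lr, steps):
    # y reacts to the freshly updated x, as in alternating GAN updates.
    for _ in range(steps):
        x = x - lr * y
        y = y + lr * x
    return x, y


x, y = simultaneous_gda(1.0, 0.0, lr=0.1, steps=100)
print(math.hypot(x, y))  # distance from (0, 0) has grown to 1.01 ** 50
```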
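One common way to invert a generator, as needed in the last point above, is to treat the trained generator as a fixed function and run gradient descent on the latent vector until the generated output matches the target. The sketch below assumes a tiny hypothetical generator (`toy_generator`) in place of a trained network and estimates gradients by central finite differences so it needs only the standard library; `invert` and its parameters are illustrative. With a real GAN one would use an autodiff framework, and the loss surface is far less well-behaved, so the search can stall in poor local minima.

```python
import math


def toy_generator(z):
    # Hypothetical stand-in for a trained generator: 2-D latent -> 3-D "image".
    return (math.tanh(z[0]), math.tanh(z[1]), math.tanh(z[0] - z[1]))


def reconstruction_loss(generator, z, target):
    return sum((g - t) ** 2 for g, t in zip(generator(z), target))


def invert(generator, target, z0, lr=0.1, steps=500, h=1e-6):
    """Search for a latent z with generator(z) close to target, by gradient descent on z."""
    z = list(z0)
    for _ in range(steps):
        grad = []
        for i in range(len(z)):
            # Central finite difference; a real implementation would use autodiff.
            zp, zm = list(z), list(z)
            zp[i] += h
            zm[i] -= h
            diff = reconstruction_loss(generator, zp, target) - reconstruction_loss(generator, zm, target)
            grad.append(diff / (2 * h))
        z = [zi - lr * gi for zi, gi in zip(z, grad)]
    return z


target = toy_generator((0.5, -0.3))  # an "image" whose latent code we pretend not to know
z = invert(toy_generator, target, (0.0, 0.0))
```

In this toy case the search recovers a latent vector very close to (0.5, -0.3); only after such an inversion can the latent vector be edited and fed back through the generator.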

In short, GANs take a lot of effort to train, and even more to build and use.