
Reading time: 20 minutes

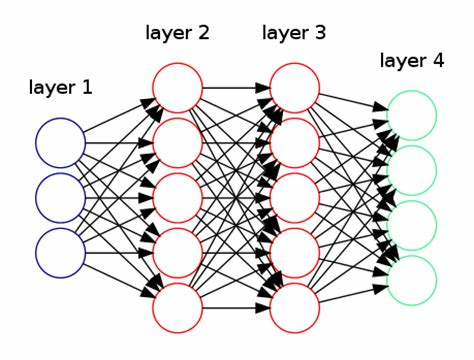

Optimizations are needed to make training and inference of Neural Networks run faster on a particular hardware infrastructure. It is important to preserve the accuracy of a Neural Network while applying these optimizations.

The various types of Neural Network optimizations available are as follows:

Weight pruning

- Element-wise pruning using:

  - magnitude thresholding

  - sensitivity thresholding

  - target sparsity level

  - activation statistics
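The element-wise criteria above can be sketched in plain Python. This is a minimal illustration, not any library's API: the weights are a flat list, the function names are hypothetical, and the sensitivity threshold follows one common formulation (threshold = sensitivity times the standard deviation of the layer's weights), which is an assumption here. Activation-statistics pruning is left out because it needs recorded activations.

```python
import statistics


def magnitude_mask(weights, threshold):
    """Magnitude thresholding: keep a weight (mask 1) only if |w| > threshold."""
    return [1 if abs(w) > threshold else 0 for w in weights]


def sensitivity_mask(weights, sensitivity):
    """Sensitivity thresholding (assumed form): threshold = sensitivity * std(weights)."""
    return magnitude_mask(weights, sensitivity * statistics.pstdev(weights))


def target_sparsity_mask(weights, sparsity):
    """Target sparsity level: prune the `sparsity` fraction of smallest-magnitude weights."""
    n_prune = int(round(sparsity * len(weights)))
    smallest_first = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    mask = [1] * len(weights)
    for i in smallest_first[:n_prune]:
        mask[i] = 0
    return mask


def apply_mask(weights, mask):
    """Element-wise product of weights and mask; pruned entries become zero."""
    return [w * m for w, m in zip(weights, mask)]


weights = [0.8, -0.05, 0.3, -0.6, 0.01, 0.2]
by_magnitude = apply_mask(weights, magnitude_mask(weights, 0.1))
by_sparsity = apply_mask(weights, target_sparsity_mask(weights, 0.5))
```

Here a threshold of 0.1 keeps the four weights whose magnitude exceeds it, while a target sparsity of 0.5 prunes exactly half of the weights regardless of their values. Keeping the mask separate from the weights is what makes soft pruning possible later.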

Structured pruning

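Pruning is a technique that removes weights from a Neural Network, which in turn reduces the number of computations required. Structured pruning removes whole groups of weights, such as kernels, filters, channels, rows, or columns, instead of individual elements, which makes the savings easier to realize on standard hardware.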

- Convolution:

  - 2D (kernel-wise)

  - 3D (filter-wise)

  - 4D (layer-wise)

  - channel-wise structured pruning

- Fully-connected:

  - column-wise structured pruning

  - row-wise structured pruning

- Structure groups (for example, groups of 4 filters pruned together)

- Structure-ranking using weights or activations criteria such as:

  - Lp-norm

  - APoZ (Average Percentage of Zeros)

  - gradients

  - random

- Block pruning
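A minimal sketch of filter-wise (3D) structured pruning with an L1-norm ranking, together with the APoZ activation criterion. It assumes each convolution filter has been flattened into a list of weights and that activations are post-ReLU values grouped per channel; all function names are hypothetical.

```python
def l1_norm(values):
    return sum(abs(v) for v in values)


def rank_filters_l1(filters):
    """Filter indices ordered from least to most important (smallest L1 norm first)."""
    return sorted(range(len(filters)), key=lambda i: l1_norm(filters[i]))


def prune_filters(filters, fraction):
    """Zero out entire filters (structures), not individual weights."""
    n_prune = int(fraction * len(filters))
    pruned = set(rank_filters_l1(filters)[:n_prune])
    return [[0.0] * len(f) if i in pruned else list(f) for i, f in enumerate(filters)]


def apoz(activations_per_channel):
    """Average Percentage of Zeros per channel; a high APoZ marks a pruning candidate."""
    return [sum(1 for a in acts if a == 0) / len(acts) for acts in activations_per_channel]


filters = [
    [0.5, -0.5, 0.1],     # L1 norm about 1.1
    [0.01, 0.02, -0.01],  # L1 norm about 0.04
    [1.0, 0.0, -0.2],     # L1 norm about 1.2
    [0.1, -0.1, 0.05],    # L1 norm about 0.25
]
pruned_filters = prune_filters(filters, 0.5)
```

Ranking by gradients or at random only changes the sort key; a model-thinning step would then physically remove the zeroed filters.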

Control

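- Soft pruning (the mask is applied on the forward pass only) and hard pruning (neurons are permanently disconnected)

- Dual weight copies (the loss is computed on the masked weights, but the unmasked weights are updated)

- Model thinning to permanently remove pruned neurons and connections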
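The control options can be illustrated with a toy single-output linear unit. This is a hypothetical sketch (the class and function names are not from any library): the unit keeps a dense weight copy and a mask, computes its output from the masked weights, and applies the gradient to the dense copy, which is the dual-weight-copy idea; recomputing the mask from the dense weights is soft pruning. The thinning helper assumes layers without biases and an activation with f(0) = 0, such as ReLU.

```python
class DualCopyUnit:
    """Single-output linear unit y = sum(w_i * x_i) holding a dense weight copy and a mask."""

    def __init__(self, weights):
        self.dense = list(weights)       # unmasked copy, always updated
        self.mask = [1] * len(weights)   # 1 = active, 0 = pruned

    def masked_weights(self):
        return [w * m for w, m in zip(self.dense, self.mask)]

    def forward(self, x):
        # The output (and therefore the loss) uses the masked weights.
        return sum(w * xi for w, xi in zip(self.masked_weights(), x))

    def sgd_step(self, x, target, lr):
        # For L = 0.5 * (y - target)^2, dL/dw_i = (y - target) * x_i.
        err = self.forward(x) - target
        # The gradient is applied to the dense copy, including pruned weights,
        # so a pruned weight keeps evolving and may later be revived.
        self.dense = [w - lr * err * xi for w, xi in zip(self.dense, x)]

    def soft_prune(self, threshold):
        # Soft pruning: only the mask changes; the dense weights are kept.
        self.mask = [1 if abs(w) > threshold else 0 for w in self.dense]


def thin(layer1, layer2):
    """Model thinning for two fully-connected layers (no biases, f(0) = 0 activation).

    layer1[i] holds the incoming weights of hidden neuron i, and column i of
    layer2 reads that neuron's output. Neurons whose incoming weights are all
    zero are removed from both layers.
    """
    keep = [i for i, row in enumerate(layer1) if any(w != 0 for w in row)]
    return [layer1[i] for i in keep], [[row[i] for i in keep] for row in layer2]
```

Hard pruning would instead zero the dense weights permanently and stop updating them, after which `thin` can drop the dead neurons and shrink the next layer accordingly.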

Schedule

- Compression scheduling: Flexible scheduling of pruning, regularization, and learning rate decay

- One-shot and iterative pruning

- Automated gradual pruning (AGP) schedule for pruning individual connections and complete structures

- Element-wise and filter-wise pruning sensitivity analysis
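The automated gradual pruning schedule of Zhu and Gupta (2017) raises the target sparsity quickly at first and more slowly near the end, following s_t = s_f + (s_i - s_f)(1 - (t - t_0)/(n Δt))^3. A minimal sketch, using step counts in place of n Δt; the parameter names are hypothetical:

```python
def agp_sparsity(step, start_step, end_step, initial_sparsity, final_sparsity):
    """Target sparsity at `step` under a cubic automated gradual pruning schedule."""
    if step <= start_step:
        return initial_sparsity
    if step >= end_step:
        return final_sparsity
    progress = (step - start_step) / (end_step - start_step)
    return final_sparsity + (initial_sparsity - final_sparsity) * (1.0 - progress) ** 3


# Sparsity targets every 2 steps between step 0 and step 10, ending at 80%.
schedule = [agp_sparsity(t, 0, 10, 0.0, 0.8) for t in range(0, 11, 2)]
```

At each scheduled step, a pruner such as the target-sparsity criterion would re-prune to the new level and training continues in between (iterative pruning); one-shot pruning corresponds to jumping straight to the final sparsity.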

Regularization

- L1-norm element-wise regularization

- Group Lasso or group variance regularization
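A minimal sketch of the two penalties, added to the training loss with a chosen strength. L1 acts on individual weights, while Group Lasso sums the L2 norm of each group (for example, one filter), so it pushes whole groups toward zero together and prepares them for structured pruning. This uses the basic Group Lasso form without per-group size scaling; group variance regularization is not shown, and the function names are hypothetical.

```python
import math


def l1_penalty(weights, strength):
    """L1-norm element-wise regularization: strength * sum of |w|."""
    return strength * sum(abs(w) for w in weights)


def group_lasso_penalty(groups, strength):
    """Group Lasso (basic form): strength * sum of the L2 norms of the groups."""
    return strength * sum(math.sqrt(sum(w * w for w in g)) for g in groups)


def l1_subgradient(weights, strength):
    """Subgradient of the L1 penalty, added to the loss gradient during training."""
    return [strength * (1 if w > 0 else -1 if w < 0 else 0) for w in weights]
```

For example, `group_lasso_penalty([[3.0, 4.0], [0.0, 0.0]], 1.0)` is 5.0: the all-zero group adds nothing, which is why the penalty favors zeroing entire groups.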

Quantization

- Post-training quantization

- quantization-aware training
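A minimal sketch of symmetric linear quantization to signed integers, assuming a per-tensor scale derived from the largest absolute value. Post-training quantization applies `quantize` to already-trained values; quantization-aware training instead inserts `fake_quantize` into the forward pass so the network learns to tolerate the rounding error (gradients are commonly passed through the rounding with a straight-through estimator). All names are hypothetical.

```python
def symmetric_scale(values, num_bits=8):
    """Scale mapping the largest |value| to the largest signed integer (127 for 8 bits)."""
    qmax = 2 ** (num_bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    return max_abs / qmax if max_abs > 0 else 1.0


def quantize(values, scale, num_bits=8):
    """Round to the nearest integer step and clamp to the symmetric range [-qmax, qmax]."""
    qmax = 2 ** (num_bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]


def dequantize(q_values, scale):
    return [q * scale for q in q_values]


def fake_quantize(values, scale, num_bits=8):
    """Quantize then dequantize: values stay float but carry the rounding error."""
    return dequantize(quantize(values, scale, num_bits), scale)


# Post-training: the scale comes from the trained weights (or calibration activations).
weights = [0.0, 0.5, -1.27, 1.27]
scale = symmetric_scale(weights)
q_weights = quantize(weights, scale)
```

With 8 bits, each value is stored as an integer in [-127, 127], and the reconstruction error per value is at most half a quantization step.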

Knowledge distillation

- Training with knowledge distillation, in combination with pruning, regularization, and quantization methods
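A minimal sketch of the knowledge distillation loss proposed by Hinton et al.: the student matches the teacher's temperature-softened output distribution, blended with ordinary cross-entropy on the true label. The temperature and weighting (`temperature`, `alpha`) are hypothetical defaults, not values from the source.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """alpha * T^2 * KL(teacher || student at temperature T) + (1 - alpha) * hard-label CE."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    hard_ce = -math.log(softmax(student_logits)[label])
    # The T^2 factor keeps soft-target gradients on a scale comparable to the hard loss.
    return alpha * temperature ** 2 * kl + (1 - alpha) * hard_ce
```

Because this loss only needs the student's and teacher's logits, the student can be pruned, regularized, or quantized at the same time, which is what combining distillation with the other methods amounts to.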

Conditional computation

- Early Exit
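Early Exit attaches extra classifier heads at intermediate depths so that easy inputs can stop before running the whole network. A minimal sketch, assuming the exit criterion is a threshold on the top softmax probability (other criteria exist, such as the entropy threshold used by BranchyNet); the stages and heads are hypothetical callables.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def early_exit_predict(x, stages, exit_heads, threshold):
    """Run stages in order; stop at the first head whose top probability >= threshold.

    Returns (predicted_class, index_of_exit_taken). The last exit always returns.
    """
    h = x
    last = len(stages) - 1
    for depth, (stage, head) in enumerate(zip(stages, exit_heads)):
        h = stage(h)
        probs = softmax(head(h))
        confidence = max(probs)
        if confidence >= threshold or depth == last:
            return probs.index(confidence), depth
    raise ValueError("stages must not be empty")


# Toy two-stage "network" with one exit head after each stage.
stages = [lambda h: h + 1.0, lambda h: h * 2.0]
exit_heads = [lambda h: [h, 0.0], lambda h: [0.0, h]]
```

With a threshold of 0.9, the input 5.0 is confident enough to leave at the first exit, while 0.0 runs through both stages. Raising the threshold trades speed for accuracy.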