
Reading time: 15 minutes

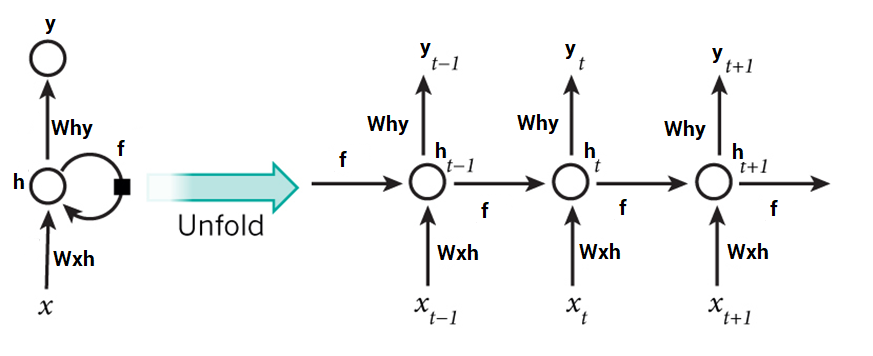

Recurrent Neural Networks (RNNs) are designed to work with sequence prediction problems. Sequence prediction problems come in many forms and are best described by the types of inputs and outputs supported.

Some examples of sequence prediction problems include:

- One-to-Many: An observation as input mapped to a sequence with multiple steps as an output.

- Many-to-One: A sequence of multiple steps as input mapped to a single class or quantity prediction.

- Many-to-Many: A sequence of multiple steps as input mapped to a sequence with multiple steps as output. The Many-to-Many problem is often referred to as sequence-to-sequence, or seq2seq for short.
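To make the three input/output shapes concrete, here is a minimal sketch of a plain RNN forward pass in NumPy. The weights are random and untrained, and all names (`rnn_forward`, `W_xh`, `W_hh`, `W_hy`) are illustrative assumptions rather than any library's API. The One-to-Many case is shown with one common convention (the observation at the first step, zeros afterwards); other setups, such as feeding each output back in as the next input, are equally valid.

```python
import numpy as np

def rnn_forward(x_seq, W_xh, W_hh, b_h):
    """Run a plain RNN over a sequence and return the hidden state at every step."""
    h = np.zeros(W_hh.shape[0])
    states = []
    for x_t in x_seq:                          # one update per time step
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)                    # shape: (timesteps, hidden_size)

rng = np.random.default_rng(0)
timesteps, n_features, hidden_size, n_outputs = 5, 3, 4, 2

# Random, untrained weights: only the shapes matter in this sketch
W_xh = rng.normal(scale=0.1, size=(hidden_size, n_features))
W_hh = rng.normal(scale=0.1, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)
W_hy = rng.normal(scale=0.1, size=(n_outputs, hidden_size))

x_seq = rng.normal(size=(timesteps, n_features))
states = rnn_forward(x_seq, W_xh, W_hh, b_h)

# Many-to-One: read out only the final hidden state
many_to_one = W_hy @ states[-1]                # shape: (n_outputs,)

# Many-to-Many: read out every hidden state
many_to_many = states @ W_hy.T                 # shape: (timesteps, n_outputs)

# One-to-Many: a single observation at step 0, zeros afterwards (one convention)
padded = np.zeros((timesteps, n_features))
padded[0] = rng.normal(size=n_features)
one_to_many = rnn_forward(padded, W_xh, W_hh, b_h) @ W_hy.T

print(many_to_one.shape, many_to_many.shape, one_to_many.shape)
```

The same recurrent core serves all three cases; what changes is which time steps receive real input and which hidden states are read out.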

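Recurrent neural networks were traditionally difficult to train.

The Long Short-Term Memory (LSTM) network is a type of Recurrent Neural Network designed to overcome these training difficulties, in particular the vanishing gradient problem that makes it hard for a plain RNN to learn long-range dependencies.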
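For reference, a single LSTM time step can be sketched in NumPy as below. This follows the standard gate formulation (input, forget, and output gates plus a candidate cell value). The stacked weight layout and the names `lstm_step`, `W`, `U`, and `b` are assumptions chosen for this illustration, and the weights are random rather than trained.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM step. W: (4*H, D), U: (4*H, H), b: (4*H,), gates stacked as i, f, o, g."""
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])            # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])        # forget gate: how much old cell state to keep
    o = sigmoid(z[2 * H:3 * H])    # output gate: how much cell state to expose
    g = np.tanh(z[3 * H:4 * H])    # candidate values for the cell state
    c = f * c_prev + i * g         # additive cell-state update
    h = o * np.tanh(c)             # new hidden state
    return h, c

rng = np.random.default_rng(0)
D, H = 3, 4                        # input size and hidden size (arbitrary)
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)

h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(6, D)):    # run over a 6-step random sequence
    h, c = lstm_step(x_t, h, c, W, U, b)

print(h.shape, c.shape)
```

The cell state `c` is updated by addition rather than being squashed through a nonlinearity at every step, which is the usual explanation for why gradients survive over longer sequences than in a plain RNN.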

RNNs and LSTMs have had the most success when working with sequences of words and paragraphs, a field generally called natural language processing (NLP).

This includes both sequences of text and sequences of spoken language represented as a time series. They are also used as generative models that require a sequence output, such as generating handwriting.

RNNs can be used on:

- Text data

- Speech data

- Classification prediction problems

- Regression prediction problems

- Generative models

Recurrent neural networks are generally not appropriate for tabular datasets, such as you would see in a CSV file or spreadsheet, where the rows have no sequential order for the network to exploit. They are also generally not a good fit for image data input.

RNNs should not be used for:

- Tabular data

- Image data

On time series forecasting problems, autoregression methods, even simple linear ones, often perform better than RNNs and LSTMs, so it is worth trying them as a baseline first.
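As an illustration of what such a method looks like, here is a minimal sketch of a linear autoregression model fitted by ordinary least squares with NumPy. The helper names `fit_ar` and `predict_next` are hypothetical, and the series is synthetic data generated only for this example. In practice a dedicated time series library would usually be a better choice.

```python
import numpy as np

def fit_ar(series, p):
    """Fit AR(p) coefficients by least squares. Returns [intercept, lag1, ..., lagp]."""
    series = np.asarray(series, dtype=float)
    # The row for target series[t] holds [1, series[t-1], ..., series[t-p]]
    rows = [np.concatenate(([1.0], series[t - p:t][::-1]))
            for t in range(p, len(series))]
    X = np.array(rows)
    y = series[p:]
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

def predict_next(series, coef):
    """One-step-ahead forecast from the last p observations."""
    p = len(coef) - 1
    lags = np.asarray(series[-p:], dtype=float)[::-1]   # most recent value first
    return coef[0] + coef[1:] @ lags

# Synthetic AR(2) series: y[t] = 0.6*y[t-1] - 0.2*y[t-2] + noise
rng = np.random.default_rng(42)
n = 2000
y = np.zeros(n)
for t in range(2, n):
    y[t] = 0.6 * y[t - 1] - 0.2 * y[t - 2] + rng.normal(scale=1.0)

coef = fit_ar(y, p=2)
forecast = predict_next(y, coef)
print("estimated coefficients:", coef)
print("next-step forecast:", forecast)
```

The estimated lag coefficients should land close to the 0.6 and -0.2 used to generate the data, which makes this a quick sanity check before comparing against a neural model.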