
NASNet stands for Neural Architecture Search (NAS) Network and is a Machine Learning model. Its key principles differ from those of standard, hand-designed models like GoogLeNet, and the approach behind it may bring a major breakthrough in AI.

Introduction

We are currently living in the golden age of Artificial Intelligence. In 2020, people benefit from artificial intelligence every day: music and media streaming services, Google Maps, Uber, and Alexa, to name a few. Be it health care, gaming, finance, or the automotive industry, AI is present everywhere!

Three closely related terms come up again and again:

1) Artificial Intelligence:

AI is an umbrella term that covers both ML and DL. As the name suggests, AI means intelligence that is "artificial": programmed by humans to perform tasks that normally require human intelligence.

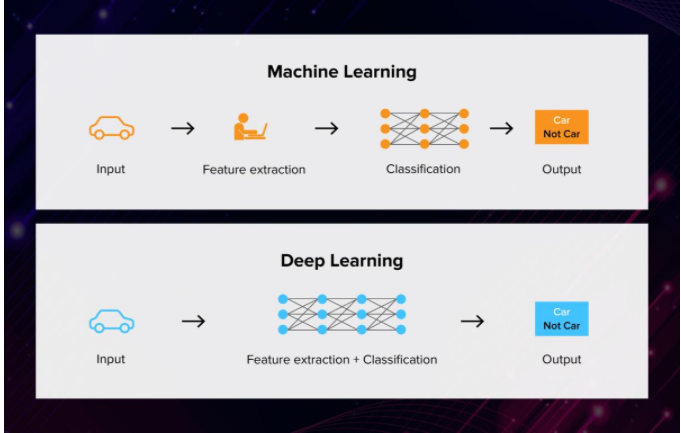

2) Machine Learning:

A subset of AI, Machine Learning provides systems the ability to automatically learn and improve from experience without being explicitly programmed.

In ML, there are different algorithms (e.g. neural networks) that help to solve problems.

3) Deep Learning:

Deep Learning is a subset of machine learning that uses multi-layered neural networks, whose structure is loosely inspired by the human nervous system, to learn directly from data.

While both ML and DL fall under the broad category of artificial intelligence, deep learning is what powers the most human-like artificial intelligence.

Democratizing ML - Neural Architecture Search (NAS)

We are in the pioneering age of ML, especially since 2012, when several breakthroughs greatly improved the performance and accuracy of deep neural networks. These technologies are now widely accepted, but building them still requires scarce expertise and resources, so there is a long way to go in democratizing Machine Learning (making it accessible to all organizations, perhaps even to every person in an organization).

Hence, Neural Architecture Search (NAS) is an important step towards democratizing ML and addressing fundamental efficiency and automation issues of these technologies.

Need for "Neural Architecture Search":

Have you heard of the ImageNet Large Scale Visual Recognition Challenge (ILSVRC)? It is an annual competition whose tasks use subsets of the ImageNet dataset. The goal of the challenge was to promote the development of better computer vision techniques.

Well, let's take a look at the GoogLeNet model, the winner of the ILSVRC 2014 competition.

This architecture has 22 layers in total!

Can you imagine designing this network by hand? It has 22 layers, and designing such a network manually is an expensive trial-and-error process that is largely restricted to experts.
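To put the scale in numbers, consider a deliberately simplified, hypothetical setting that is not GoogLeNet's real design space: a plain chain of L = 22 layers in which every layer picks one of |O| = 5 candidate operations. The number of distinct architectures is then

```latex
|\mathcal{A}| = |\mathcal{O}|^{L} = 5^{22} = 2{,}384{,}185{,}791{,}015{,}625 \approx 2.4 \times 10^{15}
```

and that is before choosing any hyperparameters such as filter counts or branching structure.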

AutoML and Neural Architecture Search (NAS) are the new kings of the deep learning castle. They aim to reach high accuracy on a machine learning task with far less manual design effort, although the search itself can be computationally expensive. In simple words, NAS means using AI to design AI.

Neural Architecture Search (NAS):

When we talk about developing neural networks for a particular task, we expect it to give us the best performance possible.

- Arriving at a well-optimized architecture requires strong domain expertise and specialized skills.

- There will be situations where we may not be aware of the state-of-the-art techniques or their limits.

- The entire process of designing neural networks will involve a lot of trial-and-error, which in itself can be very time-consuming and expensive.

Although most popular and successful model architectures are designed by human experts, it doesn’t mean we have explored the entire network architecture space and settled down with the best option.

NAS TO THE RESCUE!

- Neural Architecture Search (NAS) automates network architecture engineering.

- It is an algorithm that searches for the neural network architecture that achieves the best performance on a given task.

- The pioneering works by Zoph & Le (2017) and Baker et al. (2017) have attracted a lot of attention to the field of Neural Architecture Search (NAS), leading to many interesting ideas for better, faster, and more cost-efficient NAS methods.

- Commercial services such as Google’s AutoML and open-source libraries such as Auto-Keras make NAS accessible to the broader machine learning community.

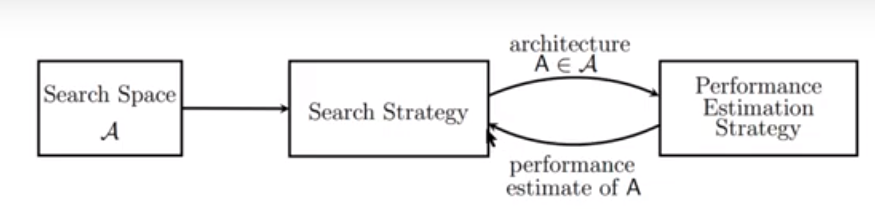

The general setup of NAS involves three components:

a) Search Space

b) Search Strategy

c) Performance Estimation Strategy
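The three components fit together in a simple loop: the search strategy draws candidates from the search space and keeps whichever one the performance estimator scores highest. The sketch below is a minimal illustration under loudly stated assumptions: the `(depth, width)` encoding, `SEARCH_SPACE`, and `toy_estimate` are hypothetical, and `toy_estimate` is an instant formula standing in for actually training and validating a network. Random search is used as the strategy only because it is the simplest.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple

Architecture = Tuple[int, int]  # (depth, width): a hypothetical encoding

# Search space: the allowed values for each design choice (illustrative only).
SEARCH_SPACE: Dict[str, List[int]] = {
    "depth": [2, 4, 8],
    "width": [16, 32, 64],
}


def sample_architecture(rng: random.Random) -> Architecture:
    """Draw one candidate architecture from the search space."""
    return (rng.choice(SEARCH_SPACE["depth"]), rng.choice(SEARCH_SPACE["width"]))


def toy_estimate(arch: Architecture) -> float:
    """Performance estimation: a hypothetical stand-in for validation accuracy.

    A real estimator would build and train the network. This formula simply
    peaks at depth=4, width=32 so that the example runs instantly.
    """
    depth, width = arch
    return 1.0 - abs(depth - 4) / 10 - abs(width - 32) / 100


def nas_loop(
    estimate: Callable[[Architecture], float], budget: int, seed: int = 0
) -> Tuple[Optional[Architecture], float]:
    """Search strategy: plain random search over `budget` sampled candidates."""
    rng = random.Random(seed)
    best_arch: Optional[Architecture] = None
    best_score = float("-inf")
    for _ in range(budget):
        arch = sample_architecture(rng)
        score = estimate(arch)  # feedback from the performance estimator
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


best, score = nas_loop(toy_estimate, budget=200)
print(best, score)
```

Swapping `nas_loop` for a smarter strategy, or `toy_estimate` for real training, changes one component without touching the other two.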

Let's understand each of these components.

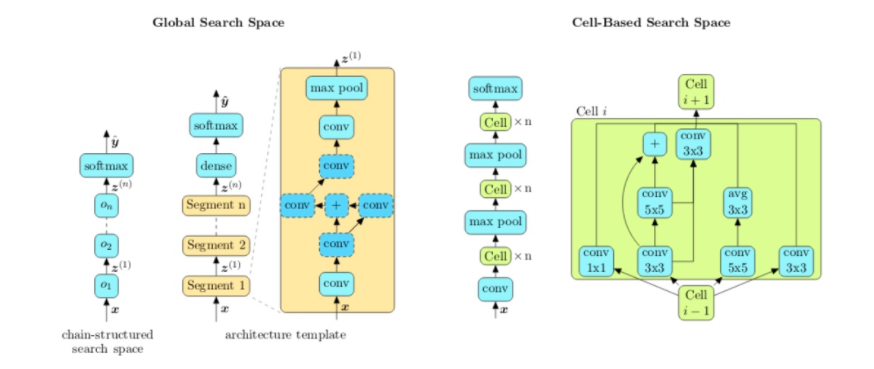

Search Space:

In simple terms, the search space defines all possible architectures that can be considered for a given problem. One can view a neural network as a function that transforms an input variable 'x' into an output variable 'y' through a series of operations (convolutions, pooling, activation functions, or addition/concatenation).

The NAS search space can then be seen as a subset of all such functions, restricted to the operations and connection patterns the designer allows. Given a deep learning problem, there are two fundamental groups of search spaces:

a) Global Search Space:

i) This space allows large degrees of freedom regarding the arrangement of operations, so it can represent almost any kind of architecture.

ii) This space has a set of allowed operations, such as convolutions, pooling, dense layers with activation, and global average pooling, with different hyperparameter settings (like the number of filters, filter width, and filter height). Also, unlike a cell-based space, it does not rely on repeated architectural patterns.

iii) It has certain constraints, such as not allowing dense layers before the first convolution operation, or not allowing a neural network to start with pooling as its first operation.
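A global search space can be sketched as "any chain of allowed operations, minus the chains that break the constraints". The example below is a minimal sketch assuming a hypothetical operation vocabulary (`OPERATIONS`) and a simple chain topology; hyperparameters such as filter counts are left out for brevity. It encodes the two example constraints from the text and samples valid candidates by rejection sampling.

```python
import random
from typing import List

# Hypothetical operation vocabulary for a chain-structured global search space.
OPERATIONS = ["conv3x3", "conv5x5", "maxpool", "dense", "global_avg_pool"]
POOLING = {"maxpool", "global_avg_pool"}


def is_valid(arch: List[str]) -> bool:
    """Apply the two example constraints from the text."""
    if not arch or arch[0] in POOLING:
        return False  # a network must not start with pooling
    first_conv = next((i for i, op in enumerate(arch) if op.startswith("conv")), None)
    if first_conv is None:
        return False  # assumption: every candidate needs at least one convolution
    return "dense" not in arch[:first_conv]  # no dense layer before the first convolution


def sample_global(rng: random.Random, depth: int) -> List[str]:
    """Rejection sampling: draw random chains until one satisfies the constraints."""
    if depth < 1:
        raise ValueError("depth must be at least 1")
    while True:
        arch = [rng.choice(OPERATIONS) for _ in range(depth)]
        if is_valid(arch):
            return arch


rng = random.Random(1)
print(sample_global(rng, depth=5))
```

Rejection sampling is the simplest way to respect such constraints; with many constraints it can waste draws, and a real system might instead build candidates layer by layer from only the currently allowed operations.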

b) Cell-based Search Space:

i) This space focuses on discovering the architecture of specific cells that can be combined to assemble the entire neural network.

ii) A cell-based search space is based upon the observation that many effective manually designed architectures contain repetitions of fixed structures (take a look at the GoogLeNet image above), also called cells.

iii) Such architectures often consist of smaller-sized cells that are stacked to form a larger architecture.

iv) The main benefit of the cell-based search space is that it yields architectures that are smaller and more effective, and that can also be composed into larger architectures.
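The idea of "search a small cell, then stack it" can be sketched as follows. This is a minimal illustration, not NASNet's actual encoding: the `Node` tuple format, the operation names, and the normal/reduction split are assumptions made for the example, loosely modeled on the common pattern of repeating normal cells with reduction cells between stages. Only the two cells would be searched; the stacking pattern is fixed.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical node encoding: (input_a, op_a, input_b, op_b). Inputs 0 and 1 are
# the cell's two inputs; index 2 onward refers to earlier nodes inside the cell.
Node = Tuple[int, str, int, str]


@dataclass(frozen=True)
class Cell:
    name: str
    nodes: Tuple[Node, ...]


def stack_cells(normal: Cell, reduction: Cell, repeats: int, stages: int) -> List[str]:
    """Assemble a network skeleton: `repeats` normal cells per stage,
    with one reduction cell between consecutive stages."""
    layers: List[str] = []
    for stage in range(stages):
        layers.extend([normal.name] * repeats)
        if stage < stages - 1:
            layers.append(reduction.name)
    return layers


# The searched part: two small cells (contents are illustrative only).
normal_cell = Cell("normal", ((0, "sep_conv3x3", 1, "identity"),
                              (0, "avg_pool3x3", 2, "sep_conv5x5")))
reduction_cell = Cell("reduction", ((0, "max_pool3x3", 1, "sep_conv7x7"),))

print(stack_cells(normal_cell, reduction_cell, repeats=2, stages=3))
```

Raising `repeats` (for example from 2 to 6) grows the network from 8 to 20 cells without searching the cells again, which is how one small discovered cell can be composed into larger architectures.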

Performance Estimation

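- The performance estimation strategy estimates how well a candidate neural network will perform. The simplest approach fully trains each candidate and measures its accuracy, but because this is very expensive, many strategies try to approximate performance without fully training every candidate (for example, by training for fewer epochs or on less data).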

- For every architecture in the search space, it returns a number corresponding to the architecture's performance, typically the model's accuracy (or another chosen metric) on a held-out validation set.

- This estimate is fed back to the search strategy, which uses it to propose better models in the next iteration. Some methods reuse information from earlier candidates (such as trained weights), while others train every new candidate from scratch.
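Two cheap tricks from the list above can be combined in a small wrapper: train each candidate for only a few epochs (a lower-fidelity estimate) and never re-train a candidate that has already been scored. This is a sketch under assumptions: `train_and_validate` is a hypothetical user-supplied function, and `fake_train_and_validate` below merely counts calls instead of training anything.

```python
from typing import Callable, Dict, Hashable, List, Tuple


class PerformanceEstimator:
    """Caches low-fidelity performance estimates for candidate architectures.

    `train_and_validate(arch, epochs)` is a hypothetical, user-supplied callable
    that trains `arch` for `epochs` epochs and returns its accuracy on a held-out
    validation split (the test set is not used during the search).
    """

    def __init__(self, train_and_validate: Callable[[Hashable, int], float], epochs: int) -> None:
        self.train_and_validate = train_and_validate
        self.epochs = epochs  # a small budget gives a cheaper, lower-fidelity estimate
        self.cache: Dict[Tuple[Hashable, int], float] = {}

    def __call__(self, arch: Hashable) -> float:
        key = (arch, self.epochs)
        if key not in self.cache:  # never re-train a candidate that was already scored
            self.cache[key] = self.train_and_validate(arch, self.epochs)
        return self.cache[key]


# Hypothetical stand-in for real training that records how often it is invoked.
calls: List[Hashable] = []


def fake_train_and_validate(arch: Hashable, epochs: int) -> float:
    calls.append(arch)
    return epochs / 10


estimator = PerformanceEstimator(fake_train_and_validate, epochs=5)
estimator(("conv3x3", 32))
estimator(("conv3x3", 32))  # served from the cache
print(len(calls))
```

A search strategy would simply call `estimator(arch)` and use the returned score to choose its next candidates. The trade-off is that low-fidelity scores may rank architectures differently from full training.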

Search Strategy

At its core, NAS relies on a search strategy.

The search strategy decides which architectures to pass to the performance estimator, aiming to find good architectures quickly while wasting as little effort as possible on testing bad ones.

There are many search strategies such as random and grid search, gradient-based strategies, evolutionary algorithms, and reinforcement learning strategies.

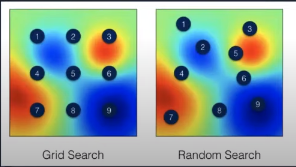

A) Grid and Random Search:

i) The simplest search strategies are the grid search and random search.

ii) The grid search systematically screens the search space whereas the random search randomly picks architectures from the search space to be tested by the performance estimation strategy.

iii) Both strategies are viable for very small search spaces, in particular when the problem at hand is tuning a small number of hyperparameters (random search usually outperforms grid search), but they quickly become impractical as the search space grows.
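The difference between the two strategies is easy to see in code: grid search walks the full Cartesian product of choices, while random search evaluates a fixed budget of random draws. The sketch below assumes a hypothetical three-dimensional space and a toy scoring function (`toy_score`) in place of real validation accuracy.

```python
import itertools
import math
import random
from typing import Callable, Dict, Sequence, Tuple

Config = Tuple[int, ...]
Space = Dict[str, Sequence[int]]


def grid_size(space: Space) -> int:
    """Number of configurations grid search must evaluate."""
    return math.prod(len(v) for v in space.values())


def grid_search(space: Space, score: Callable[[Config], float]) -> Config:
    """Systematically evaluate every combination of choices."""
    return max(itertools.product(*space.values()), key=score)


def random_search(space: Space, score: Callable[[Config], float],
                  budget: int, seed: int = 0) -> Config:
    """Evaluate `budget` combinations drawn uniformly at random."""
    rng = random.Random(seed)
    candidates = [tuple(rng.choice(v) for v in space.values()) for _ in range(budget)]
    return max(candidates, key=score)


# Hypothetical toy objective standing in for validation accuracy.
def toy_score(cfg: Config) -> float:
    layers, filters, kernel = cfg
    return -abs(layers - 4) - abs(filters - 32) / 16 - abs(kernel - 3)


space: Space = {"layers": [2, 4, 8], "filters": [16, 32, 64], "kernel": [3, 5]}
print(grid_size(space), grid_search(space, toy_score), random_search(space, toy_score, budget=5))
```

Here the grid needs 18 evaluations; adding just one more choice with three options would triple that to 54, which is why both strategies stop scaling as the search space grows.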

B) Gradient-based Search :

i. NAS can be formulated as an optimization problem and tackled with gradient-based search.

ii. Because NAS search spaces are discrete, gradients are not directly available.

iii. Therefore, the discrete architecture space must be relaxed into a continuous space, from which a discrete architecture can be derived after the search.

iv. The theoretical foundations of gradient-based NAS are still limited.

v. However, this approach has shown excellent search efficiency in practical applications.
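The continuous relaxation can be illustrated with the softmax trick popularized by DARTS: instead of picking one operation, the model outputs a softmax-weighted mix of all candidate operations, so the mixing logits `alpha` become differentiable, and the final discrete choice is the operation with the largest weight. This is a heavily simplified sketch: the scalar "operations" in `OPS` and the target `y = 2x` are toy assumptions, and unlike DARTS there are no network weights to train alongside `alpha`.

```python
import math
from typing import Callable, Dict, List, Tuple

# Candidate operations on a scalar input (a toy stand-in for conv/pool/skip ops).
OPS: Dict[str, Callable[[float], float]] = {
    "identity": lambda x: x,
    "double": lambda x: 2 * x,
    "zero": lambda x: 0.0,
    "negate": lambda x: -x,
}


def softmax(alpha: List[float]) -> List[float]:
    m = max(alpha)
    exps = [math.exp(a - m) for a in alpha]
    total = sum(exps)
    return [e / total for e in exps]


def search(xs: List[float], ys: List[float], lr: float = 0.5,
           steps: int = 200) -> Tuple[str, List[float]]:
    """Gradient descent on architecture logits `alpha` (mean squared error),
    then derive a discrete operation via argmax of the softmax weights."""
    names = list(OPS)
    alpha = [0.0] * len(names)
    for _ in range(steps):
        w = softmax(alpha)
        grad = [0.0] * len(names)
        for x, y in zip(xs, ys):
            outs = [OPS[n](x) for n in names]
            mixed = sum(wi * oi for wi, oi in zip(w, outs))  # weighted mix of all ops
            err = mixed - y
            for j in range(len(names)):
                # d(mixed)/d(alpha_j) = w_j * (out_j - mixed) for a softmax mixture
                grad[j] += 2 * err * w[j] * (outs[j] - mixed) / len(xs)
        alpha = [a - lr * g for a, g in zip(alpha, grad)]
    w = softmax(alpha)
    best = max(range(len(names)), key=lambda j: w[j])
    return names[best], w


xs = [-1.0, -0.5, 0.5, 1.0]
ys = [2 * x for x in xs]
op, weights = search(xs, ys)
print(op, [round(v, 3) for v in weights])
```

The discrete architecture is only read off at the end (the argmax), which is the "derive a discrete architecture from its continuous representation" step described above.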

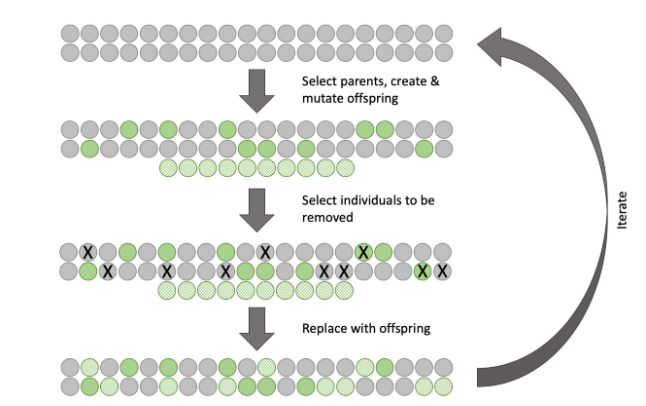

C) Evolutionary algorithm:

i) An evolutionary algorithm (EA) is an optimization algorithm that mimics biological evolution (selection, mutation, and recombination) to find an optimal design under specific constraints.

ii) Researchers have adopted EAs to evolve artificial neural networks.

iii) A characteristic of the EA methodology is that the components of the neural network architecture are generated through this evolutionary process: candidates are repeatedly mutated and recombined, and the fittest ones survive.
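A bare-bones evolutionary search looks like this: keep a population of architectures, pick a parent by tournament selection, mutate one of its layers, and let the child replace the weakest member. This is a generic steady-state EA written for illustration, not a specific published NAS method; the operation list, `TARGET`, and `toy_fitness` are hypothetical, and in real NAS the fitness would come from the performance estimation strategy.

```python
import random
from typing import Callable, List

OPS = ["conv3x3", "conv5x5", "maxpool", "identity"]  # hypothetical op vocabulary
Genome = List[str]


def mutate(parent: Genome, rng: random.Random) -> Genome:
    """Copy the parent and replace one randomly chosen layer's operation."""
    child = list(parent)
    i = rng.randrange(len(child))
    child[i] = rng.choice([op for op in OPS if op != child[i]])
    return child


def evolve(fitness: Callable[[Genome], float], depth: int = 6, pop_size: int = 20,
           generations: int = 1000, tournament: int = 5, seed: int = 0) -> Genome:
    rng = random.Random(seed)
    population = [[rng.choice(OPS) for _ in range(depth)] for _ in range(pop_size)]
    for _ in range(generations):
        # Tournament selection: the fittest of a random sample becomes the parent.
        parent = max(rng.sample(population, tournament), key=fitness)
        child = mutate(parent, rng)
        # Steady-state replacement: the child replaces the worst member if at least as fit.
        worst = min(range(pop_size), key=lambda i: fitness(population[i]))
        if fitness(child) >= fitness(population[worst]):
            population[worst] = child
    return max(population, key=fitness)


# Hypothetical fitness: how many layers match an arbitrary target pattern.
TARGET = ["conv3x3", "conv3x3", "maxpool", "conv5x5", "conv5x5", "identity"]


def toy_fitness(genome: Genome) -> float:
    return sum(a == b for a, b in zip(genome, TARGET))


print(evolve(toy_fitness))
```

Only mutation is used here; recombination (crossover between two parents) is a common addition, and some NAS variants remove the oldest rather than the worst individual to avoid over-trusting noisy fitness scores.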

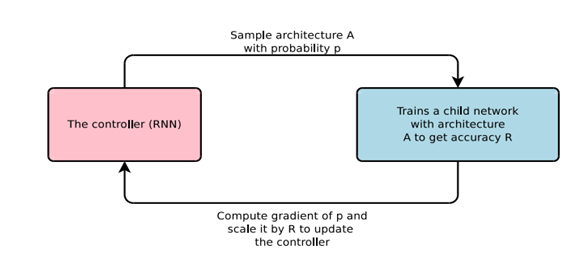

D) Reinforcement Learning(RL)

i) The initial design of NAS (Zoph & Le 2017) involves an RL-based controller that proposes child model architectures for evaluation. The controller is implemented as a Recurrent Neural Network (RNN).

ii) NAS itself became popular with the introduction of RL-based NAS in 2017.

iii) The controller network is trained as in an RL task, using a popular policy-gradient algorithm called "REINFORCE". The controller samples architectures from the search space according to a probability distribution, let's say p.

iv) The output of the controller is a variable-length sequence of tokens that configures a child network architecture. The child network is trained, its validation accuracy is used as the reward, and the controller's parameters are updated from this reward using policy gradients.

v) This process is iterated until convergence or timeout.
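The sample, reward, and update cycle above can be sketched with REINFORCE on a much simpler controller. Assumptions, stated plainly: instead of an RNN emitting tokens, each decision in the hypothetical `CHOICES` table gets its own independent softmax distribution (so decisions do not condition on each other), and `toy_reward` replaces the validation accuracy of a trained child network. A moving-average baseline is subtracted from the reward to reduce gradient variance.

```python
import math
import random
from typing import Callable, Dict, List

# Hypothetical decisions the controller makes for each child network.
CHOICES: Dict[str, List[int]] = {"kernel": [1, 3, 5, 7], "filters": [16, 32, 64]}


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]


def reinforce(reward_fn: Callable[[Dict[str, int]], float], steps: int = 2000,
              lr: float = 0.1, seed: int = 0) -> Dict[str, List[float]]:
    rng = random.Random(seed)
    logits = {k: [0.0] * len(v) for k, v in CHOICES.items()}
    baseline = 0.0
    for _ in range(steps):
        # 1. Sample an architecture from the controller's distribution p.
        probs = {k: softmax(l) for k, l in logits.items()}
        picks = {k: rng.choices(range(len(CHOICES[k])), weights=probs[k])[0] for k in CHOICES}
        arch = {k: CHOICES[k][i] for k, i in picks.items()}
        # 2. Reward: a toy stand-in for the child network's validation accuracy.
        reward = reward_fn(arch)
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward  # moving-average baseline
        # 3. Policy-gradient step: d log p(choice) / d logits = onehot - probs.
        for k, i in picks.items():
            for j in range(len(logits[k])):
                logits[k][j] += lr * advantage * ((1.0 if j == i else 0.0) - probs[k][j])
    return {k: softmax(l) for k, l in logits.items()}


# Hypothetical reward that prefers kernel=3 and filters=32.
def toy_reward(arch: Dict[str, int]) -> float:
    return (1.0 if arch["kernel"] == 3 else 0.0) + (1.0 if arch["filters"] == 32 else 0.0)


final = reinforce(toy_reward)
print({k: [round(p, 2) for p in v] for k, v in final.items()})
```

After training, most of the probability mass should sit on the rewarded choices; in real NAS each reward requires training a child network, which is what makes this approach so expensive.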

Many other search methods have also been used in neural architecture search, several of which have achieved impressive performance.

Conclusion:

- NAS has successfully produced deep neural networks with a variety of architectures, generated in particular using RL and evolutionary algorithms, especially in image processing and computer vision.

- As with any booming technology, further research is required to make NAS more generic, since many NAS methods are still too expensive for realistic applications.

- One of the main issues of NAS is search efficiency. Consider RL-based NAS: it requires thousands of GPU-days of searching and training to achieve state-of-the-art computer vision results.

This new direction of Neural Architecture Search poses many challenges, but it also offers a chance for another breakthrough in the field of Artificial Intelligence.