A tensor processing unit (TPU) is a proprietary processor designed by Google for neural network inference and announced in 2016. It is deployed in Google's data centers and powers applications such as Google Search, Street View, Google Photos and Google Translate.

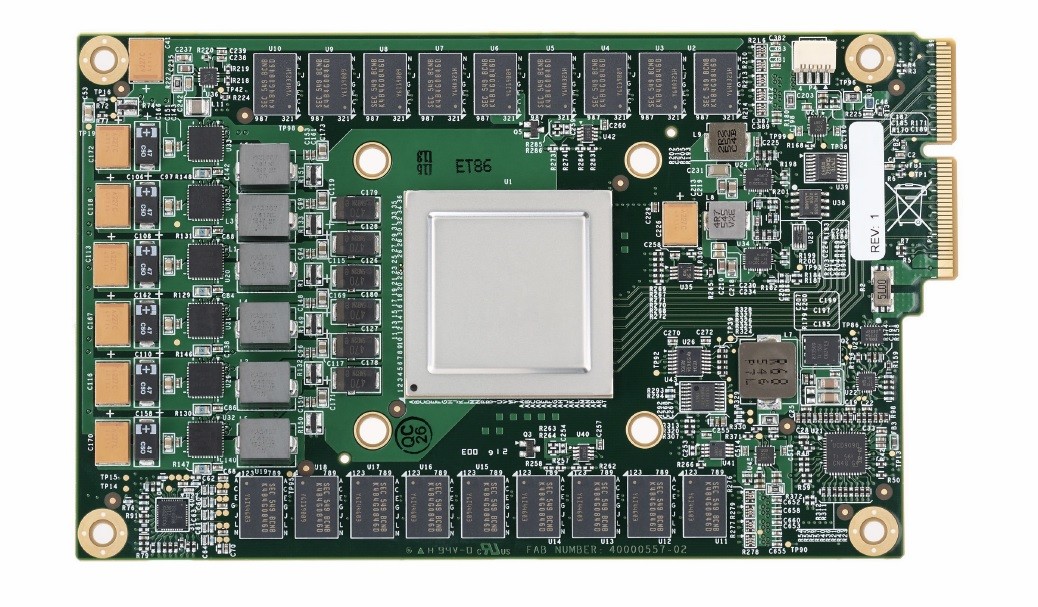

This is how a TPU looks like:

Origin of TPUs

Google first discussed building an Application-Specific Integrated Circuit (ASIC) for neural networks as early as 2006, to meet their growing needs in computation and power consumption, but at the time the demand did not justify custom hardware.

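By 2013 the need had become urgent: Google projected that neural networks could double the computation demand on its data centers, and meeting that demand with conventional processors would have meant roughly doubling the data centers. Since ASIC development normally takes years, Google ran the TPU, itself an ASIC, as a fast-tracked project: the first TPU version took fifteen months from start to deployment.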

Norm Jouppi was the technical lead of the TPU project.

Thus, the TPU was publicly announced in 2016.

TPU instruction set

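The TPU's instruction set follows the Complex Instruction Set Computer (CISC) style: it has a small number of high-level instructions, each of which can do a large amount of work, such as an entire matrix multiplication.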
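To make the CISC idea concrete, here is a toy interpreter in Python. It is a sketch under loud assumptions: the opcode names (`READ_HOST`, `READ_WEIGHTS`, `MATMUL`, `ACTIVATE`, `WRITE_HOST`), the `ToyTPU` class and its dictionary-based memories are hypothetical and are not the real TPU encoding. What it shows is that a single instruction, such as `MATMUL`, stands for a whole matrix multiplication rather than one scalar operation.

```python
from dataclasses import dataclass, field

# Hypothetical opcodes for illustration only; this is not the real TPU encoding.
READ_HOST, READ_WEIGHTS, MATMUL, ACTIVATE, WRITE_HOST = range(5)


@dataclass
class ToyTPU:
    host_memory: dict                                  # name -> matrix (list of rows)
    weights: list = field(default_factory=list)        # currently loaded weight matrix
    buffer: dict = field(default_factory=dict)         # stand-in for the Unified Buffer
    accumulators: list = field(default_factory=list)   # result of the last MATMUL

    def run(self, program):
        """Execute (opcode, *operands) tuples; each one does a whole matrix of work."""
        for op, *args in program:
            if op == READ_HOST:        # copy an input matrix from host memory into the buffer
                src, dst = args
                self.buffer[dst] = self.host_memory[src]
            elif op == READ_WEIGHTS:   # load a weight matrix for the next MATMUL
                (src,) = args
                self.weights = self.host_memory[src]
            elif op == MATMUL:         # accumulators = buffer[src] @ weights
                (src,) = args
                a, w = self.buffer[src], self.weights
                self.accumulators = [
                    [sum(a[i][k] * w[k][j] for k in range(len(w))) for j in range(len(w[0]))]
                    for i in range(len(a))
                ]
            elif op == ACTIVATE:       # apply ReLU (an example activation), store in the buffer
                (dst,) = args
                self.buffer[dst] = [[max(0, v) for v in row] for row in self.accumulators]
            elif op == WRITE_HOST:     # copy a result from the buffer back to host memory
                src, dst = args
                self.host_memory[dst] = self.buffer[src]
            else:
                raise ValueError(f"unknown opcode {op}")


# Usage: a five-instruction program computes ReLU(x @ w).
tpu = ToyTPU(host_memory={"x": [[1, -2], [3, 4]], "w": [[1, 0], [0, -1]]})
tpu.run([
    (READ_HOST, "x", "in"),
    (READ_WEIGHTS, "w"),
    (MATMUL, "in"),
    (ACTIVATE, "out"),
    (WRITE_HOST, "out", "y"),
])
print(tpu.host_memory["y"])  # [[1, 2], [3, 0]]
```

Five instructions move the data in, multiply, apply the activation and move the result out; a design built from simple scalar instructions would need far more of them for the same work.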

The TPU includes the following computational resources:

- Matrix Multiplier Unit (MXU): 65,536 8-bit multiply-and-add units for matrix operations

- Unified Buffer (UB): 24 MiB of on-chip SRAM that serves as registers

- Activation Unit (AU): hardwired activation functions
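The MXU's multiply-and-add units work on 8-bit integers. The sketch below models one such unit in plain Python, assuming signed 8-bit inputs and a 32-bit accumulator (the first TPU accumulated results into 32-bit registers); `check_int8`, `mac` and `dot_int8` are hypothetical helpers, not a TPU API. A product of two 8-bit values fits in 16 bits, but a sum of many such products needs a wider accumulator.

```python
INT8_MIN, INT8_MAX = -128, 127
INT32_MIN, INT32_MAX = -(2**31), 2**31 - 1

# Assumed MXU layout: a 256 x 256 grid of multiply-and-add units.
MXU_UNITS = 256 * 256


def check_int8(x: int) -> int:
    """Reject values that do not fit in a signed 8-bit integer."""
    if not INT8_MIN <= x <= INT8_MAX:
        raise ValueError(f"{x} does not fit in 8 bits")
    return x


def mac(acc: int, a: int, b: int) -> int:
    """One multiply-and-add step: acc + a * b with 8-bit inputs and a 32-bit accumulator."""
    acc += check_int8(a) * check_int8(b)  # the product of two int8 values fits in 16 bits
    if not INT32_MIN <= acc <= INT32_MAX:
        raise OverflowError("32-bit accumulator overflow")
    return acc


def dot_int8(xs, ws) -> int:
    """Dot product built from repeated mac() steps, as a chain of MAC units would compute it."""
    acc = 0
    for a, b in zip(xs, ws):
        acc = mac(acc, a, b)
    return acc


print(MXU_UNITS)                            # 65536
print(dot_int8([127] * 256, [-128] * 256))  # -4161536, far outside the 16-bit range
```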

Memory Access: A systolic array

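CPUs and GPUs spend a significant amount of time and energy reading data from registers and memory. To reduce this cost, the TPU's matrix unit uses an architecture called a systolic array.

In short, a systolic array chains many ALUs together so that a value read once from a register is passed from one ALU to the next and reused by each of them, instead of being read again for every operation.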

This enables the TPU to perform 65,536 multiply-and-adds on 8-bit integers every cycle.
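The systolic data flow can be simulated cycle by cycle. This is a minimal sketch, assuming a weight-stationary layout: processing element (PE) `(k, n)` holds `W[k][n]`, column `k` of `X` is fed into array row `k` from the left with a one-cycle delay per row, activations move one PE to the right per cycle and partial sums move one PE down. The function `systolic_matmul` and its read counter are illustrative, not TPU software; the counter shows the reuse described above, since every input value is read once and then passed along its row.

```python
def systolic_matmul(X, W):
    """Cycle-by-cycle simulation of a weight-stationary systolic array computing X @ W.

    PE (k, n) permanently holds W[k][n]. Activations move one PE right per cycle and
    partial sums move one PE down per cycle, so each value read from X is reused by
    every PE in its row. Returns (result, number of reads from X).
    """
    M, K, N = len(X), len(W), len(W[0])
    act = [[0] * N for _ in range(K)]    # activation register in each PE
    psum = [[0] * N for _ in range(K)]   # partial-sum register in each PE
    Y = [[0] * N for _ in range(M)]
    input_reads = 0

    for t in range(M + K + N - 2):       # last result is ready at cycle (M-1)+(K-1)+(N-1)
        new_act = [[0] * N for _ in range(K)]
        new_psum = [[0] * N for _ in range(K)]
        for k in range(K):
            for n in range(N):
                if n == 0:
                    m = t - k            # skewed input: X[m][k] enters row k at cycle m + k
                    if 0 <= m < M:
                        a_in = X[m][k]
                        input_reads += 1
                    else:
                        a_in = 0
                else:
                    a_in = act[k][n - 1]  # passed from the left neighbour, no new read
                p_in = psum[k - 1][n] if k > 0 else 0  # partial sum from the PE above
                new_act[k][n] = a_in
                new_psum[k][n] = p_in + a_in * W[k][n]
        act, psum = new_act, new_psum
        # Y[m][n] leaves the bottom row of column n at cycle m + (K - 1) + n.
        for n in range(N):
            m = t - (K - 1) - n
            if 0 <= m < M:
                Y[m][n] = psum[K - 1][n]
    return Y, input_reads


Y, reads = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(Y)      # [[19, 22], [43, 50]]
print(reads)  # 4: each element of X is read once, then reused along its row
```

A naive triple loop would fetch each element of `X` once per output column (8 reads in this 2 × 2 example); the systolic array reads each only once. The MXU applies the same idea to a 256 × 256 grid of PEs.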

Performance

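For neural network inference, the TPU outperforms contemporary CPUs and GPUs, which is expected given that faster inference was the main driving force behind its development. Google reported that on its production inference workloads the first TPU was, on average, about 15 to 30 times faster than the CPUs and GPUs of the time.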

For comparison, consider how many operations each processor can handle per cycle:

- A CPU can handle tens of operations per cycle.
- A GPU can handle tens of thousands of operations per cycle.
- A TPU can handle up to 128,000 operations per cycle.
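The TPU's per-cycle figure follows from the size of the MXU. As a back-of-the-envelope check, assuming a 256 × 256 grid of multiply-and-add units and a 700 MHz clock (the clock rate Google reported for the first TPU), counting each multiply-and-add as two operations gives 131,072 (128K) operations per cycle and a peak of about 92 tera-operations per second.

```python
MXU_ROWS = MXU_COLS = 256   # assumed 256 x 256 grid of 8-bit multiply-and-add units
CLOCK_HZ = 700e6            # assumed clock rate of the first TPU: 700 MHz

macs_per_cycle = MXU_ROWS * MXU_COLS          # 65,536 multiply-and-adds per cycle
ops_per_cycle = 2 * macs_per_cycle            # count the multiply and the add separately
peak_tops = ops_per_cycle * CLOCK_HZ / 1e12   # tera-operations per second

print(macs_per_cycle, ops_per_cycle, round(peak_tops, 1))  # 65536 131072 91.8
```

This is a theoretical peak that assumes every unit does useful work on every cycle.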

Conclusion

The TPU works best for neural network inference, where it overcomes some of the limitations of CPUs and GPUs. It is used alongside CPUs and GPUs rather than replacing them, since each type of processor is designed for a different purpose.