
In this article, we explain different strategies for updating a cache in a system, such as Cache Aside, Read-Through, Write-Back and more. This is an important topic for designing efficient systems.

Table of Contents:

- Introduction

- Different Strategies

i. Cache Aside

ii. Read-Through

iii. Write-Through

iv. Write-Back

v. Write-Around

Caching is a buffering technique that stores frequently accessed data in a cache (pronounced "cash"). A cache is a temporary storage area with high retrieval speed. The main purpose of a cache is to increase data retrieval speed by reducing the need to access a slower storage area. How does a cache work? Is a cache set up in different tiers or on its own? In this article, we will discuss caching strategies.

Nowadays, speed matters a lot. No one wants to be stuck on a loading screen; everyone wants things done in a split second. In fact, you might not be reading this article if it had taken a few seconds longer to open. Beyond speed, we also need a systematic way to manage a large amount of traffic. Caching helps us handle such situations if done properly.

A cache can reduce response time, decrease the load on the database and save costs. Choosing the right caching strategy can make a big difference, and the choice depends on the data and how it is accessed. A caching strategy that suits a leaderboard system will not necessarily improve performance for a social profile search. Let's take a look at the various strategies.

Different Caching Strategies

The Different Caching Strategies in System Design are:

- Cache Aside

- Read-Through Cache

- Write-Through Cache

- Write-Back

- Write Around

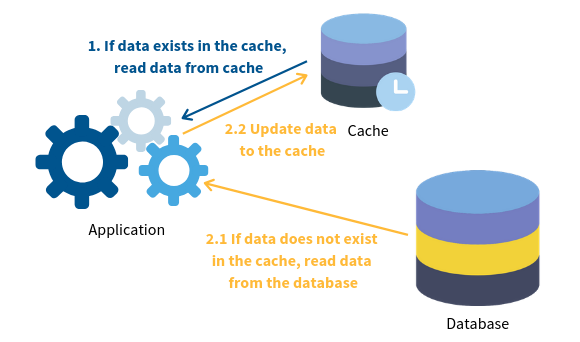

1. Cache Aside

In cache aside, the application talks directly to both the cache and the database (the cache sits "aside"). The application looks for data in the cache first. If the data is found there, it is a cache hit; otherwise, it is a cache miss. On a cache miss, the application reads the data from the database, updates the cache for future requests and returns the data to the caller. This is also known as lazy loading. It is most commonly used in read-heavy workloads.
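The read path described above can be sketched in a few lines. This is a minimal illustration, not a production implementation: the two dictionaries stand in for a real cache and database, and the names `get`, `read_from_database` and `db_reads` are hypothetical.

```python
# Hypothetical stand-ins: one dict plays the slow database, another the cache.
database = {"user:1": "Alice", "user:2": "Bob"}
cache = {}
db_reads = 0  # counts trips to the database, for illustration only


def read_from_database(key):
    """Simulate a slow database lookup."""
    global db_reads
    db_reads += 1
    return database.get(key)


def get(key):
    """Cache-aside read: the application checks the cache, then the database."""
    if key in cache:                 # cache hit
        return cache[key]
    value = read_from_database(key)  # cache miss: the application queries the database
    if value is not None:
        cache[key] = value           # lazy loading: populate the cache for future reads
    return value


first = get("user:1")   # miss: loaded from the database
second = get("user:1")  # hit: served from the cache
```

Only the first call reaches the database; the second is answered from the cache, which is the behaviour that makes this pattern attractive for read-heavy workloads.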

Pros

- The application still works when there is a cache miss, although performance degrades.

- Because of lazy loading, only requested data is written to the cache, which avoids filling it with unnecessary data.

Cons

- Each cache miss causes a noticeable delay.

- Initially, the cache is empty, so most queries result in a cache miss. As a result, latency is higher until the cache warms up.

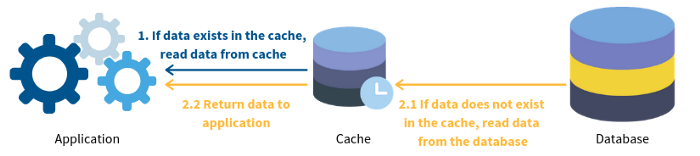

2. Read Through Cache

It is quite similar to cache aside. Instead of talking to both the cache and the database, the application talks only to the cache. The cache sits between the application and the database.

In this strategy, on a cache hit the application fetches data directly from the cache, as before. On a cache miss, the cache server, not the application, fetches the data from the database. The fetched data is first written to the cache and then returned to the application. This strategy also suits read-heavy workloads.
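As a rough sketch of the difference from cache aside, the cache below owns the database lookup, so the application only ever calls `cache.get`. The class name `ReadThroughCache`, the `loader` parameter and the `loads` counter are assumptions made for this example; real caching products expose this behaviour through their own configuration.

```python
class ReadThroughCache:
    """Toy read-through cache: the cache, not the application, loads missing data."""

    def __init__(self, loader):
        self._loader = loader  # function the cache calls on a miss
        self._store = {}
        self.loads = 0         # number of times the database was consulted

    def get(self, key):
        if key in self._store:      # cache hit
            return self._store[key]
        self.loads += 1             # cache miss: the cache fetches from the database
        value = self._loader(key)
        self._store[key] = value    # written to the cache first...
        return value                # ...then returned to the application


# Hypothetical database represented as a dict.
database = {"product:42": "keyboard"}
cache = ReadThroughCache(loader=database.get)

first = cache.get("product:42")   # miss: loaded by the cache itself
second = cache.get("product:42")  # hit
```

Note that if the database entry changes afterwards, this cache keeps returning the old value until the entry is refreshed, which is the stale-data drawback of this strategy.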

Pros

- The application doesn't need to handle cache misses. The cache server handles them itself.

Cons

- Stale data may be present in the cache if the data changes in the database.

- A cache miss still causes a noticeable delay.

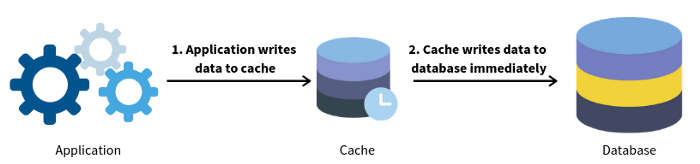

3. Write Through Cache

It is similar to the read-through approach, except that we are now performing a write operation. Every write is first executed on the cache, which then writes to the database synchronously. Writes are slower than with write-back (write-behind), but once data is written into the cache, reads for that data are fast in both strategies.
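A minimal sketch of this write path, assuming a dict as the database and a hypothetical `WriteThroughCache` class, might look like this:

```python
class WriteThroughCache:
    """Toy write-through cache: every write hits the cache and the database together."""

    def __init__(self, database):
        self._database = database  # assumption: a dict stands in for the database
        self._store = {}

    def put(self, key, value):
        self._store[key] = value     # 1. write to the cache
        self._database[key] = value  # 2. write to the database before returning

    def get(self, key):
        return self._store.get(key)  # recently written data is served from the cache


database = {}
cache = WriteThroughCache(database)
cache.put("score:alice", 120)
```

Because `put` does not return until both writes have completed, the cache and the database hold the same value, at the cost of a slower write.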

Pros

- No cache miss for written data, as it is always present in the cache.

- No stale data in the cache.

- Data consistency is guaranteed if paired with read-through.

Cons

- Most of the data present in the cache might never get requested.

- Write latency increases because the application has to wait for the write to be acknowledged by both the cache and the database.

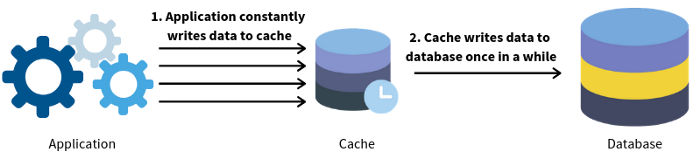

4. Write-Back

It is also known as write-behind. Here too, the write is first performed on the cache, but the data is then written to the database asynchronously after some time interval, which improves overall write performance. Because of this, it is mainly used in write-heavy workloads.
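The following sketch illustrates the idea under simplifying assumptions: the database is a dict, and the asynchronous write is modelled by an explicit `flush()` call that a timer or background worker would trigger in a real system. The class and method names are hypothetical.

```python
class WriteBackCache:
    """Toy write-back cache: writes are buffered and flushed to the database later."""

    def __init__(self, database):
        self._database = database  # assumption: a dict stands in for the database
        self._store = {}
        self._dirty = set()        # keys changed since the last flush

    def put(self, key, value):
        self._store[key] = value   # fast path: only the cache is updated
        self._dirty.add(key)

    def get(self, key):
        return self._store.get(key)

    def flush(self):
        """Write all pending changes to the database; returns the number of writes."""
        written = len(self._dirty)
        for key in self._dirty:
            self._database[key] = self._store[key]
        self._dirty.clear()
        return written


database = {}
cache = WriteBackCache(database)
for views in (1, 2, 3):
    cache.put("page:home", views)        # three writes, none reaches the database yet

pending_before_flush = "page:home" not in database
written = cache.flush()                  # a single database write carries the latest value
```

Three updates to the same key collapse into one database write, which shows how this strategy reduces database load. It also shows the risk: anything still in the dirty set is lost if the cache fails before `flush()` runs.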

Pros

- Tolerant of moderate database failures, since writes are buffered in the cache.

- Reduces load and cost by reducing the number of writes to the database.

Cons

- If anything operates directly on the database, it may read stale data. More formally, the database and the cache are only eventually consistent.

- If the cache fails before data is written to the database, permanent data loss may occur.

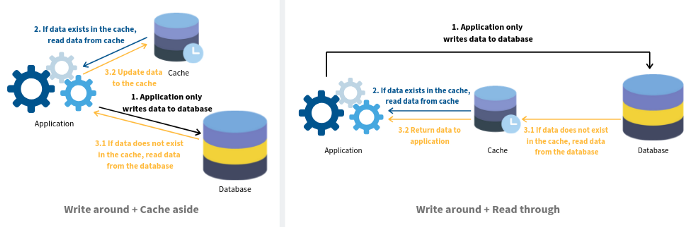

5. Write Around

In write around, the application writes data directly to the database, and reads go through the cache. For reads, we use one of the two read strategies discussed above, so write around is usually combined with either cache aside or read-through.
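Here is a small sketch of write around paired with a cache-aside read path. The dicts and the function names `write` and `read` are hypothetical. Dropping the cached copy on a write is an extra assumption not stated above; without it, the cache could keep serving the old value.

```python
# Hypothetical stand-ins for the database and the cache.
database = {}
cache = {}


def write(key, value):
    """Write around: the write goes straight to the database, bypassing the cache."""
    database[key] = value
    cache.pop(key, None)  # assumption: invalidate any stale cached copy


def read(key):
    """Cache-aside read path used together with write around."""
    if key in cache:           # cache hit
        return cache[key]
    value = database.get(key)  # cache miss: read from the database
    if value is not None:
        cache[key] = value     # cache it only once someone actually reads it
    return value


write("article:7", "draft")
cached_after_write = "article:7" in cache  # the write did not populate the cache
value = read("article:7")                  # the first read loads it into the cache
```

Data that is written but never read does not take up space in the cache, while the first read after a write pays the cost of a cache miss.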

With this article at OpenGenus, you should now have a strong idea of caching strategies. Enjoy.