
ResNet50 v1.5 is a modified version of the original ResNet50 model. Compared with ResNet50, it is slightly more accurate (about 0.5% higher top-1 accuracy) and slightly slower (about 5% lower throughput in images/sec).

Hence, ResNet50 v1.5 is used in applications where the slight improvement in accuracy matters and the small drop in throughput is not an issue.

The architectural difference between ResNet50 and ResNet50 v1.5 lies in the bottleneck blocks that require downsampling: v1 has stride = 2 in the first 1x1 convolution, whereas v1.5 has stride = 2 in the 3x3 convolution.

We will go through the details of ResNet50 v1.5 in depth. We will start with some introductory information which will help you understand the architecture of ResNet50 v1.5.

AI and Neural Networks

AI is one of the hottest trends right now! Many of the most successful businesses, such as Amazon, Google and Apple, are AI-driven, and these companies are trying their best to dominate this new field. The basic building block of a Neural Network is the artificial neuron, and Neural Networks are, as the name suggests, networks of these neurons. We will not dive deep into this here; we assume that the reader is familiar with basic Neural Network and CNN terminology.

Pre-trained Models and Why Use Them?

Thanks to freely available, well-documented frameworks like TensorFlow and PyTorch, anyone who can code can train a Neural Network from scratch. So why do companies and organizations use Pre-trained Models? Training Deep Neural Networks is computationally very expensive, and ordinary consumer GPUs are often not enough to train on such large amounts of data (unless you are super rich, as huge companies are XD). Another reason is that these Pre-trained Models were developed by researchers over years of work and usually perform better than networks that users design themselves. These models are also used as references by other researchers and Data Scientists. Some examples of pre-trained models are BERT, ResNet and GoogLeNet.

ResNet50 v1.5

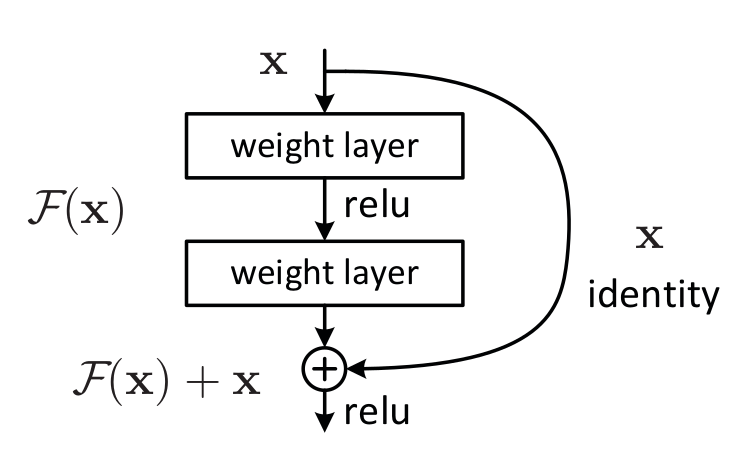

ResNet, short for Residual Networks, is a classic neural network used as a backbone for many computer vision tasks. The original model won the ImageNet (ILSVRC) classification challenge in 2015. ResNet50 v1.5 is a modified version of the original ResNet50. The fundamental breakthrough of ResNet was that it made it possible to successfully train extremely deep neural networks with 150+ layers. Before ResNet, training very deep neural networks was difficult because of the vanishing gradient problem. The authors addressed this problem by introducing a deep residual learning framework built on shortcut connections that simply perform identity mappings.

The benefit of these identity shortcuts is that they add no extra parameters to the model and essentially no extra computation (just an element-wise addition).
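To make the shortcut idea concrete, here is a toy residual block written with plain Python lists instead of convolutions. It is only an illustration: `linear`, `residual_block`, `num_params` and the weight values are hypothetical, and biases and batch normalization are left out. The block computes y = relu(F(x) + x), where F is a small two-layer function and the shortcut adds the input back unchanged.

```python
# Toy residual block on plain Python lists (illustration only, not a real ResNet layer).

def linear(weights, x):
    """Matrix-vector product: one output value per weight row (no bias)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def relu(v):
    """Element-wise ReLU."""
    return [max(0.0, a) for a in v]

def residual_block(weights1, weights2, x):
    """y = relu(F(x) + x), with F = linear -> relu -> linear and an identity shortcut."""
    f = linear(weights2, relu(linear(weights1, x)))
    # Identity shortcut: add the input unchanged, no weights involved.
    return relu([fi + xi for fi, xi in zip(f, x)])

def num_params(*weight_matrices):
    """Count weights; the shortcut contributes nothing to this total."""
    return sum(len(row) for w in weight_matrices for row in w)

W1 = [[0.5, -0.2], [0.1, 0.3]]
W2 = [[0.0, 0.0], [0.0, 0.0]]  # F outputs zeros, so the block passes x through
x = [1.0, 2.0]

print(residual_block(W1, W2, x))  # [1.0, 2.0]
print(num_params(W1, W2))         # 8
```

The parameter count depends only on F; the shortcut is a plain addition. When F's weights are zero, the block reduces to (a ReLU of) the identity, so here it returns the non-negative input unchanged.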

ResNet50 v1.5 Architecture

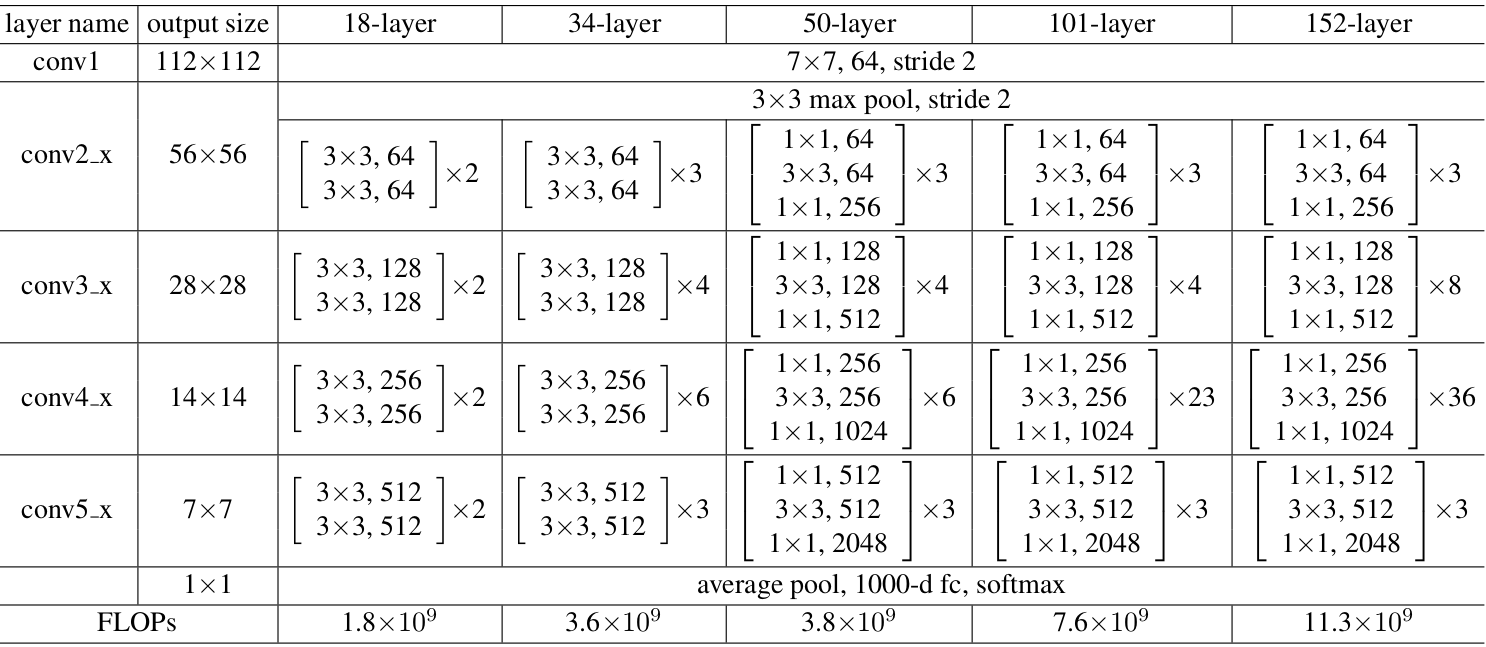

As we can see in Table 1, the ResNet50 v1.5 architecture contains the following elements:

- A convolution with a 7 * 7 kernel size, 64 different kernels and a stride of 2, giving us 1 layer.

- Next we see a 3 * 3 max pooling with a stride of 2.

- In the next stage there is a 1 * 1, 64 kernel, followed by a 3 * 3, 64 kernel and finally a 1 * 1, 256 kernel. These three layers are repeated 3 times in total, giving us 9 layers in this step.

- Next we see a 1 * 1, 128 kernel, then a 3 * 3, 128 kernel and finally a 1 * 1, 512 kernel. This step is repeated 4 times, giving us 12 layers in this step.

- After that there is a 1 * 1, 256 kernel followed by two more kernels, 3 * 3, 256 and 1 * 1, 1024. This is repeated 6 times, giving us a total of 18 layers.

- Then again there is a 1 * 1, 512 kernel followed by two more, 3 * 3, 512 and 1 * 1, 2048. This is repeated 3 times, giving us a total of 9 layers.

- After that we do an average pool and end with a fully connected layer of 1000 nodes followed by a softmax function, which gives us 1 layer.

We don't count the activation functions and the pooling layers.

So in total this gives us a 1 + 9 + 12 + 18 + 9 + 1 = 50 layer deep convolutional network.
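The layer arithmetic can be checked with a short sketch. It encodes the four bottleneck stages as plain data, counts weighted layers the same way as above (pooling and activations excluded), and traces the spatial size of a 224 * 224 input through the network. The stage names follow the ResNet paper's table; the padding values (3 for the 7 * 7 convolution, 1 for the 3 * 3 kernels) are an assumption matching common implementations. Placing stride 2 on the 3 * 3 convolution at the start of conv3_x, conv4_x and conv5_x reflects the v1.5 layout.

```python
# Sketch of the ResNet50 stage layout (padding values are assumptions).
# Each stage: (name, number of bottleneck blocks, (1x1 width, 3x3 width, 1x1 output width)).
STAGES = [
    ("conv2_x", 3, (64, 64, 256)),
    ("conv3_x", 4, (128, 128, 512)),
    ("conv4_x", 6, (256, 256, 1024)),
    ("conv5_x", 3, (512, 512, 2048)),
]

def count_weighted_layers(stages):
    """Count conv/FC layers only; pooling and activations are not counted."""
    stem = 1                                            # 7x7, 64, stride 2
    body = sum(3 * blocks for _, blocks, _ in stages)   # 3 convs per bottleneck block
    head = 1                                            # 1000-way fully connected layer
    return stem + body + head

def conv_out(size, kernel, stride, padding):
    """Output height/width of a square convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1

def trace_spatial_sizes(input_size=224):
    """Spatial size at the output of each stage for a square input image."""
    sizes = {}
    s = conv_out(input_size, 7, 2, 3)  # stem conv, stride 2
    sizes["conv1"] = s
    s = conv_out(s, 3, 2, 1)           # 3x3 max pool, stride 2 (start of conv2_x)
    sizes["conv2_x"] = s
    for name, _, _ in STAGES[1:]:
        # v1.5: the 3x3 conv in the first block of conv3_x..conv5_x has stride 2
        s = conv_out(s, 3, 2, 1)
        sizes[name] = s
    return sizes

print(count_weighted_layers(STAGES))  # 50
print(trace_spatial_sizes())
# {'conv1': 112, 'conv2_x': 56, 'conv3_x': 28, 'conv4_x': 14, 'conv5_x': 7}
```

The final 7 * 7 map is what the average pool reduces to a single 2048-channel vector before the fully connected layer.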

Key Differences between ResNet50 v1 and ResNet50 v1.5

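The ResNet50 v1.5 model is a modified version of the original ResNet50 v1 model. The difference between v1 and v1.5 is that, in the bottleneck blocks that require downsampling, v1 has stride = 2 in the first 1x1 convolution, whereas v1.5 has stride = 2 in the 3x3 convolution. A 1x1 convolution with stride 2 reads only one out of every four input positions, so in v1 three quarters of the block input never reach the main path; moving the stride to the 3x3 convolution lets every position contribute.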
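The coverage argument can be checked by counting which input positions a stride-2 convolution actually reads. The sketch below compares a 1 * 1 kernel with stride 2 (v1) against a 3 * 3 kernel with stride 2 and padding 1 (v1.5) on a 56 * 56 map, the size entering the first conv3_x block. The helper `pixels_read` is hypothetical and ignores channels. In v1.5 the 3 * 3 convolution's input comes from a stride-1 1 * 1 convolution, so full coverage there means the whole block input contributes.

```python
# Which input positions does a strided convolution read? (channels ignored)

def pixels_read(size, kernel, stride, padding):
    """Set of (row, col) input positions used by at least one output value."""
    out = (size + 2 * padding - kernel) // stride + 1
    read = set()
    for oy in range(out):
        for ox in range(out):
            for ky in range(kernel):
                for kx in range(kernel):
                    y = oy * stride - padding + ky
                    x = ox * stride - padding + kx
                    if 0 <= y < size and 0 <= x < size:  # skip zero-padding positions
                        read.add((y, x))
    return read

SIZE = 56  # spatial size of the feature map entering the first conv3_x block
total = SIZE * SIZE
frac_v1 = len(pixels_read(SIZE, kernel=1, stride=2, padding=0)) / total   # stride on the 1x1
frac_v15 = len(pixels_read(SIZE, kernel=3, stride=2, padding=1)) / total  # stride on the 3x3
print(frac_v1, frac_v15)  # 0.25 1.0
```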

This difference makes ResNet50 v1.5:

- slightly more accurate (~0.5% top-1) than v1

- slightly slower (~5% fewer images/sec)
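The throughput cost has a similar back-of-the-envelope explanation: in v1.5 the first 1 * 1 convolution of a downsampling block runs at the full input resolution instead of the halved one. The sketch below counts multiply-accumulates (MACs) in the main path of the first conv3_x block (56 * 56 * 256 input, 128 bottleneck channels, 512 output channels, 28 * 28 output). It is a rough estimate under stated assumptions: it ignores the projection shortcut (identical in both versions), batch normalization and activations, and real images/sec depend on the hardware, so it does not reproduce the ~5% figure.

```python
# Rough MAC count for the main path of the first conv3_x bottleneck block.

def conv_macs(out_hw, in_ch, out_ch, kernel):
    """MACs of a square conv: one kernel*kernel*in_ch dot product per output value."""
    return out_hw * out_hw * out_ch * in_ch * kernel * kernel

# v1: the first 1x1 conv has stride 2, so everything after it runs at 28x28.
macs_v1 = (conv_macs(28, 256, 128, 1)     # 1x1, stride 2
           + conv_macs(28, 128, 128, 3)   # 3x3, stride 1
           + conv_macs(28, 128, 512, 1))  # 1x1

# v1.5: the first 1x1 conv keeps 56x56; the 3x3 conv does the downsampling.
macs_v15 = (conv_macs(56, 256, 128, 1)    # 1x1, stride 1
            + conv_macs(28, 128, 128, 3)  # 3x3, stride 2
            + conv_macs(28, 128, 512, 1))  # 1x1

print(macs_v1, macs_v15, macs_v15 - macs_v1)  # 192675840 269746176 77070336
```

Only the first block of conv3_x, conv4_x and conv5_x downsamples, so this extra cost is confined to three of the sixteen bottleneck blocks, which is consistent with the overall slowdown being small.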

The differences are so small that, most of the time, the two models are used interchangeably in practice.

Performance vs Other Models

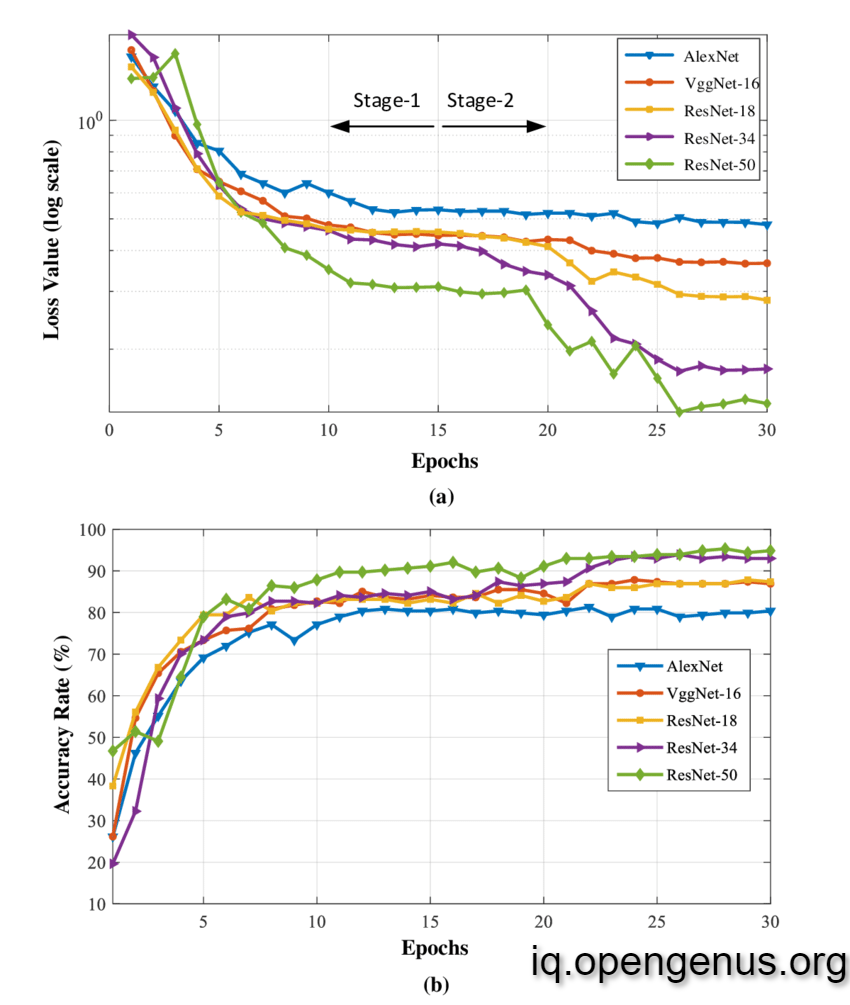

We can see that ResNet performs better than its predecessors, such as AlexNet and VGGNet.
