

Introduction

In the wake of OpenAI's groundbreaking release of ChatGPT, the immense potential of large language models (LLMs) has become evident, showcasing their ability to automate tasks across a wide spectrum, ranging from language translation to creative story generation. Leveraging these AI models effectively, however, poses challenges, particularly in crafting prompts that elicit the desired responses. The art of creating such prompts has given rise to a new field known as Prompt Engineering.

Prompt engineering is the art and science of formulating effective prompts that cause AI models, specifically large language models like GPT-3 and GPT-4, to generate the intended responses. In this article at OpenGenus, we will explore various techniques used in prompt engineering, shedding light on the most popular and effective approaches. These techniques play a crucial role in getting the most out of language models and applying them in real-world settings.

The techniques that will be covered in this article at OpenGenus are the following:

- Zero-Shot Prompting

- Few-Shot Prompting

- Chain-of-thought (CoT) Prompting

- Self-consistency

- Generated Knowledge Prompting

- Tree of Thoughts (ToT)

- Retrieval Augmented Generation (RAG)

Basic prompting

Simple prompts are enough to accomplish quite a lot, but the quality of the outputs depends on how much information is provided and how well crafted it is. Take the following as a simple example:

The sun is

The sun is a bright, hot star that provides light and heat to our planet Earth.

This output is reasonable for the given input, but to gain more control over the output, it's necessary to provide more context or instructions.

Complete the sentence:

The sun is

The sun is a bright, hot star that provides light and heat to our planet Earth, making life possible and supporting various essential processes.

This output is similar to the previous one, but because we added the instruction "Complete the sentence", the output reads more like a complete sentence (and carries more information than before). Iteratively changing a prompt like this to get a better or more desired output is a very basic example of prompt engineering.

Formatting

Even though models can accept almost any text as input (even text without any particular structure), prompts are generally made up of a few common elements:

Instruction: a specific task or instruction for the model

Context: external information or additional context that can steer the model to better responses

Input Data: the input or question that we want the model to respond to

Output Indicator: the type or format of the output

However, not all prompts require all of these elements; it depends on the specific task.
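To make the four elements concrete, here is a minimal sketch that assembles a prompt from whichever elements are supplied and silently skips the rest. The function name `build_prompt` and the choice of a blank line between elements are illustrative assumptions, not a fixed convention.

```python
from typing import Optional


def build_prompt(
    instruction: Optional[str] = None,
    context: Optional[str] = None,
    input_data: Optional[str] = None,
    output_indicator: Optional[str] = None,
) -> str:
    """Join the prompt elements that are present, in a fixed order."""
    parts = [instruction, context, input_data, output_indicator]
    # Not every task needs every element, so drop the ones left empty or None.
    return "\n\n".join(p for p in parts if p)


prompt = build_prompt(
    instruction="Classify the text into neutral, negative or positive.",
    input_data="Text: I think the food was okay.",
    output_indicator="Sentiment:",
)
print(prompt)
```

Here no context element is needed, so the prompt consists only of an instruction, the input data, and an output indicator.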

Basic Best Practices

Put instructions at the beginning of the prompt

The order of information in the prompt plays a crucial role in shaping the model's responses. To achieve higher-quality outputs, it is advisable to begin the prompts with clear instructions, setting the task or question to be addressed by the AI.

Summarize the text below as a bullet point list of the most important points.

{text input here}

NOTE: It's also advisable to separate the instruction from the context with a delimiter such as ### or """, as in the following example:

Summarize the text below as a bullet point list of the most important points.

Text: """

{text input here}

"""

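A minimal sketch of the "instruction first, then delimited text" pattern follows. The helper name `summarize_prompt` is hypothetical, and rejecting text that already contains the delimiter is one possible design choice (an assumption on our part) to keep the boundary of the text unambiguous.

```python
def summarize_prompt(text: str, delimiter: str = '"""') -> str:
    """Place the instruction first, then fence the text with a delimiter."""
    if delimiter in text:
        # If the delimiter appears inside the text, the model can't tell where the text ends.
        raise ValueError(f"text must not contain the delimiter {delimiter!r}")
    instruction = "Summarize the text below as a bullet point list of the most important points."
    return f"{instruction}\n\nText: {delimiter}\n{text}\n{delimiter}"


print(summarize_prompt("Large language models can translate text and write stories."))
```

Passing `delimiter="###"` produces the same structure with the alternative separator.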
Be Specific

Add as much detail as possible about the desired context, outcome, length, format, style, etc. The more specific the prompt is, the more likely the output will be what you want.

Instead of this:

Write a poem about OpenAI.

Write this:

Write a short inspiring poem about OpenAI, focusing on the recent DALL-E product launch (DALL-E is a text to image ML model) in the style of a {famous poet}

Be Precise

Be specific and direct. The principle mirrors effective communication: the more direct the message, the more effectively it gets across.

For example, you might be interested in learning the concept of prompt engineering. You might try something like:

Explain the concept prompt engineering. Keep the explanation short, only a few sentences, and don't be too descriptive.

The prompt above doesn't make clear how many sentences to use or what style to adopt. You might still get reasonable responses with it, but a better prompt is specific, concise, and to the point:

Use 2-3 sentences to explain the concept of prompt engineering to a high school student.

Tell what to do, not what not to do.

Give positive instructions instead of negative ones: specify what the model should do to get good responses. For example, the movie recommendation agent below still asks for the customer's interests, because its instructions focus on what not to do.

The following is an agent that recommends movies to a customer. DO NOT ASK FOR INTERESTS. DO NOT ASK FOR PERSONAL INFORMATION.

Customer: Please recommend a movie based on my interests.

Agent:

Sure, I can recommend a movie based on your interests. What kind of movie would you like to watch? Do you prefer action, comedy, romance, or something else?

Here is a better prompt:

The following is an agent that recommends movies to a customer. The agent is responsible to recommend a movie from the top global trending movies. It should refrain from asking users for their preferences and avoid asking for personal information. If the agent doesn't have a movie to recommend, it should respond "Sorry, couldn't find a movie to recommend today.".

Customer: Please recommend a movie based on my interests.

Agent:

Sorry, I don't have any information about your interests. However, here's a list of the top global trending movies right now: [list of movies].

I hope you find something you like!

Examples

The examples above were adapted from the "Best practices for prompt engineering with OpenAI API" article.

Advanced Prompting Techniques

In this section, we explore more advanced prompt engineering techniques that are better suited to complex tasks.

Zero-Shot Prompting

Large language models (LLMs) like GPT-3 can perform tasks "zero-shot", meaning they can accomplish a task without being given any examples of it in the prompt.

Classify the text into neutral, negative or positive.

Text: I think the weather today is great!

Sentiment:

Positive

Since we didn't have to provide any examples, this shows that the LLM already understands "sentiment"; this is therefore a use of zero-shot prompting.

When zero-shot learning is not sufficient, it is advisable to include demonstrations or examples in the prompt (which leads to few-shot prompting).

Few-Shot Prompting

Few-shot prompting involves providing a set of training examples as part of the prompt to give the model additional context. These examples can be used to prime the model to respond in certain ways, emulate specific behaviors, and seed answers to common questions.

A good example of this was presented in Brown et al. 2020. In the example, the task was to correctly use the new word "farduddle" in a sentence.

A "whatpu" is a small, furry animal native to Tanzania. An example of a sentence that uses the word whatpu is: We were traveling in Africa and we saw these very cute whatpus.

To do a "farduddle" means to jump up and down really fast.

An example of a sentence that uses the word farduddle is:

When we won the game, we all started to farduddle in celebration.
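The difference between zero-shot and few-shot prompting is just whether demonstrations precede the query. The sketch below builds a sentiment-classification prompt from a (possibly empty) list of labeled examples; the function name `sentiment_prompt` and the `Text:`/`Sentiment:` layout are illustrative assumptions that follow the zero-shot example above.

```python
from typing import List, Tuple


def sentiment_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Build a prompt from (text, label) demonstrations followed by the new query.

    With an empty `examples` list this is a zero-shot prompt.
    """
    lines = [instruction, ""]
    for text, label in examples:
        # Each demonstration shows the model the exact input/output format to imitate.
        lines += [f"Text: {text}", f"Sentiment: {label}", ""]
    # The query ends with an open "Sentiment:" slot for the model to complete.
    lines += [f"Text: {query}", "Sentiment:"]
    return "\n".join(lines)


INSTRUCTION = "Classify the text into neutral, negative or positive."
zero_shot = sentiment_prompt(INSTRUCTION, [], "I think the weather today is great!")
few_shot = sentiment_prompt(
    INSTRUCTION,
    [("This is awesome!", "Positive"), ("This is bad!", "Negative")],
    "Wow that movie was rad!",
)
print(few_shot)
```

Keeping the demonstrations and the query in an identical format matters more than the exact wording of the labels: the model tends to continue whatever pattern the examples establish.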

Chain-of-Thought (CoT) Prompting

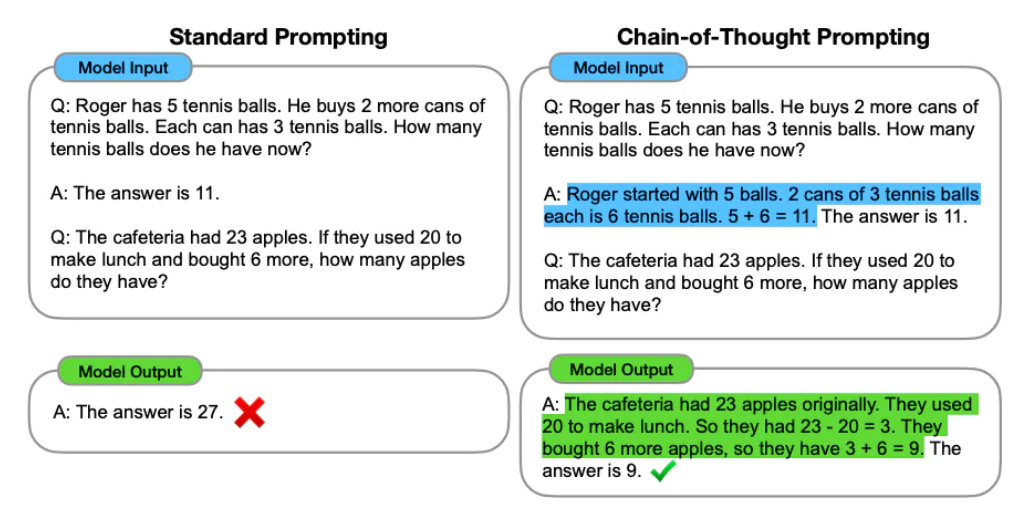

Image Source: Wei et al. (2022)

Chain-of-thought (CoT) prompting, introduced in Wei et al. (2022), enables complex reasoning capabilities through intermediate steps. When combined with few-shot prompting, it yields better results for more intricate tasks that require reasoning before responding.

The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1.

A: Adding all the odd numbers (9, 15, 1) gives 25.

The answer is False.

The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7, 1.

A:

Adding all the odd numbers (15, 5, 13, 7, 1) gives 41.

The answer is False.
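The intermediate steps the CoT exemplar asks for (pick out the odd numbers, add them, check the parity of the sum) are deterministic, so they can be reproduced in a few lines to confirm that both worked answers above are correct. The helper `odd_sum_is_even` is a hypothetical name used only for this check.

```python
from typing import List, Tuple


def odd_sum_is_even(numbers: List[int]) -> Tuple[List[int], int, bool]:
    """Mirror the chain of thought: select the odd numbers, sum them, test parity."""
    odds = [n for n in numbers if n % 2 == 1]
    total = sum(odds)
    return odds, total, total % 2 == 0


for group in ([4, 8, 9, 15, 12, 2, 1], [15, 32, 5, 13, 82, 7, 1]):
    odds, total, is_even = odd_sum_is_even(group)
    print(f"Adding all the odd numbers {odds} gives {total}. The answer is {is_even}.")
```

Both groups have an odd sum (25 and 41), which matches the "False" answers in the prompt and the model output.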

There are different versions of CoT prompting, for example Automatic Chain-of-Thought (Auto-CoT) and Zero-shot CoT prompting.

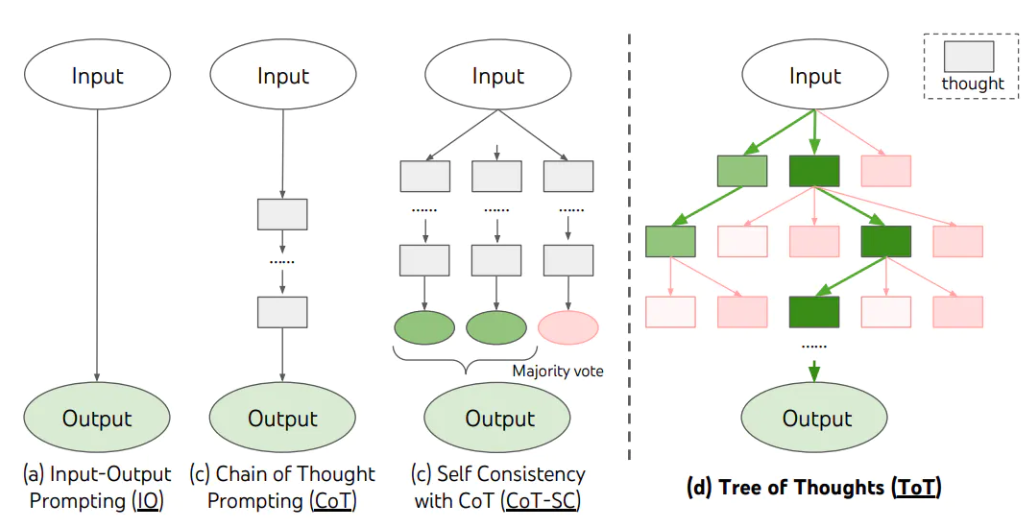

Self-Consistency

Self-consistency, an advanced prompt engineering technique proposed by Wang et al. (2022), aims to improve the performance of chain-of-thought (CoT) prompting, especially for tasks involving arithmetic and commonsense reasoning. Instead of using a naive greedy decoding approach, self-consistency samples multiple diverse reasoning paths through few-shot CoT, and then selects the most consistent answer from the generated responses.

Let's demonstrate how self-consistency can enhance arithmetic reasoning with a given prompt:

When I was 6 my sister was half my age. Now I’m 70 how old is my sister?

35

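This answer is wrong: when the speaker was 6, the sister was 3, so she is 3 years younger and is now 67. Following the self-consistency example from Wang et al. (2022) (Table 17), we instead use a few-shot CoT prompt: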

Q: There are 15 trees in the grove. Grove workers will plant trees in the grove today. After they are done, there will be 21 trees. How many trees did the grove workers plant today?

A: We start with 15 trees. Later we have 21 trees. The difference must be the number of trees they planted. So, they must have planted 21 - 15 = 6 trees.

The answer is 6.

Q: If there are 3 cars in the parking lot and 2 more cars arrive, how many cars are in the parking lot?

A: There are 3 cars in the parking lot already. 2 more arrive. Now there are 3 + 2 = 5 cars.

The answer is 5.

Q: Leah had 32 chocolates and her sister had 42. If they ate 35, how many pieces do they have left in total?

A: Leah had 32 chocolates and Leah’s sister had 42. That means there were originally 32 + 42 = 74 chocolates. 35 have been eaten. So in total they still have 74 - 35 = 39 chocolates.

The answer is 39.

Q: Jason had 20 lollipops. He gave Denny some lollipops. Now Jason has 12 lollipops. How many lollipops did Jason give to Denny?

A: Jason had 20 lollipops. Since he only has 12 now, he must have given the rest to Denny. The number of lollipops he has given to Denny must have been 20 - 12 = 8 lollipops.

The answer is 8.

Q: Shawn has five toys. For Christmas, he got two toys each from his mom and dad. How many toys does he have now?

A: He has 5 toys. He got 2 from mom, so after that he has 5 + 2 = 7 toys. Then he got 2 more from dad, so in total he has 7 + 2 = 9 toys.

The answer is 9.

Q: There were nine computers in the server room. Five more computers were installed each day, from monday to thursday. How many computers are now in the server room?

A: There are 4 days from monday to thursday. 5 computers were added each day. That means in total 4 * 5 = 20 computers were added. There were 9 computers in the beginning, so now there are 9 + 20 = 29 computers.

The answer is 29.

Q: Michael had 58 golf balls. On tuesday, he lost 23 golf balls. On wednesday, he lost 2 more. How many golf balls did he have at the end of wednesday?

A: Michael initially had 58 balls. He lost 23 on Tuesday, so after that he has 58 - 23 = 35 balls. On Wednesday he lost 2 more so now he has 35 - 2 = 33 balls.

The answer is 33.

Q: Olivia has $23. She bought five bagels for $3 each. How much money does she have left?

A: She bought 5 bagels for $3 each. This means she spent 5 * 3 = $15. Olivia started with $23, so she has 23 - 15 = $8 left.

The answer is $8.

Q: When I was 6 my sister was half my age. Now I’m 70 how old is my sister?

A:

Output 1:

When I was 6 my sister was half my age, so she was 3. Now I am 70, so she is 70 - 3 = 67. The answer is 67.

Output 2:

When the narrator was 6, his sister was half his age, which is 3. Now that the narrator is 70, his sister would be 70 - 3 = 67 years old. The answer is 67.

Output 3:

When I was 6 my sister was half my age, so she was 3. Now I am 70, so she is 70/2 = 35. The answer is 35.

Taking a majority vote over the sampled outputs, 67 appears twice and 35 once, so 67 becomes the final answer. This illustrates how self-consistency can improve the accuracy of CoT prompting, especially for arithmetic reasoning tasks.
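The aggregation step can be sketched as follows: extract the final answer from each sampled reasoning path and keep the most frequent one. This assumes every completion ends with "The answer is X" as in the exemplars; the regular expression, the helper names, and the choice to take the last match in each output are our assumptions. Obtaining the samples themselves (calling the model several times with a non-zero temperature) is not shown.

```python
import re
from collections import Counter
from typing import List, Optional

# Assumed answer format, matching the few-shot exemplars: "The answer is 67." or "The answer is $8."
ANSWER_RE = re.compile(r"The answer is \$?(-?\d+(?:\.\d+)?)")


def extract_answer(output: str) -> Optional[str]:
    """Return the last 'The answer is X' value in a completion, if any."""
    matches = ANSWER_RE.findall(output)
    return matches[-1] if matches else None


def self_consistency_vote(outputs: List[str]) -> Optional[str]:
    """Majority vote over the final answers of several sampled reasoning paths."""
    answers = [a for a in (extract_answer(o) for o in outputs) if a is not None]
    if not answers:
        return None
    # On a tie, Counter.most_common keeps the answer that was seen first.
    return Counter(answers).most_common(1)[0][0]


samples = [
    "When I was 6 my sister was half my age, so she was 3. Now I am 70, so she is 70 - 3 = 67. The answer is 67.",
    "When the narrator was 6, his sister was half his age, which is 3. Now that the narrator is 70, his sister would be 70 - 3 = 67 years old. The answer is 67.",
    "When I was 6 my sister was half my age, so she was 3. Now I am 70, so she is 70/2 = 35. The answer is 35.",
]
print(self_consistency_vote(samples))  # 67
```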

Generated Knowledge Prompting

Tree of Thoughts (ToT)

For complex tasks that require exploration and strategic lookahead, traditional or simple prompting techniques may not be sufficient. To address this, Yao et al. (2023) and Long (2023) introduced the Tree of Thoughts (ToT) framework. ToT is a generalized approach that goes beyond chain-of-thought prompting and promotes exploration of thoughts as intermediate steps for effective problem-solving with language models.

In the ToT framework, a tree of thoughts is maintained, where each thought represents a coherent sequence of language that serves as a step towards solving the problem. This approach allows the language model to self-evaluate the progress made by intermediate thoughts during a deliberate reasoning process. By combining the LM's ability to generate and evaluate thoughts with search algorithms like breadth-first search and depth-first search, ToT enables systematic exploration of thoughts with lookahead and backtracking for efficient problem-solving. This more sophisticated approach provides the LM with enhanced capabilities for tackling complex tasks.
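The search loop can be sketched as a breadth-first search that, at each depth, expands every kept state, rates the candidates, and keeps only the best few. In a real ToT setup the `propose` and `evaluate` steps would be LLM calls; here they are hypothetical hand-written stand-ins for a toy problem (reach 24 from 1 using "+1", "×2" or "×3" steps), chosen only so the example runs without a model. The beam width, the three-level rating, and all names are our assumptions.

```python
from typing import Callable, List, Optional, Tuple

State = Tuple[int, ...]  # a partial solution: the sequence of values reached so far


def tot_bfs(
    initial: State,
    propose: Callable[[State], List[State]],
    evaluate: Callable[[State], float],
    is_solution: Callable[[State], bool],
    beam_width: int = 3,
    max_depth: int = 5,
) -> Optional[State]:
    """Breadth-first search over thoughts, keeping the best `beam_width` states per level."""
    frontier: List[State] = [initial]
    for _ in range(max_depth):
        # Expand every kept state into candidate next thoughts (duplicates removed, order kept).
        candidates = list(dict.fromkeys(c for s in frontier for c in propose(s)))
        for c in candidates:
            if is_solution(c):
                return c
        # Rate the candidates and keep only the most promising ones (pruning).
        candidates.sort(key=evaluate, reverse=True)
        frontier = [c for c in candidates[:beam_width] if evaluate(c) > 0]
        if not frontier:
            return None  # every remaining branch was rated a dead end
    return None


TARGET = 24


def propose_steps(state: State) -> List[State]:
    # Hypothetical stand-in for an LLM proposing next steps.
    last = state[-1]
    return [state + (last + 1,), state + (last * 2,), state + (last * 3,)]


def rate_state(state: State) -> float:
    # Hypothetical stand-in for an LLM rating a partial solution.
    last = state[-1]
    if last > TARGET:
        return 0.0  # impossible: values only grow, so the target is out of reach
    return 2.0 if TARGET % last == 0 else 1.0  # promising vs merely possible


result = tot_bfs((1,), propose_steps, rate_state, lambda s: s[-1] == TARGET)
print(result)  # (1, 2, 4, 8, 24)
```

Swapping the loop for a depth-first search with backtracking would be the DFS variant mentioned above; the generate/evaluate interface stays the same.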

Hulbert (2023) proposed a way to use the ToT framework when prompting: get the LLM to evaluate intermediate thoughts within a single prompt. A sample ToT prompt is:

Imagine three different experts are answering this question. All experts will write down 1 step of their thinking, then share it with the group. Then all experts will go on to the next step, etc. If any expert realises they're wrong at any point then they leave.

The question is {Question}

Retrieval Augmented Generation (RAG)

General-purpose language models can be fine-tuned for common tasks like sentiment analysis and named entity recognition without requiring additional background knowledge. However, for more complex and knowledge-intensive tasks, language models can benefit from accessing external knowledge sources to improve factual consistency and generate more reliable responses, reducing the problem of "hallucination."

To address such knowledge-intensive tasks, Meta AI researchers introduced Retrieval Augmented Generation, which combines an information retrieval component with a text generator model. RAG can be fine-tuned and its internal knowledge can be modified efficiently without the need for retraining the entire model.

RAG takes an input, retrieves relevant documents from a source like Wikipedia, and concatenates them as context with the input prompt. This context is then fed to the text generator to produce the final output. This approach allows RAG to be adaptive and access the latest information, making it useful for situations where facts may evolve over time, unlike traditional language models with static knowledge.
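The retrieve-then-concatenate flow can be sketched with a deliberately simple retriever. This is not Meta AI's RAG: it swaps the learned neural retriever for plain word-overlap scoring over a tiny hypothetical in-memory document list, and it stops at building the augmented prompt rather than calling a generator model. All names and the prompt wording are assumptions.

```python
import re
from typing import List, Set

# Hypothetical knowledge source; a real system would index a corpus such as Wikipedia.
DOCUMENTS = [
    "The Eiffel Tower is located in Paris and was completed in 1889.",
    "Mount Everest is the highest mountain above sea level.",
    "Paris is the capital and most populous city of France.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Rank documents by word overlap with the query and return the top k."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Concatenate the retrieved passages as context in front of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer the question using the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


print(build_rag_prompt("When was the Eiffel Tower completed?", DOCUMENTS))
```

Because the facts live in `DOCUMENTS` rather than in the model's weights, updating the knowledge only means editing that list (or the index it stands in for), which is the adaptability the paragraph above describes.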

RAG has demonstrated strong performance on various benchmarks, showing improved factual, specific, and diverse responses when tested on different question-answering tasks. By incorporating retriever-based approaches like RAG with popular language models like ChatGPT, capabilities and factual consistency can be further enhanced.

Conclusion

Prompt engineering is crucial for maximizing the potential of large language models. In this article at OpenGenus, we explored the basics as well as some advanced techniques such as Zero-Shot, Few-Shot, Chain-of-thought, Self-consistency, Generated Knowledge, Tree of Thoughts, and Retrieval Augmented Generation. Implementing these techniques enhances the accuracy and adaptability of language models in real-world applications, offering transformative possibilities for AI integration.

It's worth noting that there are other variations of these methods and additional techniques not covered in this article, such as Automatic Reasoning and Tool-use (ART), Automatic Prompt Engineer (APE), Active-Prompt, Directional Stimulus Prompting, ReAct Prompting, and Multimodal CoT Prompting.