
One of the greatest tools a programmer can have under their belt is the ability to write tests to ensure the code they are producing functions well. Rust, of course, has built-in support for writing tests, which makes a test-driven development cycle possible.

Table of contents

- Test Driven Development

- Simple Testing in Rust

- Test flags

- Example

Test Driven Development

What does this mean? Well, suppose you want to write a function that adds two numbers. You can first write a test in which you say: if I call this function with 2 and 3 as the two parameters, the result should be 5. Then you write the function and use this test to determine whether it works according to plan. In this way you set small goals and checks that your code must pass before you even start writing the code!
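The add-two-numbers idea above could be sketched roughly like this. The name `add` and the choice of `i32` are assumptions made for illustration; the attributes used on the test module are explained in the next section.

```rust
// Hypothetical function under test. In a test-driven cycle this body is
// written only after the test below exists and has been seen to fail.
pub fn add(a: i32, b: i32) -> i32 {
    a + b
}

#[cfg(test)]
mod tests {
    use super::add;

    // Written first: states the expected result before any implementation.
    #[test]
    fn adds_two_and_three() {
        assert_eq!(add(2, 3), 5);
    }
}
```

Running `cargo test` before `add` is implemented should fail, and the goal is then to make that test pass.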

Rust has a few macros and attributes to aid us in our quest to write tests. Let's explore a few, shall we?

Simple Testing in Rust

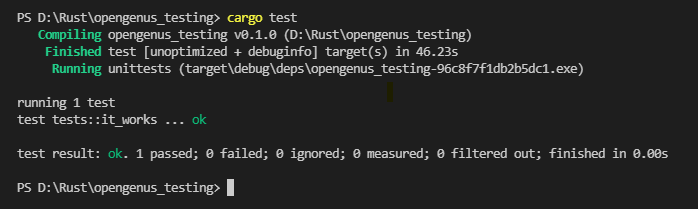

The simplest way to write tests in Rust is with the #[cfg(test)] configuration attribute and the #[test] attribute. To run tests set up as below, you just run cargo test in the terminal!

#[cfg(test)]

mod tests {

#[test]

fn it_works() {

assert_eq!(2+2, 4);

}

}

The #[test] attribute right before the function marks it as a test function, so the test runner knows to run it as part of the tests. The #[cfg(test)] attribute on the module tells the compiler to build it only when tests are being run. Simple enough!

Okay, but how does it look if something fails? Let's add a test that always fails and have a look.

#[cfg(test)]

mod tests {

#[test]

fn it_works() {

assert_eq!(2 + 2, 4);

}

#[test]

fn please_dont_panic() {

panic!("Oops");

}

}

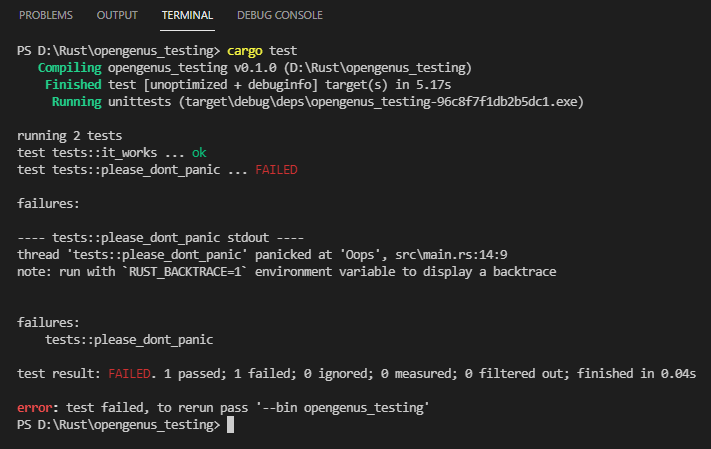

As we can see, the test run failed because one of our tests failed, and the output tells us which particular test it was. There are other ways of making a test fail besides calling panic! directly.

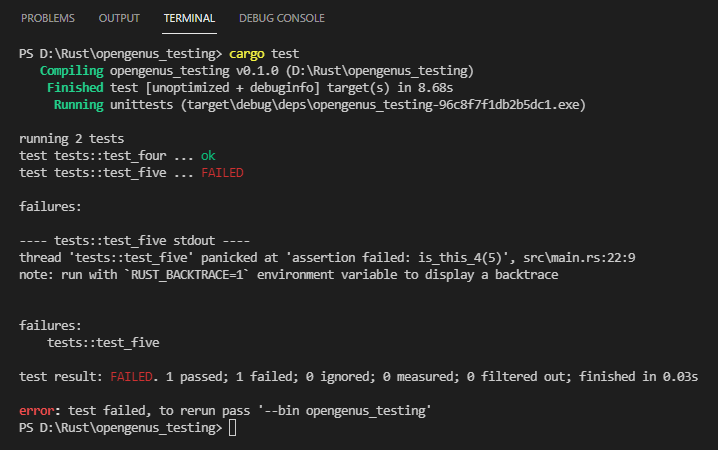

One of these ways is the assert! macro. It takes a boolean expression. If it's true, assert! does nothing. If it's false, however, it panics! For example, let's do something very silly, just to show the point!

The is_this_4 function returns a bool. True if it's 4, false otherwise. So we can use assert with this!

#[cfg(test)]

mod tests {

fn is_this_4(n: i32) -> bool {

if n == 4 {

return true;

} else {

return false;

}

}

#[test]

fn test_four() {

assert!(is_this_4(4));

}

#[test]

fn test_five() {

assert!(is_this_4(5));

}

}

For our use case, it would be better to use the other two macros Rust offers, assert_eq! and assert_ne!, which check for equality and inequality respectively. We could rewrite our tests above like this:

#[test]

fn test_four() {

assert_eq!(4, 4);

}

#[test]

fn test_five() {

assert_eq!(5, 4);

}

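I'm not going to post an image because the pass/fail result is exactly the same as in the test above. The only difference is that a failing assert_eq! also prints the two values it compared.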
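Since assert_ne! is mentioned but not shown, here is a minimal sketch of both macros checking a real return value. The `double` helper is hypothetical and exists only to give the assertions something to check.

```rust
// Hypothetical helper, used only so the assertions compare a computed value.
pub fn double(n: i32) -> i32 {
    n * 2
}

#[cfg(test)]
mod tests {
    use super::double;

    #[test]
    fn double_of_two_is_four() {
        // Passes when both sides are equal; on failure both values are printed.
        assert_eq!(double(2), 4);
    }

    #[test]
    fn double_of_two_is_not_five() {
        // Passes when the two sides differ.
        assert_ne!(double(2), 5);
    }
}
```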

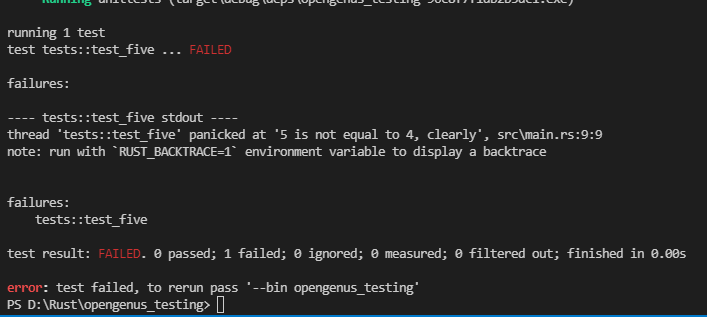

With assert!, assert_eq! and assert_ne!, you can also specify an extra message to be shown if the assertion fails, like this:

#[cfg(test)]

mod tests {

#[test]

fn test_five() {

assert!(5 == 4, "5 is not equal to 4, clearly");

}

}
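The extra message also accepts format arguments, which is handy for including the actual value in the failure output. A small sketch, assuming a hypothetical `greeting` function:

```rust
// Hypothetical function under test.
pub fn greeting(name: &str) -> String {
    format!("Hello, {}!", name)
}

#[cfg(test)]
mod tests {
    use super::greeting;

    #[test]
    fn greeting_contains_name() {
        let result = greeting("Carol");
        // Everything after the condition is treated like format! arguments,
        // so the message can show the value that was actually produced.
        assert!(
            result.contains("Carol"),
            "greeting did not contain the name, value was `{}`",
            result
        );
    }
}
```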

Another option we have for testing is the #[should_panic] attribute. Any test function labeled with it is expected to panic, and the test will fail if it does not.

#[cfg(test)]

mod tests {

pub fn check_lower_100(n: i32) -> bool {

if n > 100 {

return false;

} else {

return true;

}

}

#[test]

#[should_panic]

fn get_correct_value() {

assert!(check_lower_100(75));

}

#[test]

#[should_panic]

fn get_high_value() {

assert!(check_lower_100(150));

}

}

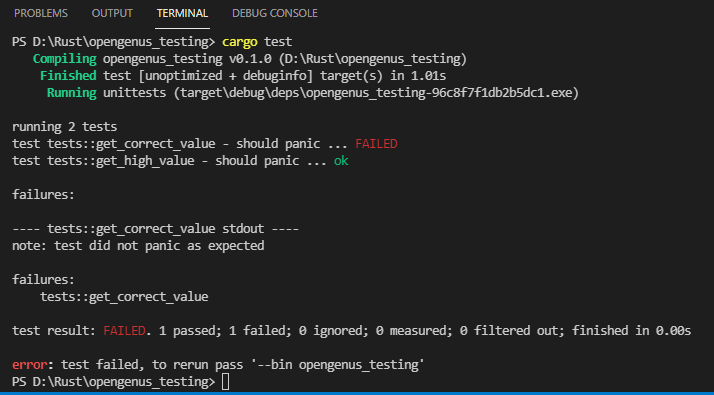

As you can see, when tests are labeled with should_panic, the one whose assertion succeeds (get_correct_value) fails, and the one whose assertion panics (get_high_value) passes! We're 'catching', or rather expecting, the failure. I've certainly used this before: when I know that a certain value should break a function, I test it like that.

Tying in with the previous article, a test can also have a Result<T, E> as its return type! I'll borrow the Rust book's example (with a slight change), as an excuse to also point you to the references below!
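A plain should_panic passes on any panic, even one caused by an unrelated bug. The attribute also accepts an `expected` substring to narrow this down. A minimal sketch, assuming a hypothetical `checked_percentage` function that panics on values above 100:

```rust
// Hypothetical function: panics when given a value above 100.
pub fn checked_percentage(n: i32) -> i32 {
    if n > 100 {
        panic!("value must be at most 100, got {}", n);
    }
    n
}

#[cfg(test)]
mod tests {
    use super::checked_percentage;

    #[test]
    // Passes only if the function panics AND the panic message
    // contains this substring.
    #[should_panic(expected = "at most 100")]
    fn rejects_values_above_100() {
        checked_percentage(150);
    }
}
```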

#[cfg(test)]

mod tests {

#[test]

fn it_works() -> Result<(), String> {

if 2 + 2 == 5 {

Ok(())

} else {

Err(String::from("two plus two does not equal five"))

}

}

}

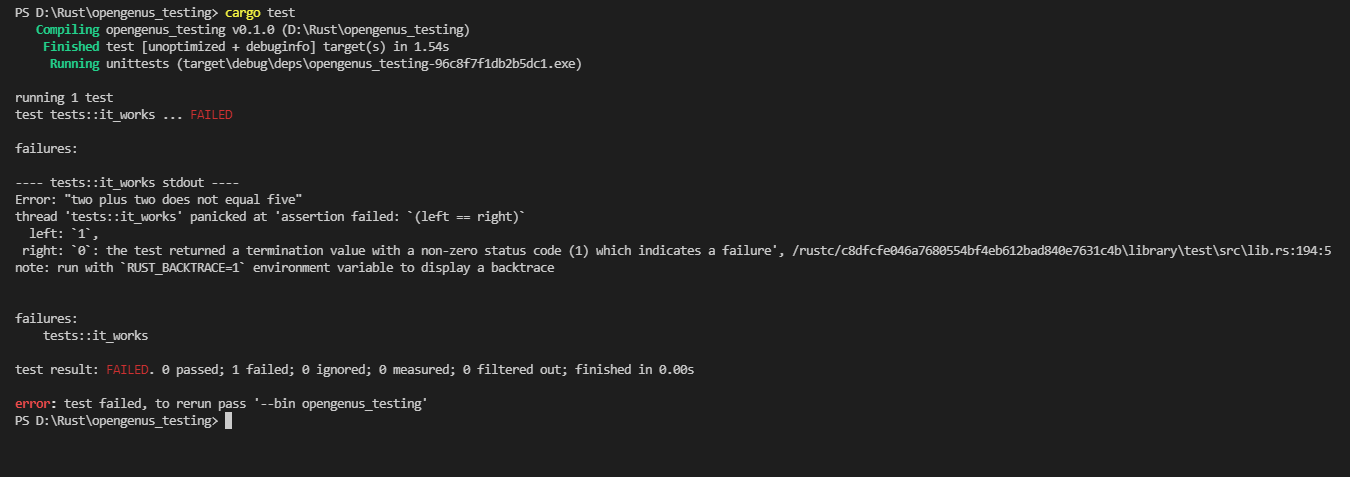

So, if the test succeeds, we return Ok(()), and if it fails, the test output shows the string we pass to Err!

Keep in mind you can't use the should_panic attribute if you use the Result<T, E> return type!
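One practical benefit of a Result return type is that the `?` operator can be used inside the test. The sketch below assumes a hypothetical `parse_and_double` helper, and shows how to check for an expected error without should_panic by asserting on the Err variant directly.

```rust
use std::num::ParseIntError;

// Hypothetical helper that can fail: parses a string and doubles the number.
pub fn parse_and_double(input: &str) -> Result<i32, ParseIntError> {
    let n: i32 = input.trim().parse()?;
    Ok(n * 2)
}

#[cfg(test)]
mod tests {
    use super::parse_and_double;
    use std::num::ParseIntError;

    // If parsing fails, `?` returns the Err early and the test fails.
    #[test]
    fn doubles_a_valid_number() -> Result<(), ParseIntError> {
        let doubled = parse_and_double("21")?;
        assert_eq!(doubled, 42);
        Ok(())
    }

    // Instead of #[should_panic], assert that an error was returned.
    #[test]
    fn rejects_non_numeric_input() {
        assert!(parse_and_double("not a number").is_err());
    }
}
```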

Test flags

There are a couple of extra flags we can add to the test command to control how these tests are run.

cargo test -- --test-threads=1 // Sets how many threads are used to run your tests. Tests run in parallel by default; using 1 runs them one at a time, which helps when tests share state

cargo test -- --show-output // Output printed by passing tests is captured and hidden by default. With this flag, you get to see it.

cargo test name // replace name with (part of) a test function's name, and only the tests whose names contain it will be executed.

cargo test -- --ignored // Runs only the tests that are labeled with the ignore attribute

There's also the #[ignore] attribute you can add, just like the should_panic one, so that a particular test will not be run unless specifically requested.
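As a small illustration of the ignore attribute, here is a sketch in which a hypothetical `sum_up_to` function stands in for something expensive to test:

```rust
// Hypothetical computation, standing in for an expensive test subject.
pub fn sum_up_to(n: u64) -> u64 {
    (1..=n).sum()
}

#[cfg(test)]
mod tests {
    use super::sum_up_to;

    #[test]
    fn small_sum() {
        assert_eq!(sum_up_to(10), 55);
    }

    // Skipped by a plain `cargo test`; runs with `cargo test -- --ignored`.
    #[test]
    #[ignore]
    fn large_sum() {
        assert_eq!(sum_up_to(1_000_000), 500_000_500_000);
    }
}
```

With a plain `cargo test`, the second test is reported as ignored rather than passed or failed.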

Example!

Okay. Let's suppose we want to make a function that, given a string, returns true if it contains another string we specify, and false if it doesn't. Simple!

Keeping in mind what we know about string slices and lifetimes, our function signature could look a bit like this (the explicit lifetime 'a is optional here, since the function returns a bool rather than a reference):

fn search_for<'a>(contents: &'a str, query: &'a str) -> bool

So, let's write two tests: one where the query is present and the function should return true, and one where it is absent and the function should return false.

#[cfg(test)]

mod tests {

use super::search_for;

#[test]

fn test_search_for_valid() {

let content = "Lorem ipsum dolor sit amet, consectetur adipiscing elit.

Donec imperdiet ullamcorper eros eget dictum.

Integer vel metus malesuada nisi elementum posuere.";

let query = "Lorem ipsum";

assert_eq!(search_for(content, query), true);

}

#[test]

fn test_search_for_invalid() {

let content = "Lorem ipsum dolor sit amet, consectetur adipiscing elit.

Donec imperdiet ullamcorper eros eget dictum.

Integer vel metus malesuada nisi elementum posuere.";

let query = "Rust was here";

assert_eq!(search_for(content, query), false);

}

}

So, as the tests stand, our search function should return true when we look for something that IS in our content, and false when it isn't. Let's write our search function with that in mind. We go line by line of our text, and if the line contains our query, we return true. If we went through all the lines and we haven't yet returned, we just return false because we haven't found the query.

pub fn search_for<'a>(contents: &'a str, query: &'a str) -> bool {

for line in contents.lines() {

if line.contains(query) {

return true;

}

}

false

}

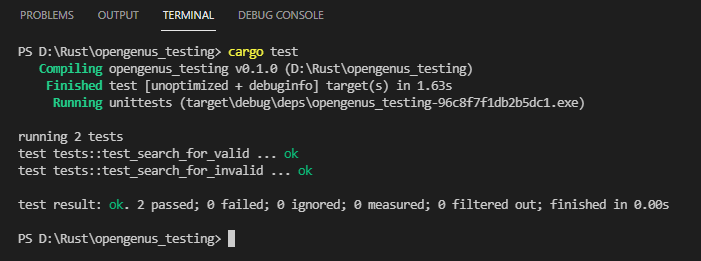

So, we run our tests. If both pass, the function behaves the way we specified. If there are bugs that these cases cover, the tests will fail. That's because we wrote our tests to describe the expected outcome before writing the function itself: first decide "this should work like that", and then code around that idea.

And that wraps it up for today. Try doing a small exercise where you first think of an outcome, an expected functionality, write tests that make sure this happens, and THEN write that functionality. For example, something that calculates the factorial of a number. It's simple, and you can give it a whirl with test-driven development.
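If you want something to compare your attempt against, here is one possible sketch of the factorial exercise. The signature, the use of `u64`, and the chosen test values are all assumptions; the tests were decided first and the body written to satisfy them.

```rust
// One possible implementation, written after the tests below.
// Assumes the input is small enough for the result to fit in a u64
// (that is, n <= 20).
pub fn factorial(n: u64) -> u64 {
    // The product of an empty range is 1, which covers factorial(0).
    (1..=n).product()
}

#[cfg(test)]
mod tests {
    use super::factorial;

    #[test]
    fn factorial_of_zero_is_one() {
        assert_eq!(factorial(0), 1);
    }

    #[test]
    fn factorial_of_five_is_120() {
        assert_eq!(factorial(5), 120);
    }
}
```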