
Reading time: 20 minutes

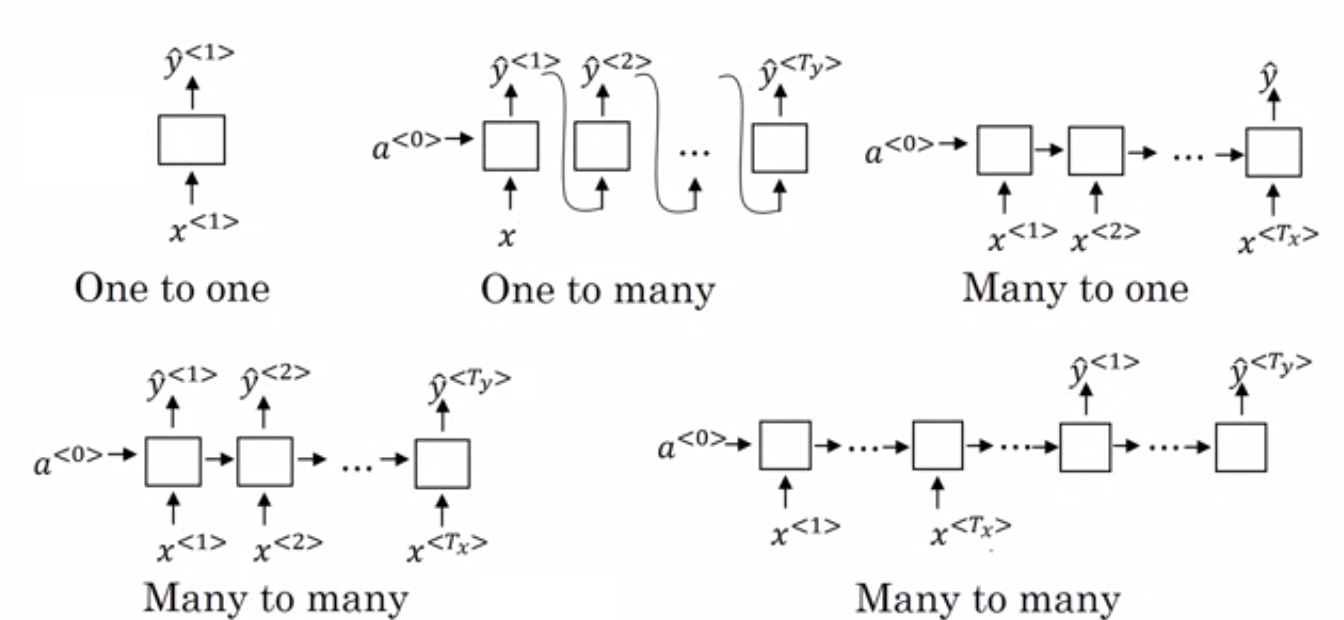

In this article at OpenGenus, we briefly introduce Recurrent Neural Networks (RNNs) and then look at the different types of RNN. In short, the types are:

- One to One RNN

- One to Many RNN

- Many to One RNN

- Many to Many RNN

We will review the basic idea of an RNN and then move on to the different types and explore them in depth. Keep reading, for a bonus awaits at the end of this article.

What is RNN?

A Recurrent Neural Network (RNN) is a generalization of a feed-forward neural network that has an internal memory. An RNN is recurrent in nature: it performs the same function for every element of the input, while the output for the current input depends on the previous computation. After an output is produced, it is copied and fed back into the network. To make a decision, the network therefore considers both the current input and what it has learned from the previous inputs.

Unlike feed-forward neural networks, RNNs can use their internal state (memory) to process sequences of inputs. This makes them applicable to tasks such as unsegmented, connected handwriting recognition or speech recognition. A feed-forward network treats its inputs as independent of each other, whereas an RNN relates each input to the ones that came before it.
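The recurrence described above can be sketched in a few lines of Python. This is a toy illustration, not a production model: the hidden state is a single number rather than a vector, and the weights `W_X`, `W_H` and `B` are hand-picked assumptions (a real RNN learns them during training).

```python
import math

# Hypothetical, hand-picked weights; a trained RNN would learn these.
W_X, W_H, B = 0.5, 0.8, 0.0


def rnn_step(x_t, h_prev):
    """One recurrent step: h_t = tanh(W_X * x_t + W_H * h_prev + B)."""
    return math.tanh(W_X * x_t + W_H * h_prev + B)


def run_rnn(inputs, h0=0.0):
    """Apply the same step to every input, carrying the hidden state forward."""
    h = h0
    states = []
    for x_t in inputs:
        h = rnn_step(x_t, h)
        states.append(h)
    return states


# The two sequences differ only in their first element, yet the last
# hidden state differs too: the network "remembers" the earlier input.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])
print(states_a[-1], states_b[-1])
```

The same `rnn_step` function is reused at every position, which is what makes the network recurrent; only the hidden state changes from step to step.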

Types of RNN

So we have established that recurrent neural networks, also known as RNNs, are a class of neural networks that allow previous outputs to be used as inputs while maintaining hidden states. RNN models are mostly used in natural language processing and speech recognition. Let's look at the types, where Tx denotes the number of inputs and Ty the number of outputs:

One to One RNN

One to One RNN (Tx=Ty=1) is the most basic and traditional type of neural network, giving a single output for a single input, as can be seen in the above image.
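As a minimal sketch of the one-to-one case, the function below maps one number to one number and keeps no state between calls. The weight `W`, the bias `B` and the sigmoid output are illustrative assumptions, not values from any particular model.

```python
import math

# Hypothetical weight and bias, chosen only for illustration.
W, B = 1.5, -0.5


def one_to_one(x):
    """Map a single input to a single output; nothing is remembered between calls."""
    return 1.0 / (1.0 + math.exp(-(W * x + B)))


y = one_to_one(2.0)
print(y)
```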

One to Many

One to Many (Tx=1,Ty>1) is a kind of RNN architecture applied in situations that give multiple outputs for a single input. A basic example of its application is music generation, where an RNN model generates a music piece (multiple outputs) from a single musical note (single input).
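One common way to get many outputs from one input is to feed each output back in as the next input. The sketch below assumes that scheme, with a scalar hidden state and made-up weights `W_X`, `W_H` and `W_Y`; the numbers it produces stand in for "notes" and carry no musical meaning.

```python
import math

# Hypothetical weights; a trained music model would learn these.
W_X, W_H, W_Y = 0.9, 0.5, 1.2


def one_to_many(x, steps):
    """Generate `steps` outputs from the single input `x`."""
    h = 0.0
    current_input = x
    outputs = []
    for _ in range(steps):
        h = math.tanh(W_X * current_input + W_H * h)  # update the memory
        y = W_Y * h                                   # emit one output
        outputs.append(y)
        current_input = y                             # feed the output back in
    return outputs


notes = one_to_many(0.7, steps=5)
print(notes)
```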

Many to One

Many-to-one RNN architecture (Tx>1,Ty=1) is used when multiple inputs are required to give a single output, as the name suggests. Sentiment analysis is a common example.

Take, for example, a Twitter sentiment analysis model. In that model, a text input (words as multiple inputs) gives a single sentiment (single output). Another example is a movie rating model that takes review text as input and gives the movie a rating from 1 to 5.
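A toy version of such a sentiment model might look like the sketch below. The word scores in `WORD_VALUE` and the weights are invented for illustration (real models learn vector embeddings and weights from data); the point is only that the network reads every word but produces one output, computed from the final hidden state.

```python
import math

# Hypothetical one-number "embeddings"; unknown words count as 0.0.
WORD_VALUE = {"good": 1.0, "great": 1.5, "bad": -1.0, "awful": -1.5}
# Hypothetical weights.
W_X, W_H, W_Y = 1.0, 0.9, 2.0


def many_to_one(words):
    """Read all words, then return a single sentiment score in (0, 1)."""
    h = 0.0
    for word in words:
        x_t = WORD_VALUE.get(word, 0.0)
        h = math.tanh(W_X * x_t + W_H * h)
    # Only the final hidden state is turned into an output.
    return 1.0 / (1.0 + math.exp(-W_Y * h))


positive = many_to_one("the movie was great".split())
negative = many_to_one("the movie was awful".split())
print(positive, negative)
```

A score above 0.5 is read here as positive sentiment and a score below 0.5 as negative; that threshold is also an assumption of the sketch.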

Many-to-Many

Many-to-Many RNN architecture (Tx>1,Ty>1) takes multiple inputs and gives multiple outputs. Many-to-Many models come in two kinds, as represented above:

1. Tx=Ty:

This refers to the case where the input and output sequences have the same length. It can also be understood as every input having an output. A common application is named-entity recognition, where each word in a sentence receives its own tag.
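The equal-length case can be sketched as a tagger that emits one label per token. Everything specific here is an assumption made for the example: the only input feature is whether a token is capitalized, the weights are hand-picked, and the tag names `ENT` and `O` are placeholders. A real named-entity recognizer is far richer.

```python
import math

# Hypothetical weights and decision threshold.
W_X, W_H, THRESHOLD = 2.0, 0.3, 0.5


def tag_tokens(tokens):
    """Return one tag per token, so len(tags) == len(tokens)."""
    h = 0.0
    tags = []
    for token in tokens:
        x_t = 1.0 if token[0].isupper() else 0.0  # toy input feature
        h = math.tanh(W_X * x_t + W_H * h)        # update the memory
        tags.append("ENT" if h > THRESHOLD else "O")  # one output per step
    return tags


tokens = ["Alice", "visited", "Paris", "yesterday"]
tags = tag_tokens(tokens)
print(list(zip(tokens, tags)))
```

Unlike the many-to-one case, an output is produced inside the loop at every step rather than once at the end.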

2. Tx≠Ty:

Many-to-Many architecture can also appear in models where the input and output sequences have different lengths. The most common application of this kind of RNN architecture is machine translation. For example, "I love you", the three magical words of the English language, translates to only two words in Spanish, "te amo". Machine translation models can thus return more or fewer words than the input contains, because a non-equal Many-to-Many RNN architecture works in the background.
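A common way to build the unequal-length case is an encoder-decoder pair: one RNN reads the whole input into a summary state, and a second RNN generates outputs from that state until it decides to stop. The sketch below assumes that design with scalar states and invented weights. The `STOP` threshold is a stand-in for the end-of-sequence token a real translation model would emit, and the numbers are not actual words.

```python
import math

# Hypothetical encoder and decoder weights.
W_X, W_H = 0.8, 0.6
W_D, W_Y = 0.7, 1.0
# Hypothetical stand-in for an end-of-sequence signal.
STOP = 0.3


def encode(inputs):
    """Read every input and return the final hidden state as a summary."""
    h = 0.0
    for x_t in inputs:
        h = math.tanh(W_X * x_t + W_H * h)
    return h


def decode(context, max_len=10):
    """Generate outputs from the summary until the stop condition is met."""
    h = context
    outputs = []
    for _ in range(max_len):
        h = math.tanh(W_D * h)
        y = W_Y * h
        if abs(y) < STOP:  # treated as "end of sequence"
            break
        outputs.append(y)
    return outputs


source = [1.0, 1.0, 1.0]  # three inputs, like the three words of "I love you"
translated = decode(encode(source))
print(len(source), len(translated))
```

Because the decoder decides for itself when to stop, the output length is not tied to the input length; with these particular toy weights, three inputs yield two outputs.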

Thanks for reading, and I hope this article helped you. As promised, here's a little bonus reading that can serve as an RNN cheat-sheet for you.

Further reading

- Text Summarization using RNN by Ashutosh Vashisht

- Applications of Recurrent Neural Networks (RNNs) by Devdarshan Mishra

- RNN using an example by Taru Jain

- RNN in general (short introduction) by Adhesh Garg