
Introduction to Instruct GPT

Instruct GPT (InstructGPT) is a family of language models developed by OpenAI by fine-tuning the GPT (Generative Pre-trained Transformer) model, specifically GPT-3, to follow natural language instructions. Instead of only continuing text in a statistically likely way, Instruct GPT is trained to produce text that does what the user asks. This makes it useful for a wide range of natural language processing (NLP) tasks, such as language translation, text summarization, and question answering, without building a separate model for each task. To teach the model to follow instructions well, OpenAI fine-tuned GPT-3 using reinforcement learning from human feedback (RLHF), in which human preferences serve as the training signal. In this article, we will take a deep dive into Instruct GPT, exploring its capabilities and how its training approach can be used to improve the performance of language generation models.

Table of contents:

- Background of Instruct GPT

- How does Instruct GPT language modeling work?

- Instruct GPT Architecture

- How is Instruct GPT related to ChatGPT?

- Advantages of Instruct GPT

- Applications of Instruct GPT

- References

Background of Instruct GPT

Instruct GPT is a version of GPT-3 that has been fine-tuned to follow instructions and complete tasks. It was developed by OpenAI as an extension of their popular GPT-3 model and described in the 2022 paper "Training language models to follow instructions with human feedback". The size of a GPT model, specifically its number of parameters, varies between versions: the largest GPT-3 model has 175 billion parameters, which made it one of the largest language models of its time, and OpenAI trained Instruct GPT models with 1.3 billion, 6 billion, and 175 billion parameters. The main idea behind Instruct GPT is to enable the model to understand and follow instructions written in natural language, allowing it to perform a wide range of tasks such as data entry, data cleaning, and summarization, among others. This is achieved by fine-tuning GPT-3 first on demonstrations of the desired behavior written by human labelers and then on human rankings of the model's own outputs, so that the model learns what a helpful response to an instruction looks like. The goal of Instruct GPT is to make the model's outputs better aligned with what users actually want, which in turn makes it easier for businesses and organizations to automate repetitive and time-consuming tasks.

How does Instruct GPT language modeling work?

The original GPT model is a language model that generates human-like text by predicting the next word (more precisely, the next token) in a sequence based on the context provided by the previous words, then appending that prediction and repeating the process.
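The predict-and-append loop can be illustrated with a toy model. The sketch below is a simplification under loudly stated assumptions: it uses a tiny hypothetical corpus and a bigram count table that looks only at the previous word, whereas a GPT model uses a transformer that conditions on the whole preceding context. What it does show faithfully is autoregressive, greedy next-word generation.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; a real GPT model is pre-trained on hundreds of billions of tokens.
corpus = "the cat sat on the mat . the cat ate the fish .".split()

# Count how often each word follows each previous word (a bigram model).
# Simplification: GPT conditions on the entire preceding context, not one word.
next_counts = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    next_counts[prev][nxt] += 1


def predict_next(word):
    """Return the most frequent follower of `word` (greedy decoding), or None if unseen."""
    followers = next_counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(start, max_words=5):
    """Repeatedly append the predicted next word, as an autoregressive model does."""
    words = [start]
    for _ in range(max_words):
        nxt = predict_next(words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


print(generate("the"))
```

Real models usually sample from the predicted distribution instead of always taking the most likely word, which makes the output less repetitive.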

Instruct GPT, on the other hand, is trained to carry out the task described in a prompt, such as answering questions, translating text, or summarizing articles. It is produced by fine-tuning the pre-trained GPT-3 model rather than by training a new model from scratch. This is a form of transfer learning: the model adapts to following instructions by leveraging the knowledge it has already acquired during pre-training. The fine-tuning happens in three stages. First, the model is fine-tuned with supervised learning on demonstrations of the desired behavior written by human labelers. Second, labelers rank several model outputs for the same prompt, and these rankings are used to train a reward model that predicts which output a human would prefer. Third, the supervised model is further optimized against this reward model with reinforcement learning, using the PPO (Proximal Policy Optimization) algorithm.

Instruct GPT is often discussed alongside few-shot learning, a technique that enables a model to perform a task from very limited amounts of data. For GPT-3, few-shot learning does not require any additional training: a handful of solved examples are placed directly in the prompt, and the model infers the task from them. This can be applied to tasks such as text classification, question answering, and text generation. Because Instruct GPT is trained to follow instructions, it can often perform a task from the instruction alone (zero-shot), and a few examples can still be added to the prompt to show the model the desired output format for new, unseen inputs.
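Few-shot prompting, the in-context form of few-shot learning described for GPT-3, places a handful of solved examples in the prompt and leaves the model's weights unchanged. The sketch below only builds such a prompt string; it does not call any model. The "Input:/Output:" layout and the function name `build_few_shot_prompt` are hypothetical conventions chosen for illustration, not an official OpenAI format.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a prompt: an instruction, k solved examples, then the new input.

    The "Input:/Output:" layout is a hypothetical convention, not an official format.
    Passing an empty `examples` list yields a zero-shot prompt.
    """
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    # The model is expected to continue the text after the final "Output:".
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


examples = [
    ("I loved this movie!", "positive"),
    ("The plot was dull and too long.", "negative"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    examples,
    "The acting was superb.",
)
print(prompt)
```

An instruction-following model can often handle the same request with `examples=[]`, which is the zero-shot case.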

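The key difference between GPT and Instruct GPT lies in the training objective. GPT-3 is trained only to predict the next token of internet text, so an instruction given as a prompt may simply be continued with more text of the same kind (for example, more questions) rather than answered. Instruct GPT is additionally fine-tuned to follow the user's instruction, which makes its outputs more useful for the tasks people actually ask for. This fine-tuning is not free, however: OpenAI observed performance regressions on some public NLP datasets (an "alignment tax") and reduced them by mixing updates on the original pre-training data into the reinforcement learning stage.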

Instruct GPT is used by providing it with a prompt that contains an instruction, optionally followed by the input text or a few examples. No further fine-tuning is needed at this point: the training described above has already taught the model to carry out such instructions, so it generates a response directly.

Instruct GPT Architecture

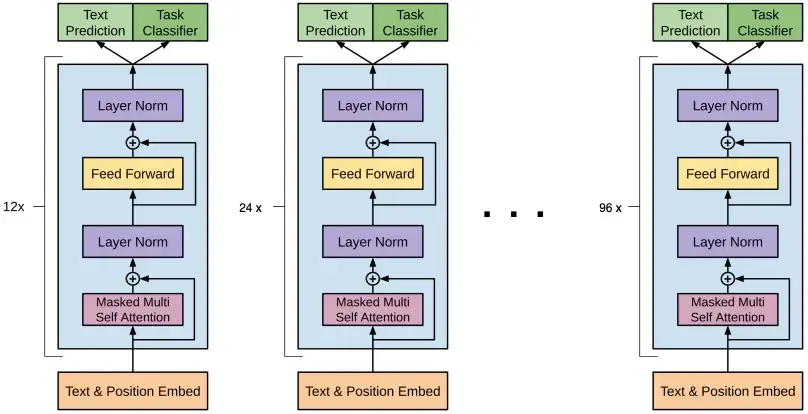

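The architecture of Instruct GPT is the same as that of GPT-3, which is based on the transformer architecture, a type of neural network architecture first introduced in the 2017 paper "Attention Is All You Need" by Google researchers (Vaswani et al.). GPT models use only the decoder part of the transformer: a stack of layers, each of which includes a masked (causal) self-attention mechanism and a feed-forward neural network. The self-attention mechanism allows each position to weigh the importance of the earlier parts of the input, while the feed-forward network transforms the representation at each position independently. After the last layer, a linear projection followed by a softmax turns each position's representation into a probability distribution over the next token, which is how the final predictions are made.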
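To make the self-attention step concrete, here is a minimal pure-Python sketch of single-head scaled dot-product attention, softmax(QK^T / sqrt(d)) V, from Vaswani et al., with the causal mask that GPT-style decoders apply so each position only attends to itself and earlier positions. To keep it short, it assumes Q = K = V = the input vectors (no learned projection matrices W_Q, W_K, W_V and no multiple heads), so it illustrates the mechanism rather than reproducing GPT-3's actual layer.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(x):
    """Single-head scaled dot-product attention with a causal mask.

    x is a list of token vectors (lists of floats). Simplification: queries,
    keys and values are the inputs themselves; a real transformer layer first
    multiplies x by learned matrices W_Q, W_K and W_V.
    """
    d = len(x[0])
    outputs = []
    for i, q in enumerate(x):
        # Causal mask: position i may only attend to positions 0..i.
        visible = x[: i + 1]
        scores = [sum(qj * kj for qj, kj in zip(q, k)) / math.sqrt(d) for k in visible]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the visible value vectors.
        out = [sum(w * v[c] for w, v in zip(weights, visible)) for c in range(d)]
        outputs.append(out)
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in causal_self_attention(tokens):
    print([round(v, 3) for v in row])
```

Because of the mask, changing a later token never changes the outputs at earlier positions, which is what lets the model be trained to predict every next token in a sequence at once.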

In the case of Instruct GPT, the architecture itself is not changed; what differs is how the pre-trained weights are further trained. The model is first pre-trained on a massive amount of text data to learn general language patterns, which helps its performance on various NLP tasks. It is then fine-tuned with supervised learning on human-written demonstrations, a separate reward model is trained on human comparisons of model outputs, and finally the model is optimized against that reward model with reinforcement learning, while a penalty keeps it from drifting too far from the supervised model. Because the result follows instructions, it can handle a new task given only a description, or a few examples in the prompt, rather than requiring a large dataset of labeled examples for that task.
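The reinforcement learning from human feedback (RLHF) stage that OpenAI used for Instruct GPT rests on two formulas from the Instruct GPT paper. The reward model r(x, y) is trained with a pairwise loss, -log sigmoid(r(x, y_w) - r(x, y_l)), which pushes the score of the human-preferred response y_w above that of the rejected response y_l. During reinforcement learning, the reward is reduced by beta * log(pi_RL(y|x) / pi_SFT(y|x)), a per-response KL penalty that discourages the policy from drifting away from the supervised (SFT) model. The sketch below computes only these scalar quantities from given numbers; the reward values, log-probabilities, and the default `beta=0.02` are illustrative assumptions, and no model or PPO update is implemented.

```python
import math


def log_sigmoid(z):
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def reward_model_loss(r_preferred, r_rejected):
    """Pairwise loss for one comparison: -log sigmoid(r(x, y_w) - r(x, y_l))."""
    return -log_sigmoid(r_preferred - r_rejected)


def kl_penalized_reward(reward, logp_rl, logp_sft, beta=0.02):
    """RL reward: r(x, y) - beta * log(pi_RL(y|x) / pi_SFT(y|x)).

    logp_rl and logp_sft are the log-probabilities that the policy being trained
    and the frozen SFT model assign to the same response y.
    beta=0.02 is an illustrative value (assumption), not the paper's setting.
    """
    return reward - beta * (logp_rl - logp_sft)


# A reward model that already ranks the preferred answer higher gets a small loss.
print(reward_model_loss(2.0, 0.0))
# A response the policy made more likely than the SFT model did is penalized slightly.
print(kl_penalized_reward(1.0, logp_rl=-4.0, logp_sft=-5.0, beta=0.1))
```

In practice these quantities are computed in batches by neural networks and fed into PPO; the sketch isolates the arithmetic so the role of each term is visible.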

How is Instruct GPT related to ChatGPT?

Instruct GPT and ChatGPT are both models developed by OpenAI, and OpenAI describes ChatGPT as a sibling model to Instruct GPT. The main difference between them is in their intended use cases. ChatGPT is a conversational AI model that is trained to generate human-like responses in a dialogue context. On the other hand, Instruct GPT is trained to follow a single instruction in a prompt and complete tasks such as code generation, text-to-SQL queries, and summarization.

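Both models are built on top of the transformer architecture and are trained with the same reinforcement learning from human feedback method. The difference lies mainly in the training data: for Instruct GPT, labelers wrote and ranked responses to individual instruction prompts, whereas for ChatGPT, human trainers also wrote conversations in which they played both the user and the AI assistant, and ChatGPT was fine-tuned from a model in the GPT-3.5 series.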

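In summary, ChatGPT can be seen as a conversational sibling of Instruct GPT: both are instruction-following models trained with human feedback, with Instruct GPT aimed at single task-oriented prompts and ChatGPT at multi-turn dialogue.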

Advantages of Instruct GPT

- Large Scale Training: Instruct GPT starts from GPT-3, which was pre-trained on a massive amount of diverse text, allowing it to generate high-quality and contextually relevant responses.

- State-of-the-Art Architecture: Instruct GPT uses the Transformer architecture, which was the state of the art in NLP at the time of its release.

- Versatile: Instruct GPT can perform a wide range of NLP tasks, including question answering, language translation, and text generation, from a natural language instruction without task-specific fine-tuning.

- Improved Performance: In OpenAI's evaluations, human labelers preferred outputs from the 1.3 billion parameter Instruct GPT model over those of the 175 billion parameter GPT-3 model, and Instruct GPT also showed improvements in truthfulness and reductions in toxic output generation.

- Easy to Integrate: Instruct GPT models were offered through the OpenAI API, so organizations could add them to existing applications for NLP-related tasks.

Applications of Instruct GPT

Some of the applications for Instruct GPT are as follows:

- Text Generation: Instruct GPT can be instructed to generate specific types of text, such as poetry, fiction, or news articles.

- Language Translation: Instruct GPT can translate text from one language to another, making it a useful tool for businesses and organizations that operate internationally.

- Text Summarization: Instruct GPT can summarize long texts, making it a useful tool for researchers, journalists, and students.

- Text-to-Speech: Instruct GPT outputs text rather than audio, but it can generate the responses that a separate speech synthesis system then reads aloud, which is useful for virtual assistants and other speech-based applications.

- Dialogue Systems: Instruct GPT can generate natural-sounding responses to user input, making it a useful tool for creating chatbots and other dialogue systems.

- Text Classification: Instruct GPT can classify text into different categories, for example for sentiment analysis or topic classification; related labeling tasks such as named entity recognition can also be phrased as instructions.

- Text Completion: Instruct GPT can complete sentences or paragraphs given a starting text, making it a useful tool for text editors and other productivity applications.
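Many of the applications above come down to writing a suitable instruction. The sketch below keeps one prompt template per task and fills it in; it does not call any model. The template wording, the `TEMPLATES` dictionary, and the `make_instruction` helper are all hypothetical, chosen for illustration rather than taken from OpenAI.

```python
# Hypothetical instruction templates; the wording is illustrative, not taken from OpenAI.
TEMPLATES = {
    "summarize": "Summarize the following text in {n} sentences:\n\n{text}",
    "translate": "Translate the following text from {src} to {dst}:\n\n{text}",
    "classify": (
        "Classify the sentiment of the following text as positive, "
        "negative, or neutral:\n\n{text}"
    ),
    "complete": "Continue the following paragraph:\n\n{text}",
}


def make_instruction(task, **fields):
    """Fill in the template for `task`.

    Raises KeyError for an unknown task or a missing template field.
    """
    return TEMPLATES[task].format(**fields)


print(make_instruction("translate", src="English", dst="German", text="Good morning."))
```

Keeping the instructions in templates makes it easy to adjust their wording in one place, since small changes in phrasing can change what the model produces.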

Overall, Instruct GPT is a versatile tool that can be used in a wide range of NLP applications, and it is likely that new uses for the technology will be discovered as it continues to be developed and refined.

References

- Vaswani, Ashish, et al. "Attention Is All You Need." Advances in Neural Information Processing Systems 30 (2017).

- Dongre, Anay (Anay21110). "Machine-Learning-Collection/ML/PyTorch/NanoGPT." GitHub, github.com/Anay21110/Machine-Learning-Collection/tree/main/ML/PyTorch/NanoGPT.

- OpenAI. "GPT-3: Language Models Are Few-Shot Learners." GitHub, github.com/openai/gpt-3.