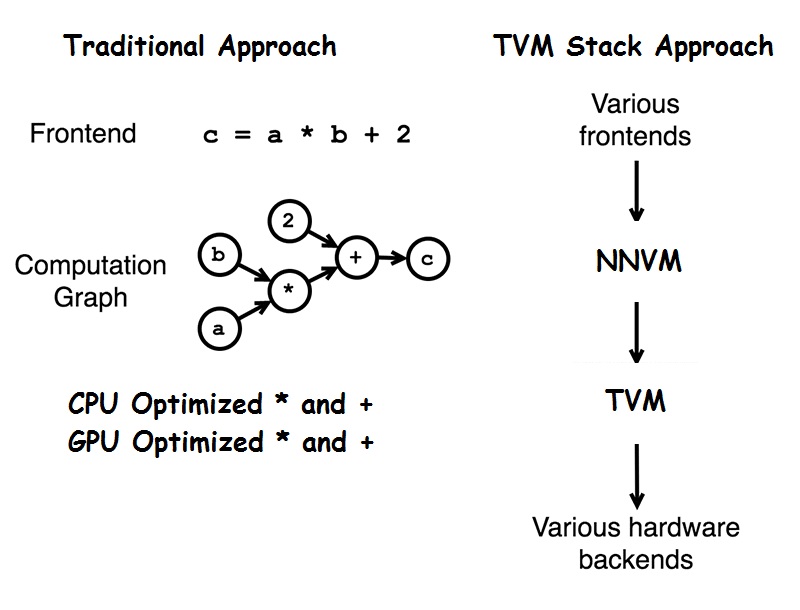

TVM is an open-source deep learning compiler stack for CPUs, GPUs, and specialized accelerators. It aims to close the gap between productivity-focused deep learning frameworks and efficiency-oriented hardware backends. This article gives a brief introduction to the TVM stack.

Developers can choose among many machine learning frameworks to build applications, and among a wide range of hardware to train and deploy ML models. This diversity of frameworks and hardware is crucial to the health of the AI ecosystem, but it also introduces several challenges for developers. This post briefly describes these challenges and introduces TVM, a compiler solution that can help address them.

Challenges

Having many machine learning frameworks and hardware platforms creates several challenges:

- Switching from one framework to another is difficult because their frontend interfaces and backend implementations differ, even though each framework has advantages that suit different situations.

- Framework developers need to maintain multiple backends to guarantee performance on various hardware.

- Chip vendors need to support multiple frameworks for every new chip they build. Each framework represents and executes workloads in its own way, so even a single operation such as convolution may need to be implemented separately for each framework.
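The second and third challenges are two sides of the same scaling problem. As a rough back-of-the-envelope sketch (a simplification that ignores per-operator effort), if every framework must be connected directly to every hardware target, the number of integrations grows as the product of the two counts, whereas a compiler with a shared intermediate representation (IR) in the middle needs only one frontend per framework plus one code generator per backend. The counts below use the five frontends and four backends listed later in this post; the function names are hypothetical.

```python
# Illustrative arithmetic only: counts how many framework-to-hardware
# integrations must be maintained with and without a shared compiler IR.

def direct_integrations(n_frameworks: int, n_backends: int) -> int:
    """Every framework ships its own implementation for every backend."""
    return n_frameworks * n_backends


def compiler_integrations(n_frameworks: int, n_backends: int) -> int:
    """Each framework needs one frontend and each backend one code generator,
    both meeting at a shared intermediate representation (IR)."""
    return n_frameworks + n_backends


frameworks = ["TensorFlow", "MXNet", "Keras", "CoreML", "ONNX"]
backends = ["LLVM", "CUDA", "OpenCL", "Metal"]

n, m = len(frameworks), len(backends)
print("direct:", direct_integrations(n, m))           # direct: 20
print("via shared IR:", compiler_integrations(n, m))  # via shared IR: 9
```

Adding one more chip costs `len(frameworks)` new integrations in the direct model but only one new code generator in the compiler model, which is the gap a stack like TVM aims to exploit.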

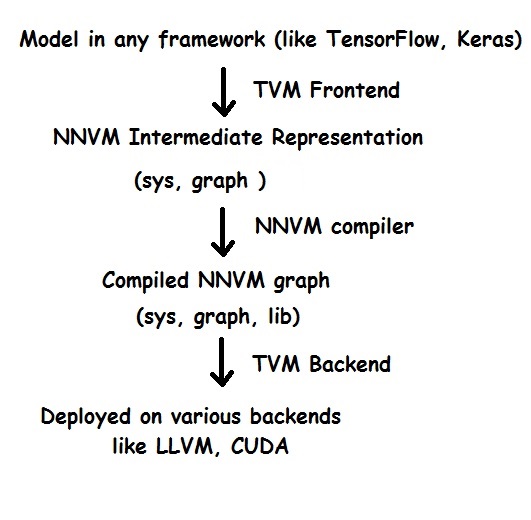

TVM, the solution

TVM addresses these challenges by sitting between the frameworks and the hardware as a compiler: models from any supported framework are compiled into efficient code for any supported backend. TVM provides the following main features:

- Compilation of deep learning models from Keras, MXNet, PyTorch, TensorFlow, CoreML, and DarkNet into minimal deployable modules for diverse hardware backends.

- Infrastructure to automatically generate and optimize tensor operators for more backends, with better performance.
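To illustrate what generating and optimizing tensor operators involves, the sketch below writes the same operator, a matrix multiply, as two different loop nests: a naive one and a tiled one. Both compute identical results; they differ only in the order in which they visit the data, which on real hardware affects memory locality and therefore speed (in pure Python the speed difference is negligible; the point is the transformation). This is a hand-written simplification of the kind of variants a tensor compiler such as TVM can produce automatically, not TVM's API; the function names and the `tile` parameter are hypothetical.

```python
# Two loop-nest variants ("schedules") of the same matrix multiply.
# Pure-Python illustration; not generated by or tied to TVM.

Matrix = list[list[float]]


def matmul_naive(a: Matrix, b: Matrix) -> Matrix:
    """Straightforward i-j-p loop nest."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            for p in range(k):
                c[i][j] += a[i][p] * b[p][j]
    return c


def matmul_tiled(a: Matrix, b: Matrix, tile: int) -> Matrix:
    """Same computation, with the i, j and p loops split into tile-sized
    blocks so each block works on a small sub-matrix at a time."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for p0 in range(0, k, tile):
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = c[i][j]
                        for p in range(p0, min(p0 + tile, k)):
                            acc += a[i][p] * b[p][j]
                        c[i][j] = acc
    return c


a = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
b = [[7.0, 8.0], [9.0, 10.0], [11.0, 12.0]]
print(matmul_naive(a, b))                                 # [[58.0, 64.0], [139.0, 154.0]]
print(matmul_tiled(a, b, tile=2) == matmul_naive(a, b))  # True
```

Because the tiled version still adds the products for each output element in the same order, the two results match exactly; only the traversal pattern changes, which is the kind of choice a compiler can make per target.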

TVM has frontends for various frameworks such as:

- TensorFlow

- MXNet

- Keras

- CoreML

- ONNX

TVM has support for various backends such as:

- LLVM

- CUDA

- OpenCL

- Metal
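The split between frontends and backends is what lets a compiler stack avoid writing every operator once per framework and once per chip. The toy sketch below models that idea in plain Python: each frontend translates framework-specific op names into a shared intermediate representation (IR), and each backend only needs to know how to lower IR ops. It is a deliberately simplified model, not TVM's real importer or code generator (TVM uses its own, much richer high-level IR); the framework op names are examples, and the IR and kernel names are hypothetical.

```python
# Toy model of a compiler stack: framework-specific frontends translate ops
# into one shared IR, and each backend only has to know how to lower IR ops.
# All tables below are illustrative, not TVM's actual mappings.
from dataclasses import dataclass


@dataclass(frozen=True)
class IROp:
    name: str                # framework-independent op name
    inputs: tuple[str, ...]  # names of the tensors the op consumes


# Frontends: map each framework's op names to the shared IR op name.
FRONTENDS = {
    "tensorflow": {"Conv2D": "conv2d", "Relu": "relu"},
    "onnx": {"Conv": "conv2d", "Relu": "relu"},
    "mxnet": {"Convolution": "conv2d", "relu": "relu"},
}

# Backends: map each IR op to a (hypothetical) target-specific kernel.
BACKENDS = {
    "llvm": {"conv2d": "cpu_conv2d", "relu": "cpu_relu"},
    "cuda": {"conv2d": "gpu_conv2d", "relu": "gpu_relu"},
}


def import_graph(framework: str, nodes: list[tuple[str, list[str]]]) -> list[IROp]:
    """Frontend step: rewrite framework ops into shared IR ops."""
    table = FRONTENDS[framework]
    return [IROp(table[op], tuple(inputs)) for op, inputs in nodes]


def lower(ir: list[IROp], target: str) -> list[str]:
    """Backend step: pick a kernel for each IR op on the chosen target."""
    kernels = BACKENDS[target]
    return [kernels[op.name] for op in ir]


tf_graph = [("Conv2D", ["x", "w"]), ("Relu", ["conv_out"])]
onnx_graph = [("Conv", ["x", "w"]), ("Relu", ["conv_out"])]

tf_ir = import_graph("tensorflow", tf_graph)
onnx_ir = import_graph("onnx", onnx_graph)
print(tf_ir == onnx_ir)      # True: both frameworks land on the same IR
print(lower(tf_ir, "cuda"))  # ['gpu_conv2d', 'gpu_relu']
```

Adding a new backend here means adding one entry to `BACKENDS`, and every frontend can use it immediately; that is the property the frontend/backend lists above are meant to convey.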

TVM Flow

Advantages of TVM

TVM brings several advantages:

- You can replace a heavy runtime environment, such as the TensorFlow runtime, with TVM's lightweight runtime, which only needs to execute the precompiled module (generated for CPU targets through the LLVM backend). This reduces the load on the host machine.

- Performance that can exceed that of popular machine learning frameworks such as TensorFlow, thanks to the AutoTVM module, which automatically tunes operator optimizations for the target hardware backend.
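The AutoTVM point rests on one idea: rather than relying on a fixed, hand-picked implementation, try several candidate configurations of an operator on the actual target and keep the fastest. The sketch below shows that idea with an exhaustive search over tile sizes for a blocked matrix transpose, timed on the host machine. It is a simplification under stated assumptions: AutoTVM searches much larger configuration spaces and can use a learned cost model to decide which candidates are worth measuring, whereas this sketch simply measures every candidate. The function names and candidate tile sizes are hypothetical.

```python
# Simplified empirical auto-tuning: time each candidate configuration on the
# current machine and keep the fastest. AutoTVM's real search is far richer;
# transpose_tiled and autotune are hypothetical names for this sketch.
import random
import time


def transpose_tiled(a: list[list[int]], tile: int) -> list[list[int]]:
    """Transpose `a`, visiting it in tile x tile blocks."""
    n, m = len(a), len(a[0])
    out = [[0] * n for _ in range(m)]
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for i in range(i0, min(i0 + tile, n)):
                row = a[i]
                for j in range(j0, min(j0 + tile, m)):
                    out[j][i] = row[j]
    return out


def autotune(a: list[list[int]], candidates: list[int],
             repeats: int = 3) -> tuple[int, float]:
    """Return the tile size with the lowest measured runtime (best of `repeats`)."""
    best_tile, best_time = candidates[0], float("inf")
    for tile in candidates:
        timings = []
        for _ in range(repeats):
            start = time.perf_counter()
            transpose_tiled(a, tile)
            timings.append(time.perf_counter() - start)
        if min(timings) < best_time:
            best_tile, best_time = tile, min(timings)
    return best_tile, best_time


random.seed(0)
matrix = [[random.randint(0, 9) for _ in range(200)] for _ in range(200)]
tile, seconds = autotune(matrix, candidates=[8, 16, 32, 64])
print(f"fastest tile on this machine: {tile} ({seconds * 1e3:.2f} ms)")
```

The winning tile size depends on the machine that runs the search, which is exactly why the tuning happens on (or for) the target hardware rather than once for everyone; the script therefore prints whatever it measured instead of a fixed answer.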