
Reading time: 30 minutes

We have compared PyTorch and TensorFlow on the basis of various metrics to help you decide which framework to go forward with. In short, TensorFlow gives you more control and high computational efficiency, while PyTorch makes applications simpler to develop.

Speed/Efficiency

PyTorch is not a great choice for production use. TensorFlow is more mature for deployment and hence should be used when production performance is a concern.

PyTorch is faster than TensorFlow on default settings. This is mostly attributed to TensorFlow defaulting to the NHWC data format (batch, height, width, channels), which is slower on CUDA GPUs than NCHW (batch, channels, height, width). TensorFlow can be told to use NCHW too, but that requires additional configuration.
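To make the two layouts concrete: converting between NHWC and NCHW is just a permutation of the axes of a 4-D tensor. The sketch below uses NumPy on a hypothetical batch of 8 RGB images of 32x32 pixels; the same permutation is available as `tf.transpose(x, perm=[0, 3, 1, 2])` in TensorFlow and `x.permute(0, 3, 1, 2)` in PyTorch. Inside a TensorFlow model, the configuration route is instead to pass `data_format="channels_first"` to layers such as `tf.keras.layers.Conv2D`.

```python
import numpy as np

# Hypothetical batch: 8 RGB images, 32x32 pixels, in NHWC layout
# (batch, height, width, channels), which is TensorFlow's default.
x_nhwc = np.arange(8 * 32 * 32 * 3, dtype=np.float32).reshape(8, 32, 32, 3)

# Move the channel axis to position 1 to get NCHW:
# (batch, channels, height, width).
x_nchw = np.transpose(x_nhwc, (0, 3, 1, 2))
print(x_nchw.shape)  # (8, 3, 32, 32)

# The inverse permutation restores the original NHWC layout.
x_back = np.transpose(x_nchw, (0, 2, 3, 1))
print(x_back.shape)  # (8, 32, 32, 3)
```

Only the order of the axes changes; every pixel value stays the same, which is why the choice of layout is a performance question rather than a modelling one.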

Ease of use

PyTorch is certainly easier to use and to get started with, which makes it a good choice for academic research and for situations where performance is not a concern.

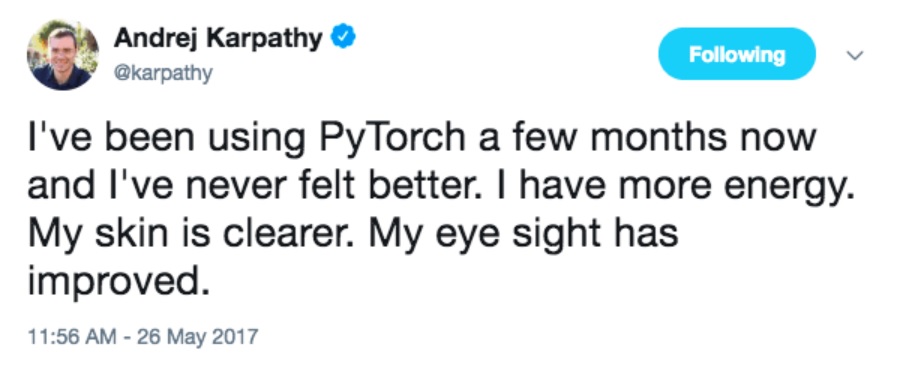

This is captured by Andrej Karpathy's tweet:

Dynamic vs Static graph

In TensorFlow (1.x), the graph is static: you need to define the whole computation graph before running your model. However, TensorFlow later introduced eager execution to add dynamic graph capability, and eager execution is the default in TensorFlow 2.x.

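PyTorch uses dynamic computation graphs: the graph is built on the fly as operations execute, so ordinary Python control flow such as loops and conditionals can shape the model at run time.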
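The define-by-run idea can be illustrated without any framework. The toy sketch below uses a hypothetical `Value` class (not PyTorch's actual autograd code) that records each operation as it executes, so the graph's shape follows ordinary Python control flow: the hypothetical `power(x, n)` builds a chain of `n` multiplications decided at run time, and `backward()` walks whatever graph was actually recorded to compute gradients.

```python
class Value:
    """A scalar that records the operations applied to it (toy define-by-run graph)."""

    def __init__(self, data, parents=(), backward_fn=None):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # nodes this value was computed from
        self._backward_fn = backward_fn  # pushes self.grad to the parents

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other))

        def backward_fn():
            self.grad += out.grad
            other.grad += out.grad

        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other))

        def backward_fn():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward_fn = backward_fn
        return out

    def backward(self):
        # Topologically sort the graph recorded during the forward pass.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Propagate gradients from the output back to the inputs.
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()


def power(x, n):
    # Ordinary Python loop: the graph depth depends on n, chosen at run time.
    result = Value(1.0)
    for _ in range(n):
        result = result * x
    return result


x = Value(3.0)
y = power(x, 4)  # a graph with 4 multiplications is built as this line runs
y.backward()
print(y.data, x.grad)  # 81.0 108.0
```

In a static-graph framework the loop would have to be expressed as a graph construct defined before execution (in TensorFlow 1.x, for example, `tf.while_loop`).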

Data Parallelism

One of the biggest features that distinguish PyTorch from TensorFlow is declarative data parallelism: you can use torch.nn.DataParallel to wrap any module, and it will be (almost magically) parallelized over the batch dimension. This way you can leverage multiple GPUs with almost no effort.
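As a rough mental model of what wrapping a module with `torch.nn.DataParallel(model)` does on each forward pass: the input batch is split along dimension 0, a replica of the module runs on each chunk in parallel, and the outputs are concatenated back in order. The pure-Python sketch below simulates that scatter/apply/gather pattern with threads standing in for GPUs; `scatter`, `model`, and `data_parallel` are hypothetical names, and no real devices or PyTorch internals are involved.

```python
from concurrent.futures import ThreadPoolExecutor


def scatter(batch, num_devices):
    """Split a batch (list of samples) into roughly equal chunks along dim 0."""
    chunk = max(1, -(-len(batch) // num_devices))  # ceiling division, at least 1
    return [batch[i:i + chunk] for i in range(0, len(batch), chunk)]


def model(samples):
    """Stand-in for one module replica: here it just squares each sample."""
    return [s * s for s in samples]


def data_parallel(module, batch, num_devices=2):
    chunks = scatter(batch, num_devices)
    # Each simulated "device" runs the same module on its own chunk.
    with ThreadPoolExecutor(max_workers=num_devices) as pool:
        outputs = list(pool.map(module, chunks))  # map preserves chunk order
    # Gather: concatenate the per-device outputs back into one batch.
    return [y for out in outputs for y in out]


print(data_parallel(model, [1, 2, 3, 4, 5], num_devices=2))  # [1, 4, 9, 16, 25]
```

Because the split happens only along the batch dimension, the module itself needs no changes, which is what makes the PyTorch approach feel almost automatic.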

On the other hand, TensorFlow allows you to fine-tune which device each operation runs on (for example, with tf.device). However, defining parallelism this way is far more manual and requires careful thought.

Distributed training

In TensorFlow, distributed training means creating a cluster of TensorFlow servers and distributing a computation graph across that cluster.

At the time of writing, TensorFlow's support for distributed training was more mature than PyTorch's; PyTorch now provides it through the torch.distributed package.
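With TensorFlow's `tf.distribute` API, each process in a cluster typically learns the cluster layout and its own role from the `TF_CONFIG` environment variable: a JSON object with a `cluster` map (job name to a list of `host:port` addresses) and a `task` entry identifying the current process. The sketch below only builds and sets that variable for the first of two workers, as `tf.distribute.MultiWorkerMirroredStrategy` expects; the hostnames and port are hypothetical placeholders, the helper `tf_config_for` is a hypothetical name, and TensorFlow itself is not imported.

```python
import json
import os

# Hypothetical addresses: replace with the real hosts of your cluster.
cluster = {
    "worker": ["worker0.example.com:12345", "worker1.example.com:12345"],
}


def tf_config_for(task_type, index):
    """Build the TF_CONFIG value for one process in the cluster."""
    return json.dumps({
        "cluster": cluster,
        "task": {"type": task_type, "index": index},
    })


# Each process sets its own TF_CONFIG before TensorFlow starts;
# here we configure the first worker (index 0).
os.environ["TF_CONFIG"] = tf_config_for("worker", 0)
print(os.environ["TF_CONFIG"])
```

Every worker runs the same training script; only `task.index` differs from machine to machine.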

Visualization

TensorBoard in TensorFlow is a great visualization tool. It is very useful for debugging and for comparing different training runs.

TensorBoard can:

- Display model graph

- Plot scalar variables

- Visualize distributions and histograms

- Visualize images

- Visualize embeddings

- Play audio

TensorBoard can display various summaries, which are collected via the tf.summary module. In TensorFlow 1.x, you define summary operations in the graph and use tf.summary.FileWriter to save them to disk.

On the PyTorch side, TensorBoard's competitor is Visdom. It is not as feature-complete, but it is a bit more convenient to use. PyTorch integrations with TensorBoard do exist (for example, torch.utils.tensorboard). You are also free to use standard plotting tools such as matplotlib and seaborn.
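For example, the loss curves of two training runs can be compared with plain matplotlib. In the sketch below the curves are synthetic placeholders generated from simple formulas (not real training results), the run names are hypothetical, and the output file name `loss_curves.png` is an arbitrary choice; in practice you would plot the values your own training loop records.

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # render to a file; no display is needed
import matplotlib.pyplot as plt

# Synthetic placeholder curves: substitute the losses your training records.
steps = list(range(1, 101))
runs = {
    "run_a": [1.0 / s for s in steps],
    "run_b": [1.0 / s ** 0.5 for s in steps],
}

fig, ax = plt.subplots()
for name, losses in runs.items():
    ax.plot(steps, losses, label=name)  # one line per training run
ax.set_xlabel("step")
ax.set_ylabel("training loss")
ax.set_yscale("log")
ax.legend()

out_path = os.path.join(tempfile.gettempdir(), "loss_curves.png")
fig.savefig(out_path)
plt.close(fig)
print("saved", out_path)
```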