In this article at OpenGenus, we have explained the 4 different types of Backpropagation.

Table of contents

- Introduction to Backprop

- Different types of Backprop

- Static backpropagation

- Recurrent backpropagation

- Resilient backpropagation

- Backpropagation through time

- Conclusion

Introduction to Backprop

Backpropagation is an algorithm used in supervised learning to train neural networks. It works by adjusting the weights and biases of the nodes. Input data is sent forward through the network to produce an output, the output is compared to the desired output, and the resulting error is propagated back through the network to change the nodes' weights and biases. The process repeats many times until the network's error is acceptably low.
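The loop described above (forward pass, comparison with the desired output, backward pass, weight update) can be sketched in Python with NumPy. This is only an illustration under assumptions that are not from the article: the XOR dataset, a 2-4-1 network with tanh and sigmoid activations, a squared-error loss, and the hypothetical function name `train_xor`.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_xor(steps=2000, lr=0.5, hidden=4, seed=0):
    """Train a tiny two-layer network on XOR (an assumed toy task).

    Returns the loss before the first update and the loss at the last step.
    """
    rng = np.random.default_rng(seed)
    X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
    T = np.array([[0.0], [1.0], [1.0], [0.0]])  # desired outputs

    W1 = rng.normal(0.0, 1.0, size=(2, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 1.0, size=(hidden, 1))
    b2 = np.zeros(1)

    losses = []
    for _ in range(steps):
        # Forward pass: send the inputs through the network.
        H = np.tanh(X @ W1 + b1)
        Y = sigmoid(H @ W2 + b2)

        # Compare the output with the desired output.
        losses.append(0.5 * np.mean(np.sum((Y - T) ** 2, axis=1)))

        # Backward pass: propagate the error from the output to the input layer.
        dS2 = (Y - T) * Y * (1.0 - Y) / len(X)  # gradient at output pre-activation
        dW2 = H.T @ dS2
        db2 = dS2.sum(axis=0)
        dH = dS2 @ W2.T
        dS1 = dH * (1.0 - H ** 2)               # gradient at hidden pre-activation
        dW1 = X.T @ dS1
        db1 = dS1.sum(axis=0)

        # Gradient-descent update of weights and biases.
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2

    return losses[0], losses[-1]

initial_loss, final_loss = train_xor()
print("loss before training:", initial_loss)
print("loss after training: ", final_loss)
```

The learning rate, network size, and number of steps are arbitrary choices for the sketch; a real task would tune them.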

Different types of Backpropagation (4 types)

There are four main types of backpropagation:

- Static backpropagation

- Recurrent backpropagation

- Resilient backpropagation

- Backpropagation through time

Static backpropagation

Static backpropagation involves calculating the gradient of the error function with respect to the network weights, which is then used to modify these weights and improve the performance of the network.

Core Concept:

The fundamental idea behind static backpropagation involves calculating the gradient of the error function with respect to the network weights. This is done by applying the chain rule of calculus, which allows us to represent the derivative of a function with respect to its argument in terms of the derivatives of its intermediate variables.

Equation:

The equation for static backpropagation is as follows:

∂E/∂w = ∂E/∂y * ∂y/∂s * ∂s/∂w

Where E is the error function, w is a weight of the network, y is the output of the network, and s is an intermediate variable (for example, the weighted sum of inputs that the activation function turns into y).
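As a small worked example of this chain rule, the sketch below evaluates the three factors for a single neuron and compares their product with a finite-difference estimate. The specific choices are assumptions made for illustration: s = w * x, a sigmoid activation y = sigmoid(s), and the squared error E = 0.5 * (y - target)^2.

```python
import math

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def error(w, x, target):
    """E = 0.5 * (y - target)^2 with y = sigmoid(s) and s = w * x."""
    y = sigmoid(w * x)
    return 0.5 * (y - target) ** 2

def chain_rule_gradient(w, x, target):
    """dE/dw computed as dE/dy * dy/ds * ds/dw."""
    s = w * x
    y = sigmoid(s)
    dE_dy = y - target        # derivative of the squared error
    dy_ds = y * (1.0 - y)     # derivative of the sigmoid
    ds_dw = x                 # derivative of s = w * x
    return dE_dy * dy_ds * ds_dw

def numeric_gradient(w, x, target, eps=1e-6):
    """Central finite difference, used only as a sanity check."""
    return (error(w + eps, x, target) - error(w - eps, x, target)) / (2.0 * eps)

w, x, target = 0.8, 1.5, 1.0
print("chain rule:       ", chain_rule_gradient(w, x, target))
print("finite difference:", numeric_gradient(w, x, target))
```

The two printed values should agree to several decimal places, which is a common way to check a hand-written gradient.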

Advantages:

The following are some benefits of static backpropagation:

- It can model complex nonlinear input-output relationships.

- Can be applied to a variety of tasks, including speech recognition, image recognition, and natural language processing.

- The network is capable of being trained via supervised learning, which enables it to learn from labeled data.

Disadvantages:

The following are some of the drawbacks of static backpropagation:

- For big networks, it may be computationally expensive.

- The error function may have local minima, where training can get stuck at a suboptimal value.

- Needs a significant volume of labeled data for training.

Applications:

Static backpropagation has several uses, such as the following:

- Image recognition: A neural network can be trained with static backpropagation to recognize images, for example by identifying handwritten digits in a dataset.

- Speech recognition: Static backpropagation can be used to train a neural network to convert speech into text.

- Natural language processing: Static backpropagation can be used to train a neural network to comprehend and produce natural language text.

Recurrent backpropagation

Recurrent backpropagation is a popular technique for training recurrent neural networks. It involves computing the gradients of the loss function with respect to the network's parameters and then changing the parameters to minimize the loss. Recurrent neural networks have an internal feedback loop that enables data to be transferred from one time step to the next. Because the parameters of the network are shared across time steps, the gradients from each time step must be combined properly in order to update the parameters.

Equation

Recurrent backpropagation uses a similar equation to the conventional backpropagation algorithm, but it calculates weight updates by adding the gradients from the current time step and all prior time steps. It is shown below:

Δwij(n) = -η ∂E(n) / ∂wij(n) - λ ∂E(n-1) / ∂wij(n) - λ^2 ∂E(n-2) / ∂wij(n) - ... - λ^(n-1) ∂E(1) / ∂wij(n)

where Δwij(n) is the weight update for the j-th neuron in the i-th layer at time step n, η is the learning rate, λ is the forgetting factor, and ∂E(t) / ∂wij(n) is the partial derivative of the error with respect to the weight wij at time step t.
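The sum in this equation can be written as a short Python function. The sketch follows the equation exactly as printed, so the learning rate η scales only the current gradient and the forgetting factor λ scales the earlier ones; the function name `recurrent_weight_update` and the list layout of the gradients are assumptions for illustration.

```python
def recurrent_weight_update(grads, eta, lam):
    """Weight update for one weight at time step n.

    grads[t - 1] holds dE(t)/dw for t = 1..n, so grads[-1] is the
    gradient at the current time step n (assumed layout).
    """
    n = len(grads)
    if n == 0:
        raise ValueError("at least one gradient is required")

    # Current time step, scaled by the learning rate.
    update = -eta * grads[-1]

    # Earlier time steps, each discounted by a power of the forgetting factor.
    for k in range(1, n):
        update -= (lam ** k) * grads[n - 1 - k]
    return update

# Three time steps with gradients dE(1)/dw = 4.0, dE(2)/dw = 2.0, dE(3)/dw = 1.0.
print(recurrent_weight_update([4.0, 2.0, 1.0], eta=0.1, lam=0.5))
```

With λ below 1, gradients from older time steps contribute less and less to the update.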

Advantages:

1. Recurrent backpropagation is a good choice for sequence problems because it can handle sequences of different lengths.

2. The feedback links in recurrent backpropagation allow the network to recall earlier inputs.

3. Recurrent backpropagation allows sequential data to be handled in real time.

Disadvantages:

1. Due to the feedback connections and the requirement to handle sequences of various lengths, training with recurrent backpropagation can be computationally expensive.

2. When the sequence length is large, recurrent backpropagation can suffer from vanishing or exploding gradients.

Applications:

1. Recurrent backpropagation is used for speech recognition, where the input is an audio signal.

2. Recurrent backpropagation is used to process text input that is written in natural language.

3. Recurrent backpropagation is used to forecast future values in time series data, such as stock prices and weather.

Resilient backpropagation

Resilient backpropagation is a neural network learning algorithm that adjusts weights and biases using only the sign of the gradient. Its fundamental principle is to adapt a separate step size for each weight according to whether the sign of that weight's gradient has changed since the previous update. The algorithm is designed to be resistant to redundant, noisy, or unsuitable training data.

Equation:

The weight update rule for Resilient backpropagation is given by:

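Δw_ij(t) = -sign(g_ij(t)) * Δ_ij(t)

where g_ij(t) is the gradient of the error function with respect to the weight w_ij at time t, sign() is the sign function, Δw_ij(t) is the weight change at time t, and Δ_ij(t) is a positive step size kept separately for each weight. The step size is increased by a constant factor when g_ij(t) has the same sign as g_ij(t-1) and decreased by a constant factor when the sign changes.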
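A minimal sketch of a sign-based update of this kind in Python with NumPy is given below. It follows the commonly described resilient backpropagation scheme in which each weight keeps its own positive step size that grows while the gradient sign repeats and shrinks when it flips. The growth and shrink factors (1.2 and 0.5), the step bounds, the function names, and the quadratic test function are all assumptions chosen for illustration.

```python
import numpy as np

def rprop_step(w, grad, prev_grad, step,
               eta_plus=1.2, eta_minus=0.5, step_min=1e-6, step_max=50.0):
    """One sign-based update; returns the new weights and step sizes."""
    agreement = grad * prev_grad

    # Same sign as last time: grow the step size (capped at step_max).
    step = np.where(agreement > 0, np.minimum(step * eta_plus, step_max), step)
    # Sign flipped (a minimum was jumped over): shrink the step size.
    step = np.where(agreement < 0, np.maximum(step * eta_minus, step_min), step)

    # Only the sign of the gradient is used, never its magnitude.
    w = w - np.sign(grad) * step
    return w, step

def minimize_quadratic(w0, iterations=100):
    """Minimize E(w) = sum(w^2), an assumed toy error function."""
    w = np.array(w0, dtype=float)
    step = np.full_like(w, 0.1)       # initial per-weight step size
    prev_grad = np.zeros_like(w)
    for _ in range(iterations):
        grad = 2.0 * w                # gradient of sum(w^2)
        w, step = rprop_step(w, grad, prev_grad, step)
        prev_grad = grad
    return w

print(minimize_quadratic([3.0, -2.0]))
```

Because the update ignores the gradient magnitude, rescaling the error function by a constant would leave the sequence of weights unchanged in this sketch.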

Advantages:

1. Since resilient backpropagation considers only the gradient's sign, it is less susceptible to noisy data and can converge more quickly than other gradient descent techniques.

2. Since it adjusts the step size of weight updates, it is more resistant to redundant or poorly conditioned training data.

3. Compared to other gradient descent techniques, resilient backpropagation requires less processing.

Disadvantages:

1. Since resilient backpropagation does not take into account the gradient's magnitude, it may become stuck in local minima.

2. More hyperparameter tuning may be necessary than with other gradient descent techniques.

Applications:

1. Resilient backpropagation has been used with many neural network topologies for a variety of tasks, including image classification, speech recognition, and stock price prediction.

2. It can be used to enhance the performance of deep learning architectures like convolutional neural networks (CNNs) and recurrent neural networks (RNNs).

3. Additionally, resilient backpropagation has been applied to a variety of optimization problems in robotics, computer vision, and control theory.

Backpropagation through time

Backpropagation through time is used in recurrent neural networks (RNNs) to compute gradients for each weight in the network. Like other training algorithms, it can be used to train a network on sequences of input/output patterns. However, an RNN also preserves a "memory" of earlier inputs in the sequence, which makes this approach especially useful for time series data. Feedforward neural networks are trained using the backpropagation technique, and Backpropagation through time extends that technique to RNNs.

Equation:

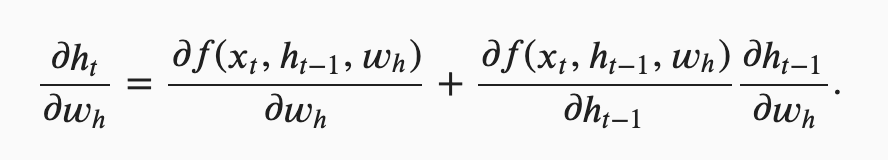

The Backpropagation through time algorithm involves the use of chain rule of differentiation to compute the gradient of the error function with respect to the weights of the recurrent neural network. The algorithm is based on the following equation:
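To make the use of the chain rule across time steps concrete, here is a small Python sketch for a scalar recurrent network. The model is an assumption chosen for illustration and is not taken from the article: h_t = tanh(w * h_(t-1) + u * x_t), with a squared error on the final hidden state only. The gradient from Backpropagation through time is compared with a finite-difference estimate.

```python
import math

def forward(w, u, xs, h0=0.0):
    """Unroll the scalar RNN h_t = tanh(w * h_(t-1) + u * x_t)."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    return hs  # hs[t] is the hidden state after t inputs

def loss(w, u, xs, target):
    return 0.5 * (forward(w, u, xs)[-1] - target) ** 2

def bptt(w, u, xs, target):
    """Gradients of the loss with respect to the shared weights w and u."""
    hs = forward(w, u, xs)
    dw, du = 0.0, 0.0
    dh = hs[-1] - target                  # dE/dh at the last time step
    for t in range(len(xs), 0, -1):       # walk backwards through time
        ds = dh * (1.0 - hs[t] ** 2)      # through tanh at step t
        dw += ds * hs[t - 1]              # same w is used at every step,
        du += ds * xs[t - 1]              # so its gradients are summed
        dh = ds * w                       # pass the error to step t - 1
    return dw, du

def numeric_dw(w, u, xs, target, eps=1e-6):
    """Central finite difference for dE/dw, used only as a check."""
    return (loss(w + eps, u, xs, target) - loss(w - eps, u, xs, target)) / (2.0 * eps)

xs, target = [0.5, -0.3, 0.8], 0.2
dw, du = bptt(0.7, 1.1, xs, target)
print("BPTT dE/dw:       ", dw)
print("finite difference:", numeric_dw(0.7, 1.1, xs, target))
```

The repeated multiplication by w and by the tanh derivative in the backward loop is what makes gradients shrink or grow over long sequences.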

Advantages:

1. Backpropagation through time is very successful on sequential and time series tasks such as speech recognition, stock price forecasting, and natural language processing. The network it trains can recall information from the past and can handle input sequences of varying length.

2. It is a commonly used and well-known algorithm that has been proven to function effectively in real-world settings.

3. Many popular deep learning frameworks, like TensorFlow and PyTorch, support Backpropagation through time.

Disadvantages:

1. Backpropagation through time can be computationally expensive and demands a lot of processing power and memory.

2. Propagating errors back over long sequences makes training unstable when the sequence length is large, which causes the vanishing or exploding gradient problem.

3. The approach favors short-term memory since it only propagates errors backwards as far as the length of the sequence.

Applications:

1. Natural Language Processing: Because Backpropagation through time can produce text sequences based on prior inputs, it is frequently used for language modeling, text categorization, and machine translation.

2. Speech Recognition: Backpropagation through time is employed in speech recognition tasks to recognize spoken words and translate them into text.

3. Stock Price Prediction: Using historical data trends and Backpropagation through time, one may forecast stock prices.

4. Music Generation: Using prior notes and musical patterns, Backpropagation through time can be used to create musical sequences.

Conclusion

Backpropagation is a popular algorithm for training artificial neural networks. It is based on the gradient descent technique for cost function optimization, which modifies the network's weights and biases. There are four main types of backpropagation: static backpropagation, recurrent backpropagation, resilient backpropagation, and Backpropagation through time.