Open-Source Internship opportunity by OpenGenus for programmers. Apply now.

We listed 10 aspects where spaCy shines better than NLTK. It also includes information when NLTK outsmarts spaCy.

Background

Before diving into comparing the two most popular Natural Language Processing (NLP) libraries, let's first understand what spaCy and NLTK are, who are the creators and why these packages were created on the first place?

(Feel free to skip this section if you already know these libraries)

Starting with Natural Language Toolkit, abbreviated as NLTK, is the earliest NLP library developed which offers a wide range of software, datasets (known as corpora) and documentation across all platforms for NLP tasks and computational linguistics. The birth of NLTK dates back to 2001 when Steven Bird was teaching Computational Linguistics at the University of Pennsylvania and Edward Loper began working as a teaching assistant for the same. Their aim was to develop a long-lasting software that enables easier teaching of NLP, but they ended up building one of the most widely used NLP package. It gives a wide scope for experimentation. Currently, it has been contributed and used by thousands of researchers all around the world for ground-breaking research.

The newest player in the game, spaCy is developed by Matthew Honnibol and Ines Montani when Matthew decided to quit academia and make NLP available to people and not just researchers. They made an open-source, multi-platform library that has gained immense popularity and is a leading competitor of NLTK in just 5-6 years. That's something! This industrial-strength, production-ready package written in Cython and Python believes in getting your work done swiftly and effectually for all NLP tasks like parsing, tokenization, lemmatization, text classification, entity recognition and linking, visualizations, etc. The most recent version v3 has support for state-of-the-art (SOTA) transformer models which powers the spaCy pipeline giving remarkable benchmarks. According to the most recent updates, spaCy has more than 23 million downloads and almost 21.2 thousand Github stars.

Why are we even talking about it?

As mentioned by Microsoft Research Asia, the next 10 years are going to be golden for NLP. It is one of the hottest research fields in Artificial Intelligence(AI). Hence, analyzing the current technology that enables this research is of prime importance. A decade ago, we wouldn't have talked about this. But with the advent and rise of spaCy, the comparison between both of these popular libraries has begun. Among the most used libraries for computational linguistics, Spacy and NLTK are at the top. Knowing how and where spaCy lies ahead of NLTK is crucial as it thrived and established its position while NLTK, a strong contender was in the market.

Let us get to the bottom of our main question - Why SpaCy over NLTK?

As I have set the stage, let us understand the pros of spaCy that beat NLTK. After using both the libraries extensively, I have jotted down the following 10 points to prove my stand.

Before you move further, I would like to say that I don't intend to belittle NLTK in any way. Its stride since 20 years is still going and theres no better way to prove its worth.

1. Slow and steady wins the race, but not always!

NLTK has each and every tool and technique one would need to perform NLP. It is an enormous library with a wide-range of wrappers, stemmers, parsers and what not. It's a candy store and every NLP practitioner is bound to be awestruck by looking at so many options. But its wise to choose the best of what's available. The sad truth with NLTK is that the best isn't there in the lot! Additionally, NLTK is a tortoise and to be fair it wasn't built keeping speed in mind as it was a made for pedagogical reasons. Now, having tried and tested, I can totally vouch for what Matthew had rightly stated that we needed a library that stays at par with the hot and updating field that NLP is.

Enter : spaCy! It has mindboggling accuracies and speed for each specific pipeline task that every NLP practitioner dreams of! Checkout the benchmarks on their official website and I'll wait until your jaw drops! It gives SOTA algorithms packed up in en_core_web_trf model with a liberty to customize the pipeline. spaCy's increasing popularity proves its prowess.

2. Amazing Documentation, really AMAZING!

The only light in the darkness when one starts off with a new library and even later is its documentation. Even though we have online tutorials but lets be honest, if one wants to create something from the scratch or novel, one has to dig into the documentation, there's no other go! Hence, it is of prime importance to compare the documentations of spaCy and NLTK. spaCy's documentation is clear, crisp, succinct,well-organized and cover-all. It consists of detailed explanations, valuable tips and tricks, helpful code modules and illustrations, and video tutorials to get started. The makers have cleared various FAQ's whenever and wherever necessary leaving no doubt in the readers mind. The colorful and interactive user interface adds onto to a great user experience.

On the contrary, NLTK has an inconsistent, difficult to follow and a quite incomplete documentation for certain packages which takes a beginner sometime to understand. The book written by the creators regarding using NLTK for NLP is very detailed and helps a newbie to understand concepts in depth. Still, it lacks the guidance that helps someone to implement NLP. This makes NLTK difficult to learn and use as there's no clear NLP pipeline mentioned anywhere in the documentation. No doubt, it is loaded with code snippets and examples but that is not the case for all packages. Additionally, there isn't any documentation regarding the structure and organization of each corpora in the the large database in NLTK data which might restrict the NLP practitioner to leverage the full potential of the dataset. Furthermore, an introduction of each pipeline API is given at the end of the page after all the different algorithmic APIs to perform that NLP task instead of the beginning. Say for example, tag module is introduced after explaining APIs for Brill's Tagger, CRF Tagger, HMM Tagger, HunPos Tagger, Perceptron Tagger, among others. There is a scope of improvement in the documentation for NLTK and the most plausible reason for the non-uniformity is the continuous additions to the library by several contributors.

3. The real internationalization!

Though at nascent stages spaCy supported only English(v1), but with the latest version(v3) it supports 17 languages for statistical modeling and 64+ languages for "Alpha tokenization". On the other hand, although NLTK supports more languages compared to spaCy, it is not extending the support to all the modules and algorithms. Only spoonful packages among the ocean of packages provide multi-lingual support and all of them don't support each and every language that the others support. For eg. nltk.parse.stanford module for parsing, tokenization and tagging, nltk.stem.snowball module for stemming provides stemmers for around 15 languages including Arabic, English, Romanian, Swedish, etc., nltk.test.unit gives methods for evaluating POS tagging and translation for Russian, English, etc. (not all the 15 languages as Snowball stemmer) including languages unknown/unspecified to the models and some others. But rest of the chunking, classifying, clustering, parsing, sentiment analyzing, stemming, tagging, transformation based learning, unit testing, translating and chatbot based algorithms support only English.

Hence, internationalization can't be attributed to the whole suite of text processing libraries provided by NLTK. However, spaCy gives trained pipelines and a freedom to customize it for all the languages that it supports ensuring reliable and consistent internationalization.

Bonus: spaCy even gives a pre-trained language model for multi-languages for named entities!

4. Right here, there's some m15take!

Error: Expected a string, found an integer.

I still remember when I started out with NLTK and tried exploring a new corpus and failed to understand why a particular method wasn't valid. And this has been the case in many instances where I spent a lot of time debugging erros while training my tagger in NLTK. Coming to spaCy, v3 has an incredible error handling and validation support giving spaCy an upper hand. It has type hints support from Python 3 and static or compile-time type checker like Mypy for validating and giving friendly error messages for the same. The policy followed is "mistakes as they happen" which is very helpful. Further, Thinc, the lightweight deep learning library running under the hood assists in model definitions by guiding you the expected types of all the hyper parameters, multi-dimensional arrays; it even has custom data-types like Floats3d (a 3-D array of floating-point values) and various other training parameters. Thinc provides dynamic error finding support too. With addition of linting in your favorite code editor, you can experience a smooth coding experience like never before! This saves time and energy of scrounging through the documentation and stack traces figuring out what went wrong and results in efficient, bug-free code.

5. Hassle Free Downloads

The NLTK data consists of various corpora, book, popular packages, packages for running tests, third-party data packages and all modules available in NLTK. Though it is a simple user interface but one might find it over-whelming when they look at it and end up running nltk.download('all') which stands for downloading all the NLTK data. This leads to having around 6GB of NLTK data filled up in the memory when in reality one would be working with just one corpora at a time and only some of the packages. I have seen most of the NLP practitioners using NLTK doing this. Or else, one would spend a lot of time deciding what has to be downloaded. The worst part is one cannot perform any NLP task without downloading the NLTK Data.

spaCy comes to the rescue. It provides all essential pipeline components with just the download of spaCy. One could choose to download a language model out of small(sm), medium(md), large(lg) or recently added transformer-based(trf) model. One can even start with a blank language model and begin with training the entire pipeline on their own.

nlp = spacy.blank('<language_name>')

6. Better Approach towards NLP

Both these famous packages have a very different outlook at conducting NLP. Considering the same NLP pipeline of operations and input to the two, the content that flows through both of them is very different. NLTK follows a basic datatype-oriented approach where output of say, tokenization is a list of strings/sentences, tagging is a dictionary of string mapped to its tag, etc. This means that we always receive an integer, string, list or a dictionary and have to make a sense and create our own data structures and work on them even though every NLP task would follow the same steps.

But, spaCy works on object-oriented approach. It works on Document objects, Vocab and Language classes. Every language is a class which consists of all pre-trained commonly performed pipeline components like entity linker & recognizer, dependency parser, tagger, pattern matcher, lemmatizer, etc. that processes input text resulting in a document object that consists of annotations like is_stopword, noun_chunks, sentiment and methods like sents(). One can even find the similarity between document objects using document.similarity() method. The vocab data structure is same as the vocabulary for a specific language i.e it contains the word vectors that define the language to the pipeline. One can obtain strings, lexemes, vectors, etc. from the Vocab object. The approach gives two benefits. One, the data structures and working flow is customized for NLP and hence makes it simpler, easy to understand and manipulate for even advanced NLP. Two, this approach obeys the well-accepted object oriented programming style and standards hence is updated and follows the best practices.

7. Everything is in "Context"!

I just talked about word vectors and that is all this point is about. Word embeddings/vectors are key to any NLP task as it gives context. For a machine, every word is treated as a string that is stored at a location in its memory. It doesn't understand its meaning individually as well as in the context of the input text. This is where word embeddings come into picture. They give a useful numerical representation that makes the machine understand the string's context. And just like that, the mere "string" becomes a "word in that particular text" for the machine. Commonly known pretrained word vectors are GloVe, FastText, Word2Vec in 2 architectures namely Continuous Bag Of Word(CBOW) and Continuous Skip-Gram Model, ELMo, etc.

Coming to NLTK, it innately doesn't have any support for word vectors. One has to search for options outside the library like using Gensim, a popular topic-modeling library or any of the above mentioned embeddings in conjugation with algorithms to create the pipeline that understands context. However, spaCy has Word2Vec embedding already in the language models that make the job so simple. It also gives flexibility to train our own word-embedding and update the Vocab object(since it contains the word-vectors). It also provides various Tok2Vec options as an add-on.

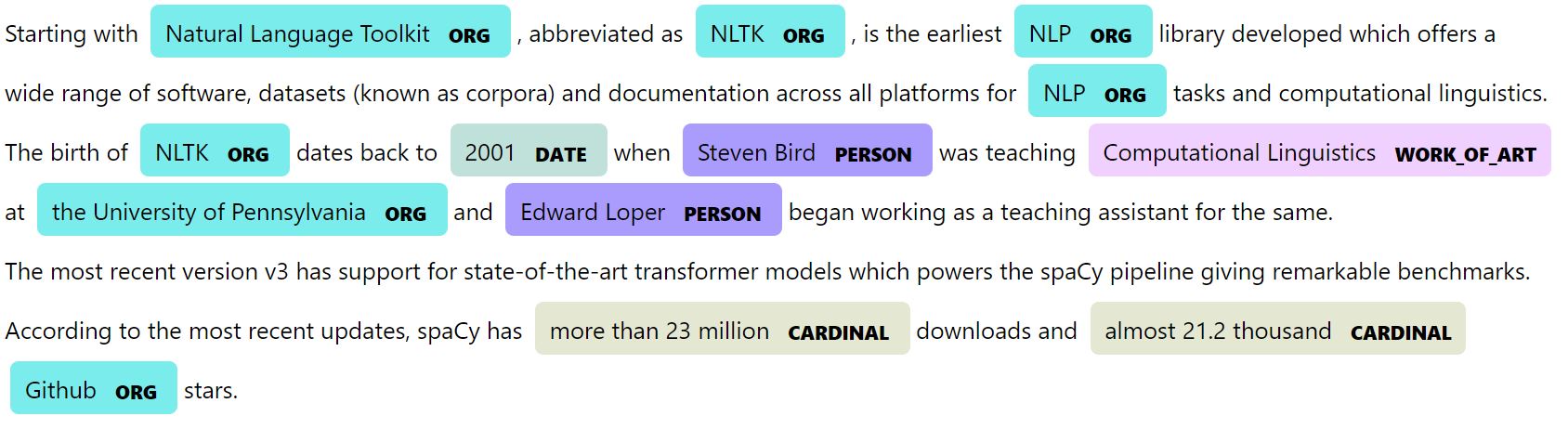

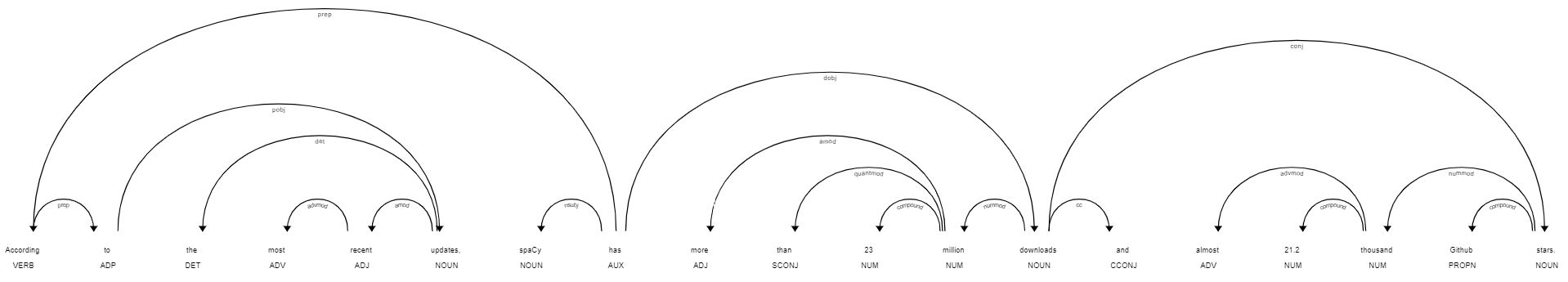

8. Power 2X

An NLP package becomes more powerful when there are built-in visualizers to enhance understanding and presentation. It helps in visualizing parsed trees, entity linking, etc. that aids in improving the training pipeline or statistical model and error fixing significantly which is difficult to interpret sans the graphs. NLTK doesn't have any support for visualization. One needs to use the excellent python built-in plotting libraries like Matplotlib, Seaborn, NetworkX, Bokeh, Holoviews, etc. Moreover, spaCy has a library under its parent Explosion AI known as displaCy for dependency parsing and dispaCyENT for named entity recognition. Figure 1 & 2 show beautiful colorful graphs drawn through the mentioned code. These graphs can be further rendered as HTML, hosted on web applications, downloaded as SVG and also viewed in Jupyter notebooks.

import spacy

nlp = spacy.load('en_core_web_sm')

doc = nlp(u"Starting with Natural Language Toolkit, abbreviated as NLTK, is the earliest NLP library developed which offers a wide range of software, datasets (known as corpora) and documentation across all platforms for NLP tasks and computational linguistics. The birth of NLTK dates back to 2001 when Steven Bird was teaching Computational Linguistics at the University of Pennsylvania and Edward Loper began working as a teaching assistant for the same.\n The most recent version v3 has support for state-of-the-art transformer models which powers the spaCy pipeline giving remarkable benchmarks. According to the most recent updates, spaCy has more than 23 million downloads and almost 21.2 thousand Github stars.")

spacy.displacy.serve(doc, style="ent")

Fig1: dispaCy visualization of spaCy's Named Entity recognizer

import spacy

nlp = spacy.load('en_core_web_sm')

doc = nlp(u"According to the most recent updates, spaCy has more than 23 million downloads and almost 21.2 thousand Github stars.")

spacy.displacy.serve(doc, style="dep"))

Fig2: dispaCy visualization of spaCy's Dependency Parser

9. End-to-end project architecture

We all love git. Why do we love it? Because it gives us the flexibility for collaboration, version-control, project packaging and deployment. Now, every project has these requirements; be it a research or an industrial one. NLTK doesn't have any data as well as pipeline packaging and deployment, and workflow management system. spaCy has listened to our plea to bring a one stop solution where development and deployment specialized for Machine Learning(ML) projects is possible. The recently released version of spaCy has taken a major leap in the NLP world by, quoting their documentation, "let you manage and share end-to-end spaCy workflows for different use cases and domains, and orchestrate training, packaging and serving your custom pipelines." With the help of an open source version control library DVC one can keep track of all changes in data as well as code files. Currently, it supports only one workflow but in future we can expect multiple workflows for a single project. It even allows you to host your data in cloud databases like Google Cloud Platform(GCP), Amazon Web Services(AWS), Microsoft Azure, Hadoop Distributed File System(HDFS), Simple Storage Service (S3) etc. It automatically caches the results avoiding reruns, focuses on reproducibility and CI/CD which makes it a perfect solution for all use-cases.

10. All in One(almost)!

Having seen all the previous points, it is very clear why NLTK can be used productively for only research and educational purposes. If one uses NLTK for industrial use they would face challenges due to its slow speed, low accuracies, inability for workflow management and bag of algorithms but no best one. spaCy on the other hand shows all qualities for being production ready with research ability. Though their website states that it isn't for research purposes, but with the latest version which allows declarative configuration system, trainable and customization pipelines, SOTA transformer-support and custom model creation in any frameworks like Tensorflow, Pytorch, etc. with the existing positives like blank model creation, support for training word embedding layer, multi-lingual and multi-domain support, all Natural Language Understanding(NLU) pipeline tools with flexibility of customization, ability to add new components to the NLP pipeline, etc it checks most of the boxes for research-use. Still, it is not there yet as spaCy doesn't provide the variety of algorithms and corpora that NLTK does. Anyways, in comparison to NLTK, it still has an advantage.

NLTK - When does it win over SpaCy ?

Knowing this is equally important to make an informed decision, hence I wish to talk about when NLTK is better than spaCy.

Firstly, Natural Language Generation(NLG) which includes chatbots and language translation cannot be performed by spaCy. NLTK has nltk.chat module that enables simple question answering through pattern matching on sentences. It also has packages for machine translation under nltk.translate. It has packages that help in evaluation of NLG tasks using Bilingual Evaluation Understudy (BLEU). Secondly, spaCy consumes a lot of memory gradually. This won't be a problem if one is using cloud based coding platforms for example Google Colab, but for running in your personal PC, this might be a problem when the data is extremely huge. Lastly, NLTK has gained experience, trust and research support in the past 2 decades that spaCy can't beat this early in the market. NLTK is a favorite of many NLP practitioners world-wide because of its variety and volume of algorithms and data it provides.

Outro

spaCy has come at par with NLTK for many NLP tasks in quite less time of its release. This article at OpenGenus counts down 10 aspects where spaCy shines better than NLTK. It also includes information when NLTK outsmarts spaCy.

Please feel free to provide constructive feedback and suggestions in the comments. See you in the comments :)

References

- More information about NLTK can be found on : Natural Language Processing with Python – Analyzing Text with the Natural Language Toolkit by Steven Bird, Ewan Klein, and Edward Loper

- Cool blogs and updates on Spacy