In this article, we have explained the concept of Conditional Generative Adversarial Nets in depth.

Table of contents:

- GANs or Generative Adversarial Networks

- Architecture of a Generative Adversarial Network

- Conditional GANs

- Image Labeling Using Multi-modal Learning

Pre-requisites:

- Beginner's Guide to Generative Adversarial Networks

- Applications of GANs

- Transformer Networks: How They Can Replace GANs

GANs or Generative Adversarial Networks

GANs, or Generative Adversarial Networks, are a generative modeling strategy that uses deep learning methods like convolutional neural networks to learn from a collection of training data and produce new data with the same features as the training data.

The generator and the discriminator are two neural networks that compete against each other in generative adversarial networks.

The generator is trained to produce fake data, while the discriminator is trained to tell the generator's fake data apart from real samples. The generator is penalized when it produces data that the discriminator immediately recognizes as implausible, such as an image that is plainly not a face.

The generator improves its ability to generate increasingly convincing examples over time.

Architecture of a Generative Adversarial Network

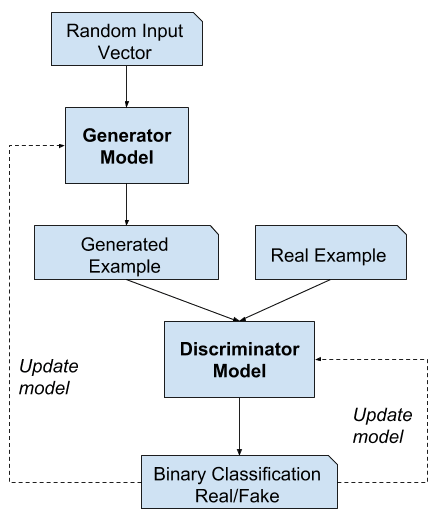

A generative adversarial network comprises two neural networks:

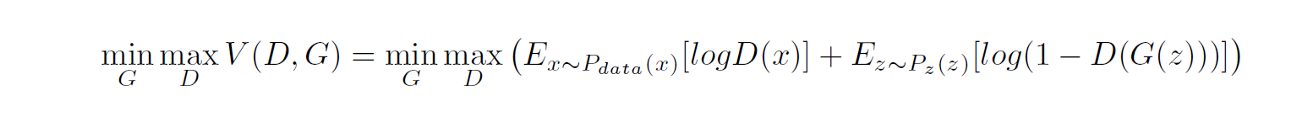

From a random seed, the generator learns to produce plausible fake data. The discriminator is trained using the fake examples produced by the generator as negative examples. The two networks play a two-player minimax game whose objective function is Eq 1, with the following notation:

- D = Discriminator

- G = Generator

- p_z(z) = Input noise distribution

- p_data(x) = Original data distribution

- G(z) = Generator's output

- D(x) = Discriminator's output (the estimated probability that x is real)
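Assuming the standard formulation from the original GAN paper, Eq 1 can be written with the symbols listed above as follows. The discriminator D tries to maximize this value, while the generator G tries to minimize it:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{data}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))]
$$

The first term rewards D for assigning high probability to real samples x. The second term rewards D for assigning low probability to generated samples G(z), and it is the only term that G can influence.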

The discriminator, in turn, learns to tell the difference between fake and real data.

The discriminator penalizes the generator if it delivers implausible results. The discriminator network receives a random mix of the generator's fake examples and genuine instances from the training set.

The discriminator's input carries no indication of whether it came from the generator or the training set. Early in training, the discriminator can easily spot the generator's fake output. Because the generator's output is fed directly into the discriminator as input, we can apply the backpropagation algorithm through the whole system and update the generator's weights whenever the discriminator classifies one of the generator's outputs.

The generator's output becomes more realistic with time, and it becomes more adept at deceiving the discriminator. The generator's outputs eventually become so lifelike that the discriminator is unable to tell them apart from real ones.
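The training dynamics described above can be illustrated with a minimal sketch that estimates the minimax value from a batch of discriminator outputs. The function name `value_function` and the sample probabilities are made up for illustration; a real implementation would use a deep learning framework and actual network outputs.

```python
import math

def value_function(d_real, d_fake):
    """Estimate the GAN value V(D, G) from a batch of discriminator outputs.

    d_real: D(x) for real samples x, each strictly between 0 and 1
    d_fake: D(G(z)) for generated samples, each strictly between 0 and 1
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# Hypothetical discriminator outputs, chosen only for illustration.
# Early on, the discriminator is confident: real -> near 1, fake -> near 0.
early = value_function(d_real=[0.95, 0.90, 0.97], d_fake=[0.05, 0.10, 0.02])

# Later, the discriminator cannot tell the two apart and outputs 0.5.
late = value_function(d_real=[0.5, 0.5, 0.5], d_fake=[0.5, 0.5, 0.5])

print(f"early training: V = {early:.3f}")
print(f"late training:  V = {late:.3f}")
```

When the discriminator can do no better than guessing (every output is 0.5), the estimate equals -log 4, roughly -1.386, which is lower than the early value. This matches the intuition that the generator is driving the value down as its samples become harder to detect.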

Conditional GANs

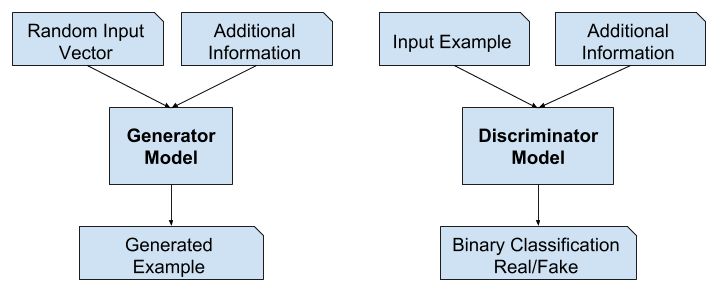

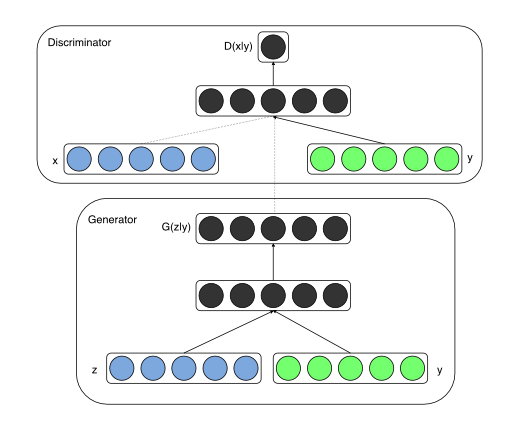

Generative adversarial networks can be extended to a conditional model if both the generator and the discriminator are conditioned on some additional information y, such as class labels or data from other modalities. Conditioning can be done by feeding y into both the discriminator and the generator as an additional input layer.

The CGAN generator is similar to the DCGAN generator, except that it also receives a one-hot vector that conditions its output. The generator can then be trained to produce new examples from the input domain, given a random vector from the latent space together with the conditioning input.

The discriminator is conditioned as well: it is given an input image (real or fake) together with the additional input. When the conditional input is a class label, the discriminator expects the image to belong to that class, which in turn teaches the generator to produce samples of that class in order to fool the discriminator.
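One simple way to realize this conditioning is to append a one-hot encoding of the label y to each network's input. The sketch below shows only that input-construction step, using plain Python lists. The helper names `one_hot` and `condition`, the sizes, and the stand-in image are assumptions for illustration; actual models may instead pass y through its own embedding or hidden layer before combining it with the other input.

```python
import random

def one_hot(label, num_classes):
    """Return a one-hot list encoding of an integer class label."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

def condition(vector, label, num_classes):
    """Append the one-hot label y to an input vector (noise z or image x)."""
    return list(vector) + one_hot(label, num_classes)

NUM_CLASSES = 10  # assumed: for example, ten digit classes
LATENT_DIM = 4    # assumed: a tiny latent size to keep the example readable

rng = random.Random(0)
z = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]  # noise sample from p_z
x = [0.0, 0.5, 1.0]  # stand-in for a flattened real or generated image

# Both networks see the same label y = 3 alongside their usual input.
generator_input = condition(z, label=3, num_classes=NUM_CLASSES)
discriminator_input = condition(x, label=3, num_classes=NUM_CLASSES)

print(len(generator_input))      # LATENT_DIM + NUM_CLASSES
print(len(discriminator_input))  # len(x) + NUM_CLASSES
```

Because the discriminator sees the label next to the image, a generated image of the wrong class is easy to reject, which is what pushes the generator toward the requested class.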

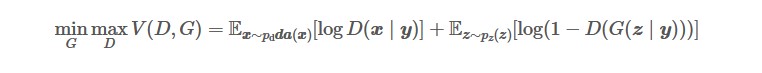

The objective function of the resulting two-player minimax game is Eq 2.
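Assuming the formulation from the original conditional GAN paper, Eq 2 has the same form as the unconditional objective, with both networks additionally conditioned on y:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{data}(x)}[\log D(x \mid y)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z \mid y)))]
$$

Here D(x | y) is the discriminator's output for sample x given the condition y, and G(z | y) is the generator's output for noise z given the same condition.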

Figure 1: Conditional adversarial net

There are two main reasons to use class label information in a GAN model.

First, additional information that is associated with the input images, such as class labels, can be used to improve the GAN. This improvement could take the form of more stable training, faster training, or higher-quality generated images.

Second, class labels can be used to generate images of a specific type in a deliberate, targeted manner.

A drawback of an unconditional GAN is that it can only generate a random image from the domain; there is no direct way to control which kind of image is produced.

There is a link between locations in the latent space and the generated images, but it is complicated and difficult to map.

Image Labeling Using Multi-modal Learning

Despite the recent successes of supervised neural networks (and convolutional networks in particular), scaling such models to accommodate a very large number of predicted output categories remains a challenge.

Another issue is that much of the previous research has concentrated on learning one-to-one mappings from input to output.

Many fascinating problems, on the other hand, are better thought of as probabilistic one-to-many mappings.

In the case of image labeling, for example, there could be a variety of tags that could be attached to a given image, and different (human) annotators could use different (but often similar or related) labels to describe the same image.

One way to help address the first issue is to draw on additional information from other modalities, for example by using natural language corpora to learn a vector representation for labels in which geometric relations are semantically meaningful.

When making predictions in such spaces, we benefit from the fact that even a wrong prediction is often still 'close' to the truth (for example, predicting 'table' instead of 'chair'), as well as the fact that we can naturally generalize to labels that were not seen during training.

According to "A deep visual-semantic embedding model", even a simple linear mapping from image feature space to word representation space can improve classification performance.

One way to address the second issue is to use a conditional probabilistic generative model, in which the input is taken as the conditioning variable and the one-to-many mapping is instantiated as a conditional predictive distribution.
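To make the embedding-space idea for the first issue more concrete, here is a minimal sketch of predicting labels by mapping image features linearly into a label embedding space and ranking labels by cosine similarity. All vectors, the matrix `W`, and the helper names are hypothetical toy values for illustration; real systems learn label vectors from text corpora and learn the mapping from data.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def linear_map(W, features):
    """Project image features into the label embedding space (W times features)."""
    return [sum(w * f for w, f in zip(row, features)) for row in W]

def nearest_labels(embedding, label_vectors, k=2):
    """Return the k labels whose vectors are most similar to `embedding`."""
    ranked = sorted(
        label_vectors,
        key=lambda name: cosine(embedding, label_vectors[name]),
        reverse=True,
    )
    return ranked[:k]

# Hypothetical 2-D label embeddings: the furniture labels sit close together.
label_vectors = {
    "chair": [1.0, 0.1],
    "table": [0.9, 0.2],
    "dog": [0.0, 1.0],
}

# Hypothetical linear map from 3-D image features to the 2-D label space.
W = [[1.0, 0.0, 0.5],
     [0.0, 1.0, 0.0]]

image_features = [0.8, 0.1, 0.4]  # stand-in for CNN features of a chair photo
predicted = linear_map(W, image_features)
print(nearest_labels(predicted, label_vectors))  # → ['chair', 'table']
```

The runner-up label is 'table' rather than 'dog', which illustrates why errors in such a space tend to be semantically close to the correct answer. A new label can also be added to `label_vectors` without retraining the mapping, as long as a vector for it is available.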

With this article at OpenGenus, you should now have a solid understanding of Conditional Generative Adversarial Nets.