
In this article, we take a closer look at evaluation metrics for computer vision tasks, specifically the metrics used for panoptic segmentation: panoptic quality (PQ), segmentation quality (SQ) and recognition quality (RQ).

Table of contents :

1. What is panoptic segmentation

2. Panoptic quality (PQ)

3. Segmentation quality (SQ)

4. Recognition quality (RQ)

5. Why Panoptic quality (PQ)

6. Summary

Image classification is not the only task of interest in computer vision; there are also more complex tasks such as object detection and image segmentation. Here we focus on image segmentation and its evaluation metrics.

1. What is panoptic segmentation :

Image segmentation is the general concept of dividing an image into multiple segments or regions, and there are 3 types of it.

The 3 types are :

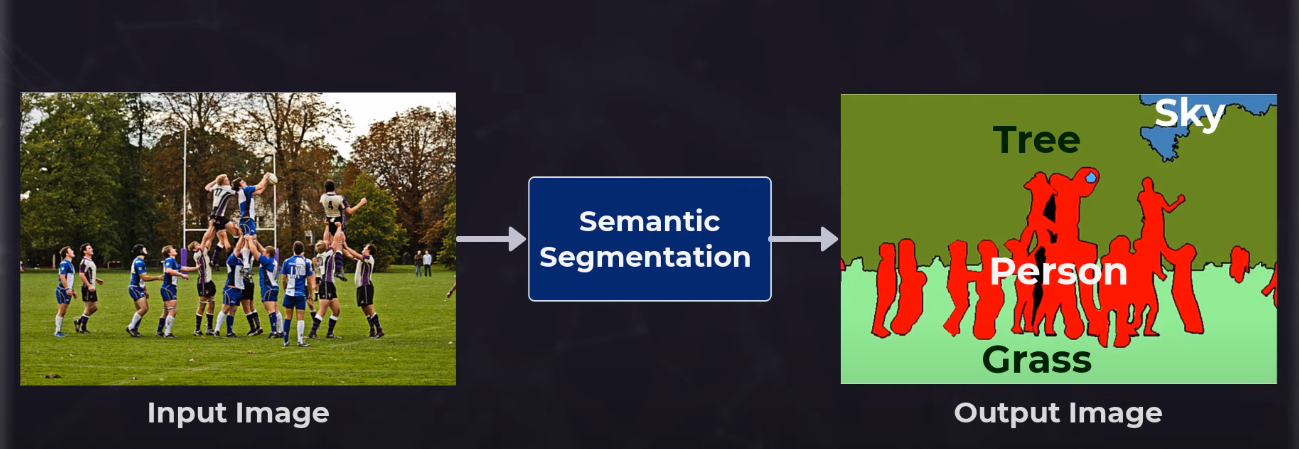

Semantic segmentation : labels every pixel with the class it belongs to, but does not differentiate between objects of the same class.

Instance segmentation : similar to object detection in that it detects more than one object in the image, but instead of bounding boxes the output is a mask for each object, and it differentiates between objects of the same class.

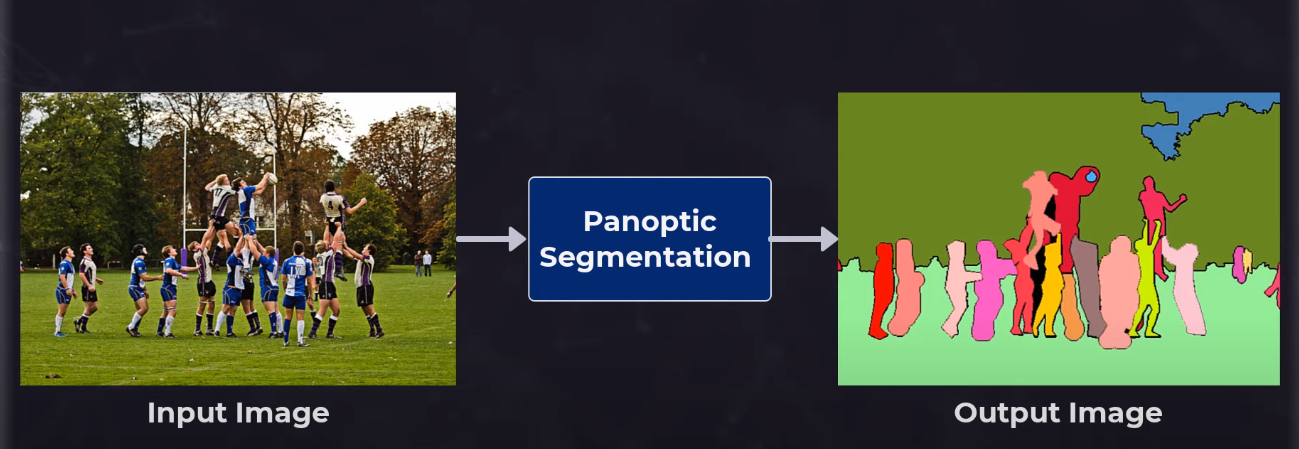

Panoptic segmentation : a combination of the previous two techniques, semantic segmentation and instance segmentation, that gets the best of both. In panoptic segmentation the model assigns each pixel a class label and, if a class has more than one instance, differentiates between them.

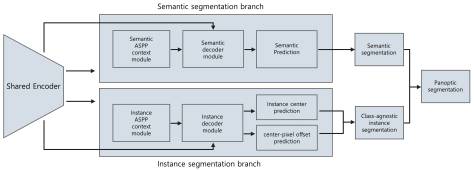

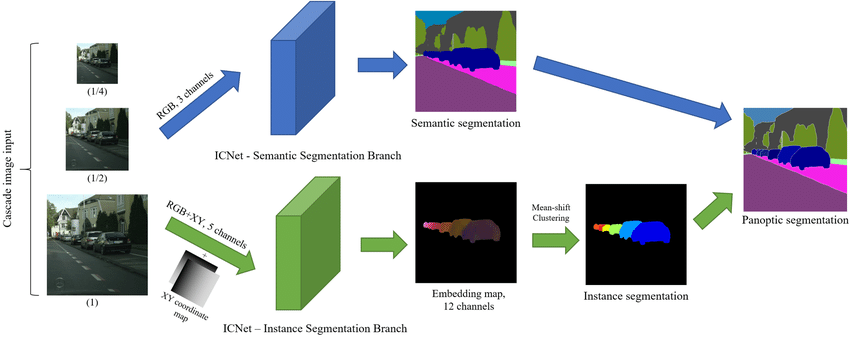
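To make the difference between the three types concrete, here is a minimal sketch of how a panoptic labelling could be stored and how the semantic and instance views fall out of it. The tiny 2x4 "image", the class names and the `(class_name, instance_id)` layout are assumptions made for illustration only, not a format used by any particular dataset or library.

```python
# Hypothetical 2x4 image: every pixel carries (class_name, instance_id).
# instance_id is None for classes whose instances are not separated.
panoptic = [
    [("sky", None), ("sky", None), ("sky", None), ("sky", None)],
    [("cat", 1), ("cat", 1), ("cat", 2), ("road", None)],
]

# Semantic view: keep only the class, so the two cats become indistinguishable.
semantic = [[cls for cls, _ in row] for row in panoptic]

# Instance view: group the pixels of each separately identified object.
instances = {}
for r, row in enumerate(panoptic):
    for c, (cls, inst) in enumerate(row):
        if inst is not None:
            instances.setdefault((cls, inst), set()).add((r, c))

print(semantic[1])        # ['cat', 'cat', 'cat', 'road']
print(sorted(instances))  # [('cat', 1), ('cat', 2)]
```

The panoptic structure keeps both pieces of information at once: a class for every pixel, and an instance id wherever objects of the same class need to be told apart.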

A model used for panoptic segmentation may look like the one below, which has two branches:

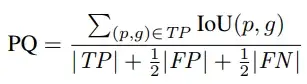

2. Panoptic quality (PQ) :


After training the model, we need to evaluate its performance. This is where panoptic quality comes in:

PQ = ∑(p,g)∈TP IoU(p,g) / (TP + 0.5 FP + 0.5 FN)

where :

- p is the predicted segment and g is the ground-truth segment

- IoU (Intersection over Union) is the overlap between p and g divided by the area covered by either of them:

IoU(p,g)=|p∩g|/|p∪g|

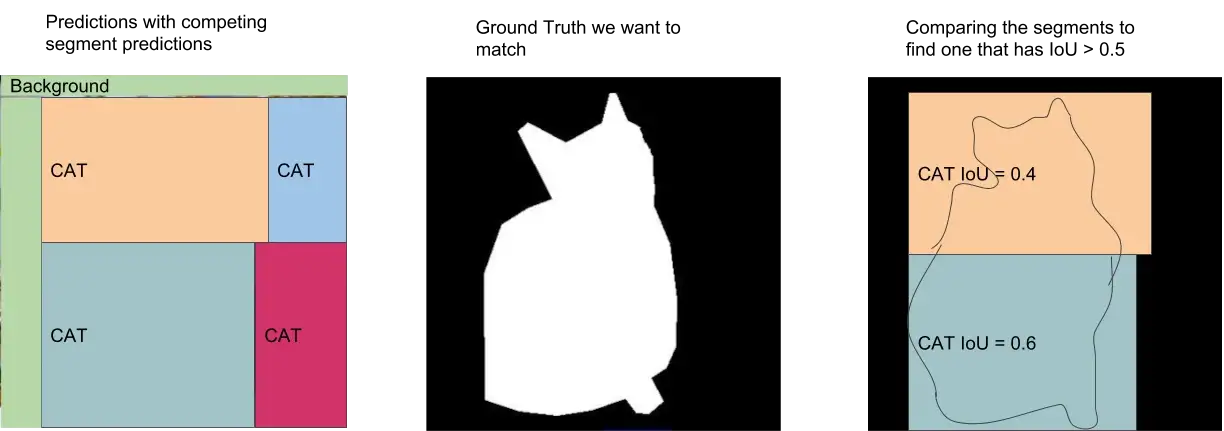
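The IoU definition maps directly onto set operations. The following is a minimal sketch that assumes each segment is represented as a Python set of `(row, col)` pixel coordinates; the function name `iou` and the example segments are illustrative choices, not part of any library.

```python
def iou(p, g):
    """IoU of two segments given as sets of (row, col) pixel coordinates."""
    union = p | g
    if not union:  # both segments empty: treat IoU as 0 in this sketch
        return 0.0
    return len(p & g) / len(union)

# Hypothetical 2x3 predicted segment and a ground-truth segment shifted by one column.
pred = {(r, c) for r in range(2) for c in range(0, 3)}
gt = {(r, c) for r in range(2) for c in range(1, 4)}

print(iou(pred, gt))    # 4 shared pixels / 8 pixels in the union = 0.5
print(iou(pred, pred))  # identical segments = 1.0
```

Note that an IoU of exactly 0.5, as in the first call, is not enough for a match, because the threshold used for panoptic quality is strict (IoU > 0.5).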

After segment matching (discussed later), a predicted segment and a ground-truth segment count as a correct match only if their IoU > 0.5 (the threshold).

- TP : a true positive is a predicted segment p that is matched with a ground-truth segment g (IoU > 0.5)

- FP : a false positive is a predicted segment p that is not matched with any ground-truth segment (no g with IoU > 0.5)

- FN : a false negative is a ground-truth segment g that the model failed to predict, i.e. no predicted segment is matched with it
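The three definitions above can be sketched as a small counting routine. This is a simplified illustration under stated assumptions: segments are sets of `(row, col)` pixels, all segments belong to a single class, and neither the predictions nor the ground-truth segments overlap among themselves. The names `iou` and `match_segments` are hypothetical helpers.

```python
def iou(p, g):
    """IoU of two segments given as sets of (row, col) pixel coordinates."""
    union = p | g
    return len(p & g) / len(union) if union else 0.0

def match_segments(preds, gts, threshold=0.5):
    """Return (tp_ious, fp, fn) for the segments of one class.

    tp_ious holds the IoU of every matched pair, fp counts unmatched
    predictions and fn counts unmatched ground-truth segments.
    """
    tp_ious = []
    matched_preds = set()
    for g in gts:
        for pi, p in enumerate(preds):
            if pi in matched_preds:
                continue
            score = iou(p, g)
            if score > threshold:  # strict threshold, as in the text
                tp_ious.append(score)
                matched_preds.add(pi)
                break  # with non-overlapping segments no other match exists
    fp = len(preds) - len(tp_ious)
    fn = len(gts) - len(tp_ious)
    return tp_ious, fp, fn

# Hypothetical example: one good prediction, one spurious one, one missed object.
gts = [{(0, 0), (0, 1), (0, 2), (0, 3)}, {(2, 0), (2, 1)}]
preds = [{(0, 0), (0, 1), (0, 2)}, {(5, 5)}]
print(match_segments(preds, gts))  # ([0.75], 1, 1)
```

The first prediction covers 3 of the 4 ground-truth pixels (IoU 0.75) and becomes a TP, the stray pixel at `(5, 5)` is an FP, and the second ground-truth segment is an FN.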

In the PQ formula, the numerator is the sum of the IoU values over the TP pairs only, and the denominator is something of a blend of precision and recall: the number of TP plus half the number of FP plus half the number of FN.

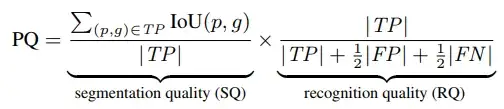
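As a worked example of the formula, the sketch below computes PQ from a list of TP IoU values and the FP and FN counts. The function name `panoptic_quality` and the numbers are made up for illustration; returning 0.0 when there are no segments at all is an assumption of this sketch.

```python
def panoptic_quality(tp_ious, fp, fn):
    """PQ = sum of TP IoUs / (TP + 0.5 * FP + 0.5 * FN)."""
    tp = len(tp_ious)
    denom = tp + 0.5 * fp + 0.5 * fn
    if denom == 0:  # no predictions and no ground truth: assumed 0.0 here
        return 0.0
    return sum(tp_ious) / denom

# Three matched pairs, one false positive, one false negative.
pq = panoptic_quality([0.75, 0.875, 0.625], fp=1, fn=1)
print(pq)  # 2.25 / (3 + 0.5 + 0.5) = 0.5625
```

Even though every matched pair has an IoU well above the threshold, the single FP and FN pull the score down by enlarging the denominator.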

Panoptic quality (PQ) can also be written as the product of segmentation quality (SQ) and recognition quality (RQ).

PQ=SQ × RQ

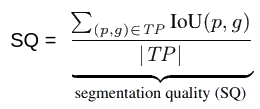
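The factorisation follows from multiplying and dividing the PQ formula by the number of true positives. In the derivation below, |TP|, |FP| and |FN| denote the number of segments of each kind (written simply as TP, FP and FN elsewhere in this article), and it is assumed that there is at least one TP.

```latex
\mathrm{PQ}
= \frac{\sum_{(p,g)\in TP}\mathrm{IoU}(p,g)}{|TP| + \tfrac{1}{2}|FP| + \tfrac{1}{2}|FN|}
= \underbrace{\frac{\sum_{(p,g)\in TP}\mathrm{IoU}(p,g)}{|TP|}}_{\mathrm{SQ}}
  \times
  \underbrace{\frac{|TP|}{|TP| + \tfrac{1}{2}|FP| + \tfrac{1}{2}|FN|}}_{\mathrm{RQ}}
```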

3. Segmentation quality (SQ) :

Segmentation quality (SQ) is the first part of panoptic quality and indicates how well our predicted segments fit their ground-truth segments.

Segmentation quality is the mean IoU over all TP pairs, and only those.

The closer the value is to 1, the better the TP segments match their ground-truth segments, and vice versa. FP and FN segments are not considered at all.

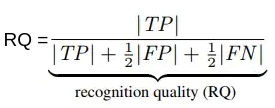
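A minimal sketch of SQ as the mean IoU of the matched pairs follows. The function name `segmentation_quality` is illustrative, and returning 0.0 when there are no matches is an assumption of this sketch.

```python
def segmentation_quality(tp_ious):
    """Mean IoU over the matched (TP) pairs; 0.0 when there are none."""
    return sum(tp_ious) / len(tp_ious) if tp_ious else 0.0

# Same three matched pairs regardless of how many FP or FN segments exist.
print(segmentation_quality([0.75, 0.875, 0.625]))  # 2.25 / 3 = 0.75
```

The function does not take FP or FN as input at all, which mirrors the point that SQ only describes how well the matched segments fit.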

4. Recognition quality (RQ):

Next is recognition quality, the second part of the panoptic quality equation, which measures how good our model is at getting its predictions right.

Recognition quality is the harmonic mean of precision and recall (the F1 score):

RQ = TP / (TP + 0.5 FP + 0.5 FN)
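The equivalence between RQ and the F1 score can be checked numerically. In this sketch, precision is TP / (TP + FP) and recall is TP / (TP + FN); the function names and the counts are chosen for illustration.

```python
def recognition_quality(tp, fp, fn):
    """RQ = TP / (TP + 0.5 * FP + 0.5 * FN)."""
    denom = tp + 0.5 * fp + 0.5 * fn
    return tp / denom if denom else 0.0

def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

tp, fp, fn = 3, 1, 1
print(recognition_quality(tp, fp, fn))  # 3 / 4 = 0.75
print(f1_score(tp, fp, fn))             # precision = recall = 0.75, so F1 = 0.75
```

Both functions agree (up to floating-point rounding) for any counts, because 2PR / (P + R) simplifies to 2TP / (2TP + FP + FN).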

5. Why Panoptic quality (PQ) :

A critical question anyone may ask is why we need all of this: why can't we simply use mAP (which is ideal for object detection and instance segmentation) or IoU (which is ideal for semantic segmentation)?

The answer is as simple as the question. mAP needs a confidence score to rank the predictions, and since panoptic segmentation contains both types of segmentation, the semantic segmentation part unfortunately does not provide the confidence scores that mAP requires. In addition, panoptic segmentation can have more than one instance per class, each with its own ground-truth segment, so IoU on its own becomes an issue.

Lastly, there is a golden rule for "the matching", called segment matching: segments do not overlap, meaning no pixel can belong to two predictions. A prediction counts as a TP only if its IoU with a ground-truth segment is greater than 0.5. Together, these two conditions mean that at most one predicted segment can be matched with each ground-truth segment.

So, in the example above, the blue predicted cat would be the TP, which leaves the top one as a false positive (FP).
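The reason a ground-truth segment can have only one match can be written out as a short argument. It is a sketch that assumes two predicted segments p_1 and p_2 that share no pixel and that both exceed the 0.5 threshold with the same ground-truth segment g, and then shows this is impossible.

```latex
% Assume p_1 \cap p_2 = \emptyset and IoU(p_i, g) > 1/2 for i = 1, 2.
\begin{aligned}
\mathrm{IoU}(p_i, g) > \tfrac{1}{2}
  &\;\Rightarrow\; |p_i \cap g| > \tfrac{1}{2}\,|p_i \cup g| \ge \tfrac{1}{2}\,|g|, \qquad i = 1, 2 \\
  &\;\Rightarrow\; |p_1 \cap g| + |p_2 \cap g| > |g| .
\end{aligned}
% But p_1 \cap g and p_2 \cap g are disjoint subsets of g, so
% |p_1 \cap g| + |p_2 \cap g| \le |g|, which is a contradiction.
```

So once one prediction passes the threshold for a ground-truth segment, every other prediction that touches that segment must fall below it.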

6. Summary :

| Evaluation metric | Equation | Use |
|---|---|---|
| Panoptic quality (PQ) | ∑(p,g)∈TP IoU(p,g) / (TP + 0.5 FP + 0.5 FN) | Evaluating panoptic segmentation tasks |
| Segmentation quality (SQ) | ∑(p,g)∈TP IoU(p,g) / TP | How well the predicted segments fit the ground-truth segments |
| Recognition quality (RQ) | TP / (TP + 0.5 FP + 0.5 FN) | How good the model is at getting its predictions right |

With this article at OpenGenus, you should now have a complete idea of panoptic quality (PQ), segmentation quality (SQ) and recognition quality (RQ).