
Media Source Extensions (MSE) extends HTMLMediaElement to allow JavaScript to generate media streams for playback. Letting JavaScript generate streams enables a variety of use cases, such as adaptive streaming and time-shifting live streams.

Introduction

From the early to the late 2000s, video playback on the web relied on the Flash plugin. At that time there was no native way to stream video in the browser, so users had no choice but to install plugins such as Flash or Silverlight to watch videos.

The Web Hypertext Application Technology Working Group (WHATWG) started working on a new version of HTML, which became HTML5, that would include native video and audio playback without the need to install third-party plugins.

The Video Tag

Embedding a video in an HTML page is easy. We just add a video tag inside the body of the page, with attributes such as src, width and height.

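<!DOCTYPE html>
<html>
<head>
  <meta charset="UTF-8">
  <title>My Video</title>
</head>
<body>
  <!-- width and height are in CSS pixels and are written without a unit -->
  <video id="my-video" src="some_video.mp4" width="1280" height="720" controls></video>
</body>
</html>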

With this markup, the page can play the video referenced by the src attribute in any browser that supports the file's codecs.

The video element exposes methods such as play() and pause(), and seeking is done by setting its currentTime property. All of these can be used directly from JavaScript, which gives developers more control over how the user watches the video.
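As a rough illustration of driving the element from script, the helpers below use only the standard `currentTime`, `duration`, `paused`, `play()` and `pause()` members of a media element. The function names `seekAndPlay` and `togglePlayback` are hypothetical, and `video` is assumed to be the element from the page above (for example `document.getElementById("my-video")`).

```javascript
// Jump to `seconds` and resume playback. There is no seek() method:
// seeking means assigning currentTime.
function seekAndPlay(video, seconds) {
  // duration is NaN until metadata has loaded, so only clamp when it is known.
  const duration = Number.isFinite(video.duration) ? video.duration : Infinity;
  video.currentTime = Math.min(Math.max(0, seconds), duration);
  // play() returns a Promise that rejects if playback is blocked,
  // for example by the browser's autoplay policy.
  return video.play();
}

// Play if paused, pause if playing.
function togglePlayback(video) {
  if (video.paused) {
    return video.play();
  }
  video.pause();
  return Promise.resolve();
}
```

Because both helpers only touch the element's public members, they can be called from any button handler, e.g. `button.onclick = () => togglePlayback(video)`.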

However, video on the web today is much more complex than what this tag and these methods allow on their own: for example, switching between video qualities or changing the audio language during playback.

The Media Source Extensions

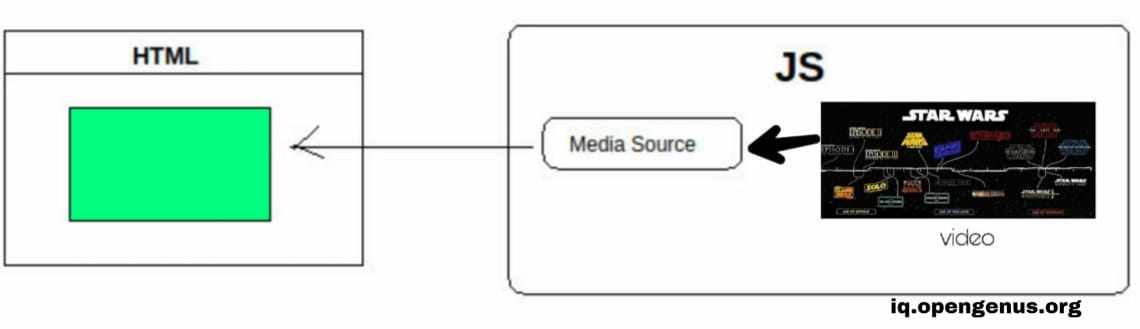

MSE lets us replace the src value fed directly to a media element with a reference to a MediaSource object. A MediaSource is a container for information such as the ready state of the media for playback, and it will be the object representing our video's data.

To obtain such a reference we use the URL.createObjectURL() static method, defined in the W3C File API. It creates a URL that refers to a JavaScript object created on the client.

Example:

// JS code
const videoTag = document.getElementById("my-video");

// creating the MediaSource, just with the "new" keyword, and the URL for it
const myMediaSource = new MediaSource();
const url = URL.createObjectURL(myMediaSource);

// attaching the MediaSource to the video tag
videoTag.src = url;

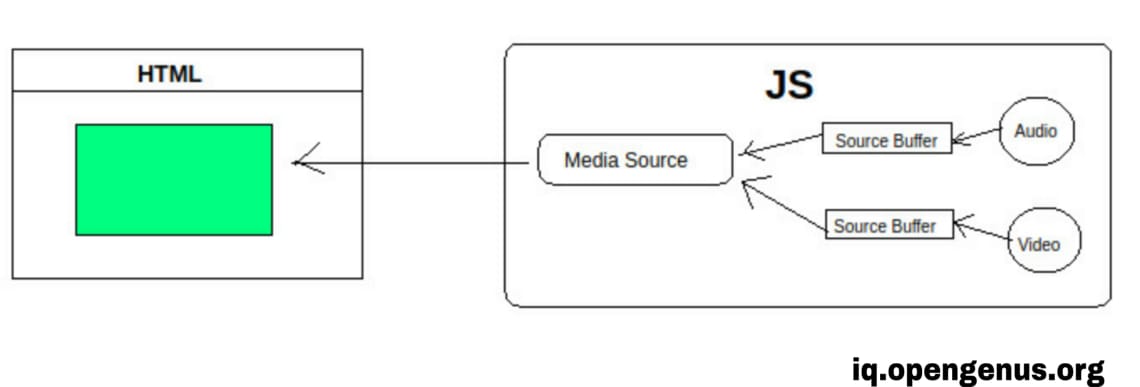

The SourceBuffer interface represents a chunk of media to be passed into an HTMLMediaElement and played, via a MediaSource object. Video data is not inserted into the MediaSource directly. Instead, SourceBuffers (typically one for audio and one for video) are added to the MediaSource, and media data is pushed into those buffers.

Each SourceBuffer is linked to a MediaSource, and through it our video's data reaches the HTML video tag using JavaScript.
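A SourceBuffer can only be added once the MediaSource is "open", which happens after its object URL has been assigned to the video tag and the browser fires `sourceopen`. The sketch below waits for that event and then calls the standard `addSourceBuffer()`. The helper names are hypothetical, and the codec strings in the comments are only examples that must match the actual content.

```javascript
// Resolves once the MediaSource can accept SourceBuffers. After
// `videoTag.src = url`, the browser moves the MediaSource to the "open"
// state and fires "sourceopen".
function whenSourceOpen(mediaSource) {
  if (mediaSource.readyState === "open") {
    return Promise.resolve(mediaSource);
  }
  return new Promise(function (resolve) {
    mediaSource.addEventListener("sourceopen", function () {
      resolve(mediaSource);
    }, { once: true });
  });
}

// Creates a SourceBuffer for one track. `mimeType` must include the codecs;
// addSourceBuffer() throws a NotSupportedError if the browser can't play them.
async function createSourceBuffer(mediaSource, mimeType) {
  await whenSourceOpen(mediaSource);
  return mediaSource.addSourceBuffer(mimeType);
}

// Browser usage, continuing the example above (example codec strings):
// const audioSourceBuffer = await createSourceBuffer(myMediaSource, 'audio/mp4; codecs="mp4a.40.2"');
// const videoSourceBuffer = await createSourceBuffer(myMediaSource, 'video/mp4; codecs="avc1.42E01E"');
```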

Media Segments

While using YouTube or Netflix, you might have noticed that you can jump to different sections of a video and watch that specific section almost instantly, without downloading the whole video.

This is done using media segments. In more advanced video players, the video's data is split into many segments. Each segment represents a small part of the video's content, and segments may differ in size.

For example, a 10-second video can be split into 5 segments of 2 seconds each.

The same can be done for audio.
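With fixed-duration segments, finding the segment for a given playback position is simple arithmetic. The helpers below are a hypothetical sketch that assumes every segment has the same duration except possibly the last one. They work in seconds and apply equally to audio and video.

```javascript
// Hypothetical helpers for fixed-duration segments (durations in seconds).
function segmentCount(totalDuration, segmentDuration) {
  return Math.ceil(totalDuration / segmentDuration);
}

// Index of the segment that contains `time`, clamped to the valid range so
// that seeking to the very end maps to the last segment.
function segmentIndexForTime(time, segmentDuration, totalDuration) {
  const last = segmentCount(totalDuration, segmentDuration) - 1;
  const index = Math.floor(time / segmentDuration);
  return Math.min(Math.max(index, 0), last);
}

// Start and end time covered by a segment; the last one may be shorter.
function segmentTimeRange(index, segmentDuration, totalDuration) {
  const start = index * segmentDuration;
  return { start: start, end: Math.min(start + segmentDuration, totalDuration) };
}
```

In the 10-second example, seeking to 7.3 s means fetching only segment 3, which covers 6 s to 8 s.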

Together, these segments form the complete video content. Segmenting the data has a major advantage: we don't have to push the whole video at once. Instead, we push the segments one by one, sequentially.

The video or audio data does not have to be split into separate files on the server. In some cases, the client uses the HTTP Range header to request each segment as a byte range of a single file.
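When segments live back to back inside one file, the client needs each segment's byte offsets to build a Range header. The sketch below assumes a hypothetical layout where the size of every segment is known in advance; in practice a manifest or the container's own index would provide these sizes. The URL and sizes in the usage comment are placeholders.

```javascript
// Hypothetical layout: every segment stored back to back in a single file,
// with the byte size of each segment known in advance.
function rangeForSegment(segmentSizes, index) {
  if (index < 0 || index >= segmentSizes.length) {
    throw new RangeError("No segment at index " + index);
  }
  let start = 0;
  for (let i = 0; i < index; i++) {
    start += segmentSizes[i];
  }
  // HTTP byte ranges are inclusive on both ends, hence the "- 1".
  const end = start + segmentSizes[index] - 1;
  return "bytes=" + start + "-" + end;
}

// Browser usage (placeholder URL and sizes). A server that supports ranges
// answers with "206 Partial Content" and only the requested bytes:
// fetch("http://server.com/video/all.mp4", {
//   headers: { Range: rangeForSegment([1000, 2500, 800], 1) },
// }).then(function (response) { return response.arrayBuffer(); });
```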

// Directory overview
./audio/
  ├── segment0.mp4
  ├── segment1.mp4
./video/
  └── segment0.mp4

The segments are then loaded one by one in the browser using JavaScript, so we don't have to wait for the whole video or audio file to download. Playback can begin as soon as the first segment has been downloaded, while the following segments are downloaded and played progressively.

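// JS code
// Assumes audioSourceBuffer and videoSourceBuffer were created earlier with
// myMediaSource.addSourceBuffer(...), after the MediaSource fired "sourceopen".

function fetchSegment(url) {
  return fetch(url).then(function (response) {
    if (!response.ok) {
      throw new Error("Could not fetch " + url + ": HTTP " + response.status);
    }
    return response.arrayBuffer();
  });
}

// appendBuffer() is asynchronous: wait for "updateend" before appending the
// next segment, otherwise the SourceBuffer throws an InvalidStateError
function appendSegment(sourceBuffer, data) {
  return new Promise(function (resolve, reject) {
    function onUpdateEnd() {
      sourceBuffer.removeEventListener("error", onError);
      resolve();
    }
    function onError() {
      sourceBuffer.removeEventListener("updateend", onUpdateEnd);
      reject(new Error("appendBuffer failed"));
    }
    sourceBuffer.addEventListener("updateend", onUpdateEnd, { once: true });
    sourceBuffer.addEventListener("error", onError, { once: true });
    sourceBuffer.appendBuffer(data);
  });
}

// fetching and appending segments one after another (notice the URLs)
async function loadSegments(sourceBuffer, urls) {
  for (const url of urls) {
    const segment = await fetchSegment(url);
    await appendSegment(sourceBuffer, segment);
  }
}

loadSegments(audioSourceBuffer, [
  "http://server.com/audio/segment0.mp4",
  "http://server.com/audio/segment1.mp4",
  "http://server.com/audio/segment2.mp4",
  // ...
]).catch(console.error);

// same thing for video segments
loadSegments(videoSourceBuffer, [
  "http://server.com/video/segment0.mp4",
  // ...
]).catch(console.error);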

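This way, playback can start as soon as the first segment is appended, and a player can fetch only the segments it needs (for example, those around a seek position) instead of downloading the whole video.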

Adaptive Streaming

Nowadays most video players have an automatic quality feature, where the quality changes automatically depending on the user's network conditions.

This is called adaptive streaming.

Adaptive streaming is built on media segments.

On the server, each segment is available in several qualities. The player uses the segments of whichever quality suits the user's current network conditions, and switches when those conditions change.

./audio/
├── ./128kbps/
|   ├── segment0.mp4
|   ├── segment1.mp4
|   └── segment2.mp4
└── ./320kbps/
    ├── segment0.mp4
    ├── segment1.mp4
    └── segment2.mp4
./video/
├── ./240p/
|   ├── segment0.mp4
|   ├── segment1.mp4
|   └── segment2.mp4
└── ./720p/
    ├── segment0.mp4
    ├── segment1.mp4
    └── segment2.mp4

A web player then automatically chooses which segments to download as network or CPU conditions change.

This selection logic is implemented in JavaScript, inside the player.
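One simple way to make that choice is sketched below. It measures the throughput of the last download and picks the highest rendition whose bitrate fits within a safety margin. Real players use more elaborate heuristics (for example buffer-based ones). The bitrates, the 0.8 safety factor and all function names here are illustrative assumptions, and the URL layout mirrors the directory tree above.

```javascript
// Hypothetical video renditions matching the directory tree above
// (bitrates in bits per second are illustrative).
const VIDEO_RENDITIONS = [
  { name: "240p", bitrate: 400000 },
  { name: "720p", bitrate: 2500000 },
];

// Throughput of the last segment download, in bits per second.
function measureThroughput(bytes, milliseconds) {
  return (bytes * 8) / (milliseconds / 1000);
}

// Highest rendition whose bitrate fits within `safetyFactor` of the measured
// throughput; falls back to the lowest rendition if none fits.
function chooseRendition(renditions, throughputBps, safetyFactor = 0.8) {
  const sorted = [...renditions].sort((a, b) => a.bitrate - b.bitrate);
  let choice = sorted[0];
  for (const rendition of sorted) {
    if (rendition.bitrate <= throughputBps * safetyFactor) {
      choice = rendition;
    }
  }
  return choice;
}

// URL of segment `index` for the chosen rendition, e.g. ".../video/720p/segment1.mp4".
function segmentUrl(base, rendition, index) {
  return base + "/video/" + rendition.name + "/segment" + index + ".mp4";
}
```

After each segment download, the player can feed the measured throughput into `chooseRendition` and request the next segment from the chosen directory. Because every quality is cut at the same segment boundaries, the switch happens seamlessly between segments.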

Transport Protocols

A Manifest is a file describing which segments are available on the server.

A manifest typically describes:

- In how many languages the audio content is available, and where each version is located on the server.

- Which audio and video qualities are available.

- For live streams, which segments are currently available and how many there are.
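To make the idea concrete, here is a hypothetical manifest written as a plain JavaScript object, with two small helpers that read it. This shape is invented purely for illustration. Real formats (the DASH MPD, HLS playlists, Smooth Streaming manifests) encode the same information very differently.

```javascript
// Hypothetical manifest shape, for illustration only.
const manifest = {
  segmentCount: 3, // for a live stream, this number would grow over time
  audio: [
    { language: "en", bitrate: 128000, path: "/audio/en/128kbps" },
    { language: "en", bitrate: 320000, path: "/audio/en/320kbps" },
    { language: "fr", bitrate: 128000, path: "/audio/fr/128kbps" },
  ],
  video: [
    { quality: "240p", bitrate: 400000, path: "/video/240p" },
    { quality: "720p", bitrate: 2500000, path: "/video/720p" },
  ],
};

// Distinct audio languages listed in the manifest.
function audioLanguages(m) {
  return [...new Set(m.audio.map((track) => track.language))];
}

// Segment URLs for one track, e.g. "/video/720p/segment0.mp4".
function segmentUrls(m, track) {
  const urls = [];
  for (let i = 0; i < m.segmentCount; i++) {
    urls.push(track.path + "/segment" + i + ".mp4");
  }
  return urls;
}
```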

The most common transport protocols are:

DASH

Dynamic Adaptive Streaming over HTTP (DASH) is an adaptive bitrate streaming technique that enables high-quality streaming of media content over the Internet. It is used by YouTube, Netflix and Amazon Prime Video, among many others. The DASH manifest is called the Media Presentation Description (MPD) and is XML-based, and DASH is designed to deliver good quality even at lower bitrates.
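An MPD often describes segment URLs with a `SegmentTemplate` whose `media` attribute contains identifiers such as `$RepresentationID$`, `$Number$`, `$Bandwidth$` and `$Time$`, optionally with a `%0<width>d` padding tag, plus `$$` for a literal dollar sign. The sketch below expands such a pattern. The function name and example values are hypothetical, and it does not parse the XML itself; it assumes the template string and values were already read from the MPD. For the i-th segment, `$Number$` is usually `startNumber + i`.

```javascript
// Expands a DASH SegmentTemplate "media" pattern. Supports $RepresentationID$,
// $Number$, $Bandwidth$, $Time$ (with an optional %0<width>d padding tag)
// and "$$" (an escaped "$").
function expandTemplate(template, values) {
  const pattern = /\$\$|\$(RepresentationID|Number|Bandwidth|Time)(?:%0(\d+)d)?\$/g;
  return template.replace(pattern, function (match, name, width) {
    if (match === "$$") {
      return "$";
    }
    const value = values[name];
    if (value === undefined) {
      throw new Error("No value for $" + name + "$");
    }
    const text = String(value);
    // Zero-pad to the requested width, e.g. $Number%05d$ with 7 -> "00007".
    return width ? text.padStart(Number(width), "0") : text;
  });
}
```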

HLS

HLS is short for HTTP Live Streaming. It is a protocol used to stream live video over the internet. Originally developed by Apple to make the iPhone capable of accessing live streams, it is used by Dailymotion, Twitch.tv and many others. The HLS manifest is called the playlist and uses the M3U8 format.
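A simple HLS media playlist is a text file in which each `#EXTINF:<duration>,` tag is followed by the URI of one segment. `#EXT-X-TARGETDURATION` gives the maximum segment duration, and `#EXT-X-ENDLIST` marks a finished (non-live) stream. The parser below is a minimal sketch that handles only these tags. It ignores master playlists, encryption keys, byte ranges and everything else the format allows, and the name `parseMediaPlaylist` is hypothetical.

```javascript
// Minimal parser for a simple HLS media playlist (M3U8).
function parseMediaPlaylist(text) {
  const lines = text.split(/\r?\n/).map((l) => l.trim()).filter((l) => l !== "");
  if (lines[0] !== "#EXTM3U") {
    throw new Error("Not an M3U8 playlist");
  }
  const segments = [];
  let targetDuration = null;
  let pendingDuration = null;
  let ended = false;
  for (const line of lines.slice(1)) {
    if (line.startsWith("#EXT-X-TARGETDURATION:")) {
      targetDuration = Number(line.slice("#EXT-X-TARGETDURATION:".length));
    } else if (line.startsWith("#EXTINF:")) {
      // "#EXTINF:<duration>,<optional title>"; parseFloat stops at the comma.
      pendingDuration = parseFloat(line.slice("#EXTINF:".length));
    } else if (line === "#EXT-X-ENDLIST") {
      ended = true;
    } else if (!line.startsWith("#")) {
      // A URI line: the segment described by the preceding #EXTINF tag.
      segments.push({ uri: line, duration: pendingDuration });
      pendingDuration = null;
    }
  }
  return { targetDuration, segments, ended };
}
```

A playlist without `#EXT-X-ENDLIST` (`ended === false`) describes a live stream, so a player re-fetches it periodically to discover newly available segments.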

Smooth Streaming

Developed by Microsoft, Smooth Streaming is used by several Microsoft products and by MyCanal. Its manifests are simply called Manifests and are XML-based.

Hope this helps you understand Media Source Extensions.