
In this article, we have explained Time & Space Complexity of Heap Sort with detailed analysis of different cases like Worst case, Best case and Average Case.

Table of contents:

- Overview of Heap Sort

- Time complexity of Heap Data Structure

- Worst Case Time Complexity of Heap Sort

- Best Case Time Complexity of Heap Sort

- Average Case Time Complexity of Heap Sort

- Space Complexity of Heap Sort

- Conclusion

Prerequisite: Heap Sort, Heap Data Structure

Let us get started with Time & Space Complexity of Heap Sort.

Overview of Heap Sort

The Heapsort algorithm consists of two main parts: converting the list into a heap, and then repeatedly moving the maximum element of the heap to the end of the list while maintaining the heap structure. For ease of implementation, we use a max-heap, in which the maximum value is always at the root. After converting the list into a heap, we take the maximum element and place it at the end of the list, which shrinks the heap by one. We repeat this until the heap is empty, at which point all items in the list are in sorted order. To summarise, implementing heap sort involves two main steps:

- Building max-heap

- Taking the maximum value from the heap (the root node value), adding it to the end of the list, and updating the max-heap. Repeat until the max-heap contains zero items.
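The two steps above can be sketched with Python's standard `heapq` module. This is only an illustration of the two phases: `heapq` implements a min-heap (so items come out smallest first) and the sketch uses an extra result list, unlike the in-place max-heap version discussed in this article. The function name `heapq_sort` is a hypothetical name chosen for this example.

```python
import heapq

def heapq_sort(items):
    """Return the items in ascending order using the two phases of heap sort.

    Assumption: this uses heapq's min-heap and an extra list, so it shows
    the two phases only, not the in-place max-heap algorithm.
    """
    heap = list(items)          # copy so the input is left untouched
    heapq.heapify(heap)         # phase 1: build the heap in O(n)
    result = []
    while heap:                 # phase 2: repeat until the heap is empty
        result.append(heapq.heappop(heap))  # remove the root, O(log n) each
    return result

print(heapq_sort([5, 2, 9, 1, 7]))  # [1, 2, 5, 7, 9]
```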

A Python implementation of this algorithm is as follows:

    lst = [12, 3, 19, 7, 5]  # sample input; any list of comparable items works

    # heap sort the list in place
    def heapsort(lst):
        heap_size = len(lst)
        create_heap(lst)
        # walk from the last index down to 1; lst[i:] is the sorted part
        for i in range(heap_size - 1, 0, -1):
            lst[0], lst[i] = lst[i], lst[0]   # move the current max to its final slot
            heap_size -= 1
            max_heapify(lst, heap_size, 0)    # restore the heap on the remaining items

    # restore the max-heap property for the subtree rooted at index i
    def max_heapify(lst, heap_size, i):
        left = 2 * i + 1     # index of the left child of i
        right = 2 * i + 2    # index of the right child of i
        largest = i          # index of the largest of i and its children
        if left < heap_size and lst[left] > lst[largest]:
            largest = left
        if right < heap_size and lst[right] > lst[largest]:
            largest = right
        if largest != i:
            lst[i], lst[largest] = lst[largest], lst[i]
            max_heapify(lst, heap_size, largest)

    # create a max-heap by calling max_heapify on every parent node
    def create_heap(lst):
        heap_size = len(lst)
        # start at the last parent and move up to the root
        for i in range(heap_size // 2 - 1, -1, -1):
            max_heapify(lst, heap_size, i)

    # print the sorted list at the end
    heapsort(lst)
    print(lst)

This gives a fundamental idea behind the Heapsort algorithm.

Time complexity of Heap Data Structure

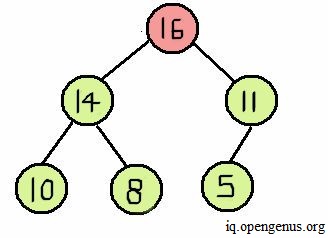

The first part of the algorithm relies on max_heapify and create_heap. To analyse create_heap, we first need to understand how the max-heap structure, shown above, works.

Because a heap is a complete binary tree, the bottom level contains the most nodes, and the number of nodes roughly halves with each level we go up. If there are 'n' nodes in total, the approximate number of nodes per level, starting from the bottom-most level, is:

- n/2

- n/4 (at the next level)

- n/8

- and so on
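As a small check of the level sizes listed above, the sketch below counts the nodes on each level of a complete binary tree. The function name `nodes_per_level` is a hypothetical helper for this example. The n/2, n/4, n/8 pattern is approximate: it is closest when every level is full (n = 2^k - 1), and the bottom level can hold fewer nodes otherwise.

```python
def nodes_per_level(n):
    """Return node counts per level, bottom level first, for a complete binary tree of n nodes."""
    counts = []
    level_capacity = 1   # the level at depth d can hold 2**d nodes
    remaining = n
    while remaining > 0:
        used = min(level_capacity, remaining)  # the last level may be partly filled
        counts.append(used)
        remaining -= used
        level_capacity *= 2
    return counts[::-1]  # reverse so the bottom-most level comes first

print(nodes_per_level(15))  # [8, 4, 2, 1]
print(nodes_per_level(10))  # [3, 4, 2, 1]
```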

Complexity of inserting a new node

Therefore, when we insert a new value into the heap, the maximum number of steps required is O(log(n)). Because the heap is a complete binary tree, its height is always O(log(n)). When we insert a new value, we place it at the bottom and swap it with its parent for as long as the parent is smaller, to maintain the max-heap property. The number of such swaps is at most the height of the heap, so inserting a new value takes O(log(n)) time.
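To make the O(log(n)) bound on insertion concrete, here is a minimal sketch that inserts into a list-based max-heap and counts the swaps. The name `heap_insert` is hypothetical, and the increasing input is chosen deliberately so that every new value has to climb to the root.

```python
import math

def heap_insert(heap, value):
    """Append value to a max-heap list and sift it up; return the number of swaps."""
    heap.append(value)
    i = len(heap) - 1
    swaps = 0
    while i > 0:
        parent = (i - 1) // 2
        if heap[parent] >= heap[i]:
            break                      # parent is large enough, heap property holds
        heap[parent], heap[i] = heap[i], heap[parent]
        i = parent
        swaps += 1
    return swaps

heap = []
worst = 0
for value in range(1, 1001):   # each new value is the largest so far
    worst = max(worst, heap_insert(heap, value))

# Largest swap count seen, next to floor(log2(n)) for n = 1000
print(worst, math.floor(math.log2(len(heap))))  # 9 9
```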

Complexity of removing the max valued node from heap

Likewise, when we remove the max valued node from the heap to add it to the end of the list, the maximum number of steps required is also O(log(n)). We swap the root with the last node of the heap, and the value that arrives at the root may then have to move down all the way to the bottom-most level. The maximum number of steps is therefore the same as when inserting a new node, which is O(log(n)).

Therefore, the worst-case time complexity of a single call to the max_heapify function turns out to be O(log(n)).
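The removal step can be sketched as follows. The function name `extract_max` is hypothetical, and the example heap is a small hand-built max-heap used only for illustration. The number of swaps can never exceed the height of the heap.

```python
def extract_max(heap):
    """Remove the largest item from a non-empty max-heap list.

    Returns (largest_value, swaps), where swaps counts the downward moves.
    """
    last = len(heap) - 1
    heap[0], heap[last] = heap[last], heap[0]   # move the max to the end
    largest_value = heap.pop()                  # take it out of the heap
    i, swaps, size = 0, 0, len(heap)
    while True:
        left, right, largest = 2 * i + 1, 2 * i + 2, i
        if left < size and heap[left] > heap[largest]:
            largest = left
        if right < size and heap[right] > heap[largest]:
            largest = right
        if largest == i:
            break                               # heap property restored
        heap[i], heap[largest] = heap[largest], heap[i]
        i = largest
        swaps += 1
    return largest_value, swaps

heap = [9, 7, 8, 1, 5, 2, 3]   # already a valid max-heap
value, swaps = extract_max(heap)
print(value, swaps, heap)  # 9 1 [8, 7, 3, 1, 5, 2]
```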

Complexity of creating a heap

The time complexity of converting a list into a heap using the create_heap function is not O(log(n)) per node. This is because, when we create a heap, not all nodes move down O(log(n)) times; only the root node can. The nodes at the bottom-most level (about n/2 of them) don't move down at all. The nodes at the second-last level (n/4) move down at most 1 time, as there is only one level below them. The nodes at the third-last level move down at most 2 times, and so on. If we multiply the number of nodes at each level by the maximum number of moves for that level and add the results, we get a series whose terms shrink geometrically:

(n/2 * 0) + (n/4 * 1) + (n/8 * 2) + (n/16 * 3) + ... + (1 * h)

Here h represents the height of the max-heap structure, and the last term corresponds to the root.

The sum of this series is less than n. Therefore, the time complexity of create_heap turns out to be O(n).
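The linear bound can also be checked empirically. The sketch below builds a max-heap bottom-up and counts how many times a node moves down; `build_heap_moves` is a hypothetical helper written for this check, and the ascending input is just one convenient input that is far from being a max-heap.

```python
def build_heap_moves(lst):
    """Build a max-heap in place and return the number of downward moves (swaps)."""
    n = len(lst)
    moves = 0
    for start in range(n // 2 - 1, -1, -1):   # from the last parent up to the root
        i = start
        while True:
            left, right, largest = 2 * i + 1, 2 * i + 2, i
            if left < n and lst[left] > lst[largest]:
                largest = left
            if right < n and lst[right] > lst[largest]:
                largest = right
            if largest == i:
                break
            lst[i], lst[largest] = lst[largest], lst[i]
            i = largest
            moves += 1
    return moves

for n in (10, 1000, 100000):
    data = list(range(n))          # ascending input, far from a max-heap
    print(n, build_heap_moves(data))   # the move count stays below n
```

The count grows in proportion to n rather than n log(n), which matches the O(n) result.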

Total time complexity

In the final heapsort function, we call create_heap once to build the heap, which takes O(n) time. Then, in a for-loop, we remove the maximum node repeatedly and call max_heapify after each removal to restore the max-heap property. Each call takes O(log(n)) time and there are 'n' nodes, so the total runtime of the algorithm turns out to be O(n log(n)).

Mathematically, we see that-

- The first remove of a node takes log(n) time

- The second remove takes log(n-1) time

- The third remove takes log(n-2) time

- and so on till the last node, which will take log(1) time

So summing up all the terms, we get-

log(n) + log(n-1) + log(n-2) + ... + log(1)

as log(x) + log(y) = log(x * y), we get

=log(n∗(n−1)∗(n−2)∗…∗2∗1)

=log(n!)

Upon further simplification (using Stirling's approximation), log(n!) turns out to be

=n∗log(n)−n+O(log(n))

Taking into account the highest ordered term, the total runtime turns out to be O(n log(n)).
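As a numerical sanity check of this step, the sketch below compares the exact value of log(n!) (using natural logarithms) with the leading terms n*log(n) - n. The function names are hypothetical helpers for this comparison.

```python
import math

def log_factorial(n):
    """Return ln(n!) computed as ln(1) + ln(2) + ... + ln(n)."""
    return sum(math.log(k) for k in range(1, n + 1))

def stirling_leading_terms(n):
    """Return n*ln(n) - n, the leading terms of Stirling's approximation."""
    return n * math.log(n) - n

for n in (10, 1000, 100000):
    exact = log_factorial(n)
    approx = stirling_leading_terms(n)
    # columns: n, exact ln(n!), leading terms, and the small remaining gap
    print(n, round(exact, 2), round(approx, 2), round(exact - approx, 2))
```

The gap between the two columns grows only logarithmically, which is why the n*log(n) term dominates.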

Worst Case Time Complexity of Heap Sort

The worst case for heap sort can occur when all elements in the list are distinct. In that case, every call to max_heapify after a removal may have to move the new root all the way down the heap. Considering there are 'n' nodes:

- The number of swaps needed to remove each element can be up to log(n), as that is the maximum height of the heap

- Since we do this for every node, the total number of moves is at most n * log(n).

Therefore, the runtime in the worst case will be O(n log(n)).

Best Case Time Complexity of Heap Sort

The best case for heapsort happens when all elements in the list to be sorted are identical. In such a case, for 'n' nodes:

- Removing each node from the heap takes only constant time, O(1). There is no need to bring any node down or bring the max valued node up, as all items are identical.

- Since we do this for every node, the total number of moves is n * O(1).

Building the heap also takes O(n) time, so the runtime in the best case is O(n). Note that this bound depends on the elements being equal; when all elements are distinct, heapsort takes on the order of n log(n) time even in its best case.
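The contrast between identical and distinct elements can be seen by counting comparisons. The sketch below is an instrumented heap sort written for this illustration; `heapsort_count` and its inner helper `sift_down` are hypothetical names, and the input size of 1024 is arbitrary.

```python
import math

def heapsort_count(lst):
    """Heap sort lst in place and return the number of element comparisons."""
    comparisons = 0

    def sift_down(i, size):
        nonlocal comparisons
        while True:
            left, right, largest = 2 * i + 1, 2 * i + 2, i
            if left < size:
                comparisons += 1
                if lst[left] > lst[largest]:
                    largest = left
            if right < size:
                comparisons += 1
                if lst[right] > lst[largest]:
                    largest = right
            if largest == i:
                return
            lst[i], lst[largest] = lst[largest], lst[i]
            i = largest

    n = len(lst)
    for i in range(n // 2 - 1, -1, -1):   # build the max-heap
        sift_down(i, n)
    for end in range(n - 1, 0, -1):       # move the max to the end, shrink the heap
        lst[0], lst[end] = lst[end], lst[0]
        sift_down(0, end)
    return comparisons

n = 1024
identical = heapsort_count([7] * n)         # all elements the same
distinct = heapsort_count(list(range(n)))   # all elements different
# columns: comparisons for identical input, for distinct input, and n*log2(n)
print(identical, distinct, round(n * math.log2(n)))
```

With identical elements the count stays within a small constant multiple of n, while with distinct elements it scales with n log(n).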

Average Case Time Complexity of Heap Sort

In terms of total complexity, we already know that we can create a heap in O(n) time and insert or remove a node in O(log(n)) time. For the average case, we need to take into account all possible inputs, with distinct elements or otherwise. As a simplified model, if the total number of nodes is 'n', assume that the max-heapify function moves a node down about half the height of the current heap on average. It would then need to perform roughly:

- log(n)/2 comparisons in the first iteration

- log(n-1)/2 in the second iteration

- log(n-2)/2 in the third iteration

- and so on

So mathematically, the total sum would turn out to be-

(log(n))/2 + (log(n-1))/2 + (log(n-2))/2 + (log(n-3))/2 + ...

Upon approximation, the final result would be

=1/2(log(n!))

=1/2(n∗log(n)−n+O(log(n)))

Considering the highest ordered term, the total cost of all the max-heapify calls in the average case is O(n log(n)).

This sum already covers every iteration of the loop in the final heapsort function, so it is not multiplied by n again. Adding the O(n) cost of create_heap, we get a final average runtime of O(n log(n)).
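An empirical look at the average case is sketched below: it heap sorts several random permutations and reports the average number of comparisons as a multiple of n*log2(n). The helper name `count_comparisons`, the fixed seed, and the sizes are assumptions made for this example, and the exact ratio depends on how comparisons are counted.

```python
import math
import random

def count_comparisons(lst):
    """Heap sort a copy of lst and return the number of element comparisons made."""
    a, count = list(lst), 0

    def sift_down(i, size):
        nonlocal count
        while True:
            largest = i
            for child in (2 * i + 1, 2 * i + 2):
                if child < size:
                    count += 1
                    if a[child] > a[largest]:
                        largest = child
            if largest == i:
                return
            a[i], a[largest] = a[largest], a[i]
            i = largest

    for i in range(len(a) // 2 - 1, -1, -1):   # build the max-heap
        sift_down(i, len(a))
    for end in range(len(a) - 1, 0, -1):       # repeatedly remove the max
        a[0], a[end] = a[end], a[0]
        sift_down(0, end)
    return count

rng = random.Random(0)            # fixed seed so the run is repeatable
n, trials = 512, 20
total = 0
for _ in range(trials):
    data = list(range(n))
    rng.shuffle(data)
    total += count_comparisons(data)
average = total / trials
print(average / (n * math.log2(n)))   # ratio of average comparisons to n*log2(n)
```

The ratio stays bounded by a small constant as n grows, which is what an O(n log(n)) average runtime predicts.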

Space Complexity of Heap Sort

Since heapsort is an in-place sorting algorithm, the extra space requirement is constant and therefore O(1). This is because, for any input:

- We arrange all the list items in place using a heap structure

- We put the removed item at the end of the same list after removing the max node from the max-heap.

Therefore, we don't use any extra list when implementing this algorithm. One caveat is that a recursive max_heapify, like the one shown earlier, uses up to O(log(n)) call-stack space; writing it as a loop gives the algorithm a space complexity of O(1).
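A minimal sketch of the loop-based variant is given below. It replaces the recursive call with a `while` loop so that only a few index variables are needed besides the list itself. The names `sift_down` and `heapsort_in_place` are hypothetical and chosen for this example.

```python
def sift_down(lst, heap_size, i):
    """Iterative version of max_heapify: no recursion, so no call-stack growth."""
    while True:
        left, right, largest = 2 * i + 1, 2 * i + 2, i
        if left < heap_size and lst[left] > lst[largest]:
            largest = left
        if right < heap_size and lst[right] > lst[largest]:
            largest = right
        if largest == i:
            return
        lst[i], lst[largest] = lst[largest], lst[i]
        i = largest   # continue from the child instead of recursing

def heapsort_in_place(lst):
    """Sort lst in ascending order using only a constant number of extra variables."""
    n = len(lst)
    for i in range(n // 2 - 1, -1, -1):   # build the max-heap
        sift_down(lst, n, i)
    for end in range(n - 1, 0, -1):       # lst[end:] holds the sorted part
        lst[0], lst[end] = lst[end], lst[0]
        sift_down(lst, end, 0)

data = [5, 2, 9, 1, 7, 2]
heapsort_in_place(data)
print(data)  # [1, 2, 2, 5, 7, 9]
```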

Conclusion

As a summary, heapsort has:

- Worst case time complexity of O(n log(n)) [all elements in the list are distinct]

- Best case time complexity of O(n) [all elements are the same]

- Average case time complexity of O(n log(n))

- Space complexity of O(1)

With this article at OpenGenus, you must have the complete idea of Time & Space Complexity of Heap Sort.