Open-Source Internship opportunity by OpenGenus for programmers. Apply now.

Introduction

In most, if not all spoken and written languages, each word is grouped into a certain category according to it's syntactic functions. These categories are known as "Parts of Speech" (POS), each category containing words of similar nature.

In English, there are eight main parts of speech, in no particular order:

- Nouns are names for persons, places, objects, or concepts (e.g. cat, mouse, house).

- Verbs describe an action or a state of being (e.g. run, jump, walk, laugh).

- Adjectives are words that describe or rather, provide a quality to a noun or pronoun (e.g. huge, red, beautiful).

- Adverbs describe other parts of speech including verbs, adjectives, or other adverbs. (e.g. quickly, slowly, happily, reluctantly).

- Pronouns replace or refer to a noun (e.g. he, she, they, it).

- Prepositions show the relationship between nouns or pronouns and other words (e.g. on, in, under, with, over).

- Conjunctions act as connectors of words and/or phrases (e.g. and, or, but, because).

- Interjections express emotions or feelings and are generally independent of the words around them (e.g. wow, ouch, bingo, ew).

In addition to these eight parts of speech, there are several other parts of speech that are less commonly used, including articles, determiners, quantifiers etc.

After this refresher on Parts Of Speech, we can move on to the next section, that is understanding POS tagging.

Understanding POS Tagging

POS tagging is a text preprocessing task within the ambit of Natural Language Processing (NLP) whose goal is to analyze the syntactic structure of a given sentence and to understand the input text in a better manner. It is mainly used to match each word of a sentence to it's corresponding part of speech. On passing a phrase to a POS tagging algorithm, a list of tuples shall be returned, each tuple containing a word of the sentence and it's corresponding part of speech. For example, passing the phrase "He runs fast" to a POS tagging algorithm shall return [(He, pronoun),(runs, verb),(fast,adverb)] or something to a similar effect.

Now that we have a basic idea about POS tagging, let's have a look at its use cases.

Text classification: POS tags may be employed as features in text classification models. By analyzing the POS tags of the words in a text, algorithms can better understand the content and tone of the text, making it useful in tasks such as spam classification and sentiment analysis.

Named entity recognition (NER): To identify and classify named entities like individuals,institutions, and locations, POS tags can be employed as a pre-processing step. This is useful for tasks such as extracting data from news articles or building knowledge bases for AI systems.

Machine translation: POS tagging can be used to translate texts from one language to another by recognising the grammatical relationships and word associations in the source language and mapping them to the target language.

Speech recognition: POS tags can be used to increase the precision of speech recognition systems by differentiating between words that sound similar but belong to different parts of speech categories. Eg. steel and steal.

Now that we're familiar with the use cases of POS tagginh, let's have a look at the various methods to implement POS tagging in python.

Implementing POS tagging in Python

Manual approach

First of all, let's try a manual approach towards POS tagging in python. For this, we shall be using the wordnet module imported from nltk. To install wordnet, run these 2 lines of code in your notebook.

import nltk

nltk.download('wordnet')

Alternatively, you can also type !pip install wordnet in your notebook and wait for the module to install.

The manual algorithm will have the following steps-

1.Define lists for all parts of speeches

2.Define a dictionary of POS tag mappings.

3.Define the sentence to be tagged.

4.Split the sentence into a list of words

5.Iterate over the tokens and assign a tag to each word.

Let's go through the manual method step-by-step.

Define lists for all parts of speeches

from nltk.corpus import wordnet as wn

noun_synsets = list(wn.all_synsets('n'))

noun_lemmas = [lemma for synset in noun_synsets for lemma in synset.lemmas()]

nouns = [lemma.name() for lemma in noun_lemmas]

#list of pronouns from https://www.thoughtco.com/

pronouns = """all

another

any

anybody

anyone

anything

as

aught

both

each

each other

either

enough

everybody

everyone

everything

few

he

her

hers

herself

him

himself

his

I

idem

it

its

itself

many

me

mine

most

my

myself

naught

neither

no one

nobody

none

nothing

nought

one

one another

other

others

ought

our

ours

ourself

ourselves

several

she

some

somebody

someone

something

somewhat

such

suchlike

that

thee

their

theirs

theirself

theirselves

them

themself

themselves

there

these

they

thine

this

those

thou

thy

thyself

us

we

what

whatever

whatnot

whatsoever

whence

where

whereby

wherefrom

wherein

whereinto

whereof

whereon

wherever

wheresoever

whereto

whereunto

wherewith

wherewithal

whether

which

whichever

whichsoever

who

whoever

whom

whomever

whomso

whomsoever

whose

whosever

whosesoever

whoso

whosoever

ye

yon

yonder

you

your

yours

yourself

yourselves"""

pronouns=pronouns.replace('\n',' ').split()

articles=["a","an","the"]

adjective_synsets = list(wn.all_synsets('a'))

adjective_lemmas = [lemma for synset in adjective_synsets for lemma in synset.lemmas()]

adjectives = [lemma.name().lower() for lemma in adjective_lemmas]

verb_synsets = list(wn.all_synsets('v'))

verb_lemmas = [lemma for synset in verb_synsets for lemma in synset.lemmas()]

verbs = [lemma.name().lower() for lemma in verb_lemmas]

adverb_synsets = list(wn.all_synsets('r'))

adverb_lemmas = [lemma for synset in adverb_synsets for lemma in synset.lemmas()]

adverbs = [lemma.name().lower() for lemma in adverb_lemmas]

"""list of all possible conjunctions from https://englishgrammarhere.com/"""

conjunctions = """A minute later

Accordingly

Actually

After

After a short time

Afterwards

Also

And

Another

As an example

As a consequence

As a result

As soon as

At last

At lenght

Because

Because of this

Before

Besides

Briefly

But

Consequently

Conversely

Equally important

Finally

First

For example

For instance

For this purpose

For this reason

Fourth

From here on

Further

Furthermore

Gradually

Hence

However

In addition

In conclusion

In contrast

In fact

In short

In spite of

In spite of this

In summary

In the end

In the meanwhile

In the meantime

In the same manner

In the same way

Just as important

Least

Last

Last of all

Lastly

Later

Meanwhile

Moreover

Nevertheless

Next

Nonetheless

Now

Nor

Of equal importance

On the contrary

On the following day

On the other hand

Other hand

Or

Presently

Second

Similarly

Since

So

Soon

Still

Subsequently

Such as

The next week

Then

Thereafter

Therefore

Third

Thus

To be specific

To begin with

To illustrate

To repeat

To sum up

Too

Ultimately

What

Whatever

Whoever

Whereas

Whomever

When

While

With this in mind

Yet""".replace('\n',' ').split()

prepositions = wn.synset('preposition.n.01')

prepositions_lemmas = prepositions.lemmas()

prepositions = [lemma.name().lower() for lemma in prepositions_lemmas]

interjections = wn.synset('interjection.n.01')

interjections_lemmas = interjections.lemmas()

interjections = [lemma.name().lower() for lemma in interjections_lemmas]

As can be seen, all possible words in all major parts of speech except conjunctions and pronouns were extracted from the wordnet module. Lists for conjunction and pronouns were created manually using web based resources as listed in the code.

Define a dictionary of POS tag mappings and the sentence to be tagged.

tag_map = {

'noun': nouns,

'pronoun':pronouns,

'adverb':adverbs,

'adjective':adjectives,

'verb': verbs,

'article': articles,

'preposition':prepositions,

'conjunction': conjunctions,

'interjection':interjections

}

sentence = "They sit on the bench"

This dictionary maps a label to each list. Do note that these labels would be the final output for each word in the sentence. The sentence to be tagged is also defined.

Create a function to split the sentence into a list of words and assign a tag to each word.

def pos_tag(sentence):

tokens = sentence.split()

tagged_words = []

for token in tokens:

for tag, words in tag_map.items():

if token.lower() in words:

tagged_words.append((token, tag))

break

else:

# If the word is not found, assign the default tag (noun)

tagged_words.append((token, 'noun'))

return tagged_words

This function splits the given sentence into a list of word and maps each word to a part of speech. if no suitable match is found, the word is assigned 'noun' as a default part of speech.

tagged_words = pos_tag(sentence)

print(tagged_words)

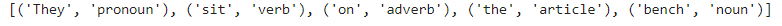

On executing this function on the sentence variable, this is the observed result.

Athough this code is able to tag the words properly, there may be edge cases which may cause improper results. Other than that, this is quite a lengthy method for POS tagging, so let's have a look at a few low code alternatives.

Using the nltk library

One simple option is to use the word_tokenize() and pos_tag() methods in the nltk library, as shown below.

import nltk

sentence = "The quick brown fox jumps over the lazy dog."

tokens = nltk.word_tokenize(sentence)

pos_tags = nltk.pos_tag(tokens)

print(pos_tags)

Output-

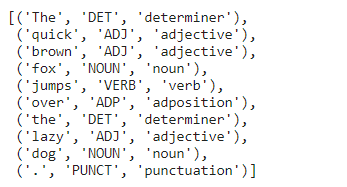

Here, the abbreviations stand for various parts of speech. Eg. DT stands for determiner, NN for noun, VBZ is a type of a verb, IN stands for preposition and so on. Follow this link to know about abreviations in the pos_tag() method.

Using Spacy for POS tagging.

Spacy is another popular Python library for NLP that provides various tools for text processing and analysis, including POS tagging. However, this approach is a bit different than the previous one as unlike nltk, we first need to load a pre-trained language model that includes a POS tagger.

The most commonly used model is en_core_web_sm, which is a small English model, including a POS tagger, a dependency parser, and a named entity recognizer.

Here's an example of using Spacy to perform POS tagging:

import spacy

nlp = spacy.load('en_core_web_sm')

doc = nlp(sentence)

pos_tags = []

for token in doc:

pos_tags.append((token.text,token.pos_, spacy.explain(token.pos_)))

pos_tags

Output:

Using spacy provides an added advantage of being able to understand the abbreviations by using certain inbuilt methods and not having to refer to documentation for the same as was the case with nltk.

By now we have had a look at three possible ways of POS tagging using Python. Now let's compare and summarise these methods.

Comparison of methods

| Method Name | Manual Tagging | Tagging using NLTK | Tagging using Spacy |

|---|---|---|---|

| Lines of direct code involved | It is a code intensive process as everything has to be defined from scratch. | Least code intensive of all as it requires only 5 lines from the user. | It is a comparatively low code process. |

| POS Labels | POS labels in the manual algorithm are limited to those parts of speech which the coder can possibly incorporate. Even in each POS list, the number of words may be constrained. | Since this is a part of the NLTK library, number of POS labels is more as compared to the manual method, although deciphering the abbreviations which refer to those lists can be tricky. | Number of POS labels is similar to the NLTK library. However, deciphering the abbreviations pertaining to each label is easier as compared to nltk with the use of inbuilt functions. |

| Time Complexity | Time complexity of the pos_tag('sentence') function defined in the manual algorithm is observed to be O(m*n) where n = number of words in the input sentence, and m = average length of the lists of words associated with each tag in the tag_map dictionary. | Time complexity of this process is O(n) where n = number of words in the input sentence. | Overall Time Complexity of this process is O(m + n) where m = Time taken to load the model and n= number of words in input sentence |

| Space Complexity | Since the required memory to store the tokenized words and the list of tagged words is directly proportional to the number of words in the input sentence, the space complexity of this code snippet can be considered O(n). | Space complexity of this method is also O(n) in the method using nltk, owing to this using logic similar to the manual method. | Space complexity of this method is also O(n) in the method using spacy, owing to this using logic similar to the manual method and the method usng nltk. Space taken by the pre trained model is not accounted for as it is a constant. |

Before wrapping up, let's have a look at a few questions.